PA5 remote testing is similar to PA4's. The only difference is that the reference won't try to detect every possible semantic error in a testcase. Instead, it will detect the first semantic error it finds and then exit. Therefore, remote testing will say "pass" as long as the reference finds a semantic error and your solution finds one, regardless of the error type. HOWEVER, IN REAL GRADING, ONLY VALID SEMANTIC ERROR REPORTING WILL BE GIVEN CREDIT. That means that if a testcase contains multiple errors, you will get credit only if you report at least one of them. If the one you report doesn't belong to the valid error set, you won't get credit. In other words, real grading is stricter than remote testing. It's your responsibility to make sure the error you report actually exists in the testcase.
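The gap between the two checks can be sketched roughly as follows. This is an illustration only: the class and method names are hypothetical, error types are modeled as plain strings, and only testcases that actually contain a semantic error are covered.

```java
import java.util.Optional;
import java.util.Set;

// Hypothetical sketch of the two PA5 pass rules; this is not the real tester or grader.
final class Pa5PassRules {

    // Remote testing: the reference stops at its first error, so the tester can
    // only check that both sides reported *some* semantic error.
    static boolean remoteTestPasses(Optional<String> referenceError, Optional<String> yourError) {
        return referenceError.isPresent() && yourError.isPresent();
    }

    // Real grading: the error you report must belong to the set of errors
    // that really exist in the testcase.
    static boolean realGradingGivesCredit(Set<String> validErrors, Optional<String> yourError) {
        return yourError.isPresent() && validErrors.contains(yourError.get());
    }
}
```

With this sketch, a solution that reports an error type the testcase does not contain still passes `remoteTestPasses` but gets no credit from `realGradingGivesCredit`.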

Remote testing will run every 4 hours from 11/19 to 12/12 11:59PM. We strongly encourage you to work on PA5 early for the following reasons:

The collection script will run from 12/7 to 12/12 11:59PM. You have to commit your DONE file before the last collection runs.

Remote Testing for PA4 (10/31/2004)

In PA4, you will be building a decaf compiler that compiles .decaf files to .s files. As in PA1, your testcases will be the .decaf files, and they have no input files.

The remote tester will take your .decaf files and compile them with both your compiler and ours. It then runs gcc on the two sets of .s files, which produces two sets of executable binaries. Finally, it runs the binaries and compares their output.

If a .decaf file contains semantic errors, we will compare the error output of your compiler with that of the reference compiler. Since you are not required to output all errors found, we will consider your compiler to pass if it produces a subset of our messages. However, if your compiler doesn't find any error in a testcase where the reference finds some, the testcase is considered failed.
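The subset rule above can be sketched roughly like this. The class name is hypothetical, and the sketch assumes that messages are compared as exact strings, which may differ from how the real tester matches them.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch of the PA4 error-comparison rule; this is not the real tester.
final class Pa4ErrorCheck {

    // Applies only to testcases for which the reference reports at least one error.
    static boolean passes(List<String> referenceMessages, List<String> yourMessages) {
        if (referenceMessages.isEmpty()) {
            throw new IllegalArgumentException("rule applies only when the reference finds errors");
        }
        if (yourMessages.isEmpty()) {
            return false; // the reference found errors but your compiler found none
        }
        // Assumption: messages match only when the strings are identical.
        Set<String> reference = new HashSet<>(referenceMessages);
        return reference.containsAll(yourMessages);
    }
}
```

Under these assumptions, reporting one of two reference messages passes, while reporting nothing, or reporting a message the reference never produced, fails.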

It's easy to write testcases that have infinite loops in decaf. We guard against this by setting a 20 second timeout for each executable. We reserve the right to reduce this timeout as the remote tester gets more load. We generally discourage loops with unnecessary iterations because they usually don't expose more bugs and only delay other people's remote testing results. Please note that the more testcases you have, the longer it takes to finish one round of remote testing.

Remote testing will be running from 10/31/2004 to 11/13/2004 midnight.

Remote testing for PA3 is similar to PA2's. Your testcases will be the .dpar files and the inputs will be the .decaf files. The same convention is followed: each testcase has multiple input files associated with it, identified by prefixing the input files' names with the testcase's name.
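As a rough illustration of the prefix convention, the sketch below collects the inputs for one testcase. The class name and the file names in the usage note are hypothetical, and the sketch assumes a plain "starts with the testcase's base name" match; the real convention may require a specific separator after the prefix.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of matching input files to a testcase by name prefix.
final class InputMatcher {

    // Returns the .decaf files whose names start with the testcase's base name
    // (the testcase file name without its extension).
    static List<String> inputsFor(String testcaseFile, List<String> allFiles) {
        int dot = testcaseFile.lastIndexOf('.');
        String base = dot < 0 ? testcaseFile : testcaseFile.substring(0, dot);
        List<String> inputs = new ArrayList<>();
        for (String file : allFiles) {
            // Assumption: a bare prefix match, so "expr" would also claim "expression-1.decaf".
            if (file.startsWith(base) && file.endsWith(".decaf")) {
                inputs.add(file);
            }
        }
        return inputs;
    }
}
```

For example, given the files `expr.dpar`, `expr-1.decaf`, `expr-2.decaf`, and `stmt-1.decaf`, calling `InputMatcher.inputsFor("expr.dpar", files)` returns the two `expr-` inputs in their original order.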

Rules:

1) For each .dpar file you create, we will feed it to both our parser generator and yours. With each generated parser, we will run every decaf file associated with the parser spec through your LL1Parser.java and compare the results. Note that you also need a token spec file to run the parser generator and the generated parser. In PA3, we will use the decaf.dtok provided in the starter kit for all testing, because the lexer we use was generated from decaf.dlex.

2) There is an exception to rule 1). Since writing decaf-with-actions.dpar is part of your assignment, to assist you with testing it, we will use our own decaf-with-actions.dpar to test against yours.

3) In each dpar file you create, you can put in your own actions. Our generator will generate those actions too, so when we run our generated parser, your actions will be there and will get executed.

4) Our testing stub will examine the object on top of your semantic stack after parsing. If it's an ASTNode object, we will use ASTPrinter to print it out for comparison. For any other kind of object, we will call its toString() method and print out whatever it returns.
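The dispatch described in rule 4) might look roughly like the sketch below. `ASTNode` and `ASTPrinter` here are minimal stand-ins written for this example, since the real classes' signatures are not shown on this page, and `StackTopPrinter` is a hypothetical name.

```java
// Stand-in for the course's ASTNode type; the real one is not shown here.
interface ASTNode {}

// Stand-in for the course's ASTPrinter; its real output format is not shown here.
final class ASTPrinter {
    static String print(ASTNode node) {
        return "[AST] " + node.getClass().getName();
    }
}

// Hypothetical sketch of how a testing stub could render the top of the semantic stack.
final class StackTopPrinter {

    // An empty stack is not covered by the rule and is not handled here.
    static String describe(Object top) {
        if (top instanceof ASTNode) {
            return ASTPrinter.print((ASTNode) top); // AST nodes go through the printer
        }
        return top.toString(); // any other object is printed via toString()
    }
}
```

One practical consequence is that any non-AST object you leave on top of the stack should have a meaningful `toString()`, since that string is what gets compared.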

When remote testing PA2, since implementing decaf.dlex is part of your assignment, we will help you test it. Here is what will happen:

However, for other dlex files, we will apply your testcase file to both your LexerCodeGenerator.java and ours. In these cases, we don't guarantee that the reference solution will output the correct line numbers.

In PA2, there are two kinds of files you can create to do remote testing.

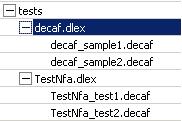

Here is a picture that illustrates the naming convention described above:

In PA2, to ensure comparability, we will use our own code to invoke your LexerCodeGenerator and LookaheadLexer. The testing methodology is similar to your part 4: we will call LookaheadLexer with the LexerCode.java generated from the dlex-dtok pair to do the actual lexing.