Project 1: Images of the Russian Empire

About

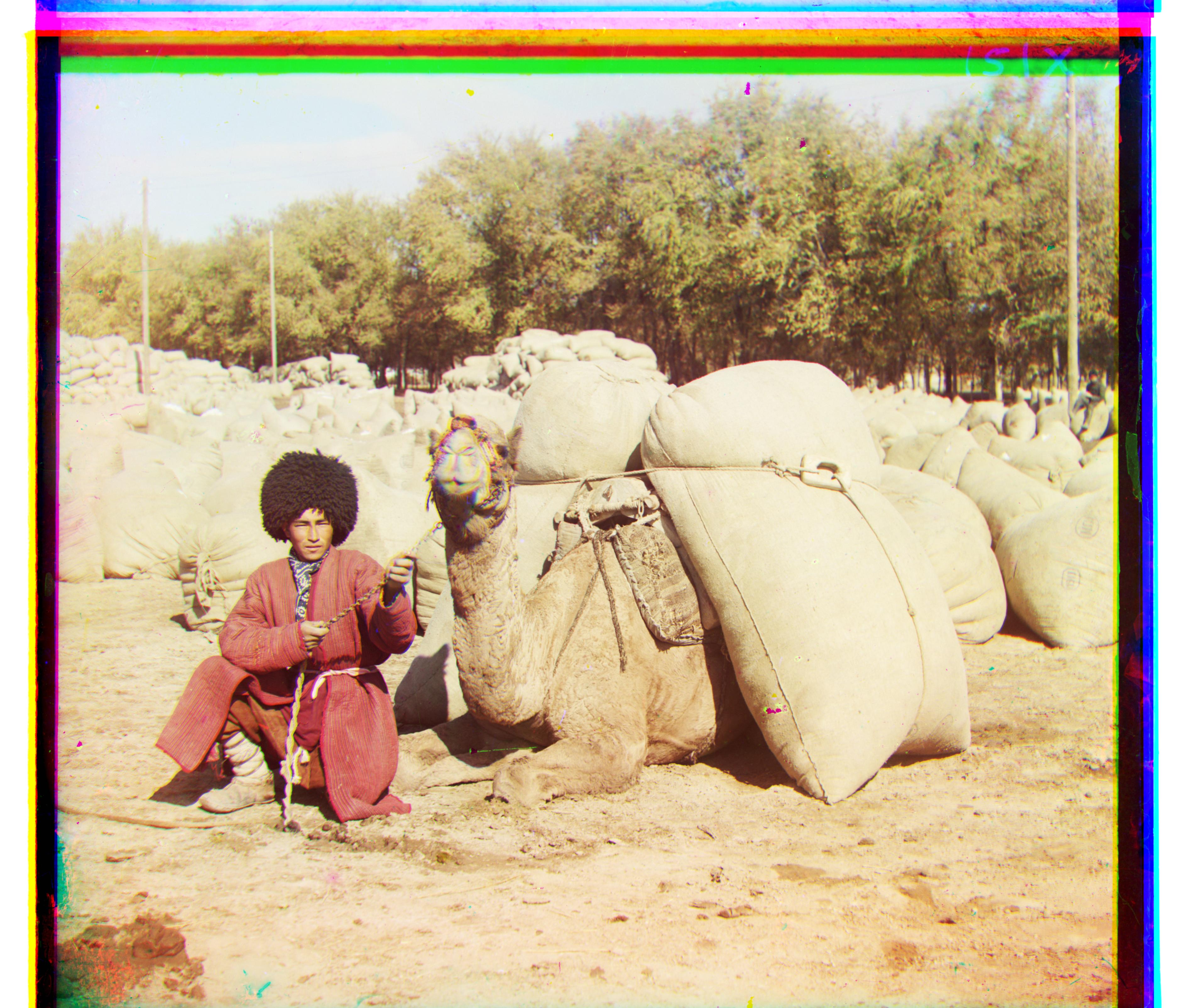

In this project, we explore image alignment through Sergei Prokudin-Gorskii's method of color photography. Given three plates of the same scene, each taken through a different colored filter, we can reconstruct a color photo by applying the corresponding color and intensity to each plate and then overlaying all three plates. Unfortunately, the plates are not perfectly aligned, so we have to find the best alignment. We do this by aligning the green and red plates to the blue plate, which is used as the reference. Below each image is the pixel shift used for the red and green plates, respectively.
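As a rough illustration of the reconstruction step, the sketch below shifts the red and green plates and stacks them with the blue reference into an RGB array. The function name `compose_rgb`, the use of `np.roll` (a circular shift), and the (row, column) order of the shifts are assumptions made for this example, not details taken from the project code.

```python
import numpy as np

def compose_rgb(blue, green, red, green_shift, red_shift):
    """Shift the green and red plates onto the blue reference and stack as RGB.

    Shifts are assumed to be (row, column) pairs; np.roll wraps pixels
    around the border, which is a simplification.
    """
    g = np.roll(green, green_shift, axis=(0, 1))
    r = np.roll(red, red_shift, axis=(0, 1))
    # Channel order R, G, B along the last axis.
    return np.dstack([r, g, blue])
```

For example, `compose_rgb(b, g, r, (5, 2), (12, 3))` would apply the shifts listed under the first small image, assuming they are stored in that order.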

Approach

The first approach was to do a complete cross-correlation over the entirety of all three images and find the shift that resulted in the largest cross-correlation value. This worked great for the small images, but is infeasible for the larger .tif files. Each of these images is around 3000x3000 pixels, so even a single dot product takes a non-trivial amount of time. To deal with these images, I used an image pyramid, starting on a shrunken image and working my way upwards. I tried both sum of squared differences (SSD) and normalized cross-correlation (NCC) and found that NCC achieves much better performance with almost the same results.
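A minimal sketch of the two metrics and the exhaustive search, assuming single-channel float arrays of equal shape. The function names, the circular shift via `np.roll`, and the default search radius of 15 pixels are assumptions for illustration; the write-up does not state the window used for the small images.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences: lower means a better match."""
    return float(np.sum((a - b) ** 2))

def ncc(a, b):
    """Normalized cross-correlation: higher means a better match."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0

def exhaustive_align(target, reference, radius=15):
    """Try every (row, column) shift in [-radius, radius] and keep the best NCC."""
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(target, (dy, dx), axis=(0, 1))
            score = ncc(shifted, reference)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

Swapping in SSD only requires minimizing `ssd` instead of maximizing `ncc`. The cost grows with both the image area and the square of the radius, which is why this direct search becomes impractical on the large scans.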

Image Pyramid

For the image pyramid, I begin with the full image and recursively call the alignment function on versions of the target and reference images rescaled to half size. I do this until I reach the base case of an image that has either a length or width of less than 250 pixels. At this point, I just perform the original full-image alignment used on the smaller images. Once I find the best shift, I return it to the level above, rescale the shift by a factor of 2 (to accommodate the resizing), and then search a [-30, 30] area around it to find the best shift at this level. This continues until the outermost level is reached.
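The recursion described above can be sketched roughly as follows. The 250-pixel base case, the factor-of-2 rescaling, and the [-30, 30] refinement window come from the text; the helper names, the 2x2 block averaging used as a stand-in for a 0.5 resize, and the base-case radius of 15 are assumptions for this example.

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation: higher means a better match."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0

def search(target, reference, center, radius):
    """Try every shift within `radius` of `center` and keep the best NCC."""
    best_score, best_shift = -np.inf, center
    cy, cx = center
    for dy in range(cy - radius, cy + radius + 1):
        for dx in range(cx - radius, cx + radius + 1):
            score = ncc(np.roll(target, (dy, dx), axis=(0, 1)), reference)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift

def halve(img):
    """Downscale by 2 with 2x2 block averaging (assumed stand-in for a 0.5 resize)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def pyramid_align(target, reference, min_size=250, base_radius=15, refine_radius=30):
    """Coarse-to-fine alignment: solve at half size, double the shift, refine."""
    if min(target.shape) < min_size:
        # Base case: the image is small enough for the plain exhaustive search.
        return search(target, reference, (0, 0), base_radius)
    coarse = pyramid_align(halve(target), halve(reference),
                           min_size, base_radius, refine_radius)
    # A shift found at half resolution is worth twice as many pixels here.
    center = (2 * coarse[0], 2 * coarse[1])
    return search(target, reference, center, refine_radius)
```

Since halving introduces at most about a pixel of error per level, a much smaller refinement window would likely suffice in this sketch; the value of 30 is kept as the default only to match the description.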

Issues

An issue I ran into was the borders interfering with the alignment (giving bad values from NCC), so I cropped the images before applying NCC and performing the search. I ended up removing 1/3 from each edge, so only the middle 1/9 of each image is used, which seems to fix the problem.
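A small sketch of that crop, assuming a NumPy array with rows and columns as the first two axes. The function name `center_crop` and its `divisor` parameter are hypothetical.

```python
import numpy as np

def center_crop(img, divisor=3):
    """Drop 1/divisor of the image from each edge, keeping the central region.

    With divisor=3 this keeps the middle third in each dimension,
    i.e. the middle 1/9 of the image area.
    """
    h, w = img.shape[:2]
    dy, dx = h // divisor, w // divisor
    return img[dy:h - dy, dx:w - dx]
```

One way to use it is to score only the cropped regions, for instance comparing `center_crop(shifted_target)` against `center_crop(reference)`, so that the plate borders never enter the metric.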

An issue I ran into that I did not manage to fix was Emir's photo. Because the filters drastically change the values of the pixels (due to the color of his robe), neither NCC nor SSD with pixel values as features gives a better score when the images are actually aligned. If we look at the image through the blue filter, we see that his robe is mostly white, while through the other filters it is much darker.

Images

Small Aligned Images

red: [12, 3], green: [5, 2]

red: [3, 2], green: [339, 2]

red: [8, 0], green: [345, 1]

red: [15, -1], green: [7, 0]

Large Aligned Images

red: [124, 15], green: [59, 17]

red: [90, 23], green: [41, 18]

red: [112, 3772], green: [51, 7]

red: [175, 37], green: [78, 29]

red: [109, 11], green: [50, 14]

red: [86, 33], green: [42, 6]

red: [116, 28], green: [56, 22]

red: [137, 3841], green: [64, 12]

red: [-188, -36], green: [48, 24]

Additional Large Aligned Images

red: [133, 3755], green: [12, 3760]

red: [35, 38], green: [9, 19]

red: [75, 3770], green: [34, 23]

red: [55, 30], green: [21, 18]