The Prokudin-Gorskii glass plate images contain three exposures of a scene, each recorded through a red, green, or blue filter. The goal of this project is to split these images into the separate color channels and align them into a single colorized image. To do so, we fix one of the channels (in this case, the blue channel) as the reference and align the other two channels against it.
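As a rough illustration of the splitting step, the sketch below cuts a plate scan into three equal strips and stacks aligned channels into an RGB image. It assumes the three exposures are stacked vertically in the order blue, green, red; the function names `split_plate` and `stack_rgb` are hypothetical and not taken from our code.

```python
import numpy as np

def split_plate(plate):
    """Split a plate scan into (blue, green, red) strips.

    Assumes the exposures are stacked top to bottom as blue, green, red.
    """
    height = plate.shape[0] // 3  # leftover rows at the bottom are dropped
    blue = plate[:height]
    green = plate[height:2 * height]
    red = plate[2 * height:3 * height]
    return blue, green, red

def stack_rgb(red, green, blue):
    """Combine three equally sized channels into an (H, W, 3) RGB array."""
    return np.dstack([red, green, blue])
```

The green and red strips would be shifted by their displacements before being passed to `stack_rgb`.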

For lower resolution images, we perform a single-scale exhaustive search over a window of possible (x, y) displacements. We score each displacement with a metric and keep the displacement with the lowest score. The metric used to obtain the results below is simply the L2-norm of the difference between the reference and displaced channels (i.e. `score = numpy.linalg.norm(reference_image - displaced_image)`).
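A minimal sketch of this search is given below. It assumes displacements are applied as circular shifts with `np.roll`, and the default window of 15 pixels is an illustrative choice; the helper names `shift` and `align_single_scale` are hypothetical.

```python
import numpy as np

def shift(channel, dx, dy):
    """Circularly shift a channel by dx columns and dy rows."""
    return np.roll(channel, shift=(dy, dx), axis=(0, 1))

def align_single_scale(reference, channel, window=15):
    """Return the (dx, dy) in [-window, window]^2 with the lowest L2 score."""
    # Convert to float so that subtracting integer images cannot wrap around.
    reference = np.asarray(reference, dtype=np.float64)
    channel = np.asarray(channel, dtype=np.float64)
    best_score, best = np.inf, (0, 0)
    for dx in range(-window, window + 1):
        for dy in range(-window, window + 1):
            score = np.linalg.norm(reference - shift(channel, dx, dy))
            if score < best_score:
                best_score, best = score, (dx, dy)
    return best
```

The cost grows with the square of the window size times the number of pixels, which is why this approach is only practical for the smaller images.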

For higher resolution images, this exhaustive search is too slow to run. Instead, we use an image pyramid to speed up the search. Conceptually, each step up the image pyramid scales down the resolution of the image by a factor of 2. To align the images, we perform the single-scale exhaustive search described above in a small window on the uppermost level of the pyramid (lowest resolution), then use the displacement found there to choose the window to search on the next lower level. Because the resolution doubles from one level to the next, the displacement is doubled as well. For instance, if we only search a window of [-2, 2] by [-2, 2] at each level and the best displacement on the uppermost level is (-1, 0), the window we search on the second level is a window of [-2, 2] by [-2, 2] centered at (-2, 0). Specifically, this is the window of [-4, 0] in the x direction and [-2, 2] in the y direction. We repeat this until we reach the lowest level (full resolution) of the image pyramid. The displacement found on that final level is the one we take.
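The coarse-to-fine procedure above can be sketched recursively. This is an illustration under stated assumptions, not our exact implementation: downscaling is done by averaging 2x2 blocks, displacements are circular shifts, and the names `downscale`, `search`, `align_pyramid`, and the `min_size` stopping threshold are all hypothetical.

```python
import numpy as np

def downscale(image):
    """Halve the resolution by averaging 2x2 blocks (a simple stand-in resize)."""
    h, w = image.shape[0] // 2 * 2, image.shape[1] // 2 * 2
    return image[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def search(reference, channel, center, radius):
    """Exhaustive L2 search in a (2*radius+1)^2 window around center."""
    cx, cy = center
    best_score, best = np.inf, center
    for dx in range(cx - radius, cx + radius + 1):
        for dy in range(cy - radius, cy + radius + 1):
            moved = np.roll(channel, shift=(dy, dx), axis=(0, 1))
            score = np.linalg.norm(reference - moved)
            if score < best_score:
                best_score, best = score, (dx, dy)
    return best

def align_pyramid(reference, channel, radius=2, min_size=32):
    """Coarse-to-fine alignment; returns the full-resolution (dx, dy)."""
    reference = np.asarray(reference, dtype=np.float64)
    channel = np.asarray(channel, dtype=np.float64)
    if min(reference.shape) <= min_size:
        # Uppermost pyramid level: search around zero displacement.
        return search(reference, channel, (0, 0), radius)
    coarse = align_pyramid(downscale(reference), downscale(channel),
                           radius, min_size)
    # A displacement at half resolution corresponds to twice that
    # displacement here, e.g. (-1, 0) becomes the center (-2, 0).
    center = (2 * coarse[0], 2 * coarse[1])
    return search(reference, channel, center, radius)
```

With a fixed radius, each level costs a constant number of score evaluations, so the total work is dominated by the full-resolution level rather than by the size of the displacement.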

In an earlier version of our algorithm, even the single-scale exhaustive search did not successfully align the images. To fix this, we score displacements using only the inner 2/3 of each channel (i.e. we crop 1/6 off each edge before searching over possible displacements). The edges were often faded or eroded, which skewed our metric calculations. After cropping, our images aligned properly.
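The crop itself is a one-line slice. The sketch below removes 1/6 of the height and width from each side, keeping the inner 2/3; the name `crop_inner` and the `divisor` parameter are hypothetical.

```python
import numpy as np

def crop_inner(channel, divisor=6):
    """Drop 1/divisor of the image from each edge (inner 2/3 for divisor=6)."""
    h, w = channel.shape[:2]
    dh, dw = h // divisor, w // divisor
    return channel[dh:h - dh, dw:w - dw]
```

The cropped channels would only be used for scoring; the displacement found is then applied to the uncropped channels.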

The lower resolution jpg images that we aligned look as follows.

| Image name | Aligned image | Displacement (x, y) |
|---|---|---|
| cathedral.jpg |  | Green displacement: (2, 5); Red displacement: (3, 12) |
| monastery.jpg |  | Green displacement: (2, -3); Red displacement: (2, 3) |
| nativity.jpg |  | Green displacement: (1, 3); Red displacement: (0, 8) |
| settlers.jpg |  | Green displacement: (0, 7); Red displacement: (-1, 15) |

The higher resolution tif images that we aligned look as follows. Note that emir.tif is not fully aligned. This is explained in a later section, titled "Algorithmic failures".

| Image name | Aligned image | Displacement (x, y) |

|---|---|---|

| emir.jpg |  |

Green displacement: (24, 49) Red displacement: (61, 102) |

| harvesters.jpg |  |

Green displacement: (17, 59) Red displacement: (14, 124) |

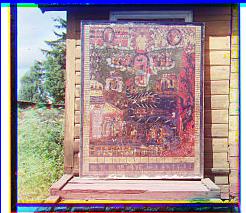

| icon.jpg | Green displacement: (17, 41) Red displacement: (23, 89) |

|

| lady.jpg |  |

Green displacement: (9, 55) Red displacement: (12, 117) |

| self_portrait.jpg |  |

Green displacement: (29, 78) Red displacement: (37, 176) |

| three_generations.jpg |  |

Green displacement: (14, 53) Red displacement: (12, 111) |

| train.jpg |  |

Green displacement: (6, 43) Red displacement: (32, 87) |

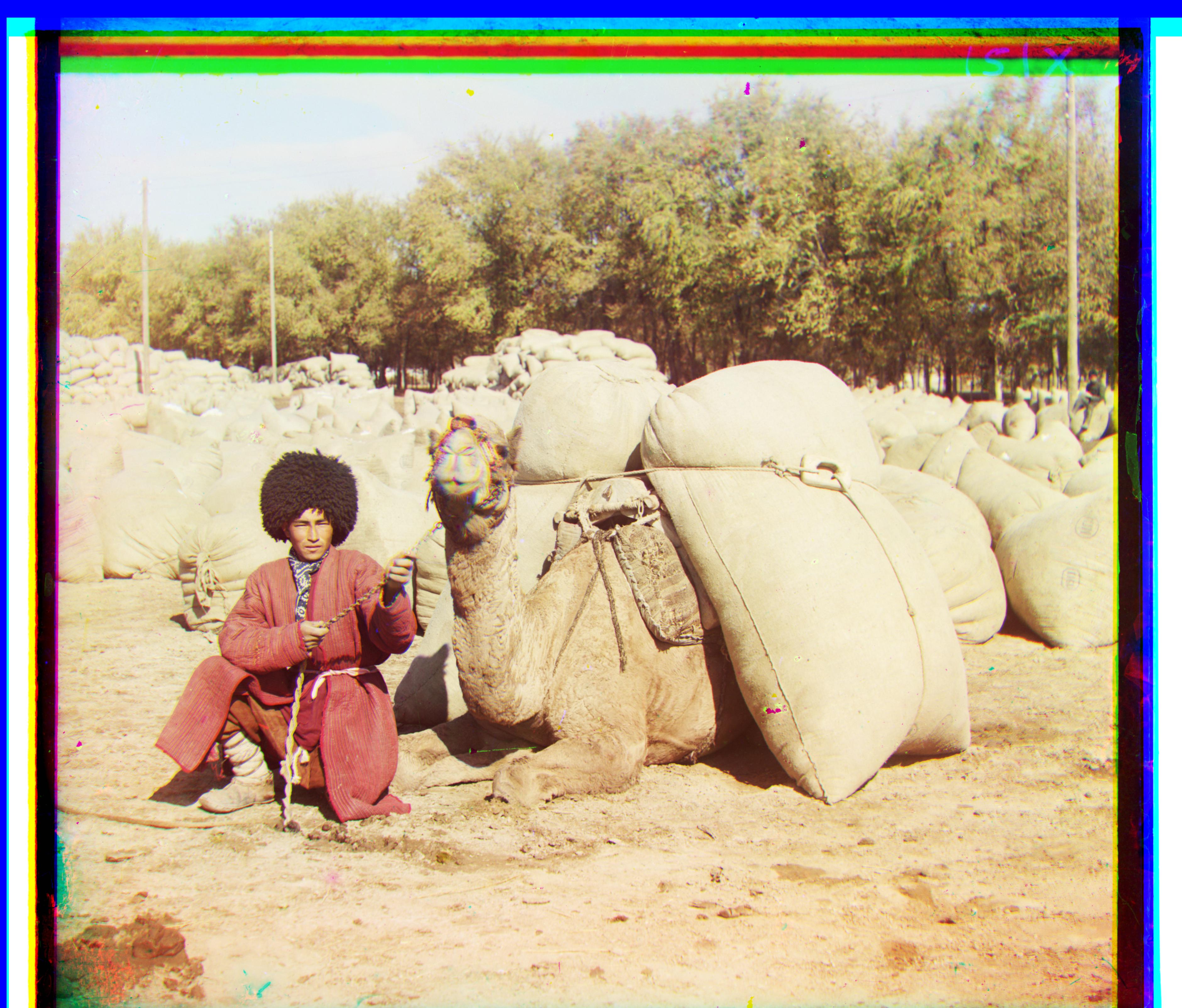

| turkmen.jpg |  |

Green displacement: (21, 56) Red displacement: (28, 116) |

| village.jpg |  |

Green displacement: (12, 65) Red displacement: (22, 128) |

These additional examples were selected from the Prokudin-Gorskii collection.

| Image name | Aligned image | Displacement (x, y) |
|---|---|---|
| hobbit_house.jpg |  | Green displacement: (1, 0); Red displacement: (3, 2) |
| river.jpg |  | Green displacement: (0, 2); Red displacement: (0, 4) |
| wooden_screen.jpg |  | Green displacement: (1, 3); Red displacement: (1, 7) |

Our algorithm does not properly align emir.tif. In this image, the differences in brightness between the channels are large enough to skew our metric. For instance, the robe (see above) is much brighter in the blue (first) channel than in the other two. Since we align against the blue channel, this has a fairly big impact on the alignment of the red channel, which is much darker in the robe region and much lighter on the doors. In the resulting colorized image for emir.tif (see above), the noticeably misaligned regions come from the red and blue channels.

For this part, we used an edge detector to improve our alignment, specifically the Canny edge detector in the package skimage.feature. The idea is to apply the edge detector to each color channel and align the resulting edge maps instead of the raw color channels. We then apply the displacements found this way to the original color channels to colorize the image. The results of doing this for emir.tif are shown below. Notice that the small offsets between the color channels seen previously are gone. We are still using an image pyramid here.
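The sketch below illustrates aligning on edge maps rather than raw intensities. To keep it dependency-free, the default edge detector is a thresholded gradient magnitude, which is a simplified stand-in and not Canny; a plain single-scale search is used instead of the pyramid. All names here (`gradient_edges`, `exhaustive_align`, `align_on_edges`) and the threshold value are hypothetical.

```python
import numpy as np

def gradient_edges(channel, threshold=0.1):
    """Stand-in edge detector: thresholded gradient magnitude (not Canny)."""
    gy, gx = np.gradient(np.asarray(channel, dtype=np.float64))
    return np.hypot(gx, gy) > threshold

def exhaustive_align(reference, channel, window=5):
    """Single-scale L2 search over displacements in [-window, window]^2."""
    best_score, best = np.inf, (0, 0)
    for dx in range(-window, window + 1):
        for dy in range(-window, window + 1):
            moved = np.roll(channel, shift=(dy, dx), axis=(0, 1))
            score = np.linalg.norm(reference - moved)
            if score < best_score:
                best_score, best = score, (dx, dy)
    return best

def align_on_edges(reference, channel, edge_detector=gradient_edges, window=5):
    """Find (dx, dy) from edge maps, then shift the original channel by it."""
    ref_edges = edge_detector(reference).astype(np.float64)
    ch_edges = edge_detector(channel).astype(np.float64)
    dx, dy = exhaustive_align(ref_edges, ch_edges, window)
    aligned = np.roll(channel, shift=(dy, dx), axis=(0, 1))
    return (dx, dy), aligned
```

To use Canny as described above, and assuming scikit-image is installed, one could pass `edge_detector=canny` after `from skimage.feature import canny`. Because the edge maps are binary, a channel that is uniformly darker or brighter than the reference produces the same edges, which is what makes this less sensitive to the brightness mismatch seen in emir.tif.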

Styling of this page is modified from bettermotherfuckingwebsite.com.