green_displacement = [2,5] red_displacement = [3,12]

The goal of this project is to find the proper alignment of three glass plate images, taken through red, green, and blue filters, and combine them into a single RGB color image. The general approach is to use a loss function to find the optimal displacement between two images, then shift one image by that displacement so the two line up. I aligned green to blue and then red to blue, and afterwards stacked all three channels together using dstack.

To start, I wrote an align function that compares different feature boxes to find the best displacement. I chose a feature window of about 300 by 300 pixels centered on the center of the blue plate image. I then slid a window of the same size over the other plate, with its middle pixel ranging over a 40 by 40 box around the original middle pixel. Using Normalized Cross Correlation (NCC) to compare the blue feature box against each candidate box on the other plate, I picked the candidate with the best score. The best displacement is the offset from the middle coordinate of the image to the middle coordinate of that best feature box, and the non-reference image is then shifted by these displacement coordinates.

For larger images, a recursive image pyramid was needed to align the images well. At each level, the image is rescaled by 0.5 and align is run on the smaller image, recursing until the image reaches a small enough base-case size. The coordinates returned from the 0.5-scaled image are doubled to account for the change in resolution, and the non-reference image is realigned with them. Align is then run again on the reference image and this realigned non-reference image to get a second, refining pair of coordinates. Finally, the coarse coordinates are added to these refining coordinates to get the displacement vector at the current level.
This process is repeated all the way up the recursive pyramid, and it makes the displacement vectors much more accurate for very large images. NumPy was very helpful: np.roll made it easy to shift the non-reference images by the displacement values, multidimensional array indexing made it easy to cut out the many different feature boxes, and it made the matrix multiplication easy. Lastly, the displacement coordinates shown on this site are in [Y,X] format.
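The single-scale search, the pyramid recursion, and the final stacking described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the code used to produce the results on this page. The function names (`ncc`, `align`, `downscale`, `pyramid_align`, `colorize`) are hypothetical. The 2x2 block averaging used for the 0.5 rescale, the `min_size` base case of 400 pixels, and the ±2 pixel `refine` search at each finer level are assumed values. The sketch also assumes a sign convention in which `align` returns the [Y, X] shift to pass to `np.roll`. Under that convention, shifts at different pyramid levels simply add once the coarse one has been doubled.

```python
import numpy as np


def ncc(a, b):
    """Normalized cross-correlation of two equal-size patches (1.0 = perfect match)."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0


def align(ref, mov, window=300, search=20):
    """Single-scale search: return the [dy, dx] shift that, passed to np.roll,
    best aligns `mov` with `ref` (both 2-D arrays of the same shape)."""
    h, w = ref.shape
    cy, cx = h // 2, w // 2
    # Half-size of the central feature box: about window/2, shrunk if needed
    # so that every candidate box stays inside the image.
    half = min(window // 2, min(h, w) // 2 - search)
    if half <= 0:
        raise ValueError("image too small for this search range")
    ref_box = ref[cy - half:cy + half, cx - half:cx + half]
    best, best_score = (0, 0), -np.inf
    # Slide a same-size box over `mov`, its centre within +/-search of the image centre.
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y0, x0 = cy + dy - half, cx + dx - half
            score = ncc(ref_box, mov[y0:y0 + 2 * half, x0:x0 + 2 * half])
            if score > best_score:
                best, best_score = (dy, dx), score
    # The matching content sits at centre + best in `mov`; rolling by the
    # negated offset moves it back to the centre.
    return np.array([-best[0], -best[1]])


def downscale(img):
    """Rescale by 0.5 using 2x2 block averaging (one simple choice of resize)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def pyramid_align(ref, mov, min_size=400, search=20, refine=2):
    """Coarse-to-fine alignment; returns the np.roll shift at full resolution."""
    if max(ref.shape) <= min_size:
        return align(ref, mov, search=search)
    # Solve at half resolution, then double the shift for this resolution.
    coarse = 2 * pyramid_align(downscale(ref), downscale(mov), min_size, search, refine)
    shifted = np.roll(mov, tuple(coarse), axis=(0, 1))
    # Refine around the coarse estimate and add the two shifts together.
    return coarse + align(ref, shifted, search=refine)


def colorize(b, g, r, **kwargs):
    """Align green and red to blue, then stack the channels into an RGB image."""
    g_shift = pyramid_align(b, g, **kwargs)
    r_shift = pyramid_align(b, r, **kwargs)
    g_aligned = np.roll(g, tuple(g_shift), axis=(0, 1))
    r_aligned = np.roll(r, tuple(r_shift), axis=(0, 1))
    return np.dstack([r_aligned, g_aligned, b]), g_shift, r_shift
```

Returning an `np.roll` shift, rather than the raw box offset, keeps each pyramid level's job to a small correction around the doubled coarse estimate. Note that `np.roll` wraps pixels around the border. That is harmless for the central feature box, but the wrapped edge strip of a real plate would normally be cropped.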

green_displacement = [2,5] red_displacement = [3,12]

green_displacement = [23, 48] red_displacement = [-19, 4]

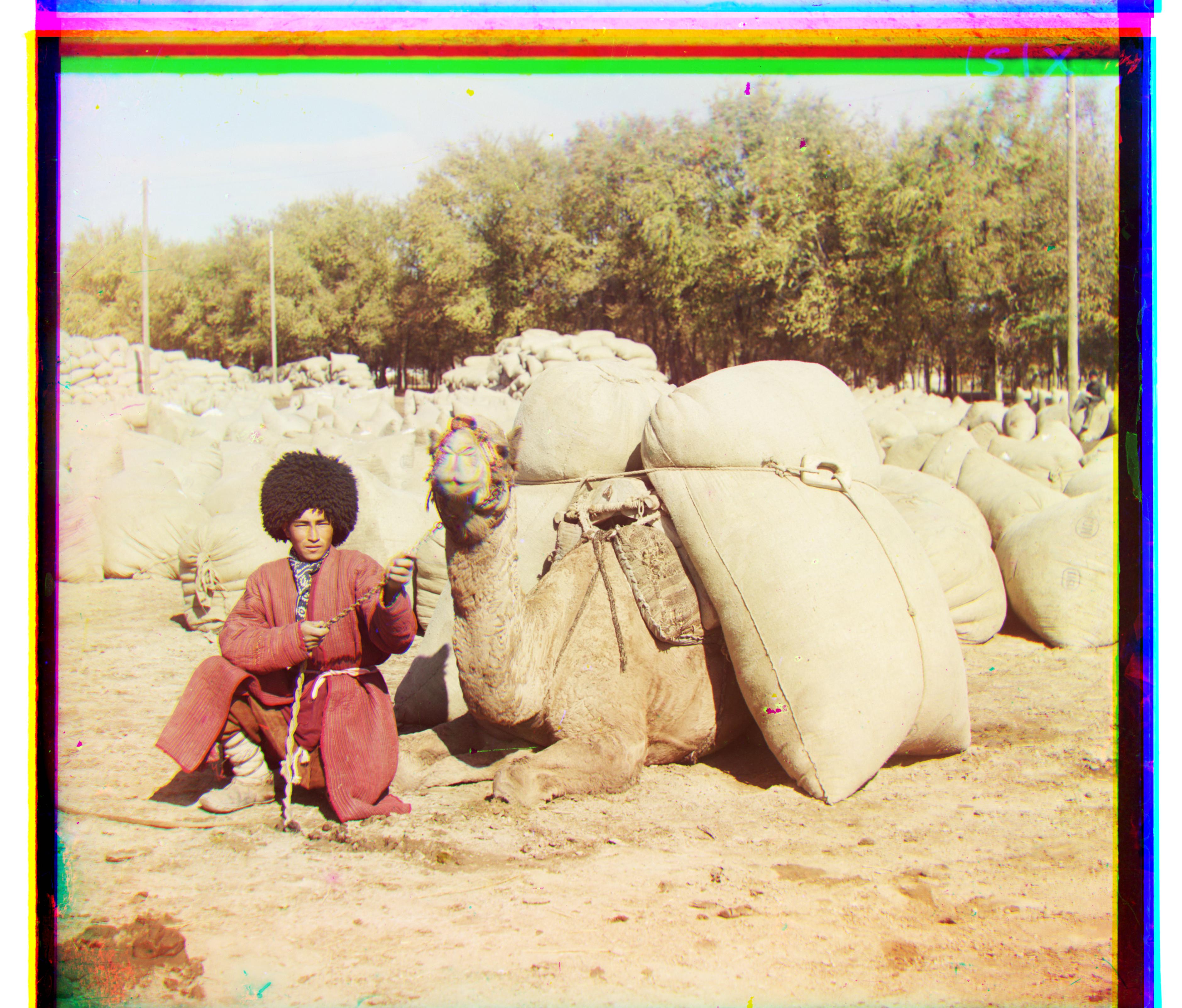

green_displacement = [18, 59] red_displacement = [16, 123]

green_displacement = [7, 53] red_displacement = [10, 113]

green_displacement = [2, -3] red_displacement = [2, -3]

green_displacement = [1, 3] red_displacement = [0, 8]

green_displacement = [29, 77] red_displacement = [37, 175]

green_displacement = [0, 7] red_displacement = [-1, 14]

green_displacement = [15, 49] red_displacement = [11, 109]

green_displacement = [6, 42] red_displacement = [32, 85]

green_displacement = [22, 56] red_displacement = [29, 117]

green_displacement = [13, 64] red_displacement = [23, 137]

Further Prokudin-Gorskii collection images.

green_displacement = [2, 1] red_displacement = [3, 3]

green_displacement = [1, 3] red_displacement = [1, 6]

green_displacement = [-2, 5] red_displacement = [-6, 11]

The only image that aligned really poorly with my algorithm is emir.tif. From what I read, the pixel intensities of the different plate images vary greatly from one another, especially in the richly colored robe. These differing intensities keep the algorithm from aligning the image effectively, so the emir image looks like several slightly offset images layered over each other rather than one perfectly aligned picture.
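NCC subtracts each patch's mean and divides by its norm, so it already tolerates a plate that is uniformly brighter or darker than the others. The toy sketch below uses synthetic arrays rather than the actual emir plates, and all of its names are illustrative. It suggests why a region like the robe can still cause trouble. Where one plate is bright and another is dark, those pixels anti-correlate, which drags the score down even at the correct alignment and can leave wrong shifts competitive.

```python
import numpy as np


def ncc(a, b):
    """Normalized cross-correlation of two equal-size arrays."""
    a = a - a.mean()
    b = b - b.mean()
    return float(np.sum(a * b) / (np.linalg.norm(a) * np.linalg.norm(b)))


rng = np.random.default_rng(1)
blue = rng.random((100, 100))

# Same content, different exposure: a global gain and offset.
green = 0.4 * blue + 0.3

# Same content, but a central "robe" region that is bright under one
# filter and dark under the other (intensity inverted locally).
red = blue.copy()
red[20:80, 20:80] = 1.0 - red[20:80, 20:80]

print(ncc(blue, green))  # 1.0 up to rounding: the gain and offset cancel out
print(ncc(blue, red))    # much lower, even though the arrays are perfectly aligned
```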