Offsets, red and green respectively.

[5, 2]

[12, 3]

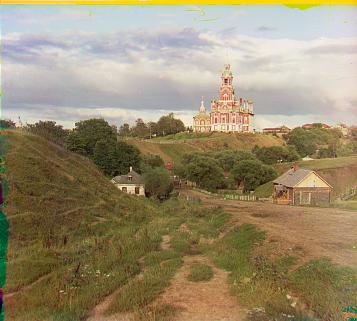

The goal of the project is to combine three similar images, each taken through a different color filter, into a single color image. However, the three images are not necessarily perfectly aligned with one another. If the misalignment is ignored, the channels do not line up when they are combined, and the result shows blurred edges with colored fringes.
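As a minimal sketch of the combining step, the helpers below split a scanned plate into its three channels and zip them into color pixels. They assume (this is not stated above) that the plate stacks the blue, green, and red exposures from top to bottom in equal thirds, and they represent images as plain Python lists of rows; `split_plate` and `combine` are hypothetical names.

```python
# Hypothetical helpers: images are lists of rows of floats in [0, 1].

def split_plate(plate):
    """Split a tall scanned plate into three equal-height channels.

    Assumes the filters are stacked top to bottom as blue, green, red,
    and drops any leftover rows when the height is not divisible by 3.
    """
    h = len(plate) // 3
    blue = plate[0:h]
    green = plate[h:2 * h]
    red = plate[2 * h:3 * h]
    return blue, green, red


def combine(red, green, blue):
    """Zip three (already aligned) channels into rows of (r, g, b) pixels."""
    return [
        [(r, g, b) for r, g, b in zip(r_row, g_row, b_row)]
        for r_row, g_row, b_row in zip(red, green, blue)
    ]
```

Combining only gives a clean result once the green and red channels have been aligned to blue, which is what the rest of this section is about.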

To align relatively small images, I took a small window in the center of the blue channel and compared it against a window of the same size in the 'other' channel (green or red), scoring each match with either the L2 norm of the difference between the two windows or their normalized cross-correlation. I then moved this window over a fixed area around the center of the 'other' channel and kept track of the displacement with the best score (the lowest L2 norm or the highest normalized cross-correlation).
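The single-scale search can be sketched as follows. This is an illustration under assumptions, not the code used for the results here: images are lists of rows of floats, `window`, `ssd`, `ncc`, and `best_offset` are hypothetical names, and the search area is a square extending `radius` pixels around the center. The L2 score is computed as a sum of squared differences, which ranks candidates the same way as the L2 norm.

```python
import math


def window(img, top, left, size):
    """Return the size x size window whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in img[top:top + size]]


def ssd(a, b):
    """Sum of squared differences (squared L2 norm); lower means more similar."""
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def ncc(a, b):
    """Normalized cross-correlation; 1.0 means identical up to a positive gain and an offset."""
    fa = [x for row in a for x in row]
    fb = [x for row in b for x in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    da = [x - ma for x in fa]
    db = [y - mb for y in fb]
    norm = math.sqrt(sum(x * x for x in da)) * math.sqrt(sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / norm if norm else 0.0


def best_offset(blue, other, size, radius, metric="ncc"):
    """Exhaustively search a (2*radius+1)^2 area for the best-matching window.

    Returns (dy, dx) such that the centre window of `blue` best matches the
    window of `other` whose top-left corner is displaced by (dy, dx).
    """
    h, w = len(blue), len(blue[0])
    top, left = (h - size) // 2, (w - size) // 2
    ref = window(blue, top, left, size)
    best, best_score = (0, 0), None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            t, l = top + dy, left + dx
            if t < 0 or l < 0 or t + size > h or l + size > w:
                continue  # candidate window would fall off the image
            cand = window(other, t, l, size)
            # Higher score is better, so the SSD is negated.
            score = -ssd(ref, cand) if metric == "ssd" else ncc(ref, cand)
            if best_score is None or score > best_score:
                best, best_score = (dy, dx), score
    return best
```

To line the other channel up with blue, it would then be shifted by `(-dy, -dx)`, for example with a circular shift such as `numpy.roll`.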

With larger images, the displacements can be large in absolute terms, and searching through a correspondingly large area would be very inefficient. To align larger images, I therefore implemented a recursive image pyramid. At each level, I downscale both channels by half and recursively find the optimal displacement at that coarser scale. I then scale this displacement back up so that it applies to the current image, and center the search for the optimal window at the location shifted by it. This works because downscaling keeps the general features of the image intact but loses some precision, which the search at each finer scale then recovers.
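A sketch of the recursive pyramid, again on plain lists of rows and not the exact code behind these results: each level halves the image by averaging 2x2 blocks, the comparison window is half the smaller image dimension, normalized cross-correlation is the score, and the base case (an exhaustive search with `base_radius`) is reached once the image is at most `min_size` pixels on a side. The function names and default parameters are hypothetical.

```python
import math


def halve(img):
    """Downscale by 2 by averaging each 2x2 block (odd trailing rows/cols dropped)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4
             for x in range(w)] for y in range(h)]


def window(img, top, left, size):
    """Return the size x size window whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in img[top:top + size]]


def ncc(a, b):
    """Normalized cross-correlation of two equally sized windows."""
    fa = [x for row in a for x in row]
    fb = [x for row in b for x in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    da = [x - ma for x in fa]
    db = [y - mb for y in fb]
    norm = math.sqrt(sum(x * x for x in da)) * math.sqrt(sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / norm if norm else 0.0


def search(blue, other, center, radius):
    """Best (dy, dx) within `radius` of `center`, using a centre window of blue."""
    h, w = len(blue), len(blue[0])
    size = min(h, w) // 2
    top, left = (h - size) // 2, (w - size) // 2
    ref = window(blue, top, left, size)
    best, best_score = center, None
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            t, l = top + dy, left + dx
            if t < 0 or l < 0 or t + size > h or l + size > w:
                continue  # candidate window would fall off the image
            score = ncc(ref, window(other, t, l, size))
            if best_score is None or score > best_score:
                best, best_score = (dy, dx), score
    return best


def pyramid_offset(blue, other, min_size=16, base_radius=4, refine_radius=2):
    """Coarse-to-fine displacement of `other` relative to `blue`."""
    if min(len(blue), len(blue[0])) <= min_size:
        return search(blue, other, (0, 0), base_radius)
    cy, cx = pyramid_offset(halve(blue), halve(other),
                            min_size, base_radius, refine_radius)
    # The coarse displacement doubles at this scale; refine it with a small search.
    return search(blue, other, (2 * cy, 2 * cx), refine_radius)
```

Only the base level pays for a wide search; every finer level checks a constant (2 * `refine_radius` + 1)^2 candidates, which is what keeps large displacements affordable.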

To crop the images, I assumed that we wish to crop the final combined image, including the color distortions introduced by rolling the channels. To make things easier, I worked on a greyscale copy of the image. I treated a border as an abrupt change in intensity between adjacent pixels. Comparing only two neighboring pixels would produce many false positives from ordinary features of the image, so instead I iterated over a window of pixels, comparing many pairs of adjacent pixels at once and computing the average difference between them. If this average change exceeded a threshold, I stored its position and cropped the corresponding side of the image up to that point. I repeated this for a couple of iterations to remove the several different borders that can occur.
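The border-detection idea can be sketched like this. It is a simplified, hypothetical version rather than the author's code: it averages the change over entire rows and columns instead of a smaller window, only looks within `margin` (a fraction of the image size) of each edge, converts to greyscale by averaging the three channels, and uses an arbitrary `threshold` on intensities in [0, 1].

```python
def to_grey(rgb):
    """Greyscale copy: plain average of the (r, g, b) values (an assumption)."""
    return [[sum(p) / 3 for p in row] for row in rgb]


def row_jump(grey, y):
    """Mean absolute change between row y and row y + 1."""
    return sum(abs(a - b) for a, b in zip(grey[y], grey[y + 1])) / len(grey[y])


def col_jump(grey, x):
    """Mean absolute change between column x and column x + 1."""
    return sum(abs(row[x] - row[x + 1]) for row in grey) / len(grey)


def crop_borders(rgb, iterations=2, margin=0.1, threshold=0.2):
    """Repeatedly crop each side at the innermost abrupt change near that edge."""
    img = rgb
    for _ in range(iterations):
        grey = to_grey(img)
        h, w = len(grey), len(grey[0])
        my, mx = max(1, int(h * margin)), max(1, int(w * margin))
        top, bottom, left, right = 0, h, 0, w
        for y in range(my):  # near the top: keep the last (innermost) jump
            if row_jump(grey, y) > threshold:
                top = y + 1
        for y in range(max(0, h - 1 - my), h - 1):  # near the bottom: first jump
            if row_jump(grey, y) > threshold:
                bottom = y + 1
                break
        for x in range(mx):  # near the left: keep the last (innermost) jump
            if col_jump(grey, x) > threshold:
                left = x + 1
        for x in range(max(0, w - 1 - mx), w - 1):  # near the right: first jump
            if col_jump(grey, x) > threshold:
                right = x + 1
                break
        img = [row[left:right] for row in img[top:bottom]]
    return img
```

Averaging over a whole row or column is what suppresses the false positives: a single sharp feature inside the picture barely moves the mean, while a border line changes almost every pixel pair along it.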

Offsets, red and green respectively.

[5, 2]

[12, 3]

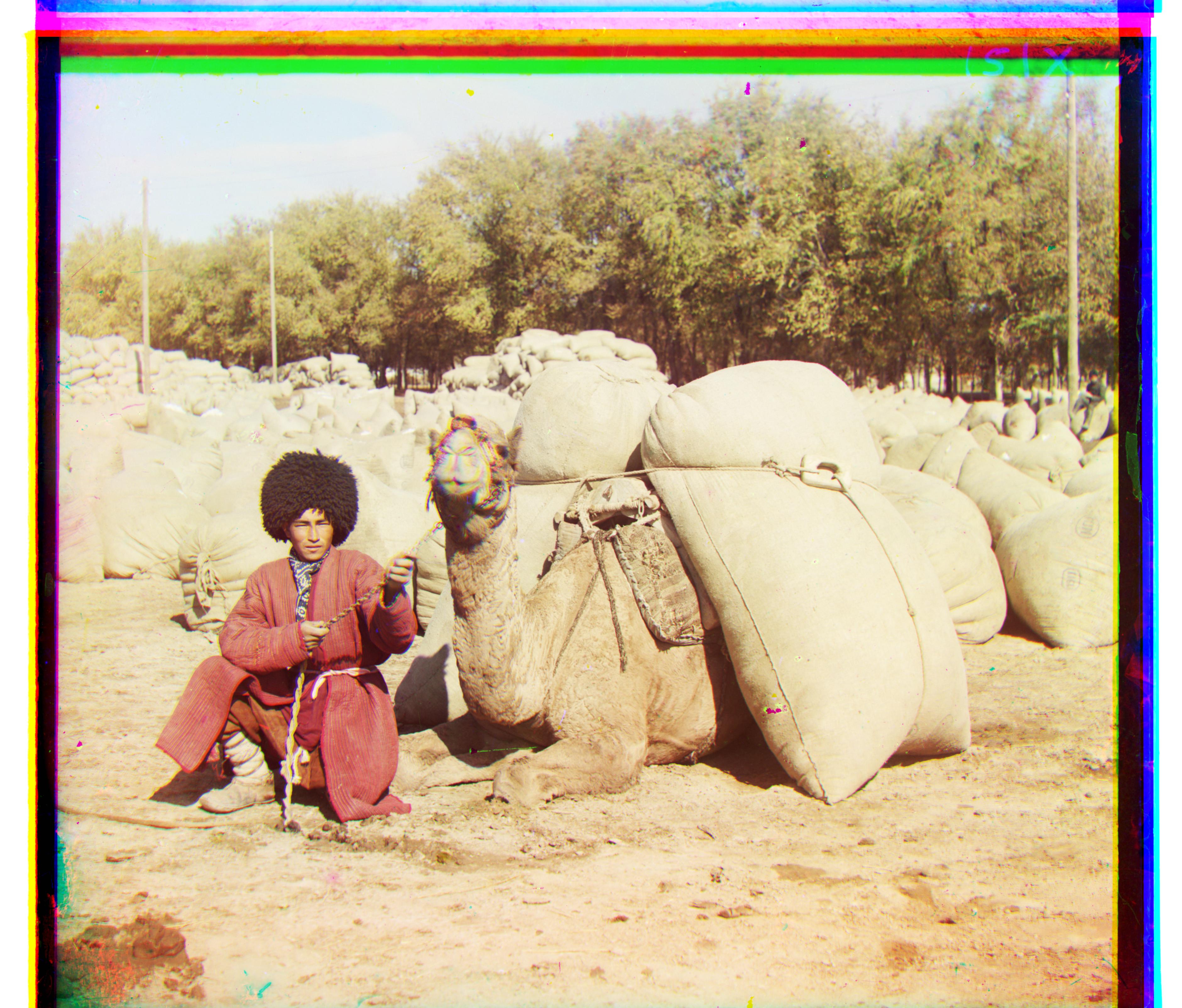

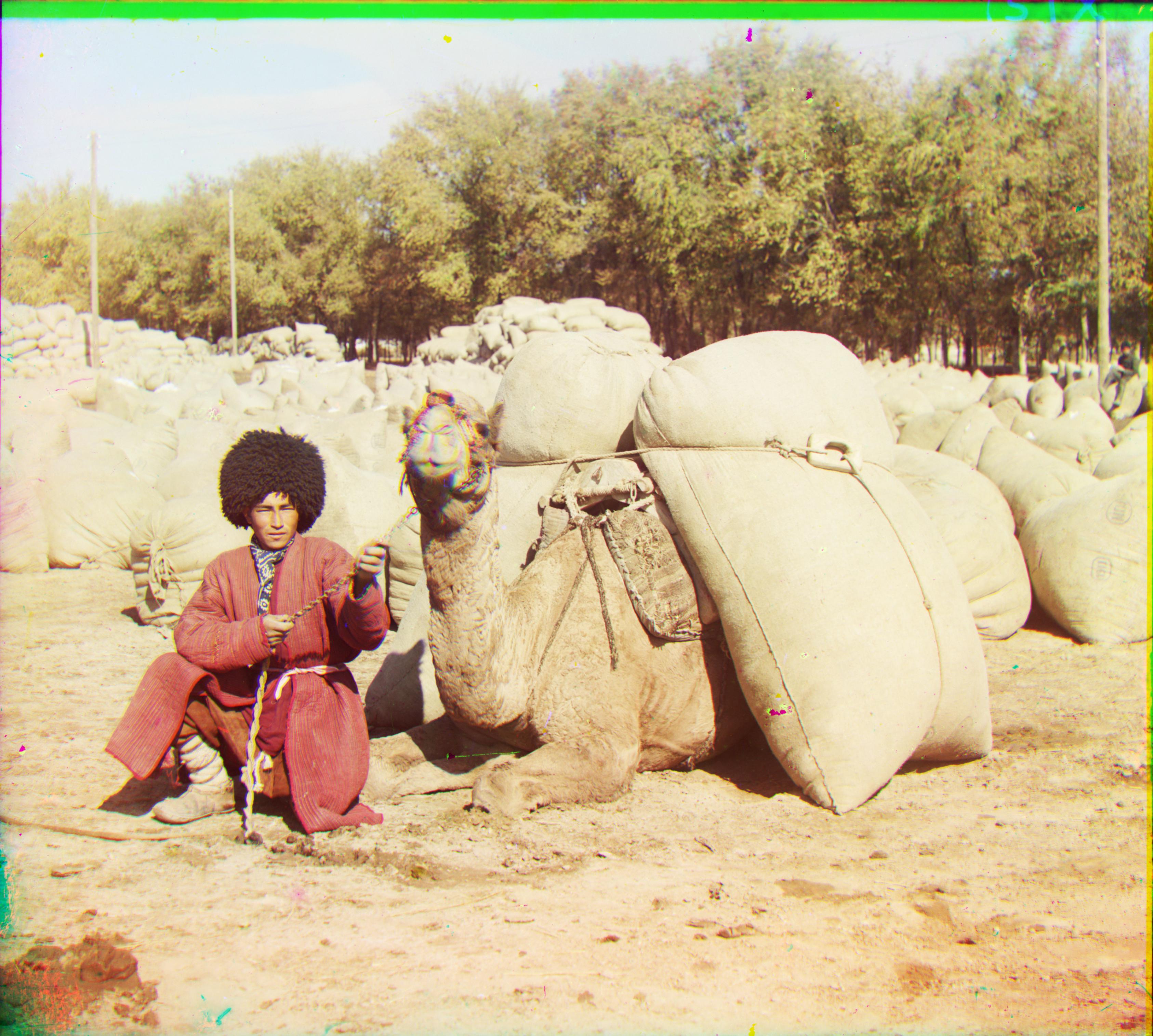

Offsets, red and green respectively.

[48, 23]

[-73, -70]

My algorithm failed to align this image properly. I believe this is because the raw pixel intensities differ greatly between the red, green, and blue channels in the particular area I was searching (the clothing), which led to an inaccurate estimate of the displacement.

Offsets, red and green respectively.

[59, 19]

[123, 19]

Offsets, red and green respectively.

[51, 6]

[111, 10]

Offsets, red and green respectively.

[-3, 2]

[3, 2]

Offsets, red and green respectively.

[3, 1]

[8, 0]

Offsets, red and green respectively.

[77, 29]

[175, 37]

Offsets, red and green respectively.

[7, 0]

[15, -1]

Offsets, red and green respectively.

[49, 15]

[108, 10]

Offsets, red and green respectively.

[42, 6]

[85, 32]

Offsets, red and green respectively.

[56, 22]

[117, 30]

Offsets, red and green respectively.

[64, 13]

[136, 23]

Chosen image. Offsets, red and green respectively.

[2, 1]

[-10, 2]

Chosen image. Offsets, red and green respectively.

[3, 0]

[7, -1]

Chosen image. Offsets, red and green respectively.

[6, 1]

[12, 1]

Cropped image.

Cropped image.

Cropped image.