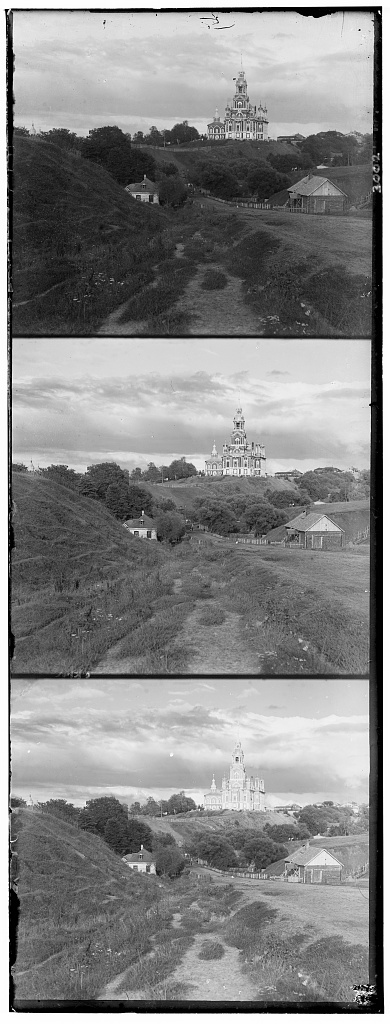

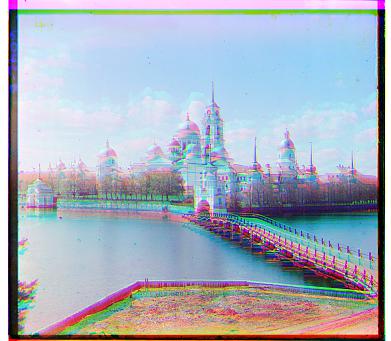

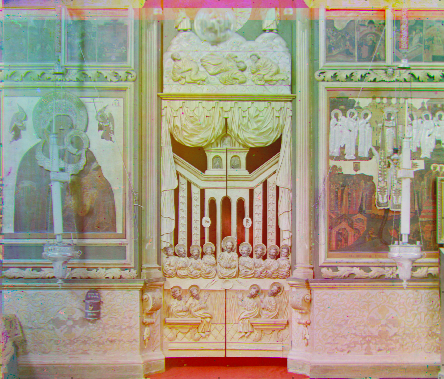

Three separated color channels (R,G,B)

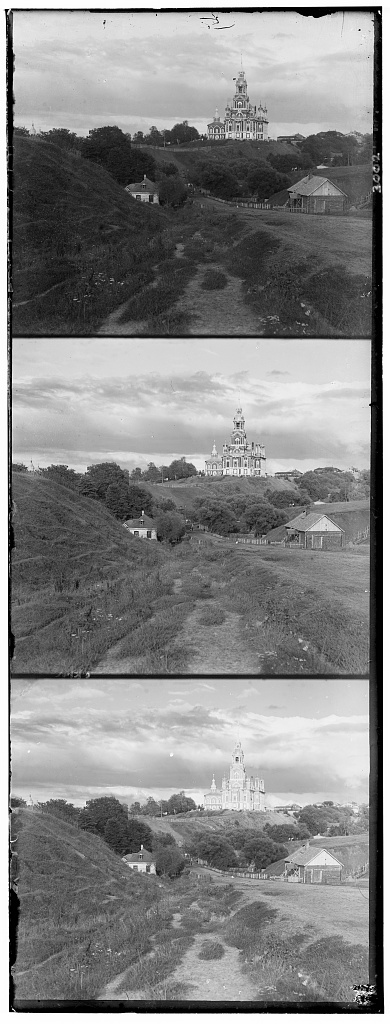

Image of combined color channels

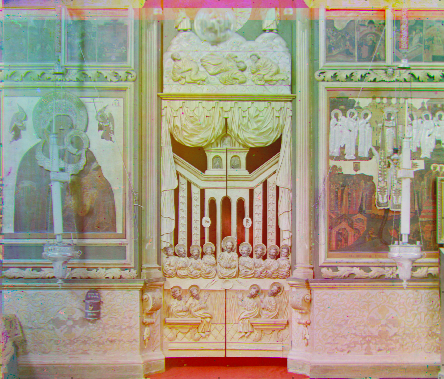


The final image is produced by extracting the three color channel images (above left image), placing them on top of each other, and aligning them so that they form a single RGB color image (above right image).
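A minimal sketch of the extract-and-stack step follows. It assumes that the scanned plate holds the three exposures stacked vertically in blue, green, red order from top to bottom, and that each exposure is exactly one third of the plate height. Both are assumptions not stated above. The helper names `split_plate` and `stack_rgb` are hypothetical.

```python
import numpy as np

def split_plate(plate):
    """Split a vertically stacked plate into three equal-height channels.

    Assumption: the exposures are ordered B, G, R from top to bottom.
    Any leftover rows (height not divisible by 3) are dropped.
    """
    h = plate.shape[0] // 3
    b = plate[:h]
    g = plate[h:2 * h]
    r = plate[2 * h:3 * h]
    return b, g, r

def stack_rgb(r, g, b):
    """Place the three 2-D channels on top of each other as an (H, W, 3) RGB image."""
    return np.dstack([r, g, b])
```

Stacking the unaligned channels this way produces the blurry, color-fringed "Original" images; alignment then shifts the green and red channels before stacking.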

1. Exhaustive search: Using low-resolution images of ~300 by 300 pixels, an exhaustive search was conducted over a window of possible displacements (initially [-15, 15] pixels).

2. Image matching metric: Each displaced red or green channel was scored against the original blue color channel image using the Sum of Squared Differences (SSD). The displacement with the lowest score was taken as the best alignment match.
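The two steps above can be sketched as follows. This is an illustration rather than the exact code used here: it assumes the channels are float arrays of equal size and uses `np.roll` (which wraps pixels around the border) to apply each candidate displacement. The names `ssd` and `align_exhaustive` are hypothetical.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences between two equally sized float arrays."""
    return np.sum((a - b) ** 2)

def align_exhaustive(channel, reference, window=15):
    """Try every (row_shift, col_shift) in [-window, window]^2 and return the
    one whose shifted channel has the lowest SSD against the reference."""
    best_score = np.inf
    best_shift = (0, 0)
    for dr in range(-window, window + 1):
        for dc in range(-window, window + 1):
            shifted = np.roll(channel, (dr, dc), axis=(0, 1))
            score = ssd(shifted, reference)
            if score < best_score:
                best_score = score
                best_shift = (dr, dc)
    return best_shift
```

Convert `uint8` images to float first (for example with `skimage.img_as_float`); otherwise the subtraction inside `ssd` wraps around instead of going negative. Calling `align_exhaustive(g, b)` and `align_exhaustive(r, b)` gives shifts in the `(row_shift, col_shift)` format used below.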

Results of image alignment shift are displayed in the following format:

image_name.jpg

g: (row_shift, col_shift), r: (row_shift, col_shift)

Original

Aligned

cathedral.jpg

g: (1, -1), r: (7, -1)

Original

Aligned

settlers.jpg

g: (7, 0), r: (14, -1)

Original

Aligned

nativity.jpg

g: (3, 1), r: (7, 1)

Original

Aligned

empire.jpg

g: (2, 0), r: (7, 1)

The following images only aligned correctly after cropping 10% off each margin, because the bleeding at the plate borders distorted the image matching metric.
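A minimal sketch of the 10% margin crop is shown below, assuming each channel is a 2-D array and that the same fraction is removed from all four sides. The function name `crop_margins` is hypothetical.

```python
import numpy as np

def crop_margins(channel, frac=0.10):
    """Remove `frac` of the height from the top and bottom and `frac` of the
    width from the left and right of a 2-D channel."""
    h, w = channel.shape
    dh, dw = int(h * frac), int(w * frac)
    return channel[dh:h - dh, dw:w - dw]
```

Cropping all three channels before the search keeps the noisy border strips from dominating the SSD score, since those strips differ strongly between channels regardless of how well the image content lines up.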

Original (before cropping)

"Aligned" (before cropping)

Original (after cropping)

Aligned (after cropping)


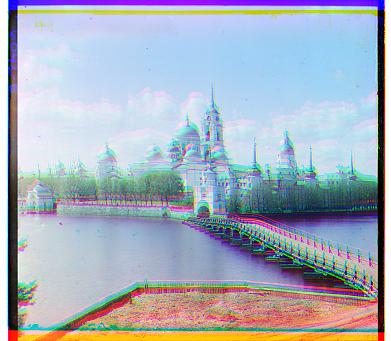

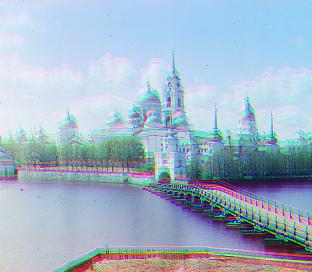

monastery.jpg

g: (-3, 2), r: (3, 2)

Original (after cropping)

Aligned (after cropping)

belozersk.jpg

g: (4, 2), r: (10, 3)

Exhaustive search will become prohibitively expensive if the pixel displacement is too large, which is the case for the high-resolution glass plate scans (~3000x3000 pixels). As such, we need to implement a faster search procedure such as an image pyramid. An image pyramid represents the image at multiple scales and the processing is done sequentially starting from the coarsest scale (smallest image) and going down the pyramid, updating the estimate as it goes.

1. Downscale image: I scaled down the huge .tif images by a factor, f, using skimage.transform.downscale_local_mean(). I started with f = 20 so that the 3000px images would scale down to around 150-200px.

2. Modified exhaustive search: With the scaled-down images, I searched over possible displacements within a radius, rad.

3. Image alignment metric: As in the naive implementation, each displacement was scored against the original blue color channel image using SSD. The displaced red/green channel with the lowest score was taken as the best alignment match.

4. Updating actual image: The best displacement found at this scale (within radius rad) was multiplied by f. This gives the corresponding row_shift and col_shift on the original 3000px image, and the full-resolution channel was then shifted by that amount.

5. Updating factors: Next, f and rad are divided by their respective factors: factor_f = 2 and factor_rad = 2. We halve f because we want to search over an image with higher pixel density, so we downscale the image by a smaller amount. At the same time, with a larger image we cannot afford an exhaustive search over such a large radius, so we also halve rad.

6. Recurse: The above steps are repeated until f == 1, which is when we are manipulating the full 3000px image.

I started with f = 20 (downscaling images by a factor of 20). I computed the initial rad from f and the image height so that the search radius scales with the image size without exceeding it.
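The coarse-to-fine procedure above can be sketched as follows. Several details are assumptions, not taken from the writeup: f and rad are halved with integer division (so f goes 20, 10, 5, 2, 1), rad never drops below 1, and when no rad is given a hypothetical heuristic `height // (10 * f)` is used in place of the formula mentioned above, which is not specified. The names `ssd`, `search` and `align_pyramid` are hypothetical. `skimage.transform.downscale_local_mean` is the function named in step 1.

```python
import numpy as np
from skimage.transform import downscale_local_mean

def ssd(a, b):
    """Sum of squared differences between two equally sized arrays."""
    return np.sum((a - b) ** 2)

def search(channel, reference, rad):
    """Exhaustive search for the shift in [-rad, rad]^2 with the lowest SSD."""
    best, best_shift = np.inf, (0, 0)
    for dr in range(-rad, rad + 1):
        for dc in range(-rad, rad + 1):
            score = ssd(np.roll(channel, (dr, dc), axis=(0, 1)), reference)
            if score < best:
                best, best_shift = score, (dr, dc)
    return best_shift

def align_pyramid(channel, reference, f=20, rad=None):
    """Coarse-to-fine alignment of `channel` onto `reference`.

    Returns the total (row_shift, col_shift) at full resolution.
    """
    if rad is None:
        # Hypothetical heuristic: radius proportional to the downscaled height.
        rad = max(1, channel.shape[0] // (10 * f))
    current = channel.astype(float)
    reference = reference.astype(float)
    total = np.array([0, 0])
    while True:
        # Steps 1-3: downscale both channels by f and search within rad.
        small_ch = downscale_local_mean(current, (f, f))
        small_ref = downscale_local_mean(reference, (f, f))
        dr, dc = search(small_ch, small_ref, rad)
        # Step 4: scale the coarse shift by f and apply it at full resolution.
        shift = (dr * f, dc * f)
        current = np.roll(current, shift, axis=(0, 1))
        total += shift
        # Step 6: stop once the full-resolution image has been searched.
        if f == 1:
            break
        # Step 5: halve the downscale factor and the search radius.
        f = max(1, f // 2)
        rad = max(1, rad // 2)
    return tuple(int(x) for x in total)
```

Because every level only has to correct the error left by the previous, coarser level, the radius can shrink as the images grow, which keeps the full-resolution search small.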

Each image took around 20-30 seconds to run. Overall, the algorithm worked well for most of the images.

Original

Aligned

lady.tif

g: (57, -6), r: (123, -17)

Original

Aligned

three_generations.tif

g: (51, 5), r: (108, 7)

Original

Aligned

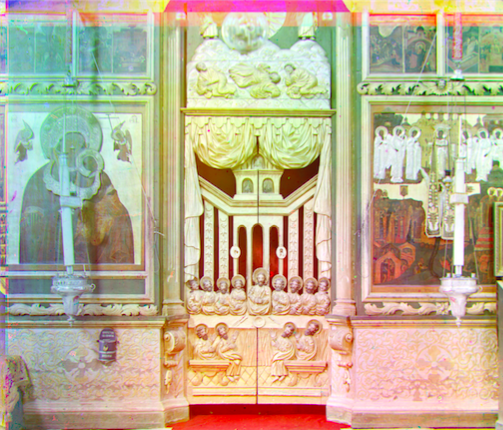

icon.tif

g: (42, 16), r: (89, 22)

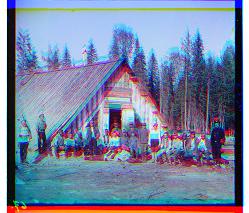

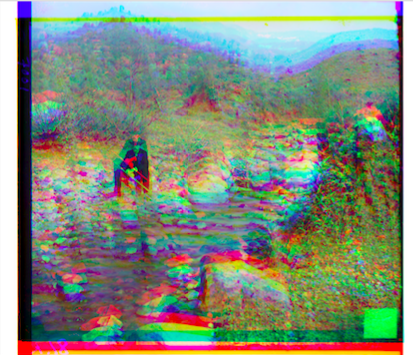

The following images only worked after cropping 10% margins off the original color channel images and then applying the multi-scale pyramid algorithm.

Original

Aligned


self_portrait.tif

g: (78, 29), r: (176, 37)

Original

Aligned

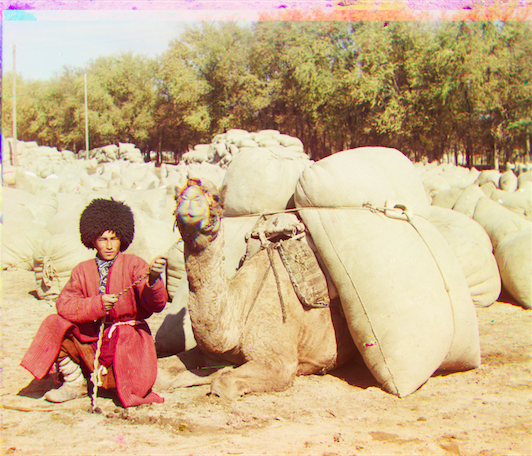

turkmen.tif

g: (56, 21), r: (116, 28)

Original

"Aligned"


village.tif

g: (64, 12), r: (137, 22)

Original

"Aligned"


train.tif

g: (42, 5), r: (86, 32)

Original

"Aligned"

harvesters.tif

g: (59, 16), r: (123, 13)

Original

"Aligned"

shore.tif

g: (25, -16), r: (99, -32)

Original

"Aligned"


door.tif

g: (74, 32), r: (159, 50)

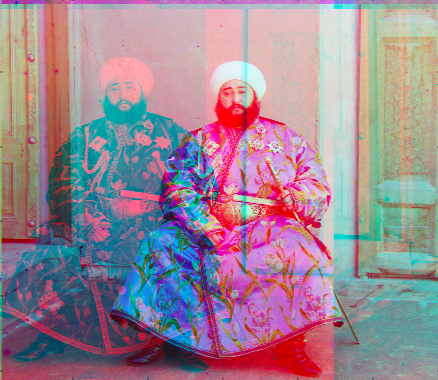

Emir.tif did not align even with the 10% image crop. This is because the channels being matched do not have the same brightness values (they record different colors), so comparing raw intensities with SSD is unreliable for this image.

Original

"Aligned"

Up to 2 pts: Better features. Instead of aligning based on RGB similarity, try using gradients or edges.

Before applying the multi-scale pyramid algorithm, we use the Sobel operator to pre-process each color channel of the original image.

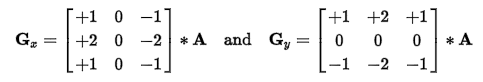

1. Sobel operator: Two 3x3 kernels were created, as shown in the figure.

G_x and G_y are the images obtained when A (the source image) is convolved (denoted by the * operation) with the respective kernel. Edges have high gradients because pixel brightness changes sharply across them, while non-edges have low gradients. The two kernels therefore approximate the horizontal and vertical derivatives.

2. Multi-scale pyramid: These processed color channel images are then passed through the multi-scale pyramid algorithm, and the image matching metric is computed on the processed channels.

3. Aligning the image: Using the best row_shift and col_shift for the green and red color channels, we apply np.roll() to the original channels (not the Sobel-processed ones) and display the result.
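A minimal sketch of edge-based alignment follows. It uses the standard Sobel kernels and `scipy.ndimage.convolve`. Combining G_x and G_y into a gradient magnitude with `np.hypot` is an assumption, since the writeup does not say how the two are combined. For brevity it searches with a single-scale exhaustive search (`best_shift`, hypothetical) instead of the pyramid. The key point is the same as in step 3: the shift is found on edge maps but applied to the original channel.

```python
import numpy as np
from scipy.ndimage import convolve

# Standard Sobel kernels: KX approximates d/dx (horizontal), KY approximates d/dy.
KX = np.array([[-1, 0, 1],
               [-2, 0, 2],
               [-1, 0, 1]], dtype=float)
KY = KX.T

def sobel_edges(channel):
    """Gradient magnitude of a 2-D channel (assumed combination of G_x and G_y)."""
    a = channel.astype(float)
    gx = convolve(a, KX, mode="reflect")
    gy = convolve(a, KY, mode="reflect")
    return np.hypot(gx, gy)

def best_shift(channel, reference, window=15):
    """Exhaustive SSD search over shifts in [-window, window]^2."""
    best, shift = np.inf, (0, 0)
    for dr in range(-window, window + 1):
        for dc in range(-window, window + 1):
            diff = np.roll(channel, (dr, dc), axis=(0, 1)) - reference
            score = np.sum(diff ** 2)
            if score < best:
                best, shift = score, (dr, dc)
    return shift

def align_on_edges(channel, reference, window=15):
    """Find the shift on Sobel edge maps, then roll the ORIGINAL channel."""
    shift = best_shift(sobel_edges(channel), sobel_edges(reference), window)
    return shift, np.roll(channel, shift, axis=(0, 1))
```

Because edge strength depends on where brightness changes rather than on the absolute brightness, two channels with very different intensities (as in emir.tif) can still produce matching edge maps.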

Emir.tif finally aligns after employing edge detection using Sobel operator!

Original

Aligned


emir.tif

g: (49, 24), r: (105, 41)

Up to 2pts: It is usually safe to rescale image intensities such that the darkest pixel is zero (on its darkest color channel) and the brightest pixel is 1 (on its brightest color channel). More drastic or non-linear mappings may improve perceived image quality.

Automatic contrasting was applied after the images were generated by the multi-scale pyramid or naive algorithm.

1. Find the median of the image's original pixel intensities.

2. Multiply the image matrix by a factor (1.5 here).

3. Shift all matrix elements so that the new median equals the original median.

4. The values which are less than -1 or greater than 1 get clipped to -1 and 1 respectively

This approach increases the spread of pixel intensities around the median, so the image appears more contrasted.
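The four steps can be sketched as follows. The clipping bounds default to [-1, 1] as described above and are exposed as parameters (`lo`, `hi`). If the images are floats in [0, 1], clipping to [0, 1] may be the more natural choice, but that is an assumption. The function name `auto_contrast` is hypothetical.

```python
import numpy as np

def auto_contrast(img, factor=1.5, lo=-1.0, hi=1.0):
    """Stretch intensities around the median, then clip to [lo, hi]."""
    img = np.asarray(img, dtype=float)
    med = np.median(img)               # step 1: original median
    scaled = img * factor              # step 2: multiply by the factor
    scaled += med - np.median(scaled)  # step 3: move the median back to `med`
    return np.clip(scaled, lo, hi)     # step 4: clip out-of-range values
```

Since scaling and shifting are linear and clipping preserves ordering, pixels keep their relative order; only the distance between them grows, which is what makes the result look more contrasted.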

Automatic contrasting applied

Automatic contrasting applied