Project description

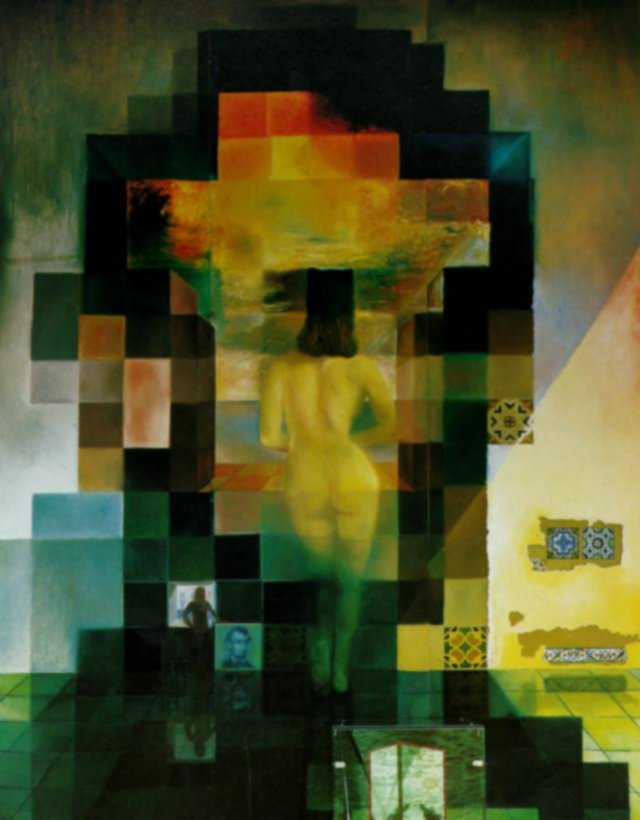

This project transforms images with a variety of convolution filters and blends images together using several algorithms.

1.1 Sharpening Image

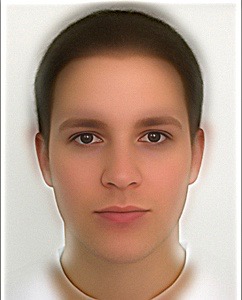

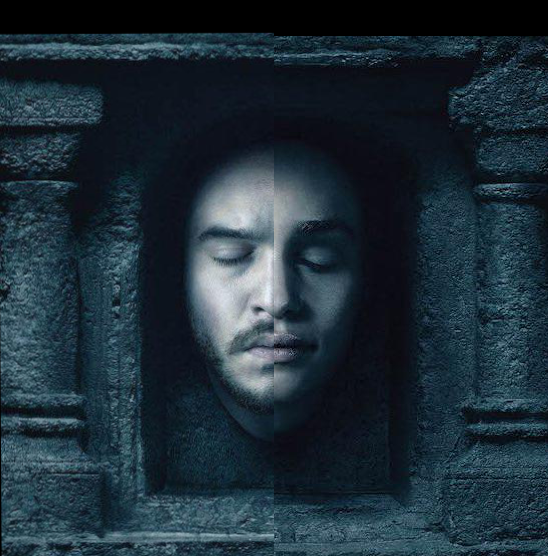

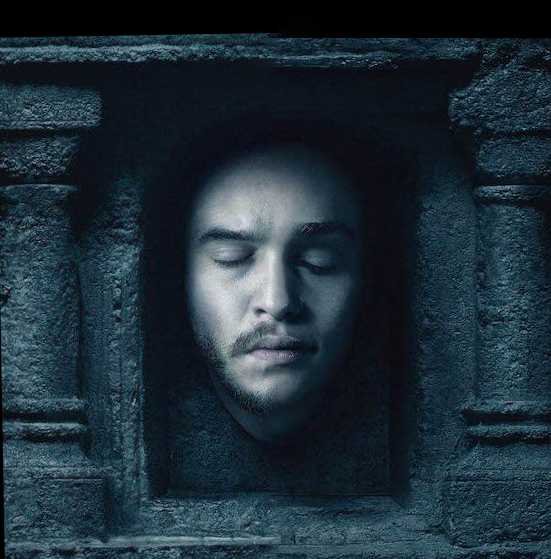

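Sharpening can be done by adding the difference between the original image and its Gaussian-blurred version back to the original image. The blur removes high frequencies, so this difference holds the image's fine detail, and adding it back emphasizes edges. Here is an example of applying sharpening to Drogon and Ned.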

As we can see, both images look sharper after the filter (lower images) than before (upper images).
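The sharpening step described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the code used to produce the images: the function names, the `sigma` and `alpha` parameters, the reflect padding, and the assumption of a single-channel float image with values in [0, 1] are all choices made for this example.

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """Return a normalized 1D Gaussian kernel covering about 3 sigma per side."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_blur(img, sigma):
    """Blur a 2D image with a separable Gaussian (rows first, then columns)."""
    kernel = gaussian_kernel_1d(sigma)
    radius = len(kernel) // 2
    # Reflect-pad so the output keeps the input's shape (assumed border handling).
    padded = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded
    )
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows
    )


def sharpen(img, sigma=2.0, alpha=1.0):
    """Add the (original - blurred) detail back to the original image.

    alpha scales how much detail is added; alpha=1.0 matches the plain
    "add the difference back" description. Values are clipped to [0, 1].
    """
    img = img.astype(np.float64)
    detail = img - gaussian_blur(img, sigma)
    return np.clip(img + alpha * detail, 0.0, 1.0)


if __name__ == "__main__":
    # Synthetic step edge: dark left half, bright right half.
    image = np.full((32, 32), 0.25)
    image[:, 16:] = 0.75
    result = sharpen(image)
    print("edge contrast before:", image[0, 16] - image[0, 15])
    print("edge contrast after: ", result[0, 16] - result[0, 15])
```

For a color image, one option is to apply `sharpen` to each channel separately. A larger `sigma` boosts coarser detail, and a larger `alpha` makes the effect stronger at the cost of visible halos around edges.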