In this project we played with frequency filtering, Gaussian and Laplacian stacks, and Poisson blending to do some cool image editing, blending, and hybridization.

I sharpened an image of a rose in black and white and in color. To sharpen the colored image, I treated each channel as a grayscale image, sharpened it, and then stacked the channels back together.
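The per-channel approach can be sketched as follows. This is a minimal illustration that assumes unsharp masking (adding back the difference between the image and a Gaussian-blurred copy), which the write-up does not confirm as the sharpening method actually used. The names `gaussian_blur` and `sharpen` and the parameters `sigma` and `alpha` are hypothetical, and images are assumed to be float arrays in [0, 1].

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D float array, with edge padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    # Filter along rows, then columns; "valid" trims the padding back off.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def sharpen(img, sigma=2.0, alpha=1.0):
    """Unsharp mask (assumed method): img + alpha * (img - blurred img)."""
    if img.ndim == 3:
        # Color: treat each channel as a grayscale image, then restack.
        channels = [sharpen(img[..., c], sigma, alpha) for c in range(img.shape[2])]
        return np.stack(channels, axis=-1)
    detail = img - gaussian_blur(img, sigma)
    return np.clip(img + alpha * detail, 0.0, 1.0)
```

A larger `alpha` exaggerates edges more strongly, and the final clip keeps the result in the displayable range.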

| Original Color Image | Sharpened Color Version |

| Original Black and White Image | Sharpened Black and White Image |

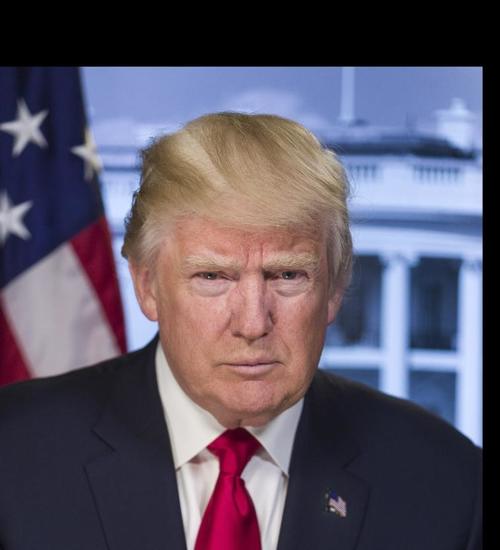

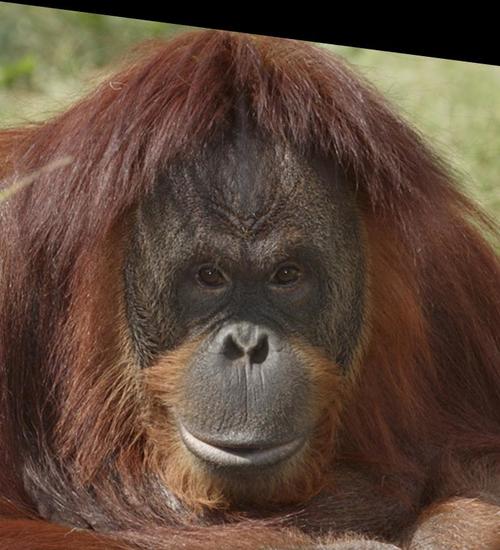

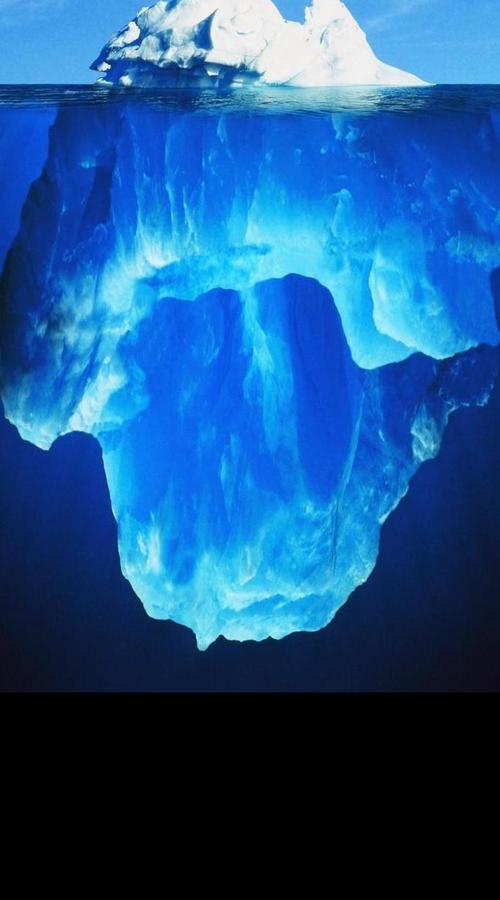

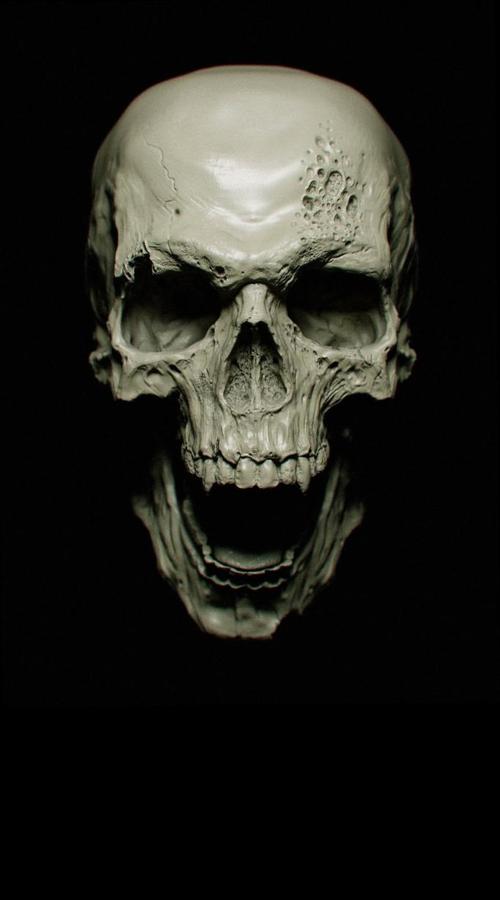

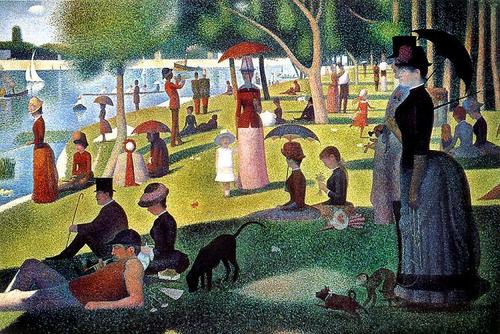

I hybridized three pairs of images by keeping the low frequencies of the first, keeping the high frequencies of the second, and then adding the two together: Donald Trump and an orangutan, the White House and a cruise ship, and an iceberg and a skull.

For each hybrid, I played with a sigma parameter that controlled which frequencies were filtered by the Gaussian and Laplacian kernels, and I tried my best to pick values that created good hybrids. The hybrids aren't perfect; for some you really have to stand far away before the high-frequency portion drops out.
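The hybrid construction can be sketched like this. It is a minimal illustration rather than the code behind the results here: the high-pass step is approximated as the image minus its Gaussian blur, and the function names and the use of two separate sigmas (`sigma_low`, `sigma_high`) are assumptions. Inputs are assumed to be aligned grayscale float arrays in [0, 1] of the same shape.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D float array, with edge padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def hybrid_image(low_src, high_src, sigma_low, sigma_high):
    """Low frequencies of low_src plus high frequencies of high_src."""
    low = gaussian_blur(low_src, sigma_low)
    # Subtracting the blur leaves only detail finer than roughly sigma_high.
    high = high_src - gaussian_blur(high_src, sigma_high)
    return np.clip(low + high, 0.0, 1.0)
```

Raising `sigma_low` removes more of the first image's detail, while lowering `sigma_high` keeps only the finest detail of the second, so the two values trade off which image dominates at a given viewing distance.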

I would consider the White House and cruise ship hybrid to be the failure here because it's pretty hard to recognize the high-frequency image of the ship. This is probably because the two source images weren't very similar in the first place.

| Low Frequency Source: Trump | High Frequency Source: Orangutan | Hybrid Image |

| Low Frequency Source: White House | High Frequency Source: Cruise Ship | Hybrid Image |

| Low Frequency Source: Iceberg | High Frequency Source: Skull | Hybrid Image |

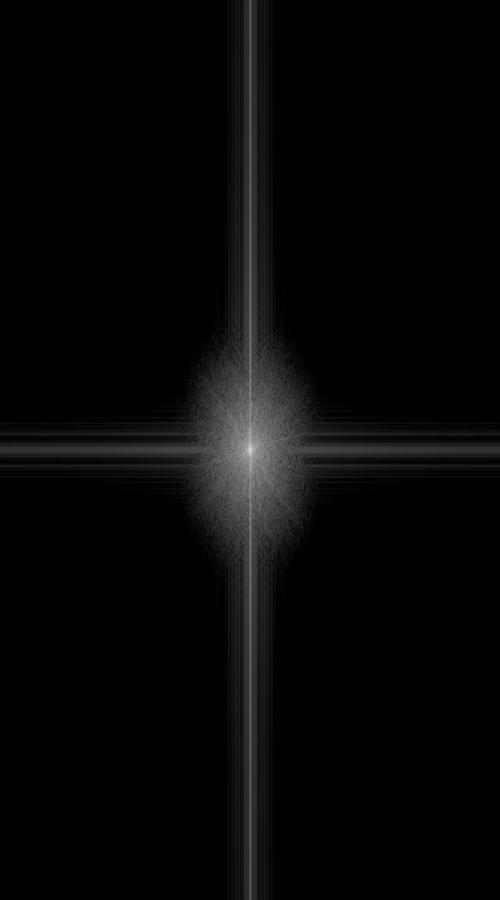

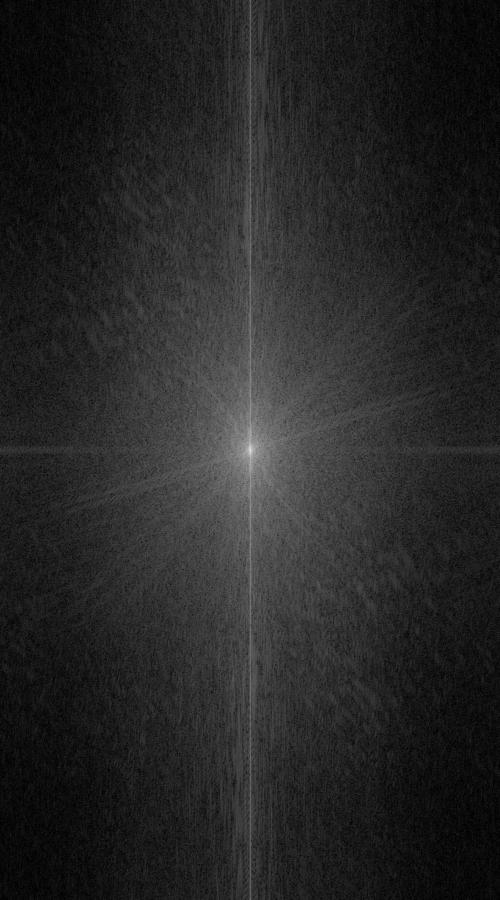

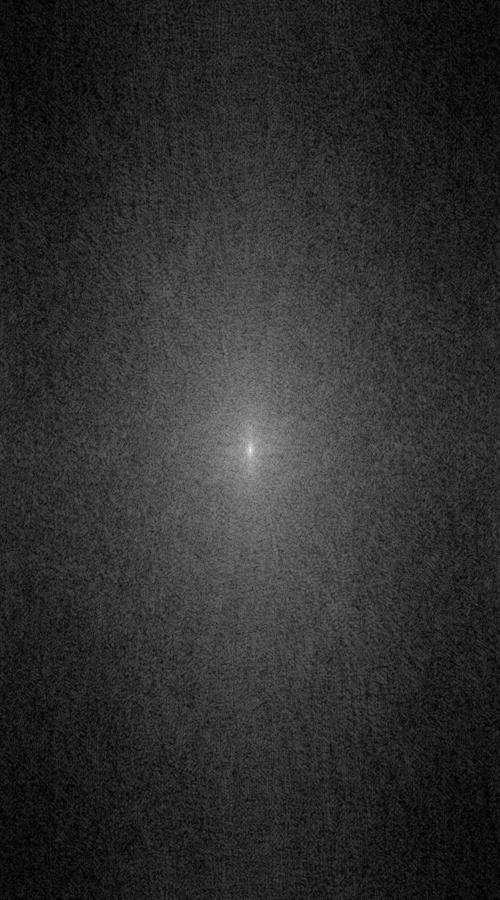

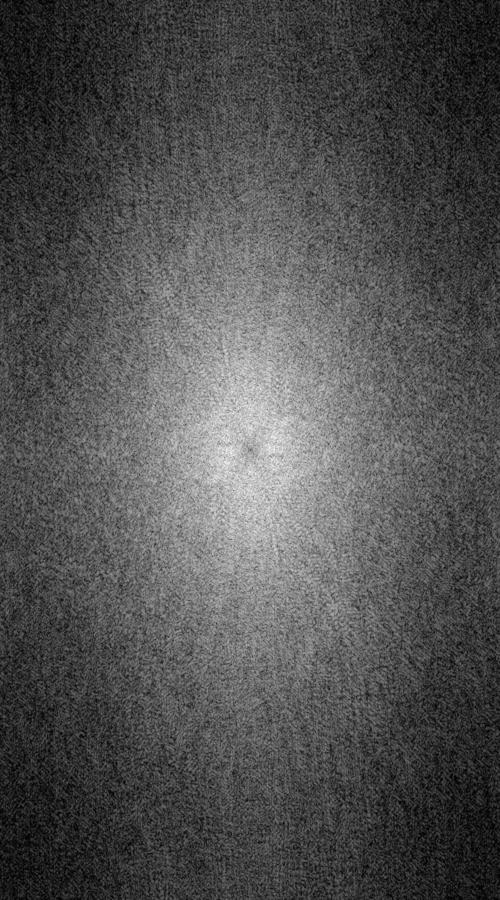

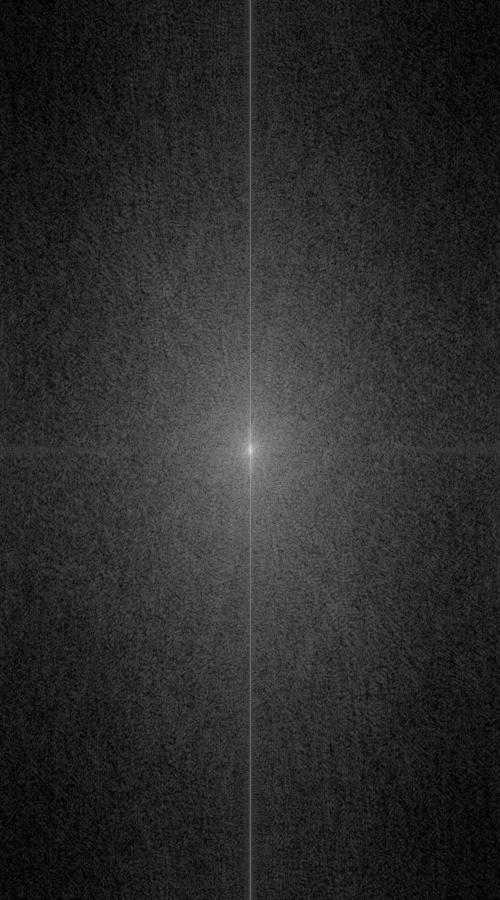

My favorite result was the iceberg and skull hybrid, so I plotted the log magnitude of the Fourier transforms of the hybrid and its components. You can clearly see the removal of high frequencies in the low-pass filtered image, but it's hard to see the low frequencies being removed in the high-pass filtered image. This may be because of image normalization.
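For reference, a log-magnitude spectrum like the ones shown here can be computed along these lines. This is a sketch assuming a 2-D grayscale float array; the function name and the small `eps` offset (used to avoid taking the log of zero) are illustrative choices.

```python
import numpy as np

def log_magnitude_spectrum(img, eps=1e-8):
    """Log of the FFT magnitude, shifted so the zero frequency is centered."""
    spectrum = np.fft.fftshift(np.fft.fft2(img))
    return np.log(np.abs(spectrum) + eps)
```

If each plot is rescaled to its own minimum and maximum before display, a strong remaining peak can set the color scale and hide attenuation elsewhere, which would be one way normalization could mask the missing low frequencies.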

| Iceberg Frequency | Iceberg Low Filtered Frequency |

| Skull Frequency | Skull High Filtered Frequency |

| Hybrid Frequency |

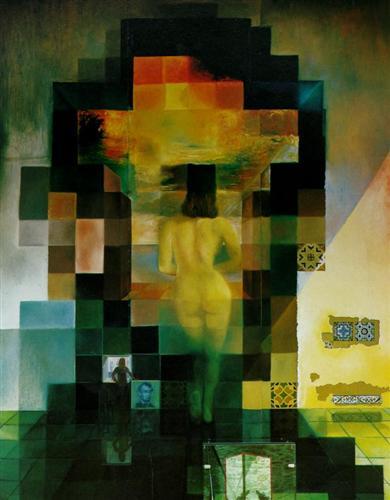

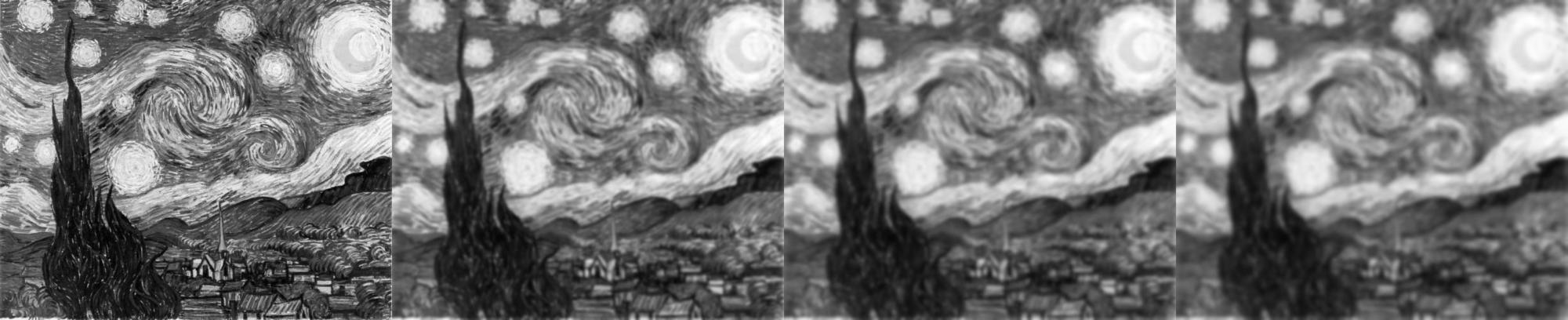

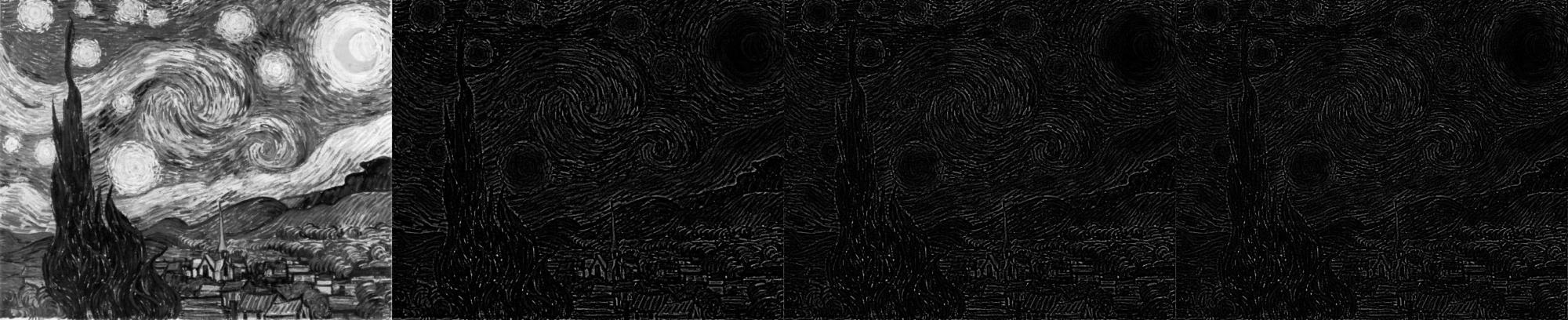

I took the Lincoln painting from class and three other famous paintings and displayed Gaussian and Laplacian stacks for each. I tried to pick parameters so that the last image in each composition was significantly different from the first, and so that each level of the stack showed a noticeable difference from its neighboring levels.

My stacks don't look as nice as the ones in the papers. I'm not sure whether that is because of the clipping and normalization needed to write the images to disk, or because of an error in my code or algorithms. The first image in each composition is the original.
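One common way to build such stacks is sketched below. It assumes each Gaussian level blurs the previous one with a fixed `sigma` and no downsampling, and that each Laplacian level is the difference of adjacent Gaussian levels; the function names, including the `to_display` helper, are hypothetical and not taken from the project code.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D float array, with edge padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def gaussian_stack(img, levels, sigma=2.0):
    """Level 0 is the original; each later level blurs the previous one."""
    stack = [img]
    for _ in range(levels):
        stack.append(gaussian_blur(stack[-1], sigma))
    return stack

def laplacian_stack(img, levels, sigma=2.0):
    """Band-pass differences plus the final low-pass residual."""
    g = gaussian_stack(img, levels, sigma)
    bands = [g[i] - g[i + 1] for i in range(levels)]
    return bands + [g[-1]]  # with the residual, the levels sum back to img

def to_display(band):
    """Rescale a signed band to [0, 1] so negative values are not clipped."""
    lo, hi = band.min(), band.max()
    return np.zeros_like(band) if hi == lo else (band - lo) / (hi - lo)
```

Laplacian levels contain negative values, so writing them to disk without a rescale like `to_display` clips roughly half of the detail to black.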

Lincoln in Dalivision by Salvador Dalí

Gaussian Pyramid

Laplacian Pyramid

Mona Lisa by Leonardo da Vinci

Gaussian Pyramid

Laplacian Pyramid

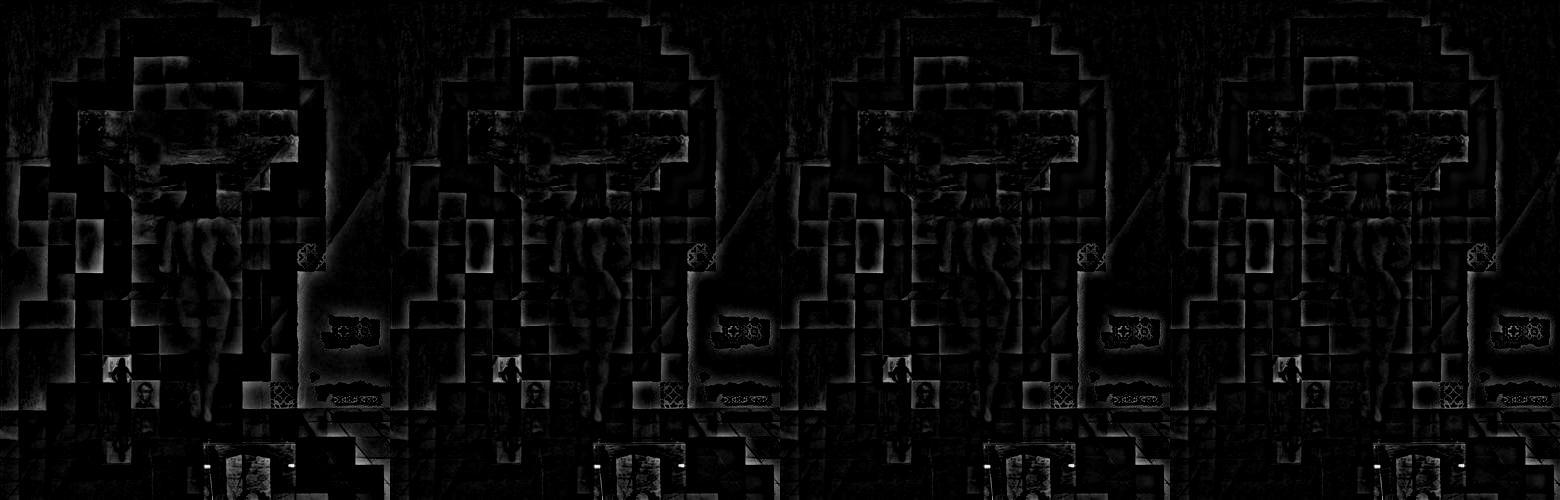

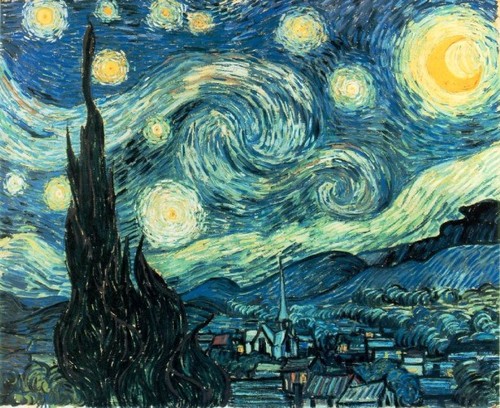

The Starry Night by Vincent van Gogh

Gaussian Pyramid

Laplacian Pyramid

A Sunday Afternoon on the Island of La Grande Jatte by Georges Seurat

Gaussian Pyramid

Laplacian Pyramid

I took my favorite result from the hybrid images and created its Gaussian and Laplacian stacks. Again, it doesn't look as nice as in the papers.

Iceberg Skull from Part 1.2

Gaussian Pyramid

Laplacian Pyramid

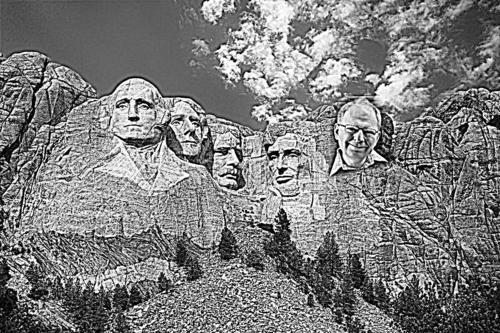

I made a vertical seam blend to produce the orapple. I then blended three other pairs of images: an ocean floor and New York City with a horizontal seam, New York City and Hong Kong with a vertical seam, and Mt. Rushmore and Professor Efros with a custom irregular seam/mask generated using MATLAB code provided for Part 2.

My blends seem to emphasize high frequencies because of the way I added successive levels of the Laplacian stacks, which may or may not be an error.

The ocean and New York City blend is my favorite, but it could also use some work since a couple of skyscrapers were actually in the half of the image that the ocean replaced.

York Kong is a clear failure because the original images had very large differences in their average brightness (daytime versus nighttime).

Efroshmore is better, but it also has issues because the source image for the head does not have the same texture as the mountain.
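For comparison, a textbook-style multiresolution blend is sketched below: each Laplacian level of the two images is mixed using the matching Gaussian level of the mask, and the mixed levels are summed. This is not necessarily how the blends here were assembled, and all names and the default `levels` and `sigma` are assumptions. Images are grayscale floats in [0, 1], and `mask` is 1 where the first image should show.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D float array, with edge padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def gaussian_stack(img, levels, sigma):
    stack = [img]
    for _ in range(levels):
        stack.append(gaussian_blur(stack[-1], sigma))
    return stack

def laplacian_stack(img, levels, sigma):
    g = gaussian_stack(img, levels, sigma)
    return [g[i] - g[i + 1] for i in range(levels)] + [g[-1]]

def multires_blend(a, b, mask, levels=4, sigma=2.0):
    """Blend a and b; coarser bands are mixed with a softer mask."""
    la = laplacian_stack(a, levels, sigma)
    lb = laplacian_stack(b, levels, sigma)
    gm = gaussian_stack(mask.astype(float), levels, sigma)
    # Each level is added exactly once, so blending an image with itself
    # returns that image unchanged.
    out = sum(gm[i] * la[i] + (1.0 - gm[i]) * lb[i] for i in range(levels + 1))
    return np.clip(out, 0.0, 1.0)
```

A useful sanity check is `multires_blend(a, a, mask)`: if the result differs from `a` (for example, it looks sharper), some level is being counted more than once or with the wrong weight.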

| Apple | Orange | Orapple |

| Ocean Floor | New York City | New York City Underwater |

| New York City | Hong Kong | York Kong |

| Mt. Rushmore | Alexei Efros | Efroshmore |

I took my favorite result and showed the stacks for the masked input images and the resulting image.

| Masked Ocean Floor Laplacian Stack |

| Masked New York City Laplacian Stack |

| Result Image Laplacian Stack |

For this part of the project, we implemented Poisson blending. The premise behind Poisson blending is that humans care more about the way an image changes than about the values of the pixels themselves. In other words, the pixel gradient is much more important to the human eye than the absolute pixel values. Therefore, if we want to transplant an object into another image, we can get good results by transplanting its gradient and then calculating the actual pixel values so that they match that gradient while agreeing with the target image at the border.

We can do the last part by modeling the gradient constraints, together with the constraint that forces the new object to blend into the surrounding pixels of the target image, as a system of linear equations. The system is very sparse, so solving it is not too computationally expensive.
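The linear system can be sketched as follows for a small grayscale example. For brevity this builds a dense matrix and solves it with `np.linalg.lstsq`; a real implementation would use a sparse matrix and a sparse least-squares solver instead. The function name and argument layout are hypothetical, and the mask is assumed not to touch the image border.

```python
import numpy as np

def poisson_blend(source, target, mask):
    """Solve for masked pixels whose gradients match source and whose
    neighbors outside the mask are fixed to the target's values."""
    ys, xs = np.nonzero(mask)
    index = {(int(y), int(x)): k for k, (y, x) in enumerate(zip(ys, xs))}
    rows, rhs = [], []
    for (y, x), k in index.items():
        for dy, dx in ((0, 1), (0, -1), (1, 0), (-1, 0)):
            ny, nx = y + dy, x + dx
            row = np.zeros(len(index))
            row[k] = 1.0
            grad = source[y, x] - source[ny, nx]
            if (ny, nx) in index:
                # Both pixels unknown: v_i - v_j = s_i - s_j
                row[index[(ny, nx)]] = -1.0
                rhs.append(grad)
            else:
                # Neighbor is a known target pixel: v_i = t_j + (s_i - s_j)
                rhs.append(grad + target[ny, nx])
            rows.append(row)
    v, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    out = target.astype(float).copy()
    out[ys, xs] = v
    return out
```

Because only differences of source pixels enter the equations, adding a constant brightness offset to the source does not change the result, which is the sense in which the gradient matters more than the pixel values.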

Here is the recovered image:

My favorite image blend was that of a whale in the sky of New York City. I included the source and target images, as well as a side-by-side comparison of what the composition looks like without and with Poisson blending.

The blending works by computing the pixel gradients of the object cutout (the whale) and then reconstructing the pixels that would have to go in the target image (New York City) in order to achieve a seamless blend while still matching the source gradient. This way, the new image looks like it has a whale in it, but not like the whale was pasted directly into the image. The pixel reconstruction is computed by solving a system of linear equations generated by the gradient and seam constraints.

| Whale Source | New York City Target |

| Whale Over New York City (Paste) | Whale Over New York City (Blended) |

Besides the whale over New York City, I blended a few other images: using some of the sample images, I put a penguin at Mt. Rainier; I put a meteor over San Francisco; and I put a jetski next to the Great Pyramids of Giza.

The jetski at Giza image did not blend so well. The splashing water from the jetski source image contrasts too strongly with the sand around the pyramids, which leads to a noticeable difference in brightness that makes the blend less believable. The underlying difficulty is that the splash also contrasts strongly with the surrounding water in the jetski image, which makes sense since water turns white when it splashes. Sand, however, does not turn that white when it is kicked up, so the preserved contrast looks wrong.

| Penguin at Rainier | Meteor Over San Francisco | Jetskiing at Giza |

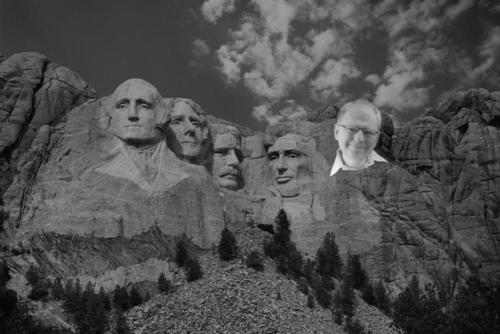

Here we retry the blend of Alexei Efros and Mt. Rushmore, which used Laplacian stacks in Part 1, this time with Poisson blending. The multiresolution blend seems to be the better approach here, because the professor's head stands out from the mountain much more in the Poisson-blended version.

My implementation of the multiresolution blend sharpened the rest of the image, which made the professor's image less noticeable, while Poisson blending did not. Additionally, the professor's head is much brighter than its background in the source image, so the gradient pushes the brightness of the head to be much higher in the target image under Poisson blending. For Mt. Rushmore, however, we want the heads to have intensities similar to the surrounding rock. This is why multiresolution blending worked better: the original intensity of the source image was a better match for the target image than new intensities that attempt to follow the source gradient.

If an object contrasts with its background differently in its source image than it should in the target image, then Poisson blending will often work worse than Laplacian stack (multiresolution) blending, since it will try to reproduce the source contrast in the new blended image, which is not what's desired.

If, however, the source object has a background similar to what its new background would be in the target image, then Poisson blending will fare better because it will be able to recreate the gradient and contrast while also leaving less of a seam.

| Efroshmore (Multiresolution Blended) | Efroshmore (Poisson Blended) |

The most cumbersome part of image processing is procuring the images themselves and preprocessing them to work with the image processing pipeline. Only about half of my time went to actually writing the image processing code; the other half was spent looking for images, modifying their sizes, generating masks and cutouts, and writing the report and webpage.