Below is the original image of a Rubik's cube, along with a sharpened version produced with a high-pass filter. The high-pass filter was obtained by subtracting a Gaussian-filtered (low-pass) version of the image from the original, which leaves only the fine detail and edges; emphasizing this detail sharpens the image.
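A minimal sketch of this idea (often called unsharp masking), assuming a grayscale image stored as a 2D NumPy float array with values in [0, 1]. The helpers `gaussian_blur` and `sharpen`, the 3-sigma kernel truncation, and the `alpha` strength parameter are illustrative assumptions, not our actual code.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2D float image, reflecting at the borders."""
    radius = max(1, int(3 * sigma))          # truncate the kernel at about 3 sigma
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()                   # normalize so overall brightness is preserved
    padded = np.pad(img, radius, mode="reflect")

    def conv(v):
        # 'valid' mode strips the padding back off, restoring the original length
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)   # blur along each row
    return np.apply_along_axis(conv, 0, rows)     # then along each column


def sharpen(img, sigma=2.0, alpha=1.0):
    """Unsharp masking: add alpha times the high-pass detail back to the image."""
    low = gaussian_blur(img, sigma)          # low-pass (blurred) image
    high = img - low                         # high-pass detail: edges and texture
    return np.clip(img + alpha * high, 0.0, 1.0)
```

Increasing `alpha` exaggerates the edges further, while increasing `sigma` moves more mid-frequency content into the high-pass detail that gets boosted.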

Original

Sharpened

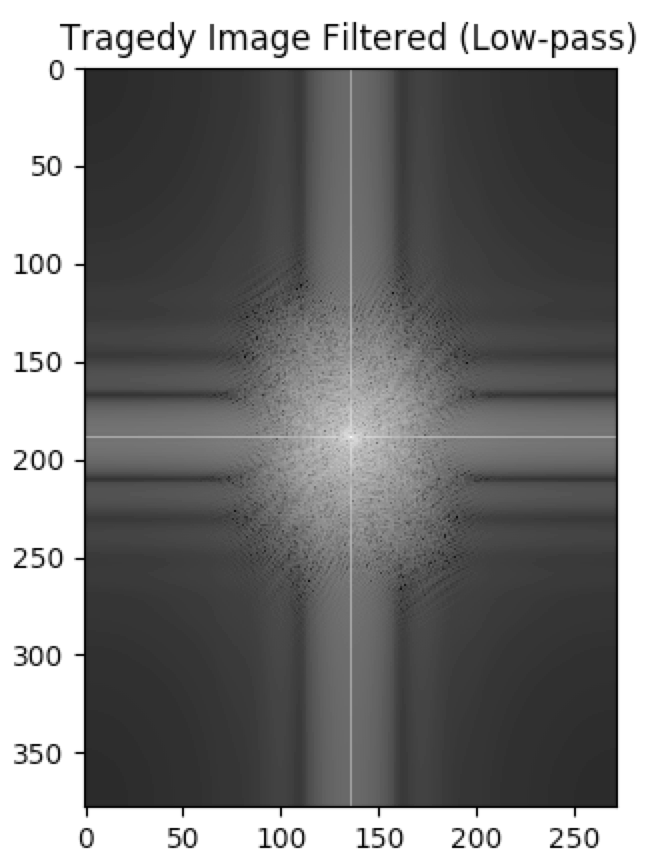

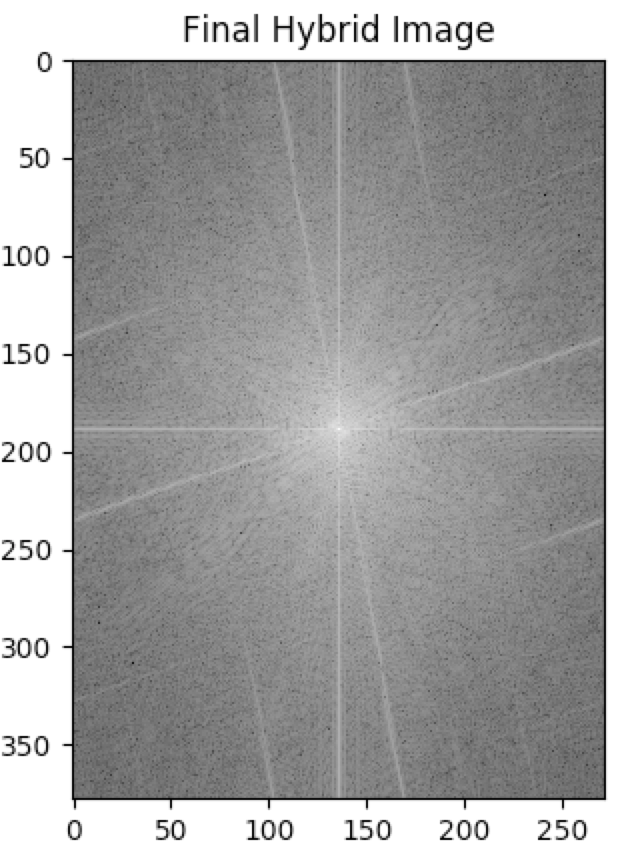

For the hybrid images, we combined two different subjects into a single image by applying a high-pass filter to one photo and a low-pass filter to the other, then aligning and combining the two filtered images. The result shows the first image when viewed up close and the second when viewed from afar. The high-pass filtered image keeps only edges and fine detail, which are easiest to see up close; the low-pass filtered image keeps only coarse structure, which dominates from a distance. Below are a few examples of our hybrid images.
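The hybrid construction can be sketched as follows, assuming two pre-aligned grayscale float images of equal size with values in [0, 1], combined by addition. `hybrid_image`, `sigma_low`, `sigma_high`, and their default values are hypothetical; in practice the two cutoffs are tuned per image pair.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2D float image, reflecting at the borders."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)
    return np.apply_along_axis(conv, 0, rows)


def hybrid_image(img_far, img_near, sigma_low=6.0, sigma_high=3.0):
    """Low frequencies of img_far plus high frequencies of img_near."""
    low = gaussian_blur(img_far, sigma_low)                  # visible from afar
    high = img_near - gaussian_blur(img_near, sigma_high)    # visible up close
    return np.clip(low + high, 0.0, 1.0)
```

For the Catwoman example, `img_near` would be Mia (high-pass) and `img_far` would be Julie (low-pass).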

Julie (Original)

Mia (Original)

Catwoman (High-pass: Mia, Low-pass: Julie)

Apple (Original)

Earth (Original)

Applearth (High-pass: Apple, Low-pass: Earth)

Happy (Original)

Sad (Original)

??? (High-pass: Happy, Low-pass: Sad)

As shown above, the happy/sad hybrid was less successful than the others. This is probably because both photos are very plain, so the details of both faces remain clearly visible even from a distance. Tuning the sigma of each filter, or simply viewing the image from farther away, could improve the effect.

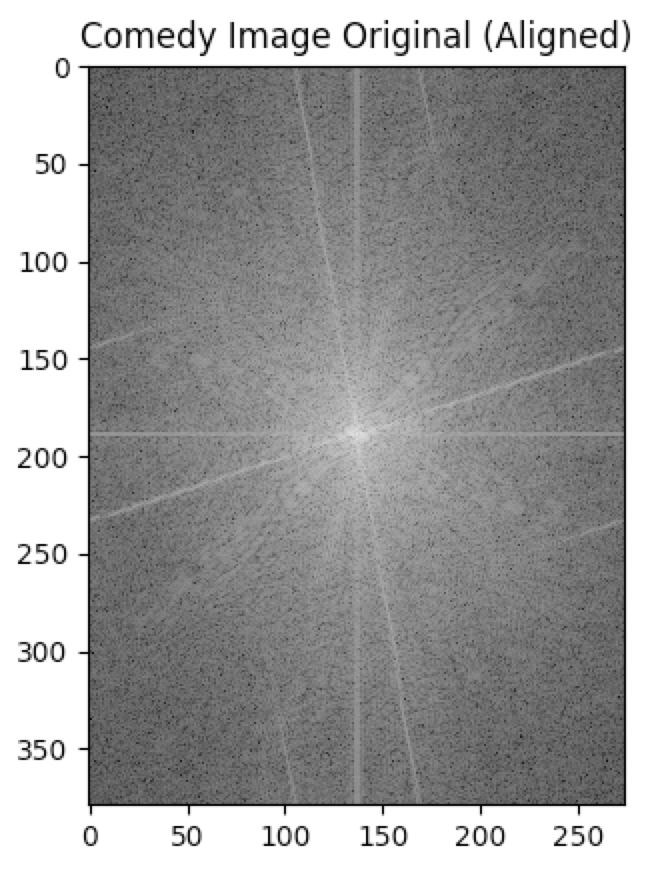

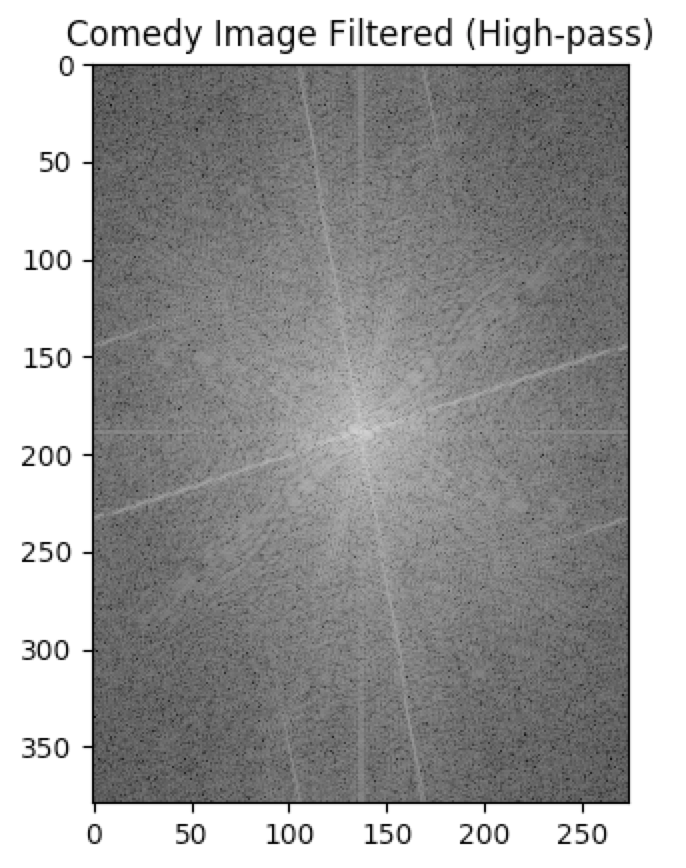

Comedy (Original)

Comedy (Original)

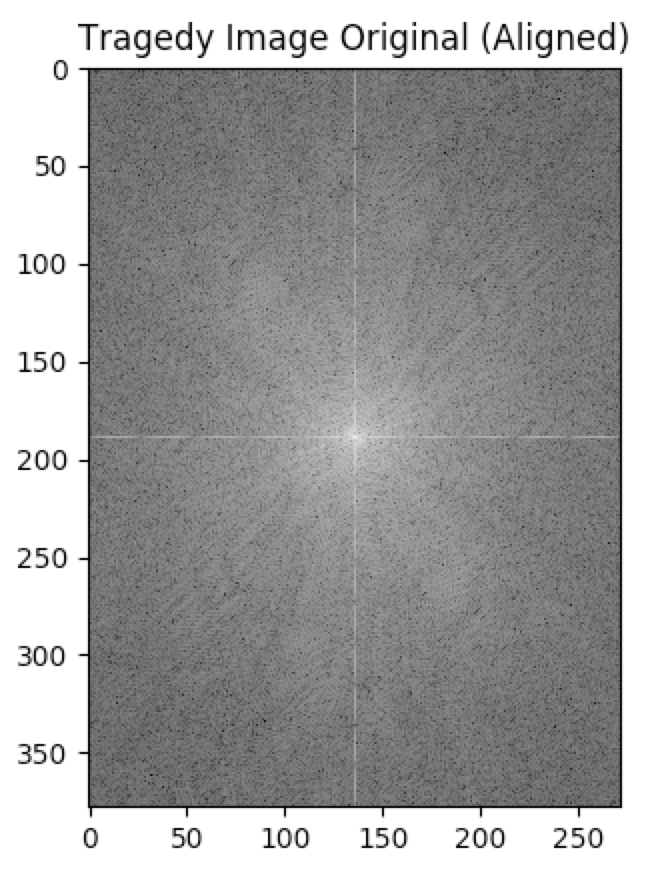

Tragedy (Original)

Tragedy (Original)

Comedy-Tragedy (High-pass: Comedy, Low-pass: Tragedy)

Comedy-Tragedy (High-pass: Comedy, Low-pass: Tragedy)

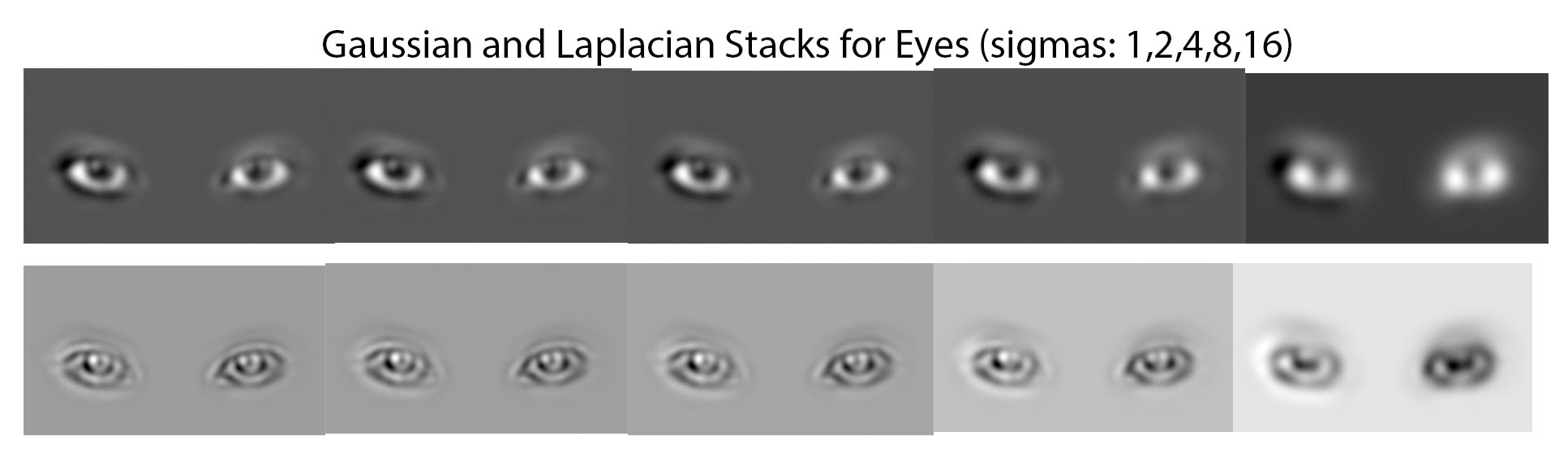

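To build the Gaussian and Laplacian stacks, we start with an initial sigma and repeatedly filter the image, doubling sigma each time. Each Laplacian level is the difference between consecutive Gaussian levels, so it captures one band of frequencies. Unlike a pyramid, a stack does not downsample between levels: every level keeps the original image dimensions.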
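A sketch of the stack construction, assuming each Gaussian level blurs the original image with the listed sigma (rather than re-blurring the previous level) and using a hypothetical `gaussian_blur` helper implemented as a separable NumPy convolution. `build_stacks` is also a hypothetical name.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2D float image, reflecting at the borders."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)
    return np.apply_along_axis(conv, 0, rows)


def build_stacks(img, sigmas=(1, 2, 4, 8, 16)):
    """Return (gaussian, laplacian) stacks; every level keeps img's shape."""
    gaussian = [gaussian_blur(img, s) for s in sigmas]
    laplacian = []
    prev = img
    for g in gaussian:
        laplacian.append(prev - g)   # frequency band removed by this level's blur
        prev = g
    return gaussian, laplacian
```

Because the Laplacian levels telescope, summing them and adding the last (blurriest) Gaussian level recovers the original image, which is what makes the stacks useful for blending.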

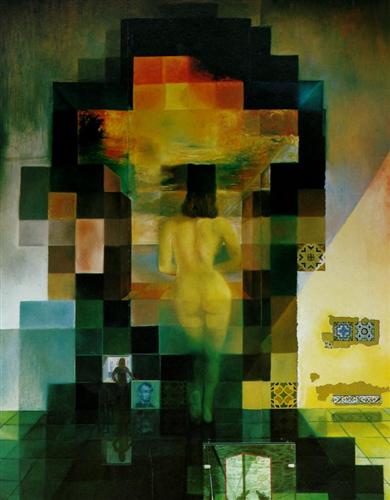

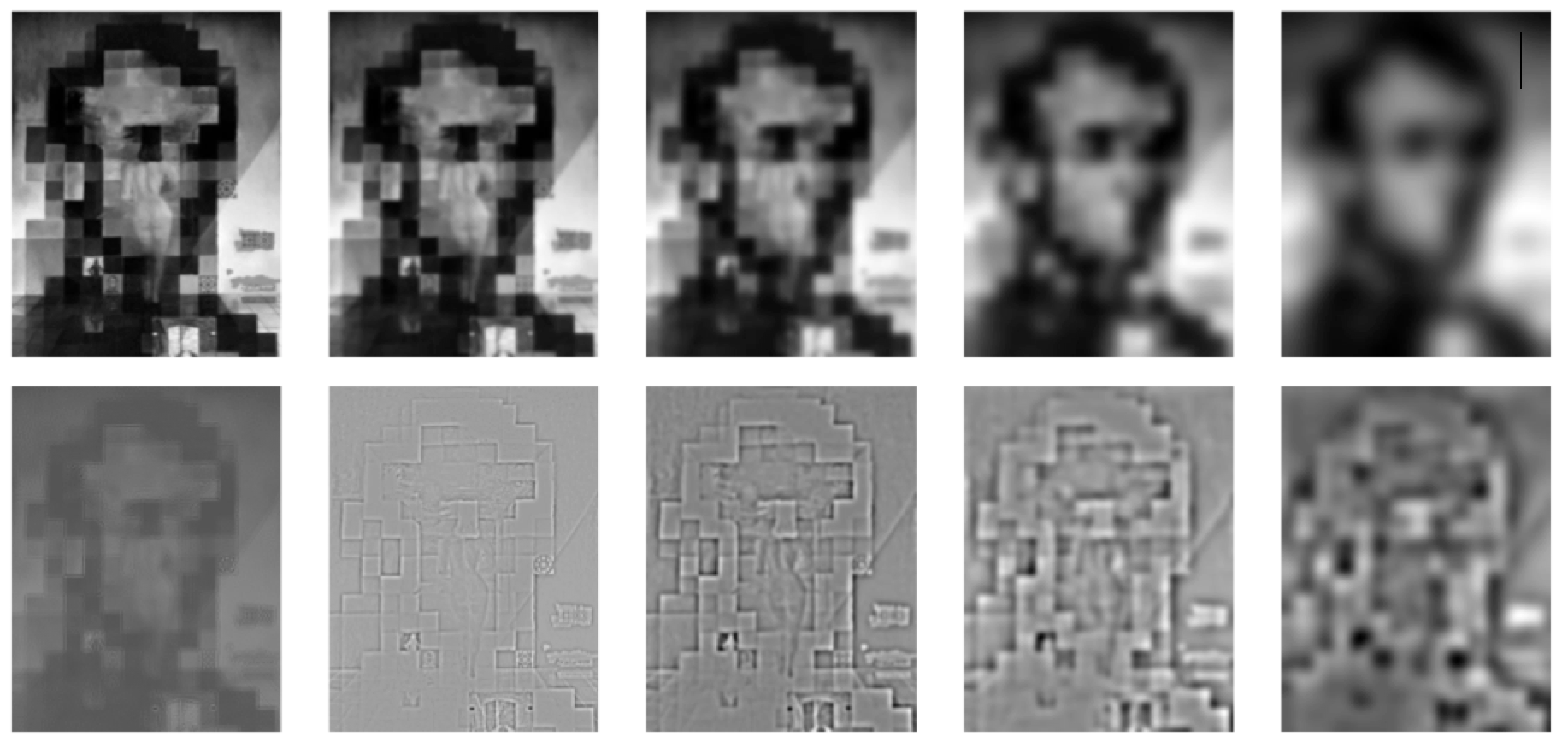

Lincoln in Dalivision

Gaussian (Top) and Laplacian (Bottom) Stacks, with sigmas of 1, 2, 4, 8, and 16.

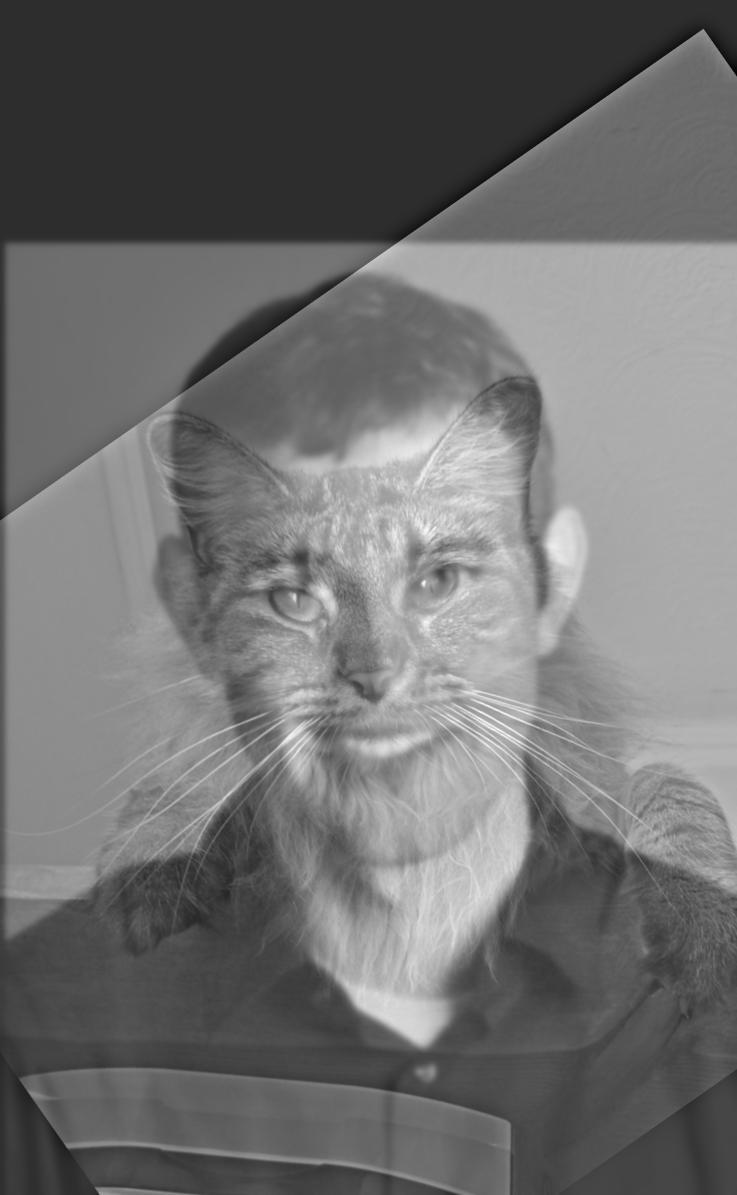

Catman

Gaussian (Top) and Laplacian (Bottom) Stacks, with sigmas of 1, 2, 4, 8, and 16.

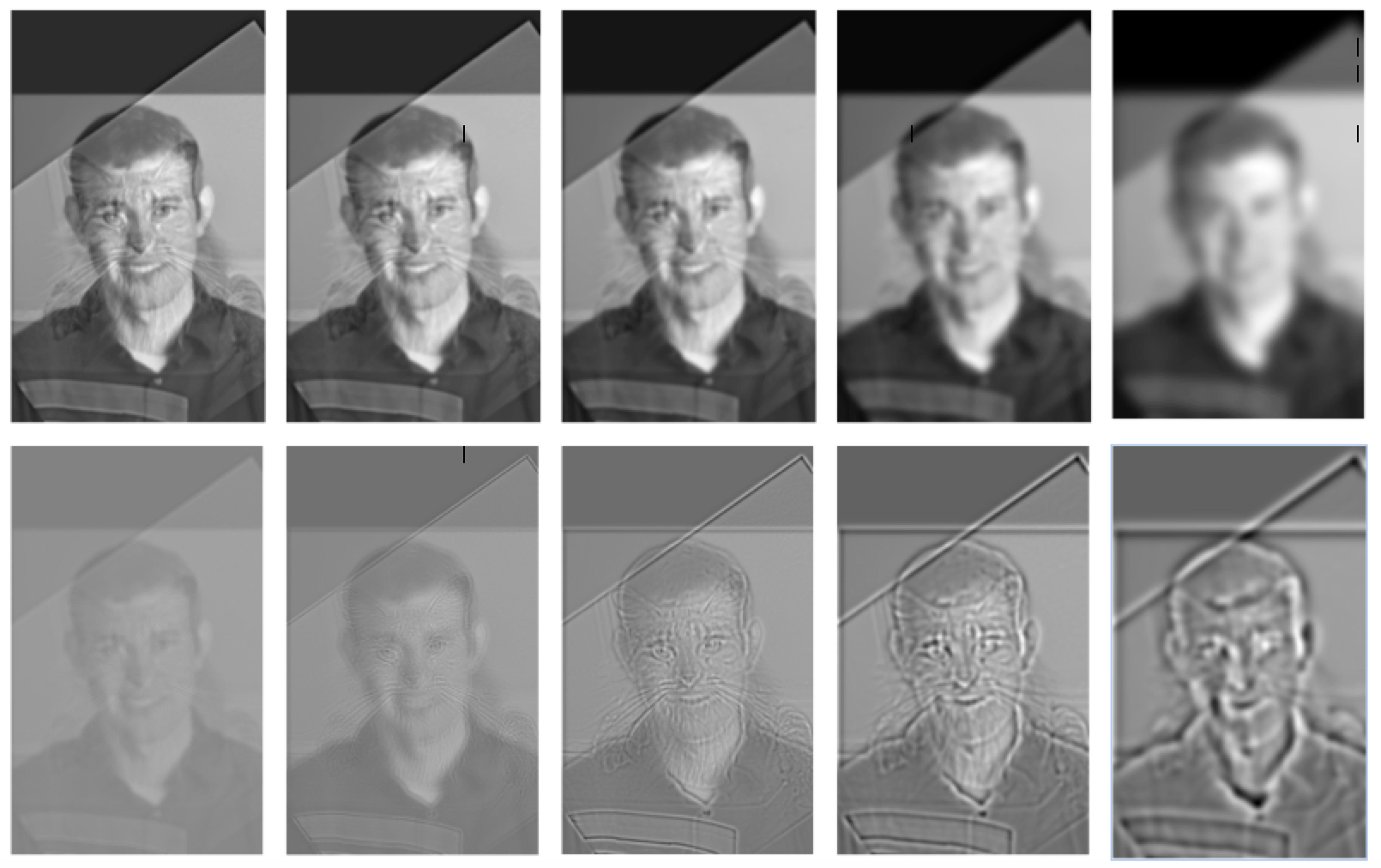

Comedy/Tragedy

Gaussian (Top) and Laplacian (Bottom) Stacks, with sigmas of 1, 2, 4, 8, and 16.

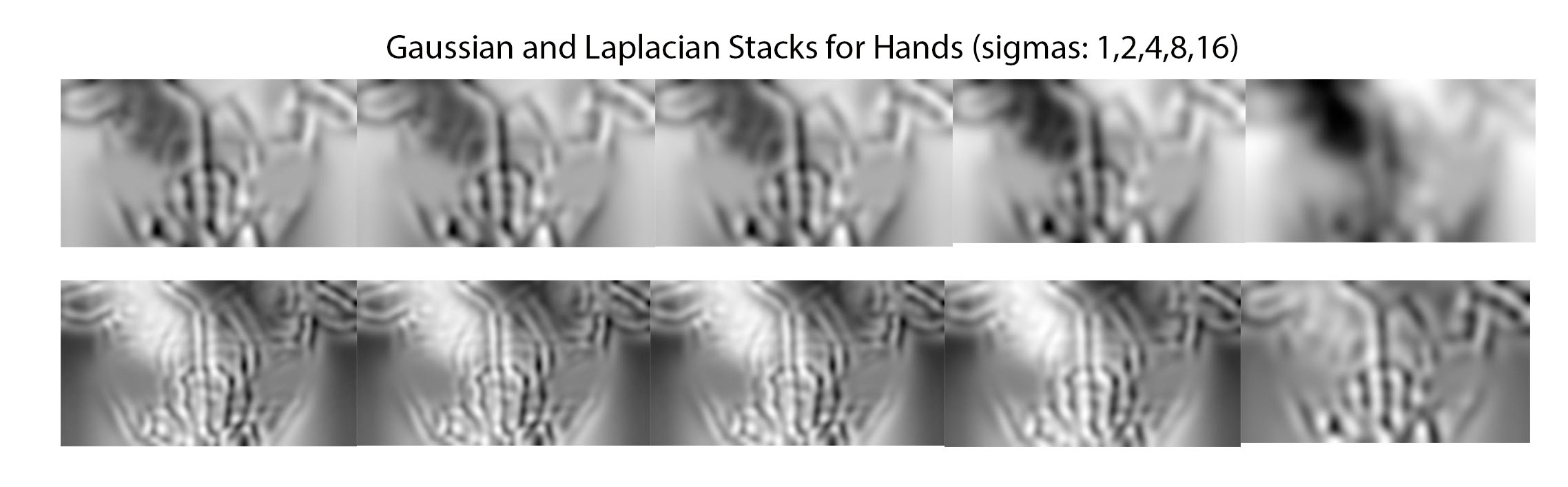

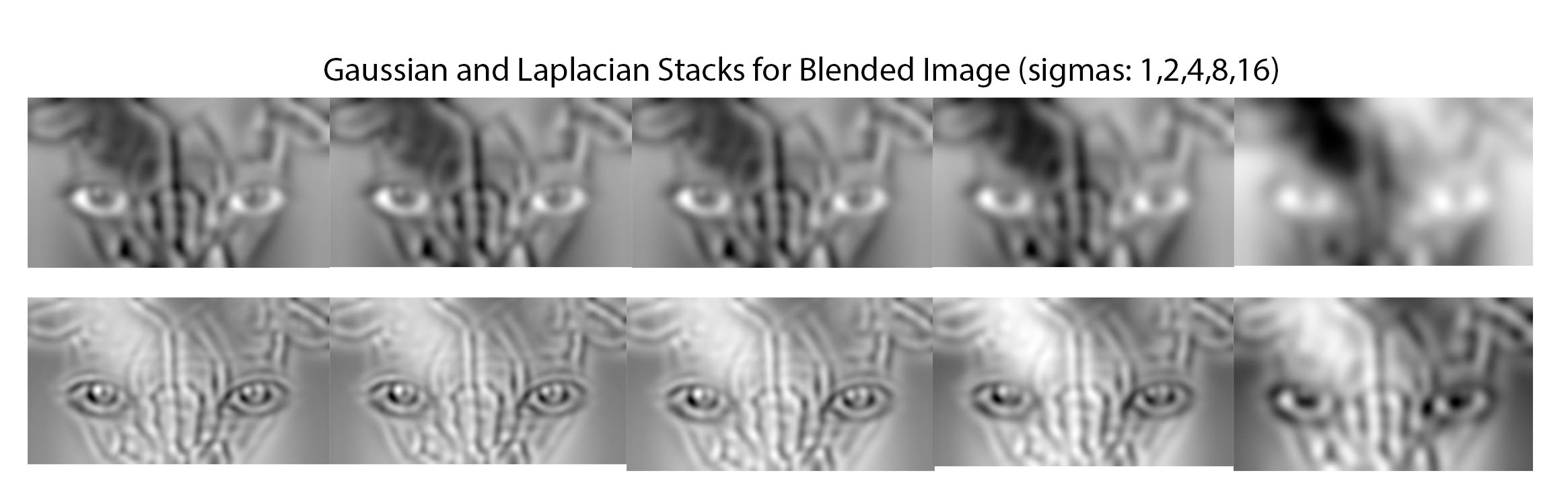

We achieve multiresolution blending by combining the Laplacian stacks of two images level by level. At each level, the two Laplacian layers are weighted by the corresponding level of a Gaussian stack of the mask, so coarse frequencies are blended across a wide, soft seam and fine frequencies across a narrow one. Summing the blended levels produces a seam that is smooth rather than abrupt; the Laplacian stacks let this smoothing happen "naturally".
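One way to implement this, sketched under the same assumptions as the stack example (grayscale float images in [0, 1], each Gaussian level blurring the original with its own sigma) plus a float mask that is 1 where image `a` should appear and 0 where `b` should. `multires_blend` and its helpers are hypothetical names, not our actual code.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2D float image, reflecting at the borders."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)
    return np.apply_along_axis(conv, 0, rows)


def build_stacks(img, sigmas):
    """Gaussian stack and Laplacian stack (differences of consecutive levels)."""
    gaussian = [gaussian_blur(img, s) for s in sigmas]
    laplacian, prev = [], img
    for g in gaussian:
        laplacian.append(prev - g)
        prev = g
    return gaussian, laplacian


def multires_blend(a, b, mask, sigmas=(1, 2, 4, 8, 16)):
    """Blend a (mask == 1) with b (mask == 0) one frequency band at a time."""
    ga, la = build_stacks(a, sigmas)
    gb, lb = build_stacks(b, sigmas)
    gm, _ = build_stacks(mask.astype(float), sigmas)   # progressively softer masks
    out = np.zeros_like(a, dtype=float)
    for m, band_a, band_b in zip(gm, la, lb):
        out += m * band_a + (1.0 - m) * band_b
    # Add the remaining low-pass residual, blended with the softest mask.
    out += gm[-1] * ga[-1] + (1.0 - gm[-1]) * gb[-1]
    return np.clip(out, 0.0, 1.0)
```

For the Oraple, `mask` would be 1 on the apple's half of the frame and 0 on the orange's half.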

Apple (Original)

Orange (Original)

Oraple

Earth (Original)

Pokeball (Original)

EARTHBALL

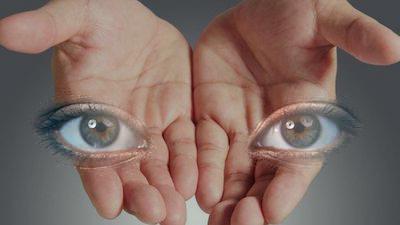

Eyes (Original)

Hands (Original)

Creepy monster from Pan's Labyrinth

Below are the Gaussian and Laplacian stacks of the masked eye and hand images, along with the final blended result shown above.

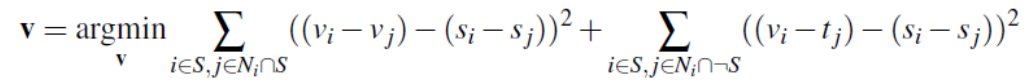

The second part of our project focused on Poisson blending, a gradient-domain processing method for blending a source object into a target background. Rather than preserving the source's colors when placing it on the target, we preserve its gradients. To do this, we solve for new pixel values v inside the source region S using each pixel's neighbors, through the following least-squares problem:

$$\mathbf{v} = \arg\min_{\mathbf{v}} \sum_{i \in S,\ j \in N_i \cap S} \big((v_i - v_j) - (s_i - s_j)\big)^2 + \sum_{i \in S,\ j \in N_i \setminus S} \big((v_i - t_j) - (s_i - s_j)\big)^2$$

where N_i is the set of 4-connected neighbors of pixel i, s is the source image, and t is the target image.

For the toy problem, we reconstructed an image from its own gradients: for every pixel, we required the difference to its right neighbor and to its bottom neighbor to match the corresponding difference in the original image. These gradient constraints, plus one constraint fixing the top-left pixel to its original intensity (without it, the solution is only determined up to a constant), form an overdetermined linear system Ax = b, which we solve by least squares; x is the reconstructed image. Below are the original and reconstructed images.
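A small dense sketch of the toy problem, assuming a grayscale float image. The function name `reconstruct_from_gradients` is hypothetical, and a dense matrix with one column per pixel is only practical for tiny images; a full-size image would need a sparse matrix and a sparse least-squares solver.

```python
import numpy as np


def reconstruct_from_gradients(s):
    """Recover image s from its right/bottom differences plus its top-left pixel."""
    h, w = s.shape
    idx = np.arange(h * w).reshape(h, w)       # pixel (y, x) -> unknown index
    n_eq = h * (w - 1) + (h - 1) * w + 1       # right + bottom gradients + 1 anchor
    A = np.zeros((n_eq, h * w))
    b = np.zeros(n_eq)
    e = 0
    for y in range(h):
        for x in range(w):
            if x + 1 < w:                      # v(y, x+1) - v(y, x) = s(y, x+1) - s(y, x)
                A[e, idx[y, x + 1]], A[e, idx[y, x]] = 1.0, -1.0
                b[e] = s[y, x + 1] - s[y, x]
                e += 1
            if y + 1 < h:                      # v(y+1, x) - v(y, x) = s(y+1, x) - s(y, x)
                A[e, idx[y + 1, x]], A[e, idx[y, x]] = 1.0, -1.0
                b[e] = s[y + 1, x] - s[y, x]
                e += 1
    A[e, idx[0, 0]] = 1.0                      # pin the top-left pixel's intensity
    b[e] = s[0, 0]
    v, *_ = np.linalg.lstsq(A, b, rcond=None)
    return v.reshape(h, w)
```

Since the constraints come from the image itself, the system is consistent and the least-squares solution reproduces the original up to floating-point error.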

Original

Reconstructed

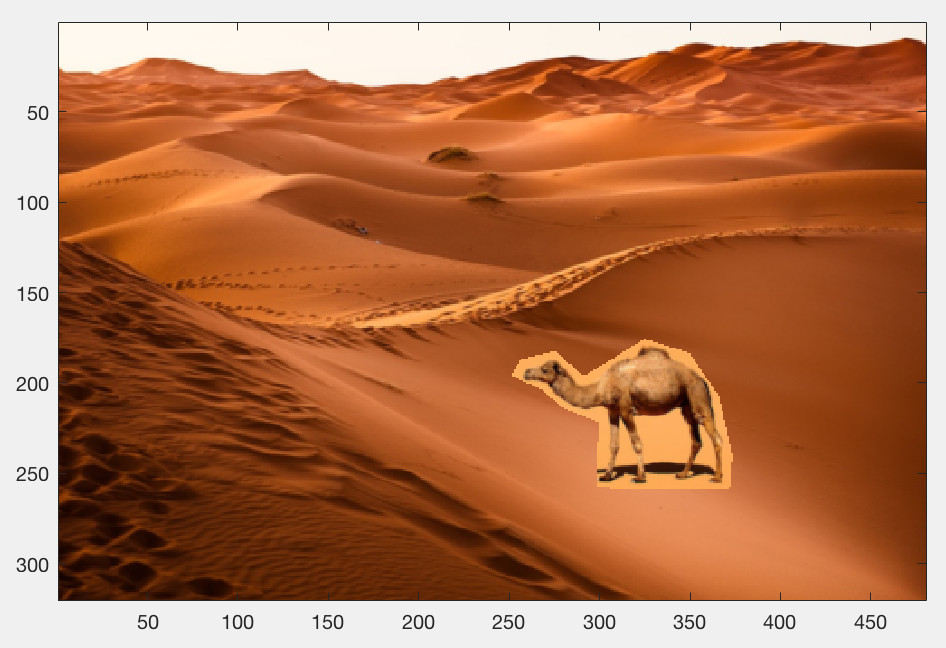

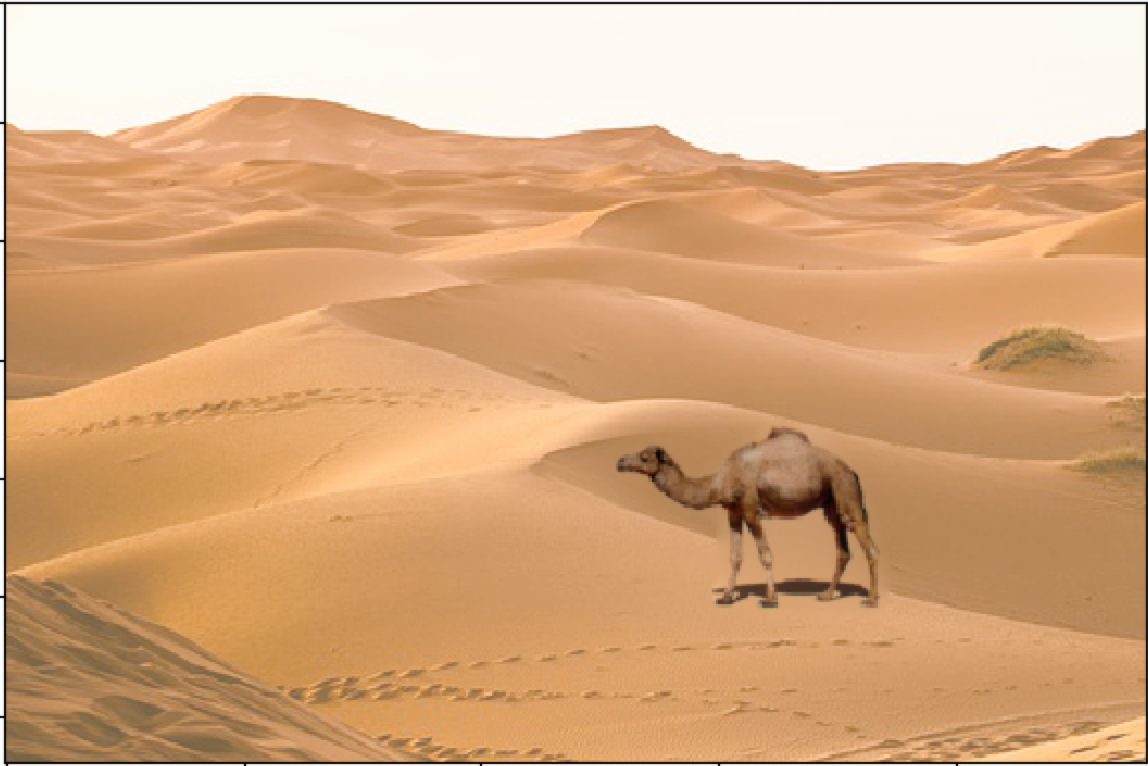

For the following images, we apply the full Poisson blending algorithm, solving the least-squares problem above with one unknown for every pixel of the source region.
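A sketch of single-channel Poisson blending that builds one equation per (region pixel, 4-neighbor) pair: a neighbor inside the region contributes a second unknown, while a neighbor outside it is fixed to its target value. `poisson_blend` is a hypothetical name; the sketch assumes the source has already been placed at its final position in a float array the same size as the target, and a dense solve like this only scales to small regions.

```python
import numpy as np


def poisson_blend(source, target, mask):
    """Blend `source` into `target` over the boolean region `mask` (one channel)."""
    h, w = target.shape
    ys, xs = np.nonzero(mask)
    n = len(ys)
    var = -np.ones((h, w), dtype=int)          # pixel -> unknown index (-1 = fixed)
    var[ys, xs] = np.arange(n)
    rows, b = [], []
    for k, (y, x) in enumerate(zip(ys, xs)):
        for dy, dx in ((0, 1), (0, -1), (1, 0), (-1, 0)):
            ny, nx = y + dy, x + dx
            if not (0 <= ny < h and 0 <= nx < w):
                continue                       # skip neighbors outside the image
            row = np.zeros(n)
            row[k] = 1.0
            grad = source[y, x] - source[ny, nx]   # source gradient to preserve
            if mask[ny, nx]:
                row[var[ny, nx]] = -1.0        # (v_i - v_j) = s_i - s_j
                b.append(grad)
            else:
                b.append(grad + target[ny, nx])    # v_i = t_j + (s_i - s_j)
            rows.append(row)
    v, *_ = np.linalg.lstsq(np.array(rows), np.array(b), rcond=None)
    out = target.astype(float)                 # astype returns a copy
    out[ys, xs] = v
    return out
```

Color images can be handled by running the solve once per channel. Because only gradients enter the equations, adding a constant to the source leaves the result unchanged; the blended object's overall color is instead pulled toward the target colors along its boundary.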

Source

Target

Source pasted onto target

Poisson Blended

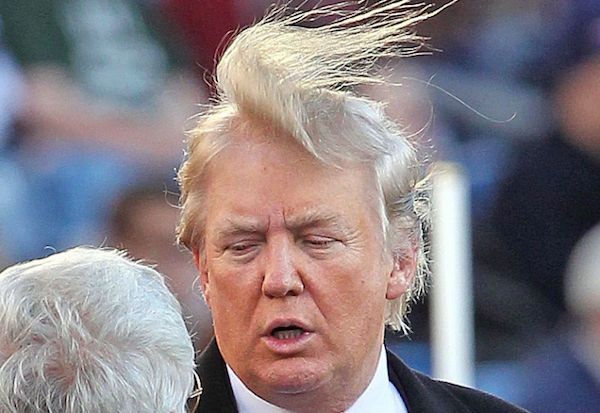

Some additional examples:

Source

Target

Poisson Blended

Source

Target

Poisson Blended

The Trump in Space result has a leftover corner from the source image that did not blend into the background, but a tighter crop of the source region would easily fix this. The Guy Fieri in Soup result was less successful: blending drastically changed the colors of the pasted region and turned Fieri's skin yellow. This is probably because the colors along the boundary, Fieri's blue shirt against the yellow soup, differ so much that, to match the target at the boundary while keeping the source gradients, the solver shifts the colors of the entire blended region toward yellow.

Source

Source

Target

Target

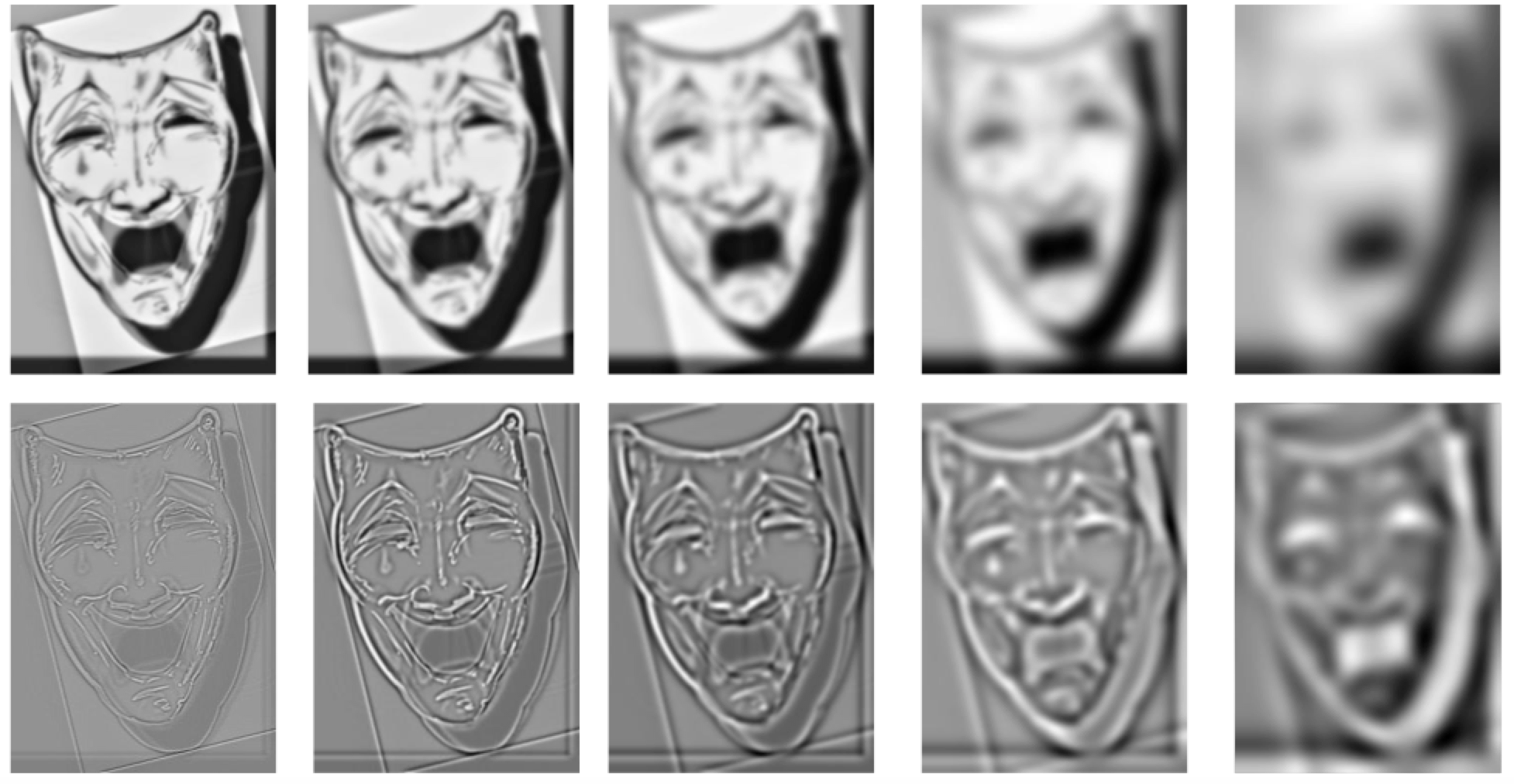

Laplacian Blending

Laplacian Blending

Poisson Blending

Poisson Blending

Even though the Laplacian-blended image is in black and white, it is still apparent that the area around the eyes does not blend into the hands at all. In the Poisson-blended image, the eyes are much better incorporated into the hands, so Poisson blending works better in this case. This seems to hold for most images where the texture and/or color of the source and target are similar. Laplacian blending might work better in other cases, though we have not tested this.

The most important thing I learned in this assignment is that, because images are represented as arrays, they can be manipulated with simple linear algebra operations on those arrays. Treating images as arrays opens up a multitude of possibilities in image processing, all accessible through simple algorithms.