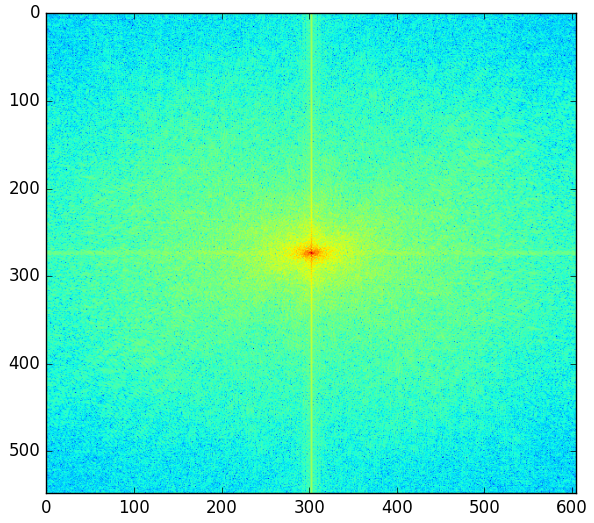

To get used to working with gradient domain processing, we started with a toy example. In this example, we computed the $x$ and $y$ gradients of a source image $s$, then used all of the gradients, plus one pixel intensity, to reconstruct an image $v$.

For each pixel, then, we have two objectives:

- minimize $\big((v(x+1,y)-v(x,y)) - (s(x+1,y)-s(x,y))\big)^2$: the $x$-gradients of $v$ should closely match the $x$-gradients of $s$.
- minimize $\big((v(x,y+1)-v(x,y)) - (s(x,y+1)-s(x,y))\big)^2$: the $y$-gradients of $v$ should closely match the $y$-gradients of $s$.

We also added a final objective: the top-left corner pixels of the two images should have the same intensity.

minimize $\big(v(1,1)-s(1,1)\big)^2$.

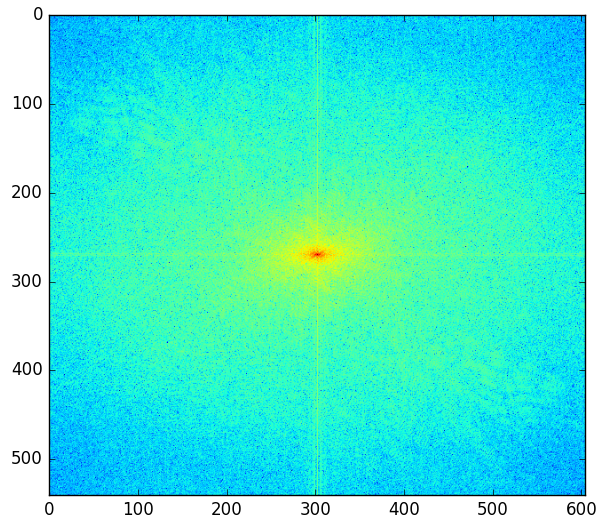

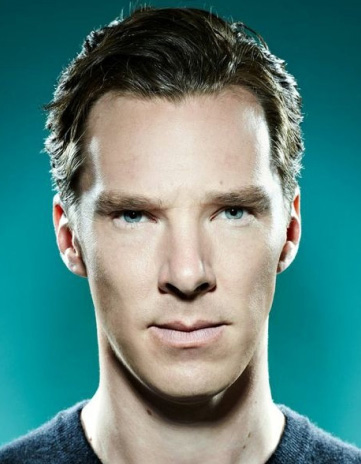

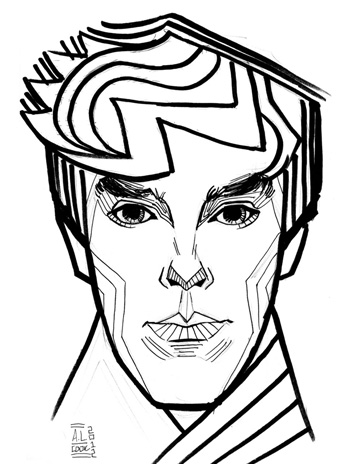

Displayed: original image, reconstructed image.

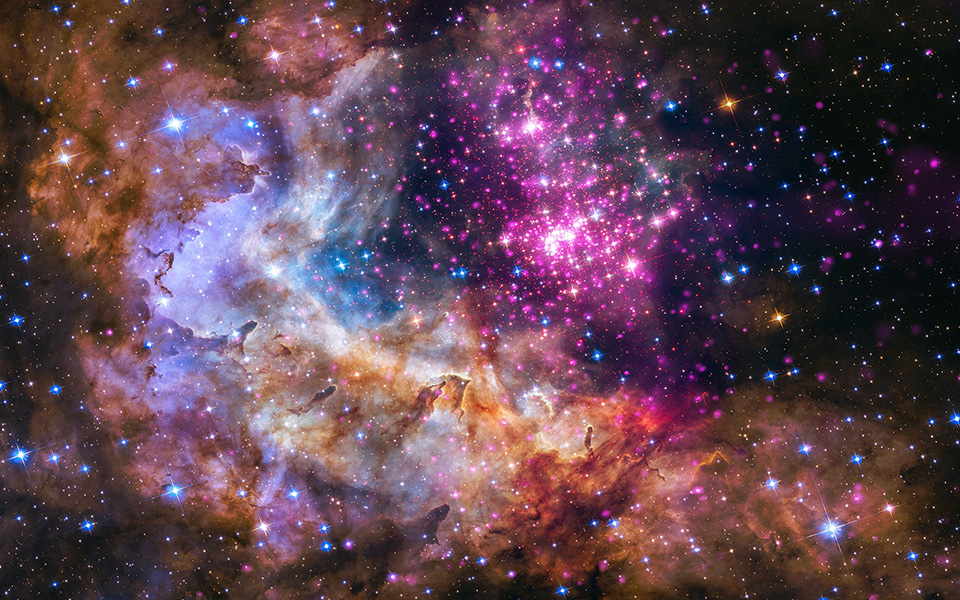

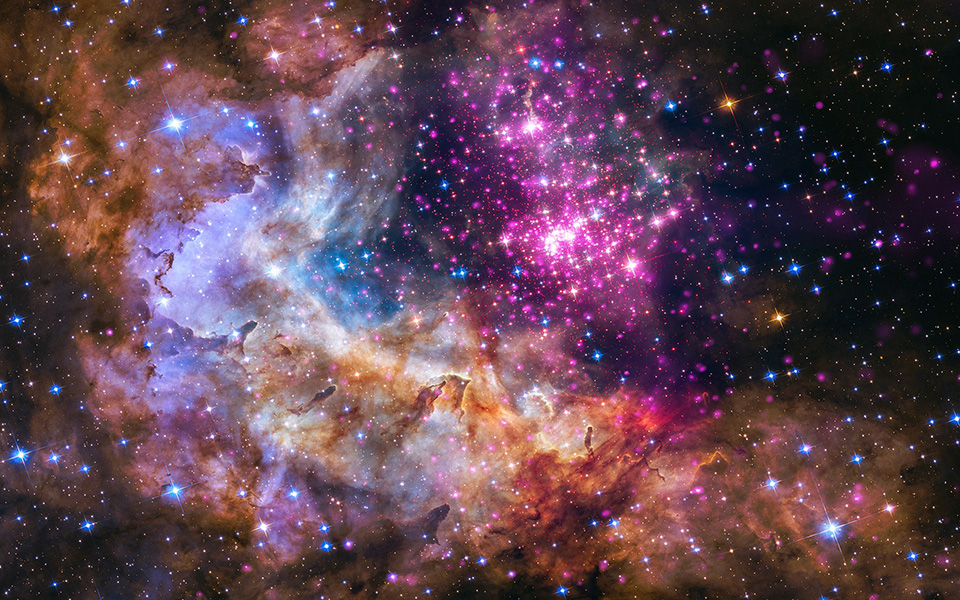

The primary goal of Poisson blending is to seamlessly blend an object or texture from a source image into a target image. The simplest method is a naive blend: copy the pixels from one image directly into the other. Unfortunately, this creates an obvious seam between the source and target images. How do we blend the seams... seamlessly?
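For comparison, the naive copy-and-paste blend is a one-liner with a mask. This is a minimal sketch that assumes the source, target, and mask are already aligned arrays of the same height and width; the function name `naive_blend` is hypothetical.

```python
import numpy as np

def naive_blend(source, target, mask):
    """Copy-paste blend: source pixels where mask is True, target pixels elsewhere.

    Assumes aligned arrays: mask is 2-D boolean, images are 2-D or H x W x C.
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    if source.ndim == 3:
        mask = mask[..., None]  # broadcast the 2-D mask across color channels
    return np.where(mask, source, target)
```

Nothing here looks at the pixels on either side of the mask edge, which is exactly why the seam shows up.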

A gradient is the difference in intensity between two neighboring pixels. If we set up the problem as finding values for the target pixels inside the mask that preserve the gradients of the source region as closely as possible, without changing any of the background pixels, we get a much smoother blend. Note: we are deliberately ignoring the overall intensity! So a green hat could turn red. But at least it will still look like a hat.
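One common discrete way to write this is, with $S$ the set of masked pixels, $N_i$ the 4-neighbors of pixel $i$, and $t$ the target image (notation introduced here for illustration): minimize $\sum_{i\in S,\,j\in N_i\cap S}\big((v_i-v_j)-(s_i-s_j)\big)^2 + \sum_{i\in S,\,j\in N_i\setminus S}\big((v_i-t_j)-(s_i-s_j)\big)^2$. The sketch below solves that for a single channel (run it once per color channel). It is not our exact code: the name `poisson_blend` is hypothetical, the inputs are assumed to be aligned 2-D arrays with a boolean mask, and the dense `np.linalg.lstsq` solve only scales to small images.

```python
import numpy as np

def poisson_blend(source, target, mask):
    """Single-channel Poisson blend via dense least squares (small images only)."""
    s = np.asarray(source, dtype=float)
    t = np.asarray(target, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    h, w = mask.shape
    ys, xs = np.nonzero(mask)
    n = len(ys)
    var = -np.ones((h, w), dtype=int)  # maps a masked pixel to its unknown's index
    var[ys, xs] = np.arange(n)

    rows, b = [], []
    for y, x in zip(ys, xs):
        for dy, dx in ((0, 1), (0, -1), (1, 0), (-1, 0)):
            ny, nx = y + dy, x + dx
            if not (0 <= ny < h and 0 <= nx < w):
                continue  # neighbor falls outside the image
            row = np.zeros(n)
            row[var[y, x]] = 1.0
            grad = s[y, x] - s[ny, nx]  # source gradient we want to preserve
            if mask[ny, nx]:
                # both pixels unknown: (v_i - v_j) should equal (s_i - s_j)
                row[var[ny, nx]] = -1.0
                b.append(grad)
            else:
                # neighbor is background: (v_i - t_j) should equal (s_i - s_j)
                b.append(grad + t[ny, nx])
            rows.append(row)

    v, *_ = np.linalg.lstsq(np.array(rows), np.array(b), rcond=None)
    out = t.copy()  # background pixels are left untouched
    out[ys, xs] = v
    return out
```

A quick sanity check of the "ignore overall intensity" point: if the source is just the target plus a constant, the gradients are identical, so the blend returns the target unchanged.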

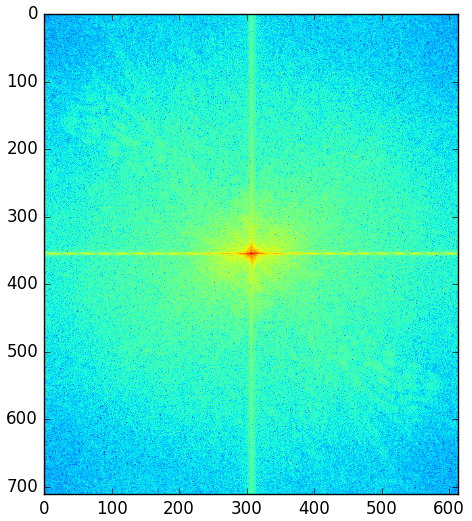

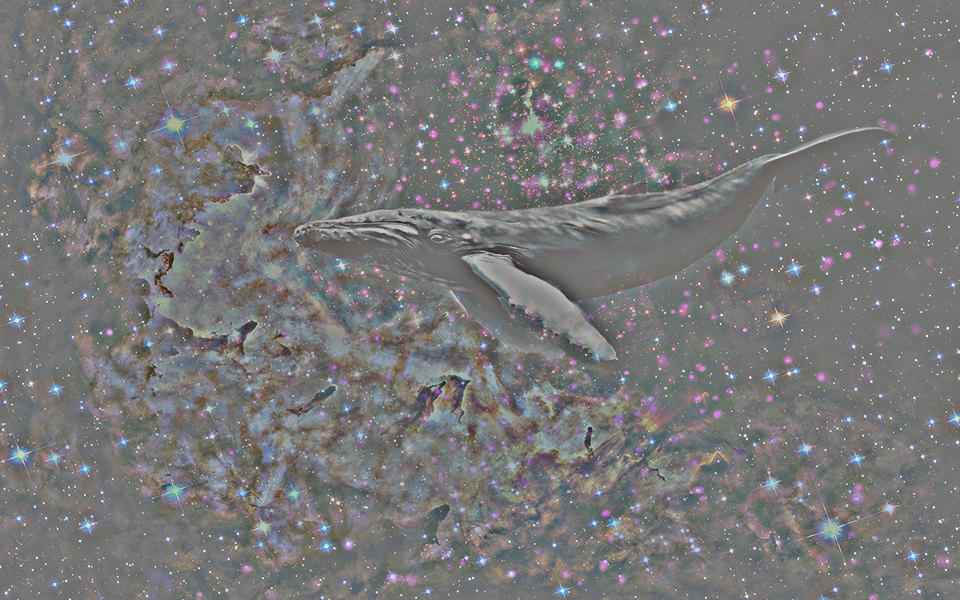

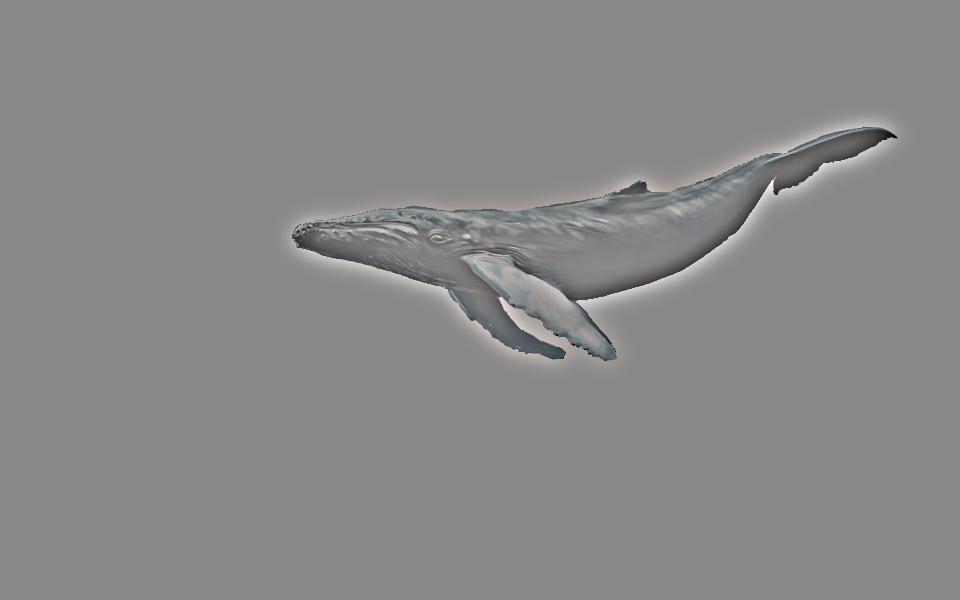

Displayed: spaaaace, whale, crude mask, naive blending attempt.

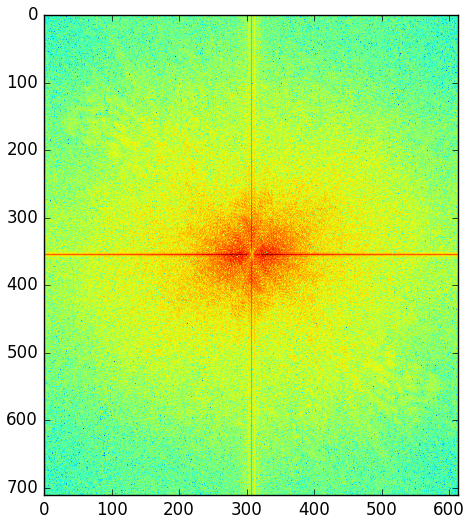

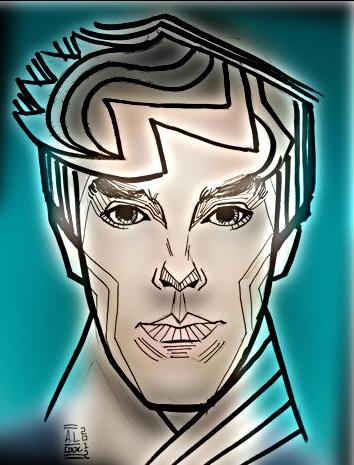

Here are the final results, comparing multiresolution blending with Poisson blending. Multiresolution blending maintains the original coloring of the whale, while Poisson blending keeps only the whale's gradients and takes its overall intensity from the dark space background along the mask boundary, resulting in a whale that is rather "ghostly".

Despite the ghostly whale, after blending several images we initially decided this was our favorite blend. Later, however, we came to prefer the multiresolution result because it preserved the colors more faithfully, even compared with a Poisson blend using a close mask.

Thus, while this was our favorite result, we also decided it was mostly unsuccessful.

Displayed: multiresolution blending with a close mask, Poisson blending with a crude mask, Poisson blending with a close mask.

Displayed: author with cat ears, also the author with cat ears, a mask for a third eye, third-eyed monstrosity.

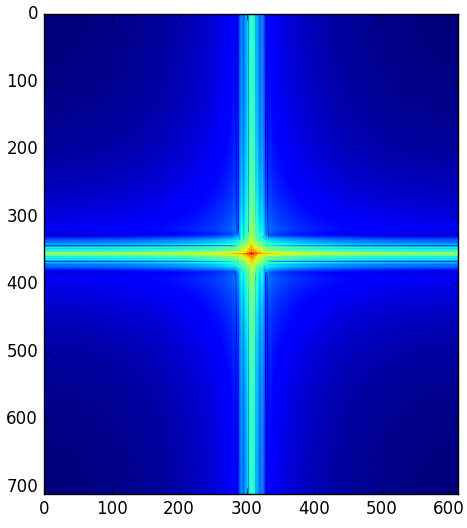

Displayed: hikers, penguin, crude mask, penguin with hikers.

Final thoughts: the most important thing we learned from implementing these functions is to read the source papers very closely. Many times, we realized we had misinterpreted the paper's instructions.