Part 1: Frequency Domain

Part 1.1: Warmup

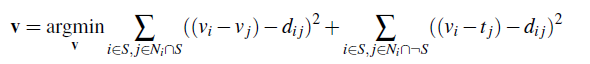

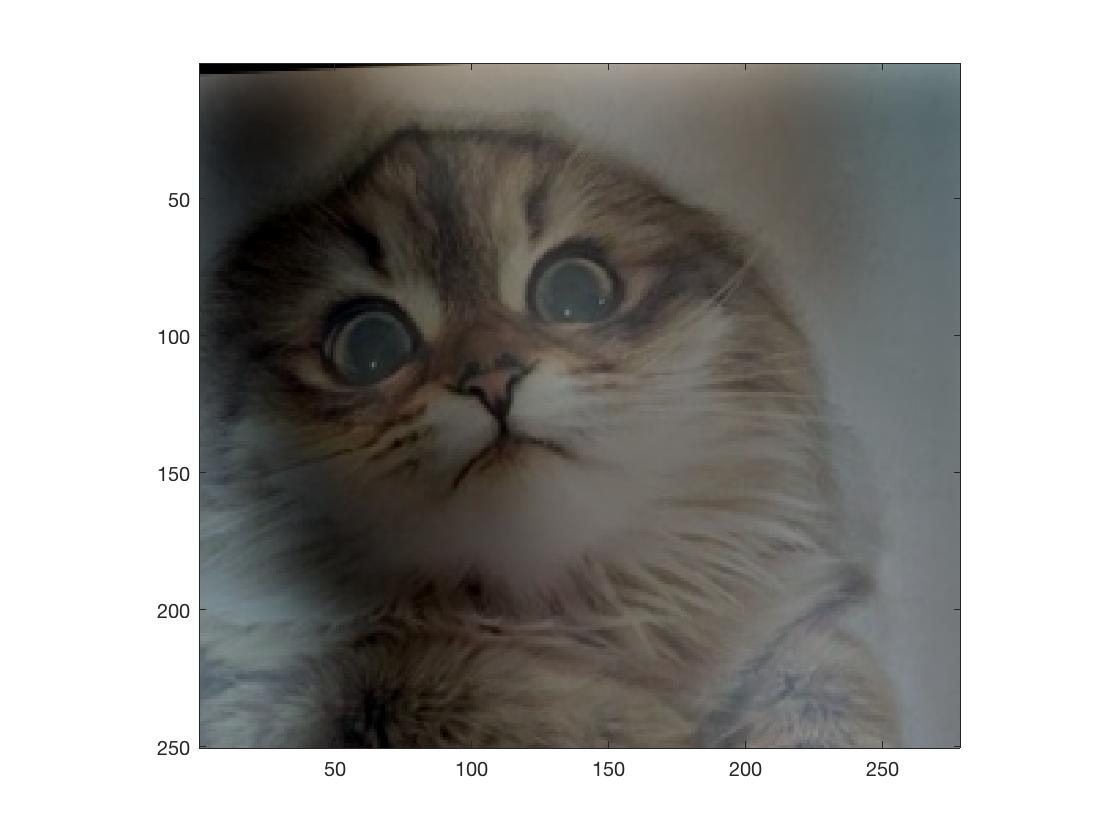

Pick an image and sharpen it with the unsharp masking technique: blur the image, subtract the blur to isolate the high frequencies, and add them back to the original.
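A minimal sketch of unsharp masking, assuming a float image scaled to [0, 1] and SciPy's `gaussian_filter` as the blur. The function name `unsharp_mask` and the `sigma` and `amount` defaults are illustrative choices, not values taken from this project.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def unsharp_mask(image, sigma=2.0, amount=1.0):
    """Sharpen a float image in [0, 1] by adding back its high frequencies."""
    image = np.asarray(image, dtype=np.float64)
    # Blur only the two spatial axes; a trailing color axis gets sigma 0.
    sigmas = (sigma, sigma) + (0,) * (image.ndim - 2)
    blurred = gaussian_filter(image, sigma=sigmas)
    detail = image - blurred  # high-frequency component
    return np.clip(image + amount * detail, 0.0, 1.0)
```

A larger `amount` exaggerates edges more strongly, while a larger `sigma` widens the band of frequencies that counts as detail.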

Part 1.2: Hybrid Images + Part 1.3: Gaussian and Laplacian Stacks

Overview

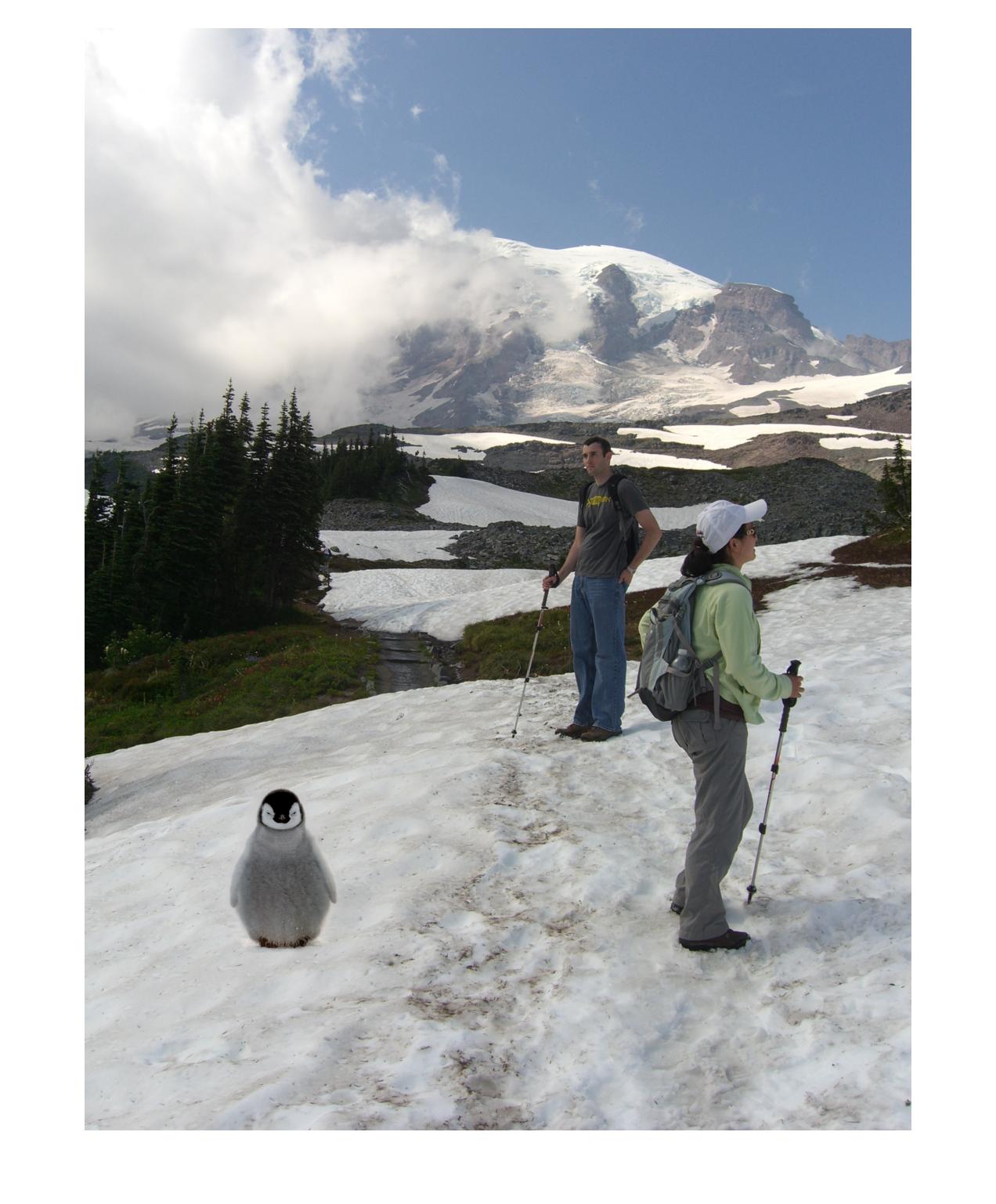

Part 1.2: The goal of this part of the assignment is to create hybrid images using the approach described in the SIGGRAPH 2006 paper by Oliva, Torralba, and Schyns. Hybrid images are static images that change in interpretation as a function of the viewing distance. The basic idea is that high frequencies tend to dominate perception when they are available, but at a distance only the low-frequency (smooth) part of the signal can be seen. By blending the high-frequency portion of one image with the low-frequency portion of another, you get a hybrid image that leads to different interpretations at different distances.
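The idea above can be sketched in a few lines, assuming two aligned grayscale float images of the same shape. The function name `hybrid_image` and the two cutoff values `sigma_low` and `sigma_high` are hypothetical; in practice the cutoffs are tuned per image pair.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def hybrid_image(im_far, im_near, sigma_low=6.0, sigma_high=3.0):
    """Combine the low frequencies of im_far with the high frequencies of im_near.

    Both inputs are assumed to be aligned grayscale float arrays of equal shape.
    """
    im_far = np.asarray(im_far, dtype=np.float64)
    im_near = np.asarray(im_near, dtype=np.float64)
    low = gaussian_filter(im_far, sigma_low)                # low-pass
    high = im_near - gaussian_filter(im_near, sigma_high)   # high-pass
    return low + high, low, high
```

The sum is returned unclipped so the two components can be inspected separately; clip it to the display range before saving.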

Part 1.3: In this part you will implement Gaussian and Laplacian stacks, which are like pyramids but without the downsampling. Then you will use these to analyze some images, as well as your results from Part 1.2.
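A minimal sketch of the two stacks, assuming a grayscale float image. The names `gaussian_stack` and `laplacian_stack`, the number of levels, and the choice to double sigma at each level are assumptions made for illustration.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def gaussian_stack(image, levels=5, sigma=2.0):
    """Return the image followed by progressively blurred full-size copies."""
    image = np.asarray(image, dtype=np.float64)
    stack = [image]
    for i in range(1, levels):
        # Doubling sigma per level mimics the octave spacing of a pyramid.
        stack.append(gaussian_filter(image, sigma * 2 ** (i - 1)))
    return stack


def laplacian_stack(g_stack):
    """Band-pass layers plus the coarsest Gaussian level as the residual."""
    bands = [g_stack[i] - g_stack[i + 1] for i in range(len(g_stack) - 1)]
    return bands + [g_stack[-1]]
```

Because the last entry keeps the low-frequency residual, summing all layers of the Laplacian stack recovers the original image.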

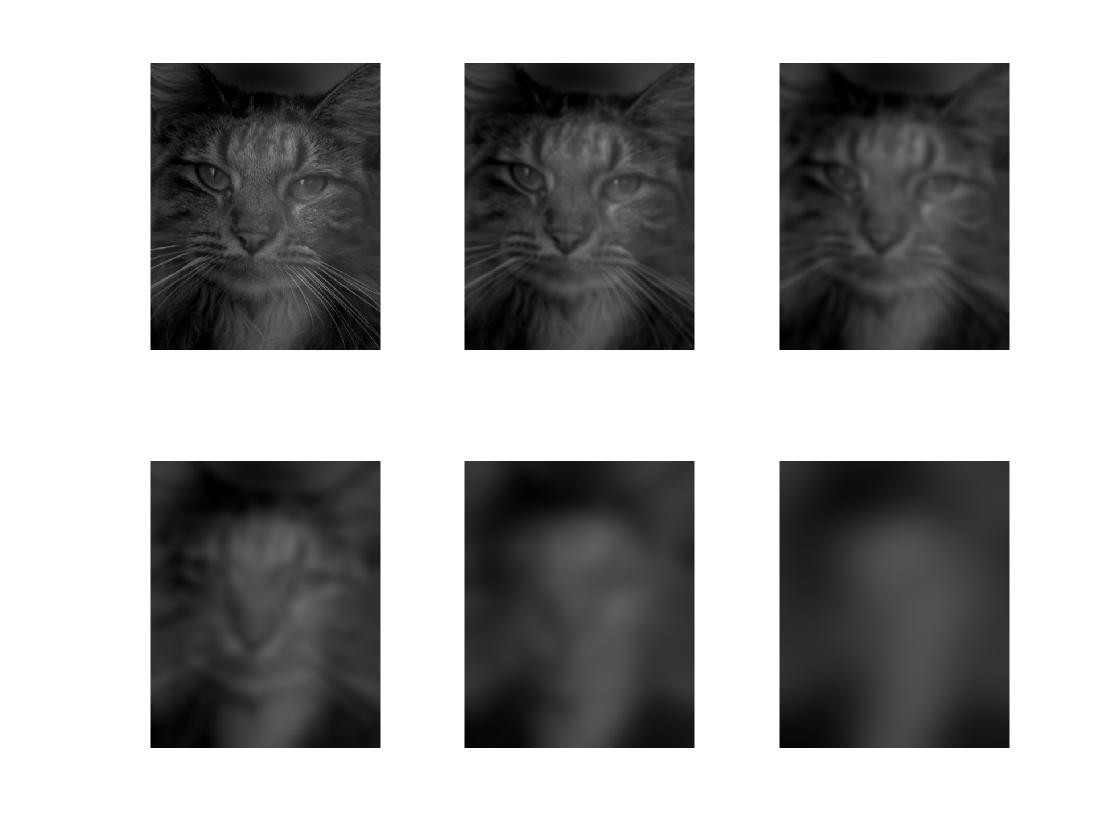

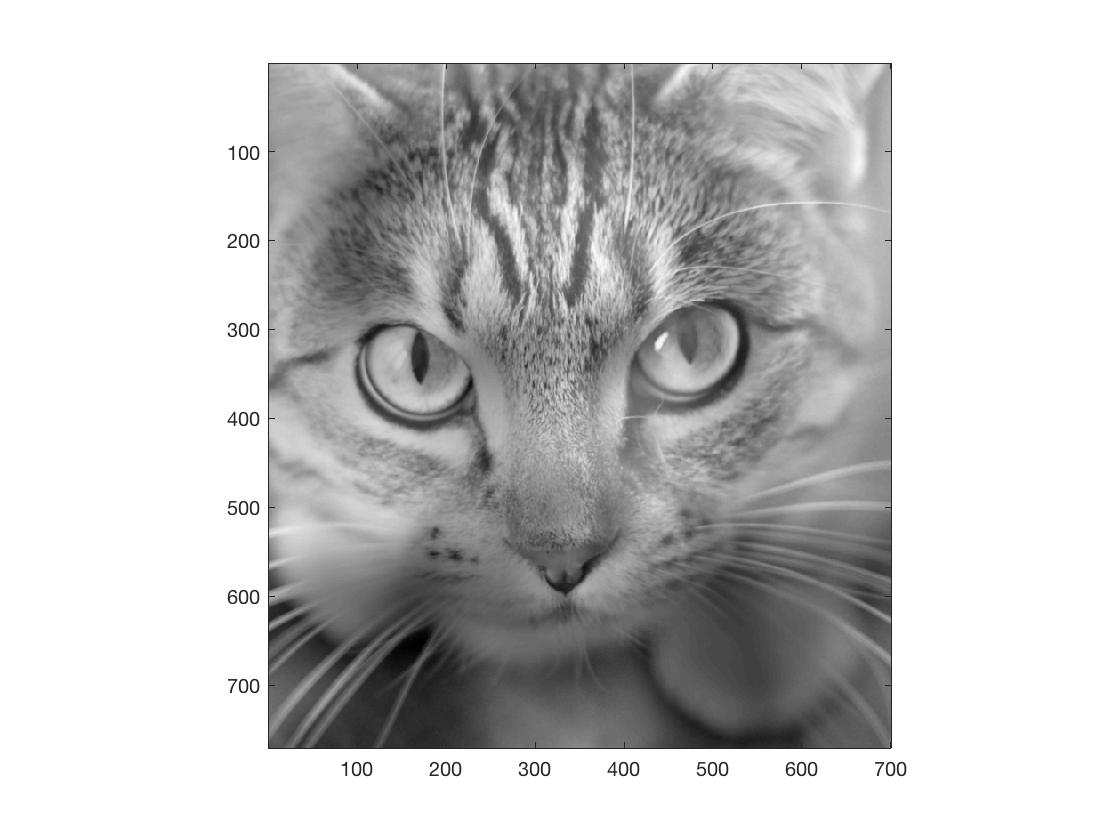

Grayscale pictures of Derick and Nutmeg

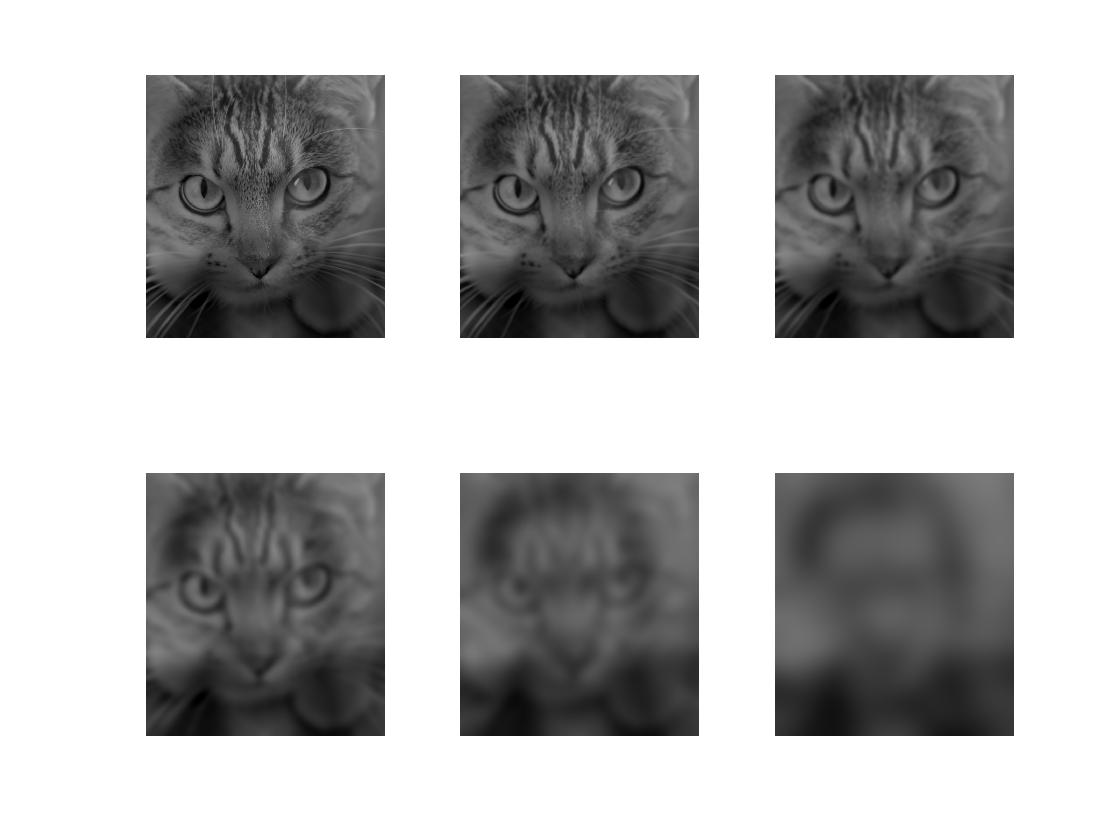

Gaussian pyramids and Laplacian pyramids

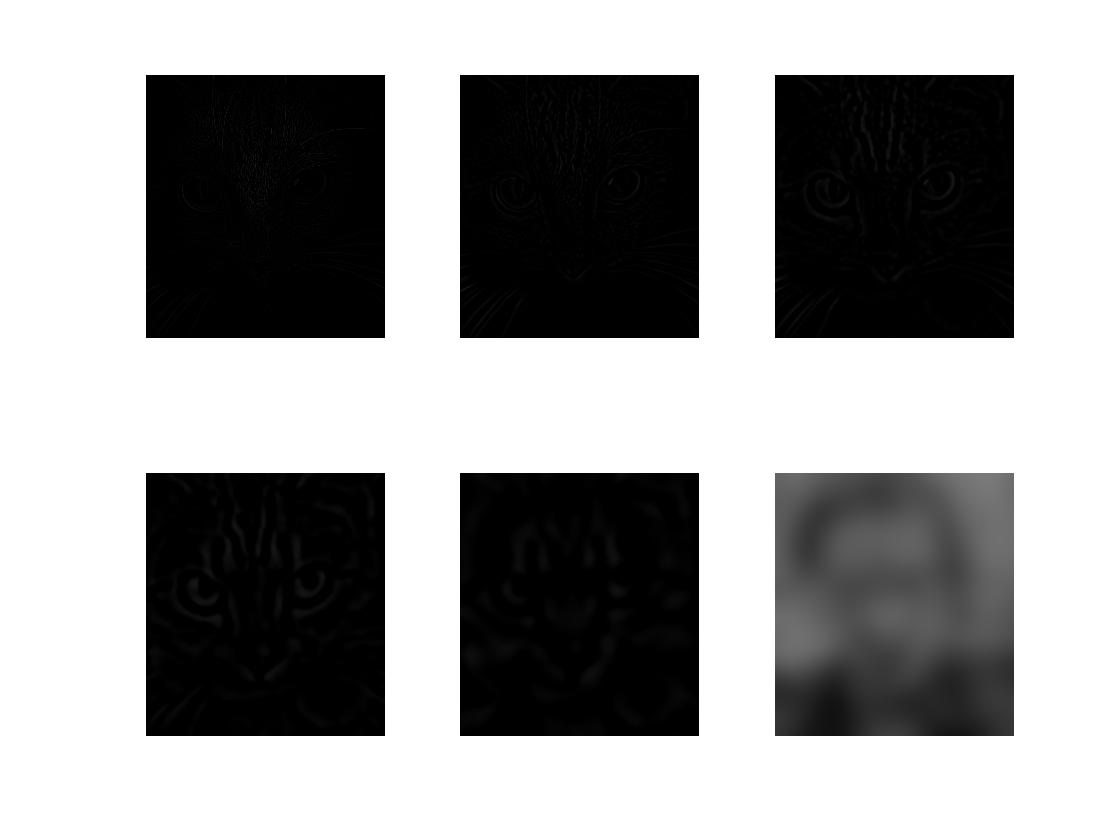

Gaussian stacks and Laplacian stacks

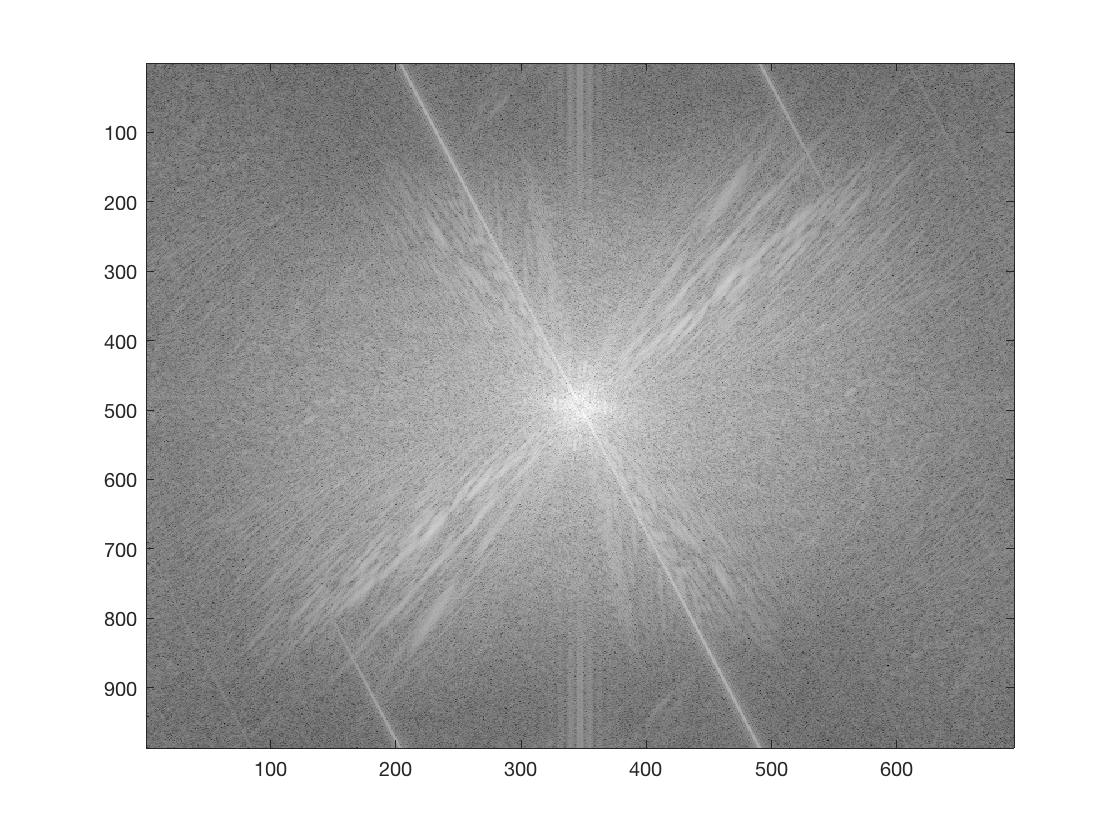

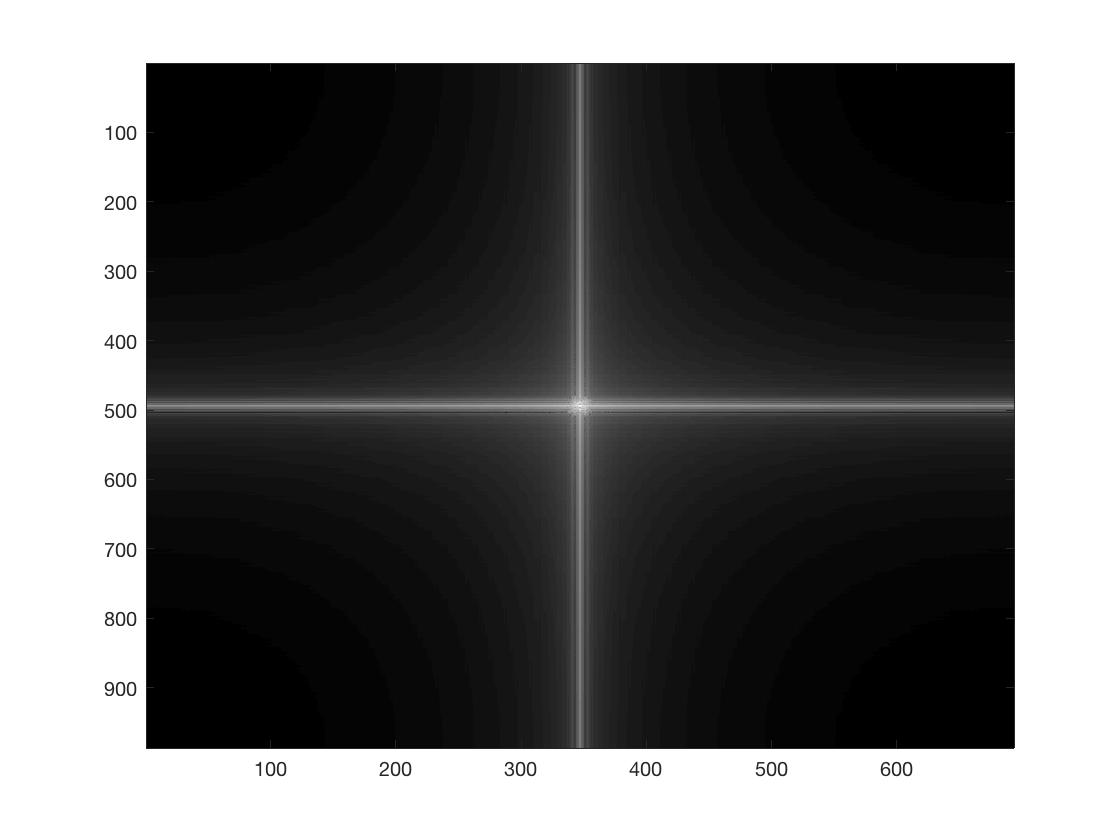

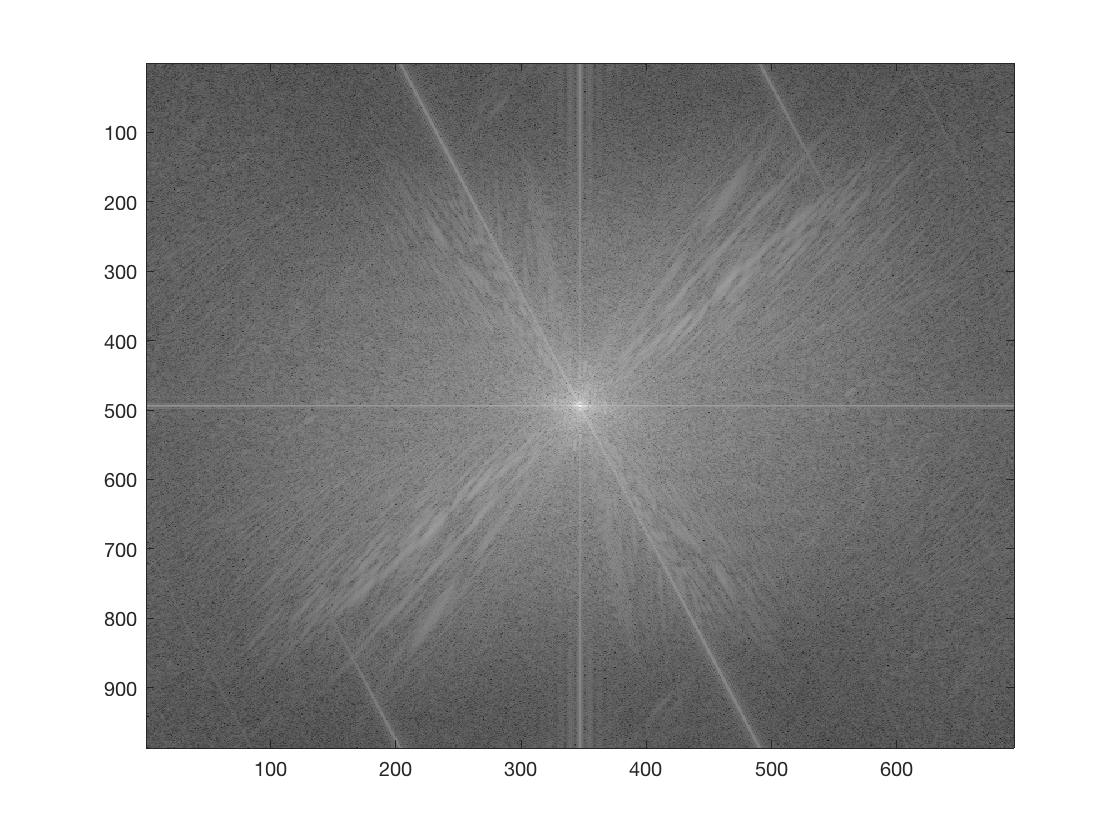

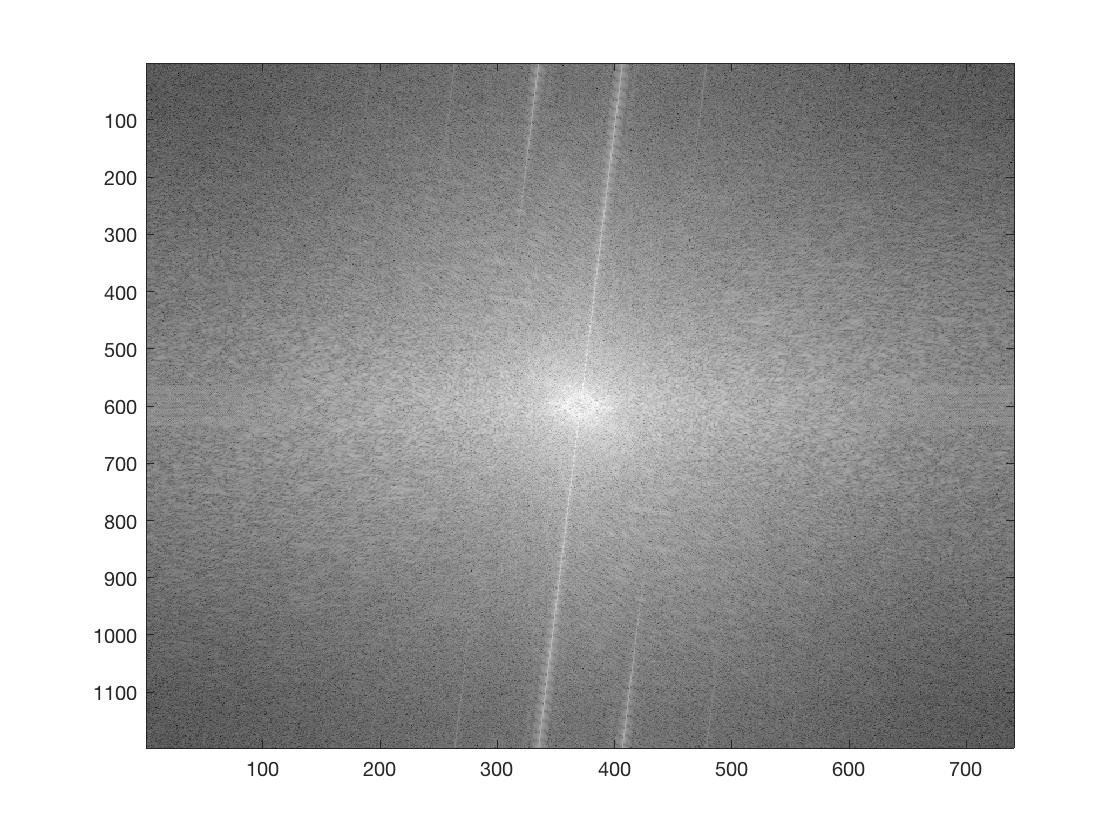

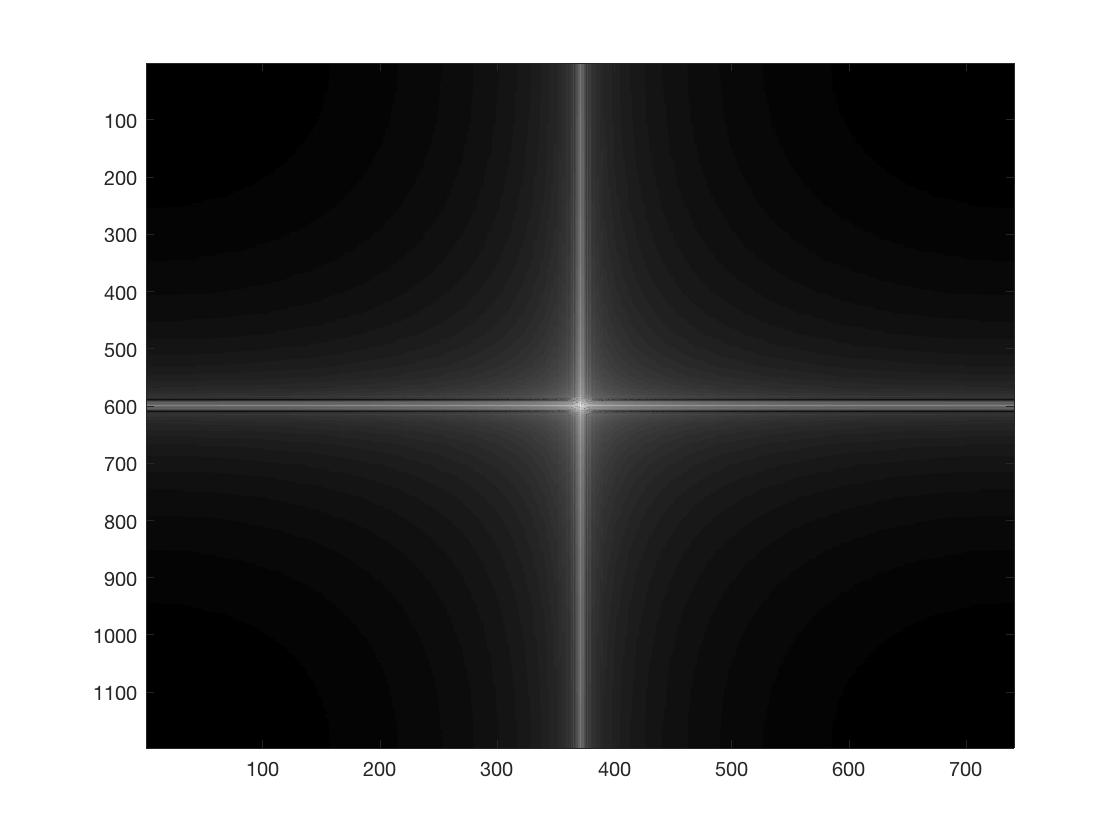

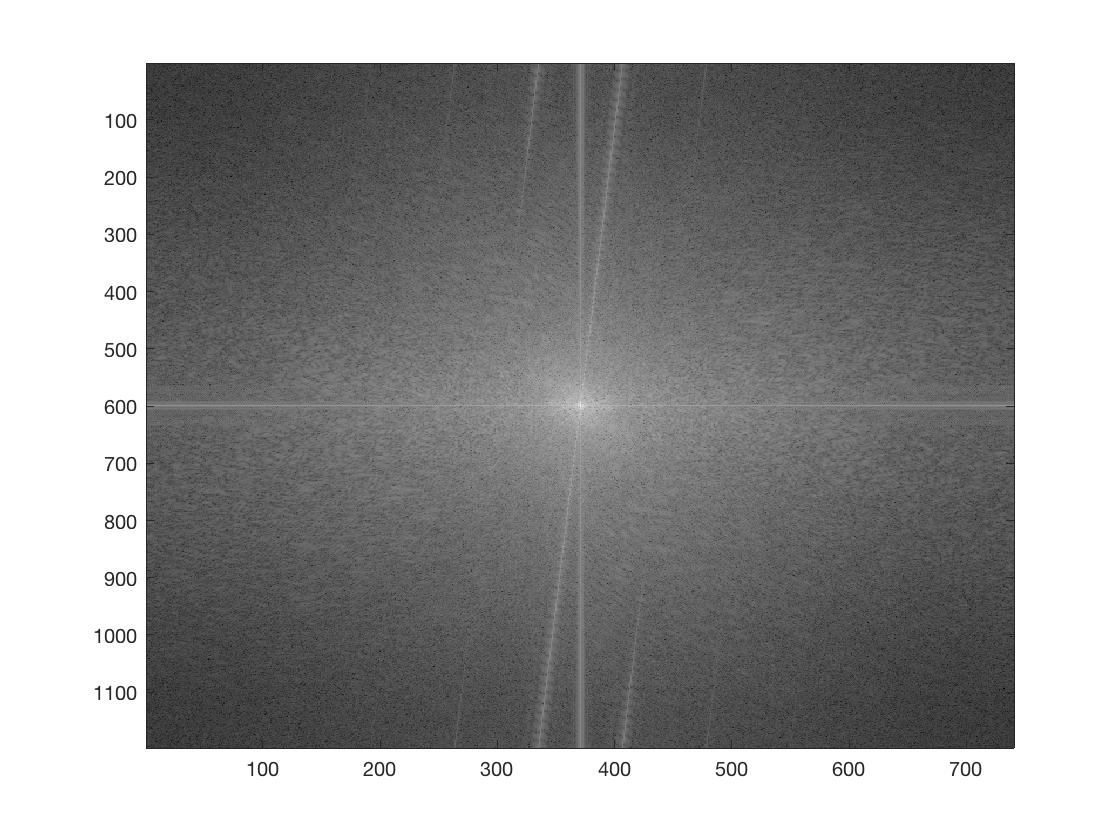

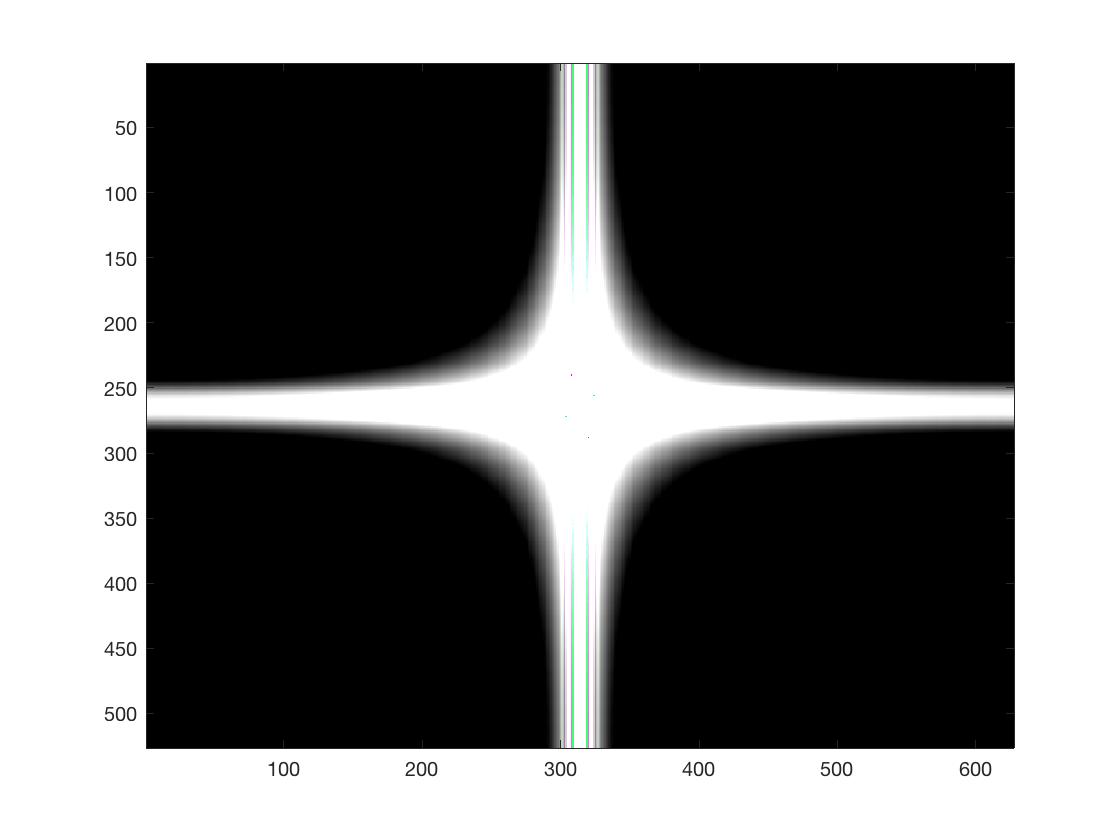

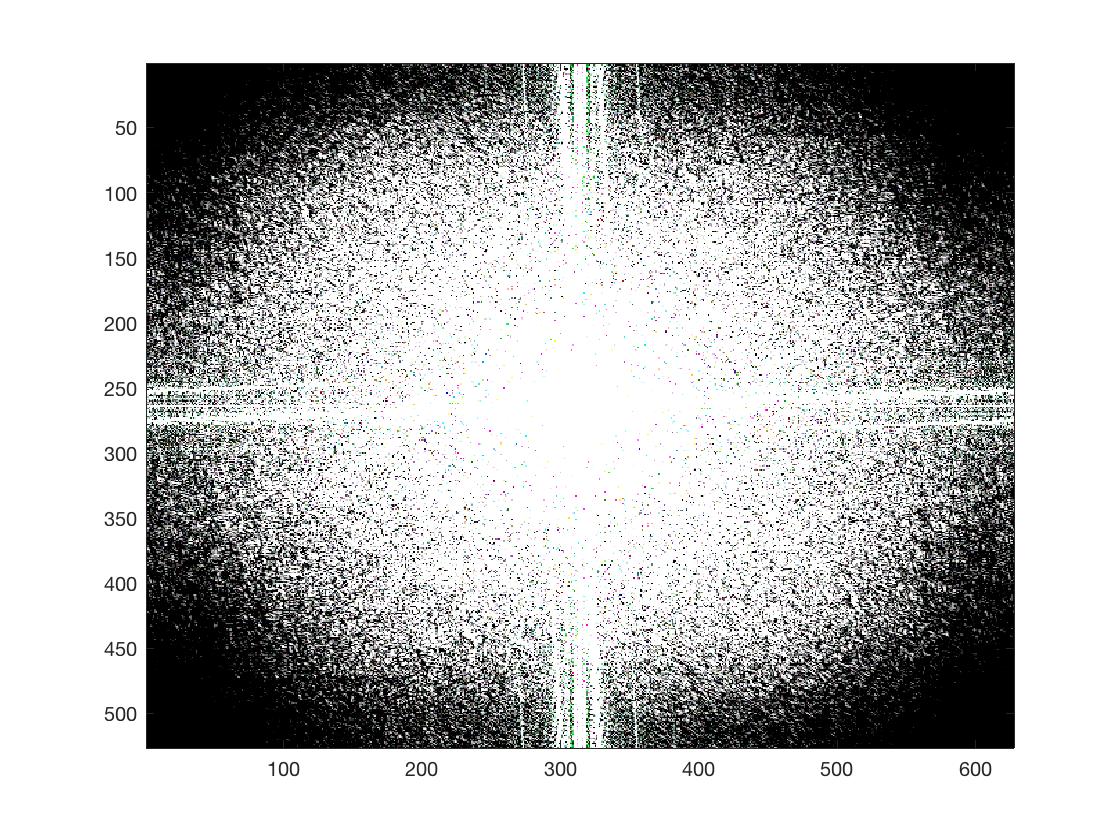

FFT for low-pass, high-pass and hybrid images
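A spectrum view like the ones captioned here can be produced with a log-magnitude Fourier transform. The sketch below assumes a grayscale float image; the name `log_magnitude_spectrum` and the small epsilon that guards against log(0) are illustrative choices.

```python
import numpy as np


def log_magnitude_spectrum(gray, eps=1e-8):
    """Log magnitude of the 2D FFT with the zero frequency shifted to the center."""
    spectrum = np.fft.fftshift(np.fft.fft2(gray))
    return np.log(np.abs(spectrum) + eps)
```

In such a plot, a low-pass image concentrates energy near the center, a high-pass image has a dark center, and the hybrid shows both.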

Grayscale pictures of the photos I selected

Gaussian pyramids and Laplacian pyramids

Gaussian stacks and Laplacian stacks

FFT for low-pass, high-pass and hybrid images

Now we use color to enhance the effect.

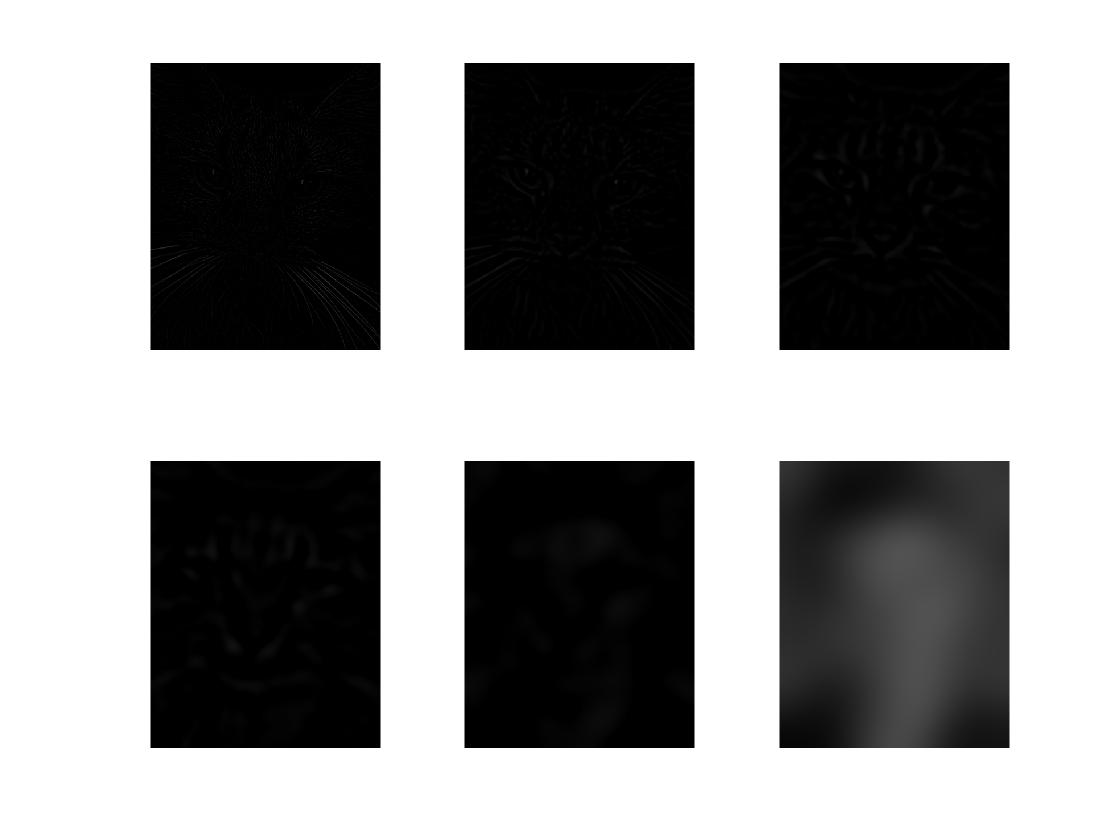

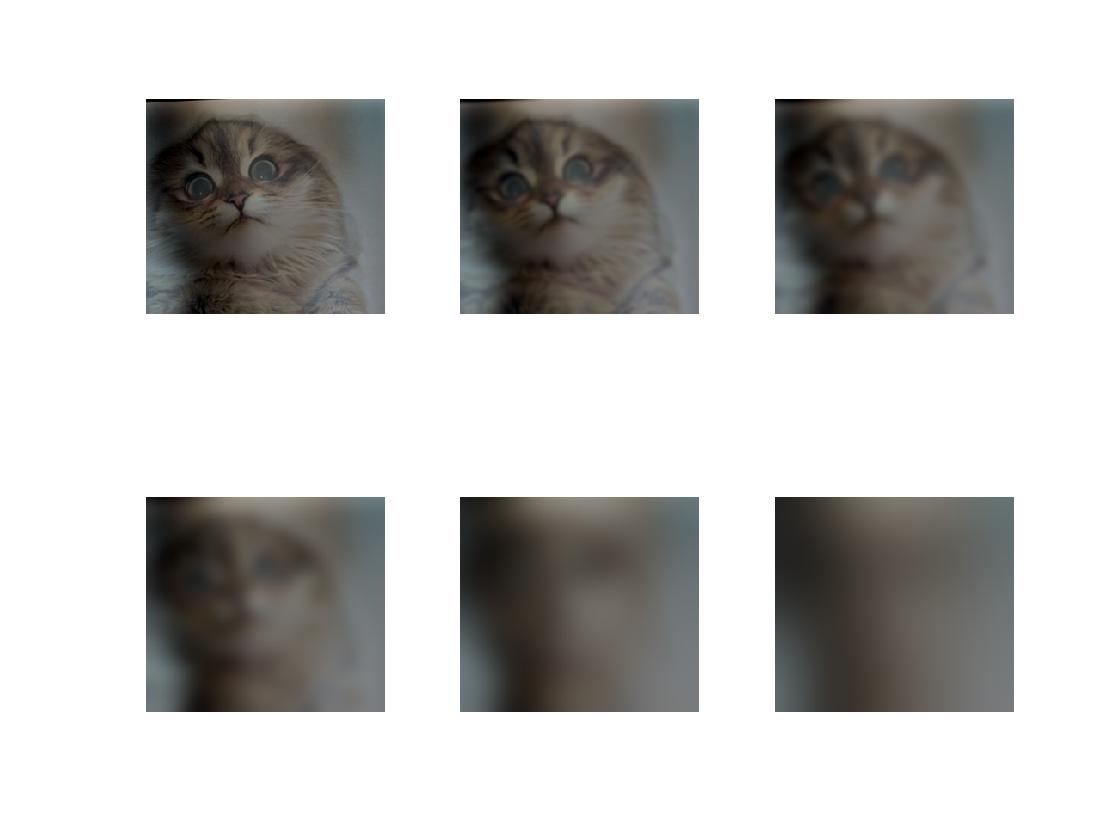

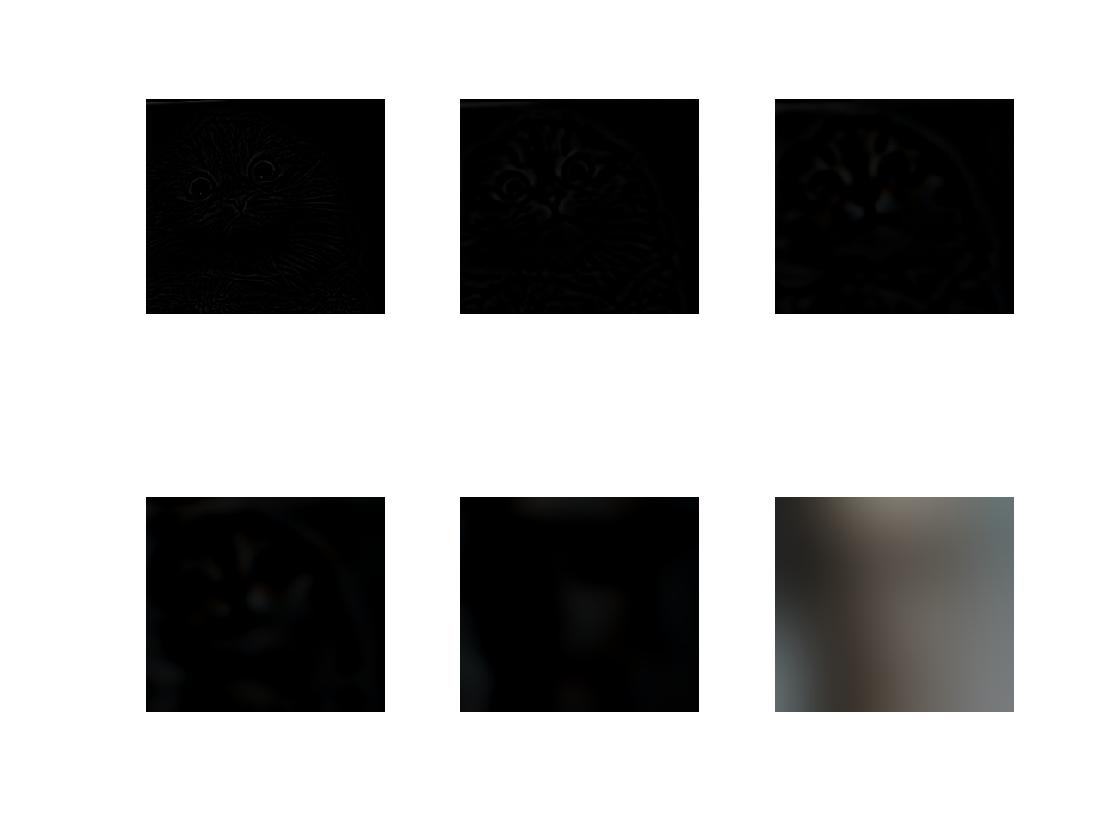

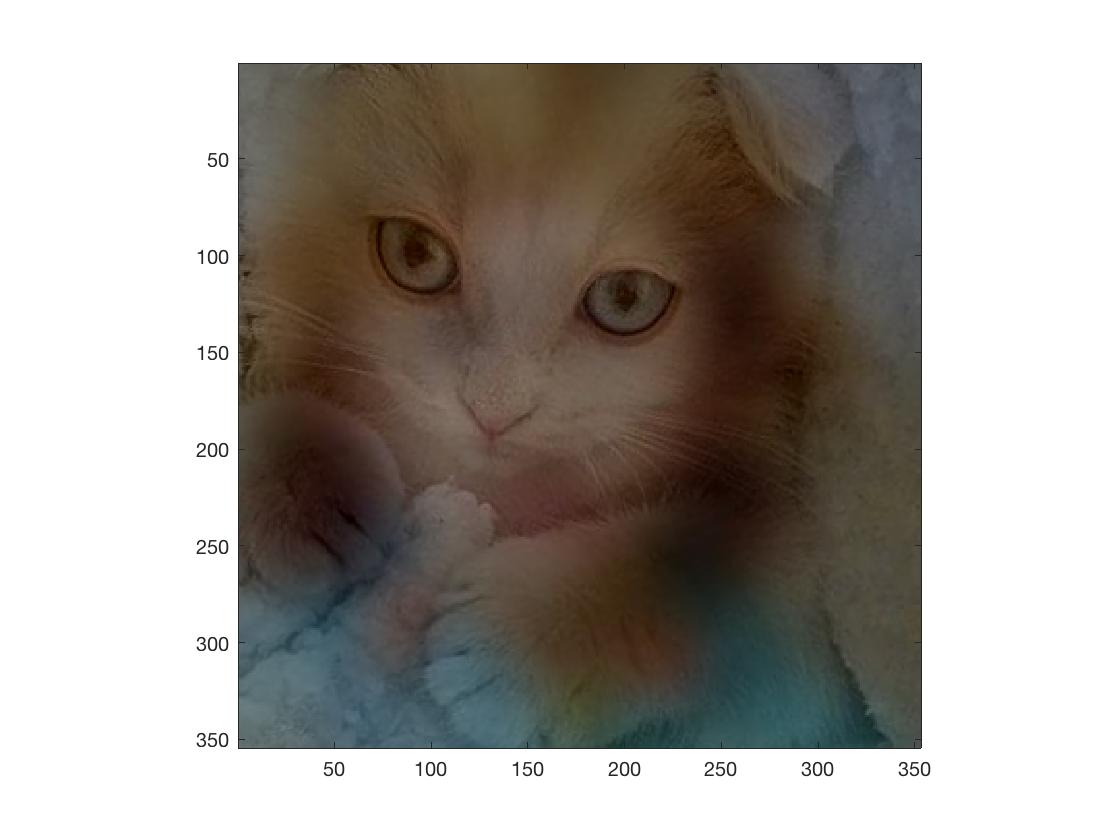

Color pictures of the selected cats

Gaussian pyramids and Laplacian pyramids

Gaussian stacks and Laplacian stacks

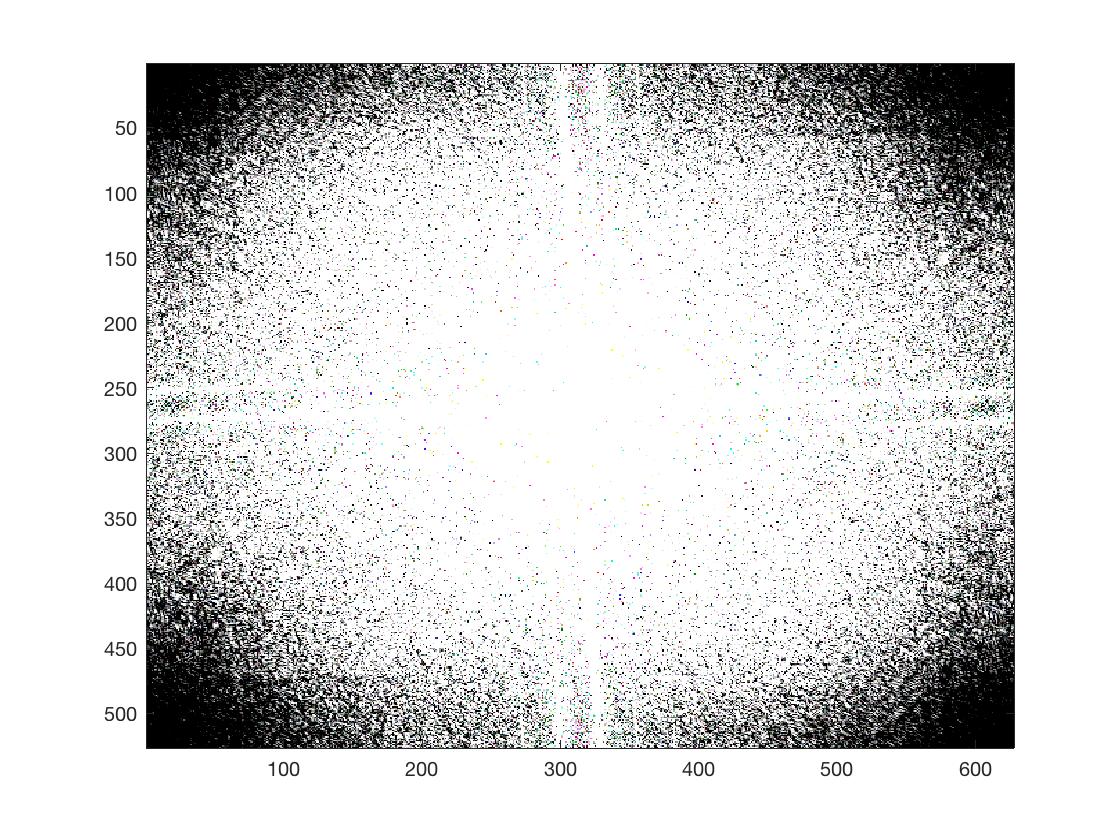

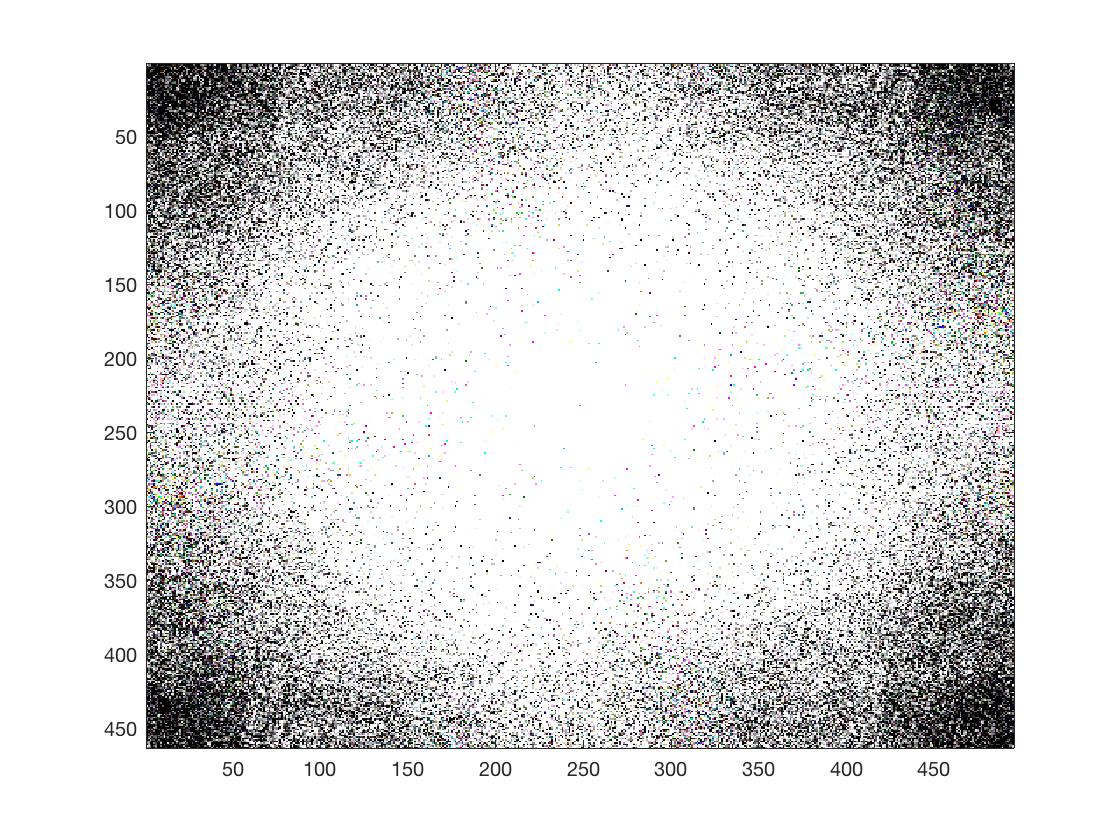

FFT for low-pass, high-pass and hybrid images

Color pictures of the selected cats

Gaussian pyramids and Laplacian pyramids

Gaussian stacks and Laplacian stacks

FFT for low-pass, high-pass and hybrid images

Part 2: Gradient Domain Fusion

This project explores gradient-domain processing, a simple technique with a broad set of applications including blending, tone-mapping, and non-photorealistic rendering (NPR). For the core project, we focus on "Poisson blending"; tone-mapping and NPR can be investigated as bells and whistles.

The primary goal of this assignment is to seamlessly blend an object or texture from a source image into a target image. The simplest method would be to copy and paste the pixels from one image directly into the other. Unfortunately, this creates very noticeable seams, even if the backgrounds are well matched. How can we get rid of these seams without doing too much perceptual damage to the source region?

One way to approach this is the Laplacian pyramid blending technique we implemented for the last project (and we compare the new results to the ones from Laplacian blending). Here we take a different approach. The insight is that people often care much more about the gradient of an image than about its overall intensity. So we can set up the problem as finding values for the target pixels that maximally preserve the gradient of the source region without changing any of the background pixels. Note that we are making a deliberate decision here to ignore the overall intensity! So a green hat could turn red, but it will still look like a hat.

We can formulate our objective as a least squares problem: given the pixel intensities of the source image "s" and of the target image "t", we solve for new intensity values "v" within the source region "S".

The method presented above is called "Poisson blending".

Part 2.1: Toy Problem

The implementation for gradient domain processing is not complicated, but it is easy to make a mistake, so let's start with a toy example. In this example we'll compute the x and y gradients from an image s, then use all the gradients, plus one pixel intensity, to reconstruct an image v.
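The toy reconstruction can be sketched as a sparse least squares system with one equation per x-gradient, one per y-gradient, and one extra equation pinning the top-left intensity. This is a sketch under assumptions: the function name `reconstruct_from_gradients` is hypothetical, and solving the normal equations directly with `spsolve` is one convenient choice that suits small images rather than necessarily the solver used in this project.

```python
import numpy as np
from scipy.sparse import lil_matrix
from scipy.sparse.linalg import spsolve


def reconstruct_from_gradients(s):
    """Rebuild image s from its x/y gradients plus the top-left intensity."""
    s = np.asarray(s, dtype=np.float64)
    h, w = s.shape
    idx = np.arange(h * w).reshape(h, w)   # pixel -> variable index
    n_eq = h * (w - 1) + (h - 1) * w + 1
    A = lil_matrix((n_eq, h * w))
    b = np.zeros(n_eq)
    e = 0
    for y in range(h):
        for x in range(w):
            if x + 1 < w:                  # v(x+1, y) - v(x, y) = s(x+1, y) - s(x, y)
                A[e, idx[y, x + 1]] = 1
                A[e, idx[y, x]] = -1
                b[e] = s[y, x + 1] - s[y, x]
                e += 1
            if y + 1 < h:                  # v(x, y+1) - v(x, y) = s(x, y+1) - s(x, y)
                A[e, idx[y + 1, x]] = 1
                A[e, idx[y, x]] = -1
                b[e] = s[y + 1, x] - s[y, x]
                e += 1
    A[e, idx[0, 0]] = 1                    # pin one pixel intensity
    b[e] = s[0, 0]
    A = A.tocsr()
    # Least squares via the normal equations A^T A v = A^T b.
    v = spsolve((A.T @ A).tocsc(), A.T @ b)
    return v.reshape(h, w)
```

If the system is set up correctly, the reconstructed image should match the input up to numerical error, which makes this a useful check before moving on to blending.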

Part 2.2: Poisson Blending

Step 1: Select source and target regions. Select the boundaries of a region in the source image and specify a location in the target image where it should be blended. Then, transform (e.g., translate) the source image so that indices of pixels in the source and target regions correspond.

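Step 2: Compare the source region with the background image and resolve the border by minimizing the following objective, where N_i denotes the 4-neighbors of pixel i:

$$
\mathbf{v} = \arg\min_{\mathbf{v}} \sum_{i \in S,\ j \in N_i \cap S} \big( (v_i - v_j) - (s_i - s_j) \big)^2 + \sum_{i \in S,\ j \in N_i,\ j \notin S} \big( (v_i - t_j) - (s_i - s_j) \big)^2
$$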

Here, each "i" is a pixel in the source region "S", and each "j" is a 4-neighbor of "i". Each summation guides the gradient values to match those of the source region. In the first summation, the gradient is over two variable pixels; in the second, one pixel is variable and one is in the fixed target region.
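The two kinds of terms described above can be sketched for a single channel as follows. The sketch assumes that `source`, `target`, and a boolean `mask` share one shape (the source has already been translated into place) and that the mask does not touch the image border. The name `poisson_blend` is hypothetical. Instead of stacking one least squares row per pixel pair, it solves the discrete Poisson equation, which is the stationarity condition of the objective when each neighboring pair is counted once.

```python
import numpy as np
from scipy.sparse import lil_matrix
from scipy.sparse.linalg import spsolve


def poisson_blend(source, target, mask):
    """Blend one channel of `source` into `target` where `mask` is True."""
    source = np.asarray(source, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    ys, xs = np.nonzero(mask)
    n = len(ys)
    var = -np.ones(mask.shape, dtype=int)
    var[ys, xs] = np.arange(n)             # pixel -> unknown index
    A = lil_matrix((n, n))
    b = np.zeros(n)
    for k, (y, x) in enumerate(zip(ys, xs)):
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            A[k, k] += 1
            b[k] += source[y, x] - source[ny, nx]   # source gradient s_i - s_j
            if mask[ny, nx]:
                A[k, var[ny, nx]] -= 1              # neighbor j is also unknown
            else:
                b[k] += target[ny, nx]              # neighbor j is fixed to t_j
    v = spsolve(A.tocsc(), b)
    out = target.copy()
    out[ys, xs] = v
    return out
```

For a color image, one option is to run the function on each channel separately and clip the result to the valid range.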

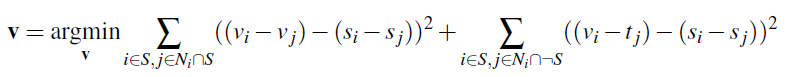

First, we blend a cute penguin into the snow.

The penguin blends into the snow almost perfectly.

Then, we blend the hurricane in front of the church.

In this case, because the background is grayscale, the hurricane does not blend into it perfectly.

Next, we try to blend the boat onto the water.

In this case, the colors around the boat differ strongly in hue from the background, so the blended colors look a little weird.

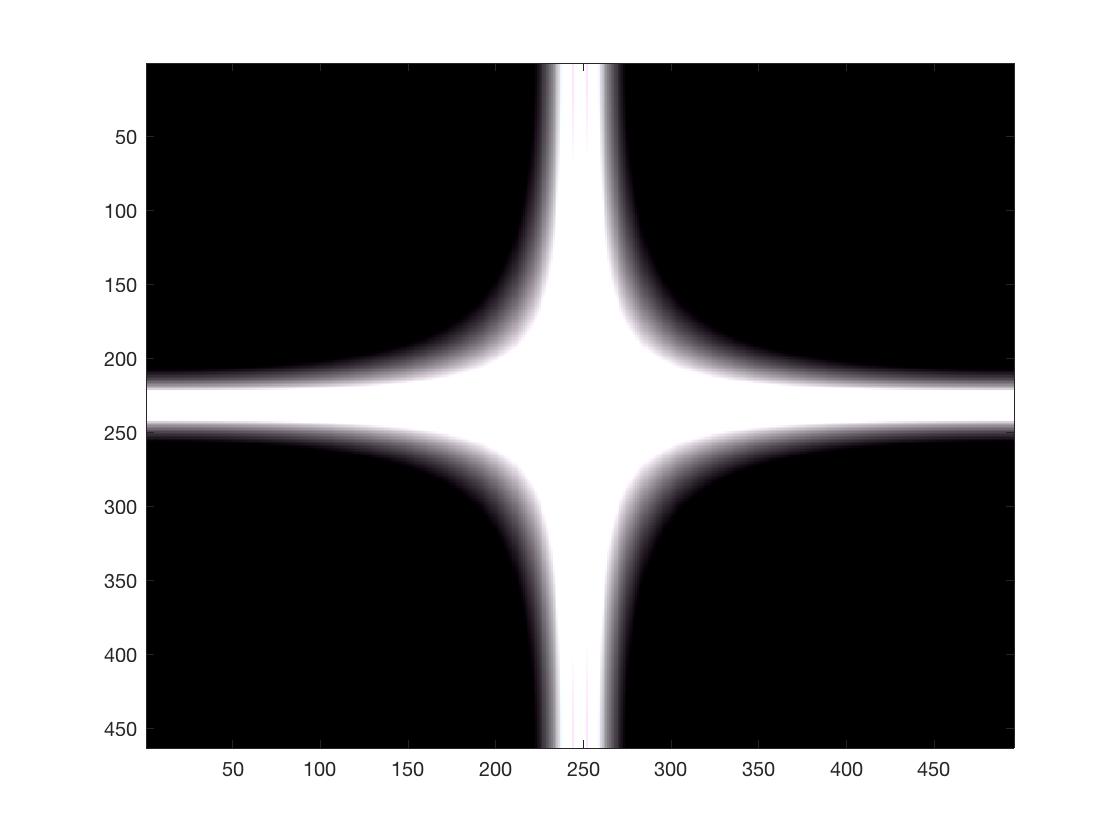

Comparing multiresolution blending with naive blending and Poisson blending

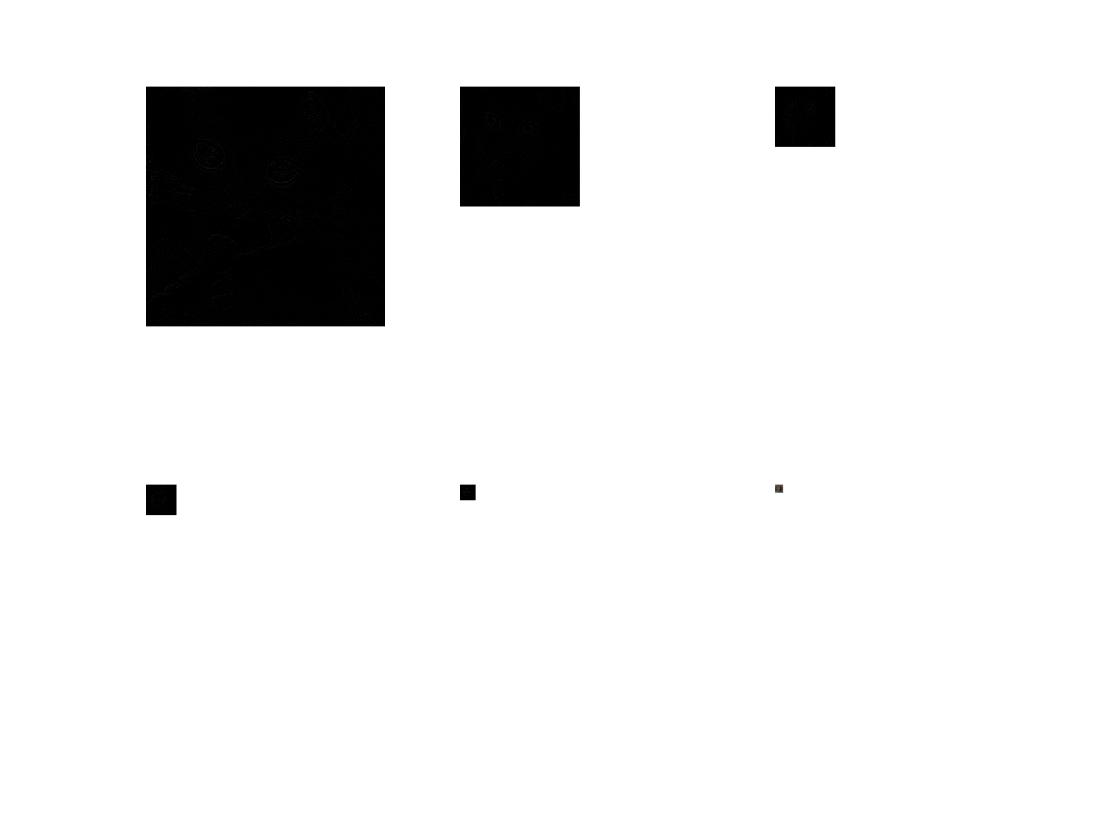

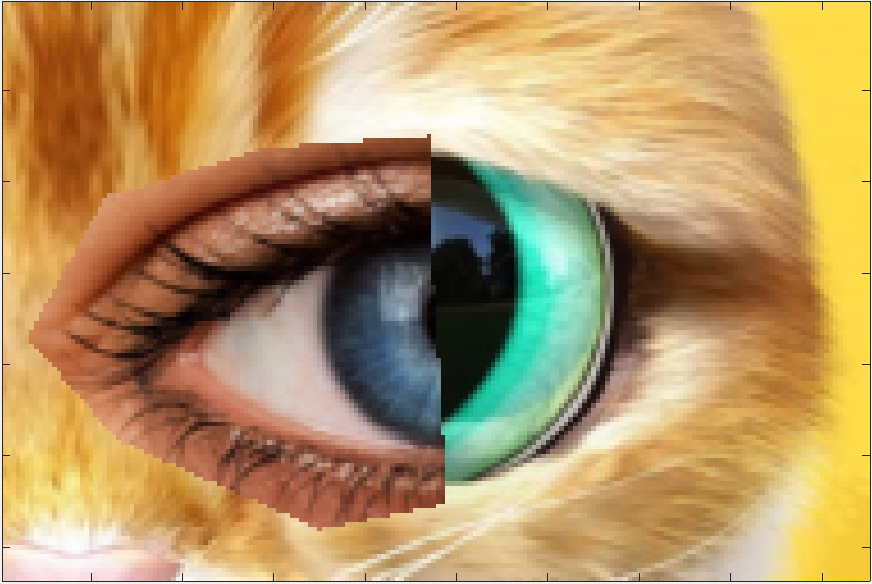

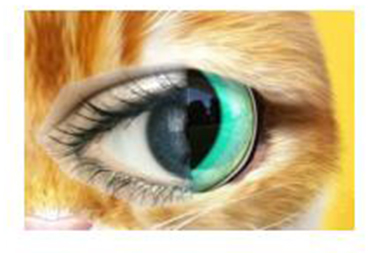

Human eyes and cat eyes

Naive Blend (left) Multiresolution Blend (middle) Poisson Blend (right)
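For reference, the two baselines in this comparison can be sketched as below, assuming aligned grayscale float images and a boolean mask of the same shape. The names `naive_blend` and `multires_blend`, the number of levels, and the sigma schedule are illustrative assumptions. The multiresolution version uses Laplacian stacks without downsampling rather than true pyramids.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def naive_blend(source, target, mask):
    """Copy source pixels into the target wherever the mask is True."""
    return np.where(mask, source, target)


def multires_blend(source, target, mask, levels=4, sigma=2.0):
    """Blend each frequency band with a mask blurred to match that band."""
    src = np.asarray(source, dtype=np.float64)
    tgt = np.asarray(target, dtype=np.float64)
    m = np.asarray(mask, dtype=np.float64)
    out = np.zeros_like(src)
    prev_s, prev_t = src, tgt
    for i in range(levels):
        s = sigma * 2 ** i
        blur_s, blur_t = gaussian_filter(src, s), gaussian_filter(tgt, s)
        soft = gaussian_filter(m, s)       # softer mask for coarser bands
        out += soft * (prev_s - blur_s) + (1 - soft) * (prev_t - blur_t)
        prev_s, prev_t = blur_s, blur_t
    out += soft * prev_s + (1 - soft) * prev_t   # low-frequency residual
    return out
```

The naive version keeps a hard seam at the mask edge, while the multiresolution version spreads the transition over a wider region for coarser bands.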