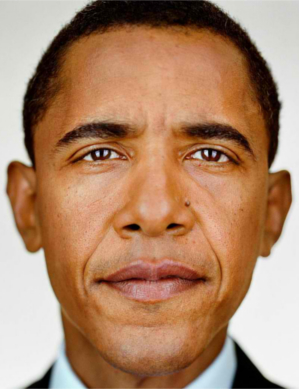

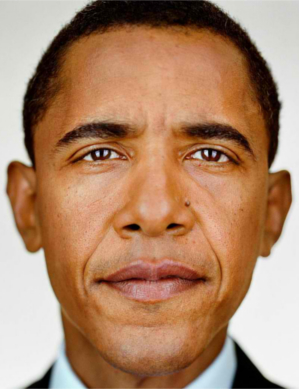

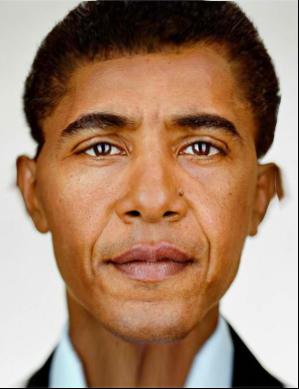

| Me | Obama |
|---|---|

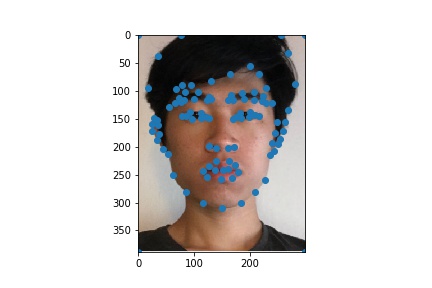

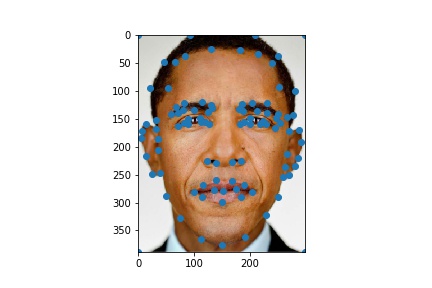

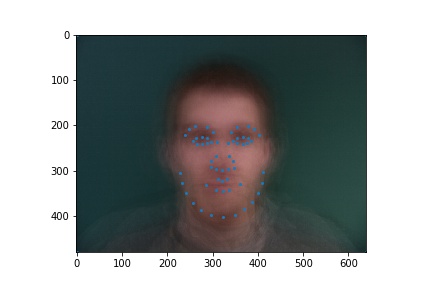

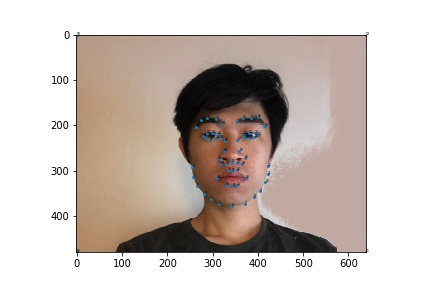

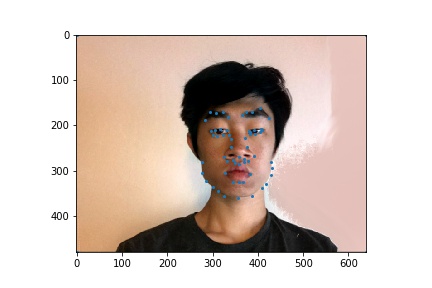

I started by defining 98 corresponding points between a picture of me and one of Obama:


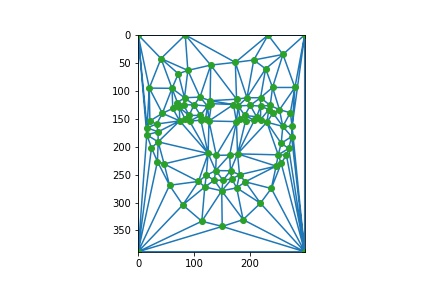

I create the mean points by averaging the two sets of points, then compute a Delaunay triangulation of the mean points. Applying this triangulation to a set of points then defines a "shape." Here is the shape with the mean points:
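The averaging and triangulation step can be sketched as follows. This is a minimal sketch that assumes the points are stored as `(K, 2)` NumPy arrays in the same order for both images, and uses SciPy's `Delaunay`. The function name `mean_shape_triangulation` is hypothetical. Because the triangles are returned as vertex-index triples, the same triples can be applied to either image's points to get corresponding triangles.

```python
import numpy as np
from scipy.spatial import Delaunay


def mean_shape_triangulation(pts_a, pts_b):
    """Average two (K, 2) point sets and triangulate the mean shape.

    Returns the mean points and an (M, 3) array of vertex indices. The same
    index triples can be reused on pts_a and pts_b so triangles correspond.
    """
    pts_a = np.asarray(pts_a, dtype=float)
    pts_b = np.asarray(pts_b, dtype=float)
    mean_pts = (pts_a + pts_b) / 2.0
    triangles = Delaunay(mean_pts).simplices  # indices into the point list
    return mean_pts, triangles
```

Triangulating the mean points, rather than either original set, keeps the triangles reasonably shaped for both images.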

Each image is then warped to the mean shape by inverse warping each triangle individually. In particular, for the $i$th triangle I compute a matrix $T^i$ such that $$ T^i \begin{bmatrix} x_{dst}\\ y_{dst}\\ 1 \end{bmatrix} = \begin{bmatrix} x_{src}\\ y_{src}\\ 1 \end{bmatrix} $$ for every $(x_{dst}, y_{dst})$ in the triangle. To find the value of each pixel $(x_{dst}, y_{dst})$ in triangle $i$, I use $T^i$ to compute $(x_{src}, y_{src})$ and sample the source image at that location.

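$T^i$ is a $3\times 3$ matrix, but its bottom row is known to be $\begin{bmatrix} 0 & 0 & 1 \end{bmatrix}$, so there are only 6 parameters to recover. Each of the three pairs of corresponding triangle vertices gives 2 equations (one for $x$, one for $y$), for 6 equations in total, and solving that linear system gives the parameters.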
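The 6-equation system can be written out directly. This sketch assumes each triangle is given as a `(3, 2)` array of `(x, y)` vertices; `affine_from_triangles` is a hypothetical name. Each vertex pair contributes one row for $x_{src}$ and one for $y_{src}$, and the fixed bottom row is appended afterwards.

```python
import numpy as np


def affine_from_triangles(dst_tri, src_tri):
    """Return the 3x3 T with T @ [x_dst, y_dst, 1] = [x_src, y_src, 1].

    dst_tri and src_tri are (3, 2) arrays of corresponding (x, y) vertices.
    """
    dst_tri = np.asarray(dst_tri, dtype=float)
    src_tri = np.asarray(src_tri, dtype=float)
    A = np.zeros((6, 6))
    rhs = np.zeros(6)
    for k, ((xd, yd), (xs, ys)) in enumerate(zip(dst_tri, src_tri)):
        # x_src = a * x_dst + b * y_dst + c
        A[2 * k] = [xd, yd, 1, 0, 0, 0]
        rhs[2 * k] = xs
        # y_src = d * x_dst + e * y_dst + f
        A[2 * k + 1] = [0, 0, 0, xd, yd, 1]
        rhs[2 * k + 1] = ys
    params = np.linalg.solve(A, rhs)
    # The bottom row is fixed at [0, 0, 1]; only the top two rows were unknown.
    return np.vstack([params.reshape(2, 3), [0.0, 0.0, 1.0]])
```

For example, mapping the unit triangle to one scaled by 2 and shifted by $(2, 3)$ recovers $\begin{bmatrix} 2 & 0 & 2 \\ 0 & 2 & 3 \\ 0 & 0 & 1 \end{bmatrix}$.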

Here is the result of warping each image to the mean shape, along with the midway face made by averaging the two warped images. There are imperfections due to the large differences in hair and ear shape between the two images, but the areas around the eyes, nose, and mouth work out fairly well:
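A compact version of the per-triangle inverse warp and the midway face might look like the sketch below. It is a sketch under stated assumptions, not the writeup's code: points are `(x, y)` arrays, the output has the same size as the input, pixels outside every triangle stay 0, and sampling is nearest-neighbor because the writeup does not say which interpolation it used. Here $T^i$ is computed as $S D^{-1}$ from the homogeneous vertex matrices, which gives the same matrix as solving the 6-equation system. All function names are hypothetical.

```python
import numpy as np


def affine_from_triangles(dst_tri, src_tri):
    # T @ D = S in homogeneous coordinates, so T = S @ D^{-1}.
    D = np.vstack([np.asarray(dst_tri, dtype=float).T, np.ones(3)])
    S = np.vstack([np.asarray(src_tri, dtype=float).T, np.ones(3)])
    return S @ np.linalg.inv(D)


def warp_to_shape(img, src_pts, dst_pts, triangles):
    """Inverse-warp img from src_pts to dst_pts, one triangle at a time."""
    img = np.asarray(img, dtype=float)
    src_pts = np.asarray(src_pts, dtype=float)
    dst_pts = np.asarray(dst_pts, dtype=float)
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    ys, xs = np.mgrid[0:h, 0:w]
    xs, ys = xs.ravel(), ys.ravel()
    coords = np.vstack([xs, ys, np.ones(h * w)])  # homogeneous (x, y, 1), 3 x N
    for tri in np.asarray(triangles):
        dst_tri, src_tri = dst_pts[tri], src_pts[tri]
        # Barycentric coordinates of every output pixel w.r.t. the dst triangle.
        D = np.vstack([dst_tri.T, np.ones(3)])
        bary = np.linalg.solve(D, coords)
        inside = np.all(bary >= -1e-9, axis=0)
        # Inverse warp: map destination pixels back into the source image.
        src = affine_from_triangles(dst_tri, src_tri) @ coords[:, inside]
        sx = np.clip(np.round(src[0]).astype(int), 0, w - 1)
        sy = np.clip(np.round(src[1]).astype(int), 0, h - 1)
        out[ys[inside], xs[inside]] = img[sy, sx]
    return out


def midway_face(img_a, pts_a, img_b, pts_b, triangles):
    """Warp both images to the mean shape and average them."""
    mean_pts = (np.asarray(pts_a, float) + np.asarray(pts_b, float)) / 2.0
    warped_a = warp_to_shape(img_a, pts_a, mean_pts, triangles)
    warped_b = warp_to_shape(img_b, pts_b, mean_pts, triangles)
    return 0.5 * warped_a + 0.5 * warped_b
```

Iterating over output pixels and mapping them back (rather than pushing source pixels forward) guarantees every destination pixel inside a triangle gets exactly one value, with no holes.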


The morph sequence is produced by warping to a linear combination of the two shapes, controlled by a parameter; the cross-dissolve is parameterized the same way. I simply used the fraction of elapsed time steps over the total duration to parameterize both. Here is the final result:
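The parameterization can be sketched as below. The denominator `num_frames - 1` is an assumption chosen so that the first frame is exactly image A and the last is exactly image B; the writeup only says "the fraction of the time step over the total duration." Function names are hypothetical, and each frame still needs both images warped to `intermediate_shape(...)` (for example with a per-triangle inverse warp) before `cross_dissolve` is applied.

```python
import numpy as np


def morph_parameters(num_frames):
    """Warp/dissolve fraction for each frame: t / (num_frames - 1)."""
    if num_frames < 2:
        raise ValueError("need at least two frames")
    return np.arange(num_frames) / (num_frames - 1)


def intermediate_shape(pts_a, pts_b, warp_frac):
    """Linear combination of the two shapes controlled by warp_frac."""
    pts_a = np.asarray(pts_a, dtype=float)
    pts_b = np.asarray(pts_b, dtype=float)
    return (1.0 - warp_frac) * pts_a + warp_frac * pts_b


def cross_dissolve(warped_a, warped_b, dissolve_frac):
    """Blend two images already warped to the same intermediate shape."""
    return (1.0 - dissolve_frac) * warped_a + dissolve_frac * warped_b
```

Using one parameter for both the warp and the dissolve, as the writeup does, keeps shape and color changing in lockstep.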

I used the linked Danes dataset, but only the photos of males, since that makes the later parts of the homework, where I transform my own picture, easier. The mean face is created by first warping every face to the mean shape and then averaging the colors. Here is the mean shape, overlaid on a background made by merely averaging the colors without warping first:

As you can see, merely averaging the colors without warping is not enough. Here is the mean face with the warping:

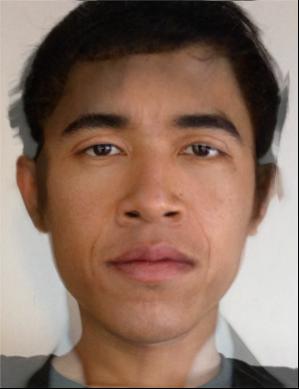

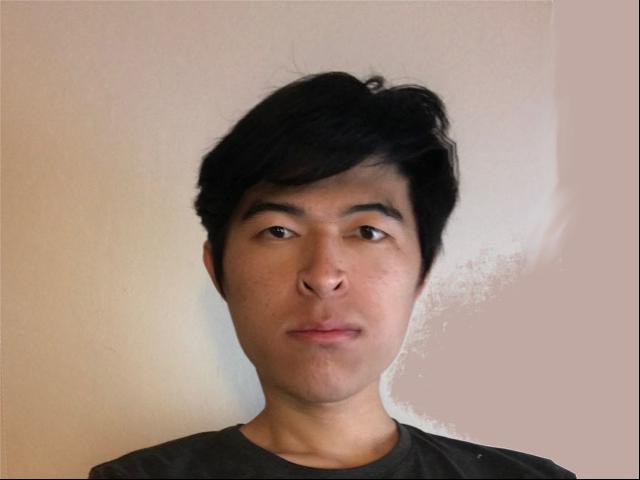

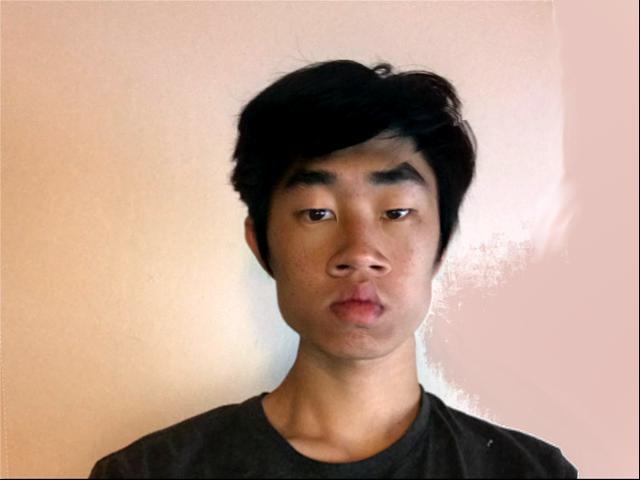
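The two averages compared above can be sketched as follows. This assumes every face has landmarks in the same order, and it takes the warp as a parameter: `warp(img, src_pts, dst_pts)` is a hypothetical hook standing in for a per-triangle inverse warp (with the triangulation captured in a closure). The function names are also hypothetical.

```python
import numpy as np


def mean_face(images, shapes, warp):
    """Warp every face to the mean shape, then average the colors.

    images: list of (H, W) or (H, W, C) arrays
    shapes: list of (K, 2) landmark arrays, same point order for every face
    warp:   callable warp(img, src_pts, dst_pts) -> img warped to dst_pts
    """
    shapes = np.asarray(shapes, dtype=float)
    mean_shape = shapes.mean(axis=0)
    warped = [warp(img, pts, mean_shape) for img, pts in zip(images, shapes)]
    return mean_shape, np.mean(np.asarray(warped, dtype=float), axis=0)


def naive_mean(images):
    """Color average without warping first (the blurry baseline)."""
    return np.mean(np.asarray(images, dtype=float), axis=0)
```

The only difference between the two is the warp: without it, features such as eyes and mouths sit at different pixel locations in each photo and blur together when averaged.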

To transform my own photo, I labeled it with points in the same order as the dataset. I didn't center myself well when I took the original photo, so I had to (poorly) Photoshop in some extra pixels on the right-hand side:

This can then be warped to the average shape from the dataset:

The average face from the dataset can also be warped to my shape:

To produce a caricature, I took the difference vector between my shape and the mean shape, as well as the difference between my colors and the mean colors. I then added each difference back onto my original, scaled by a factor: $0.75$ for the shape difference and $0.25$ for the color difference:

Here is the same result with the shape overlaid, for illustration:
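The caricature arithmetic amounts to extrapolating away from the mean. In this sketch, `my_colors` is assumed to be my face already warped to the mean shape, so that it lines up pixel-for-pixel with the mean face; the final image would then be obtained by warping `new_colors` from the mean shape to `new_pts`. That alignment step is an assumption, and the names are hypothetical. The default coefficients are the $0.75$ and $0.25$ from the text.

```python
import numpy as np


def extrapolate(mine, mean, alpha):
    """Push `mine` further from `mean`: mine + alpha * (mine - mean)."""
    mine = np.asarray(mine, dtype=float)
    return mine + alpha * (mine - np.asarray(mean, dtype=float))


def caricature(my_pts, mean_pts, my_colors, mean_colors,
               shape_alpha=0.75, color_alpha=0.25):
    """Exaggerate shape and color differences from the dataset mean."""
    new_pts = extrapolate(my_pts, mean_pts, shape_alpha)
    new_colors = extrapolate(my_colors, mean_colors, color_alpha)
    return new_pts, new_colors
```

For instance, a landmark at $(2, 2)$ whose mean position is $(1, 1)$ moves to $(2.75, 2.75)$.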

A similar approach can be applied to make faces look happy. We can select only the happy faces and compute an average happy face, just as the average face was computed earlier:

Taking the difference vector in both shape and color and adding it back to my picture creates a happy transform. Here is the result of adding back only the color difference, scaled by $0.25$. There is not much difference, which makes sense because what makes a face look happy is primarily the shape around the mouth and eyes, not the color:

However, if we add only the shape difference vector, the effect is obvious:

Here it is with both shape and color transformed:
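The happy transform can be sketched as moving my face along the direction from a base average toward the happy average. Two assumptions are labeled here: the "difference vector" is taken as happy mean minus the overall mean (the writeup does not name the second term), and the shape scale is a free parameter because only the color scale ($0.25$) is stated. `subset_mean` and `add_attribute` are hypothetical names, and the happy mask would come from the dataset's expression labels.

```python
import numpy as np


def subset_mean(arrays, mask):
    """Average only the entries selected by a boolean mask (e.g. happy faces)."""
    arrays = np.asarray(arrays, dtype=float)
    return arrays[np.asarray(mask, dtype=bool)].mean(axis=0)


def add_attribute(mine, attr_mean, base_mean, alpha):
    """Move `mine` along (attr_mean - base_mean), scaled by alpha."""
    direction = np.asarray(attr_mean, dtype=float) - np.asarray(base_mean, dtype=float)
    return np.asarray(mine, dtype=float) + alpha * direction
```

Setting the color `alpha` to 0 gives the shape-only version, and setting the shape `alpha` to 0 gives the color-only version.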

First, I was unable to use my own picture for PCA with the Danes dataset because I do not look similar enough to the faces in the dataset, so instead I "held out" one face from the dataset to be the subject of the caricature. Everything is done in grayscale. Here is the face I used:

I can create the caricature with the method used before, i.e., the non-PCA method (color and shape coefficients of $0.25$ and $0.75$, respectively):

To do the caricature process using PCA, I first "flattened" both the color and shape data for each image into vectors. I stacked the color vectors side by side as the columns of a matrix $D$, and did the same for all the shape vectors. I then used SVD to compute $D = U\Sigma V^T$, where $r = \operatorname{rank}(D)$ and $U\in\mathbb{R}^{(wh) \times r}$ (for the color data of $w \times h$ grayscale images) is used to project data into the PCA space. I did this for both the color and the shape data. I then proceeded with the caricature process as before, but using the PCA coordinates instead of the standard coordinates, with the same coefficients as before.
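The SVD basis and the caricature in PCA coordinates can be sketched as below. Following the description, the vectors are stacked as columns and only the first $r = \operatorname{rank}(D)$ columns of $U$ are kept. The writeup does not mention subtracting the mean before the SVD, so this sketch does not center the data either (standard PCA usually does). Function names are hypothetical.

```python
import numpy as np


def pca_basis(vectors):
    """Stack flattened vectors as columns of D and keep U[:, :rank(D)]."""
    D = np.column_stack([np.ravel(v) for v in vectors]).astype(float)
    U, _, _ = np.linalg.svd(D, full_matrices=False)  # D = U @ diag(S) @ Vt
    r = np.linalg.matrix_rank(D)
    return U[:, :r]


def to_pca(U, x):
    """Project a flattened vector into PCA coordinates."""
    return U.T @ np.ravel(x)


def from_pca(U, c):
    """Map PCA coordinates back into the standard space."""
    return U @ c


def pca_caricature(U, face, mean, alpha):
    """Caricature computed entirely in PCA coordinates, then projected back."""
    c_face, c_mean = to_pca(U, face), to_pca(U, mean)
    return from_pca(U, c_face + alpha * (c_face - c_mean))
```

Any component of the held-out face that is not spanned by the columns of $U$ is discarded by `to_pca`, which is why the projected-back result can look unlike the original.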

Unfortunately, even here the dataset does not do a good job of representing the face. When I project the result back up into the standard coordinates, the result looks nothing like the original face:

As an alternative, I compute the caricature transformation in PCA space but apply it in the standard space. The reason this differs is that the PCA projection is not invertible: projecting the original image into the PCA space and then back into the standard space loses information. By only computing the transformation in the PCA space, the result is much more reasonable:
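One reading of "compute in PCA space, apply in standard space" is sketched below: the caricature offset is computed from PCA coordinates, mapped back with $U$, and added to the original, unprojected face. This interpretation is an assumption, as are the function names. The key property is that only the offset passes through the lossy projection, so the parts of the face that $U$ cannot represent are left untouched.

```python
import numpy as np


def pca_basis(vectors):
    """Stack flattened vectors as columns of D and keep U[:, :rank(D)]."""
    D = np.column_stack([np.ravel(v) for v in vectors]).astype(float)
    U, _, _ = np.linalg.svd(D, full_matrices=False)
    return U[:, :np.linalg.matrix_rank(D)]


def caricature_offset_via_pca(U, face, mean, alpha):
    """Compute the caricature offset in PCA coordinates, apply it to the raw face."""
    face = np.ravel(face).astype(float)
    c_delta = alpha * (U.T @ face - U.T @ np.ravel(mean))  # offset in PCA space
    return face + U @ c_delta  # applied in the standard space
```

By contrast, `U @ (U.T @ face)` alone already differs from `face` whenever the face has components outside the span of $U$, which is the information loss described above.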

This face looks more realistic than the caricature produced without the PCA method, but it's hard to say it looks better, especially because realism is not necessarily better in caricatures.