Running the Code

The code requires numpy and skimage (scikit-image). The results shown here were generated from images in the Stanford Light Field Archive.

Depth Refocusing

The objects which are far away from the camera do not vary their position significantly when the camera moves around while keeping the optical axis direction unchanged. The nearby objects, on the other hand, vary their position significantly across images. Averaging all the images in the grid without any shifting will produce an image which is sharp around the far-away objects but blurry around the nearby ones.

Similarly, shifting the images 'appropriately' before averaging allows one to focus on objects at different depths.

The shift-and-add algorithm is used for depth refocusing: for every sub-aperture image l(x, y), compute the (u, v) position corresponding to that image, shift the image by C*(u, v), and average the shifted images into an output image. C is chosen by hand here, but can also be chosen according to best alignment. A larger magnitude of C refocuses further from the physical focus, and the sign of C determines whether the focus moves closer or further.
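The shift-and-add steps above can be sketched roughly as follows. This is a minimal illustration rather than the project's actual code: the function name `refocus`, the array layout, measuring (u, v) relative to the grid center, and the use of integer `np.roll` shifts (which wrap around at the image borders) are all assumptions made for the example. The sign convention for the shift also depends on how the dataset defines (u, v).

```python
import numpy as np

def refocus(images, uv, c):
    """Shift-and-add refocusing (hypothetical helper, not the original code).

    images: array of shape (N, H, W) or (N, H, W, channels)
    uv:     array of shape (N, 2), the (u, v) position of each image
    c:      scalar that scales the shift; chosen by hand
    """
    images = np.asarray(images, dtype=np.float64)
    uv = np.asarray(uv, dtype=np.float64)

    # Assumption: measure (u, v) relative to the center of the grid,
    # so the central image is left unshifted.
    offsets = uv - uv.mean(axis=0)

    out = np.zeros(images.shape[1:], dtype=np.float64)
    for img, (u, v) in zip(images, offsets):
        # Assumption: integer pixel shifts; np.roll wraps at the borders.
        dx = int(round(c * u))  # shift along columns (x)
        dy = int(round(c * v))  # shift along rows (y)
        out += np.roll(img, shift=(dy, dx), axis=(0, 1))

    # Average the shifted images.
    return out / len(images)
```

Sweeping `c` over something like `np.arange(-0.4, 3.0 + 1e-9, 0.2)` and saving each result would produce frames for a refocusing animation. Sub-pixel shifts would need an interpolating shift instead of `np.roll`.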

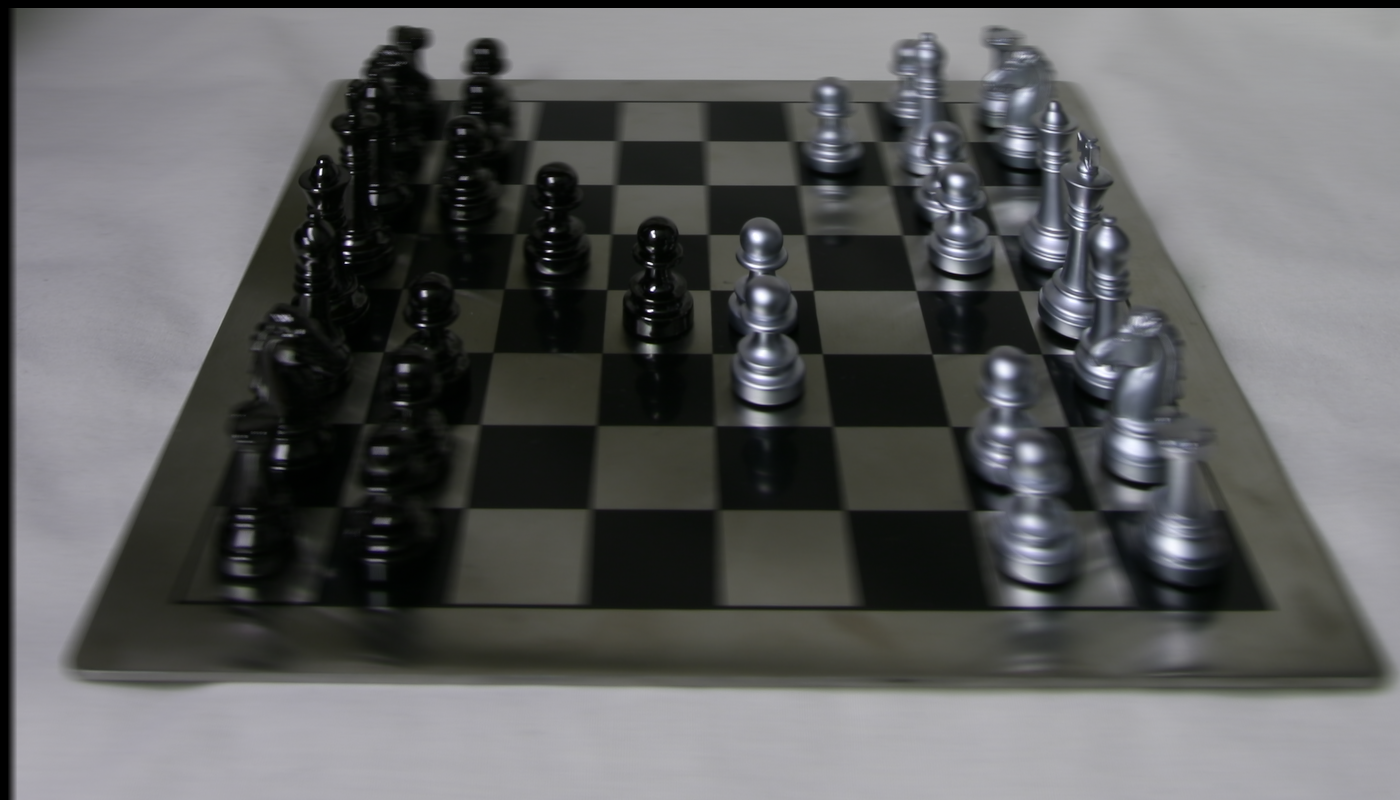

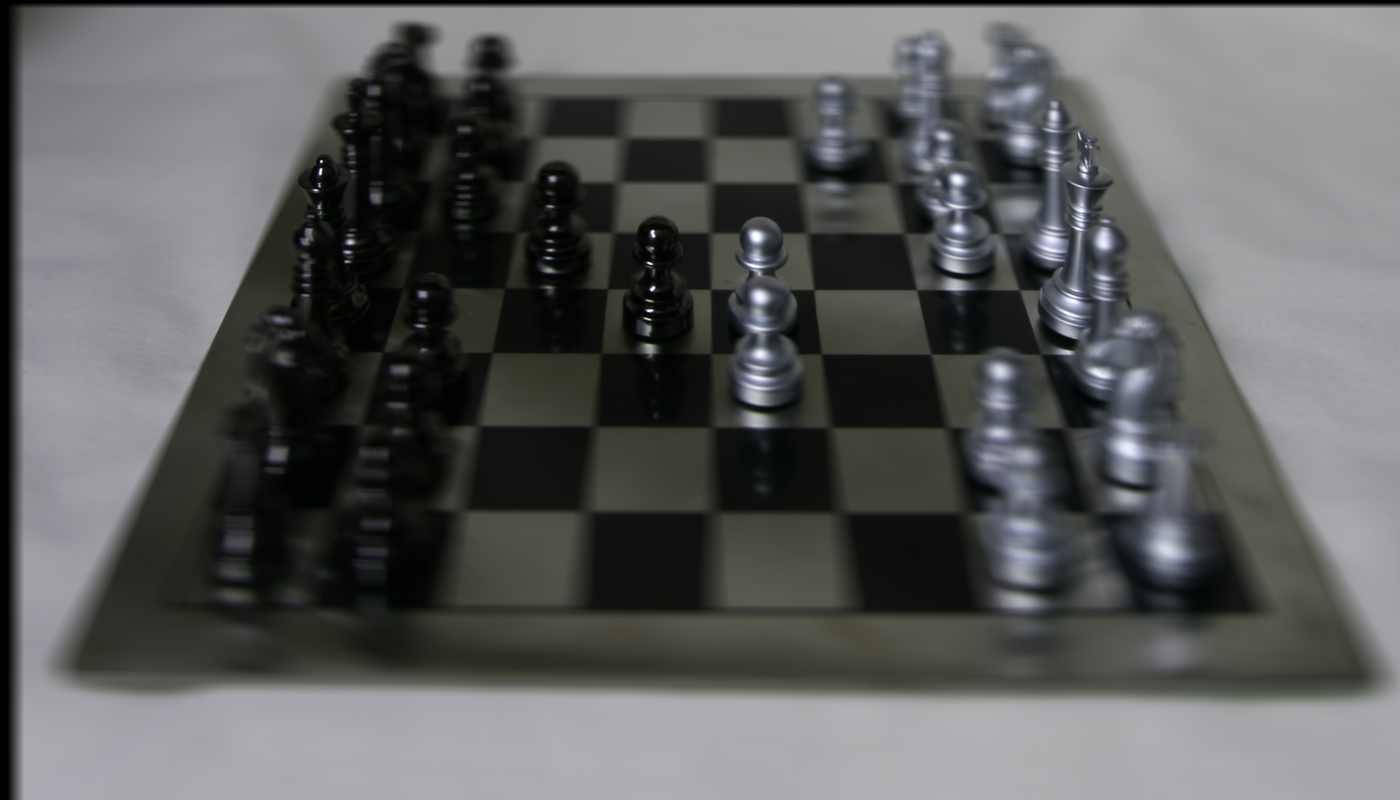

Here is a gif of shifted and averaged images, with C ranging from -0.4 to 3.0 in 0.2 increments.

Aperture Adjustment

This image of San Francisco was produced by averaging multiple images collected using a satellite. Averaging a large number of images sampled over the grid perpendicular to the optical axis mimics a camera with a much larger aperture. Using fewer images results in an image that mimics a smaller aperture. In this part of the project, I generate images which correspond to different apertures while focusing on the same point. This is done by keeping C (which sets where the focus lies) constant while varying the number of images averaged.
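One way to sketch the aperture adjustment is to average only the images closest to the center of the (u, v) grid. The selection rule here (the `n` nearest images by Euclidean distance) and the name `average_nearest` are assumptions for illustration; the writeup does not say exactly how the subset was chosen. The images passed in are assumed to be already shifted for the fixed C.

```python
import numpy as np

def average_nearest(images, uv, n):
    """Average the n images nearest the grid center (hypothetical helper).

    images: array of shape (N, H, W) or (N, H, W, channels),
            assumed already shifted for the chosen, fixed C
    uv:     array of shape (N, 2), the (u, v) position of each image
    n:      number of images to average; larger n mimics a larger aperture
    """
    images = np.asarray(images, dtype=np.float64)
    uv = np.asarray(uv, dtype=np.float64)

    # Distance of each sub-aperture image from the center of the grid.
    dist = np.linalg.norm(uv - uv.mean(axis=0), axis=1)

    # Indices of the n closest images (stable sort keeps ties in grid order).
    nearest = np.argsort(dist, kind="stable")[:n]

    return images[nearest].mean(axis=0)
```

With `n = 1` only the central image is used, which behaves like a very small aperture. Increasing `n` toward the full grid widens the synthetic aperture and blurs everything away from the focal plane.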

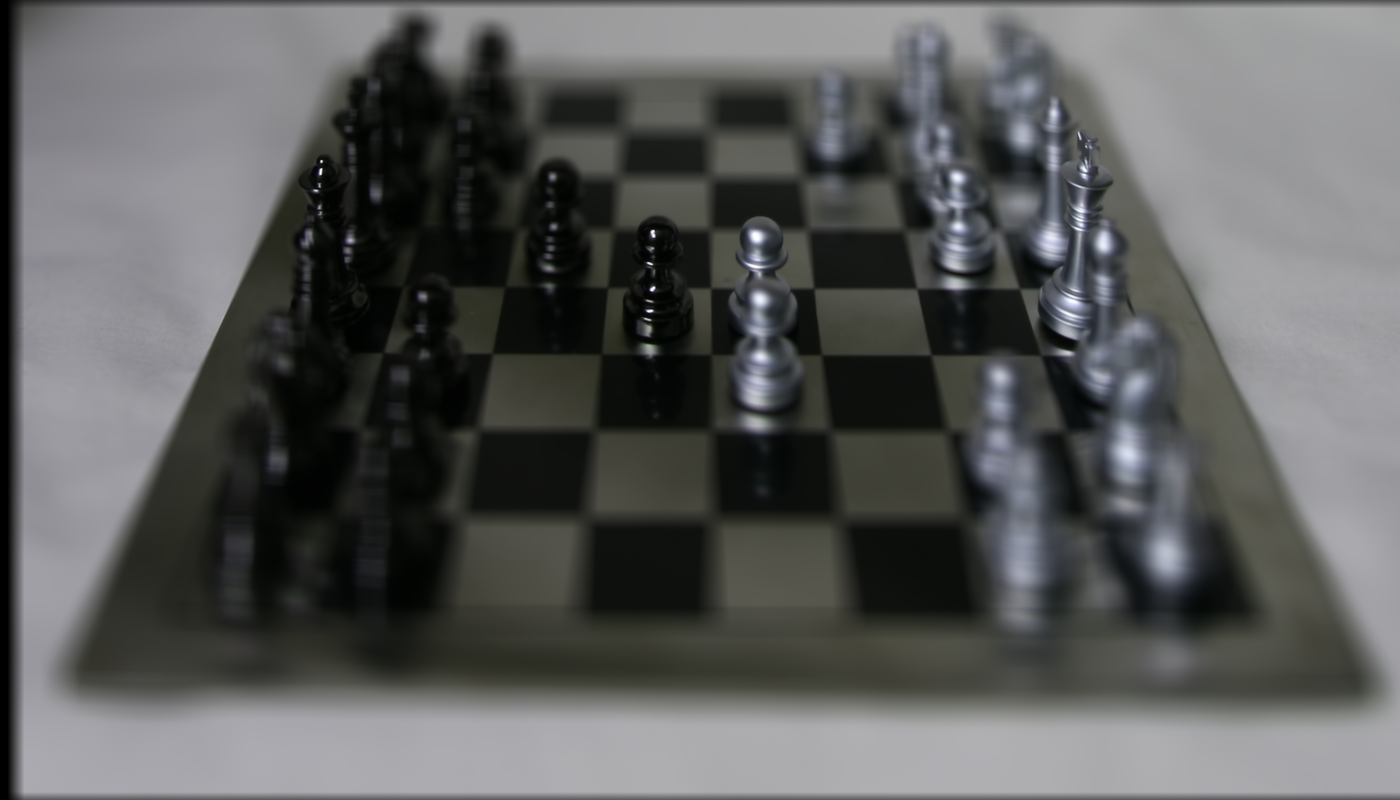

Here is a gif showing the aperture adjustment, with the radius (number of images averaged) ranging from 10 to 280 inclusive.

Summary

I learned hands-on how powerful 4-dimensional data is compared to 3-dimensional data when it comes to image processing. I also learned a new way to think about aperture and focus: in this project I treated them in terms of averaging images, rather than in the typical terms of how a camera's body is set up for a photo.