Overview

A light field camera uses a microlens array to capture four-dimensional information about a scene. With this kind of data, we can achieve complex effects, like refocusing and aperture adjustment, with simple operations like shifting and averaging the existing data, eliminating the need to re-photograph the scene.

Depth Refocusing

If we simply average all the images in the folder, the resulting image is in focus for objects far from the lens and blurry for objects close to it. The lenses that captured the photos sit on a 17x17 grid, so from one view to the next, the position of closer objects changes more than the position of farther objects because of parallax. Thus, when averaging, the closer objects appear blurrier.

To refocus images, I shift the images captured from the grid so that the desired portions of the images are aligned (and therefore sharp). I loop through all the images and shift each image by an amount proportional to its distance from the center image (computed using the position in the grid at which each photo was taken). We can compute the shift amount from the grid location because we know the cameras are equally spaced. We multiply the distance by a factor 'a', which determines the plane of focus. By experimentation, I found that values of a from 0 to 3 work best for this chess image. Below I show the effects of different values of a.
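The shift-and-average step described above can be sketched roughly as follows. This is a minimal illustration rather than the exact code used for these results: the function name `refocus`, the `(row, col)` position format, the sign of the shift, and the use of `np.roll` with integer-rounded shifts (which wraps pixels around the image border) are all assumptions made for the example.

```python
import numpy as np

def refocus(images, positions, a):
    """Shift each view toward the grid center and average the results.

    images:    list of arrays with shape (H, W) or (H, W, C)
    positions: list of (row, col) grid coordinates, one per image (assumed format)
    a:         scale factor that selects the plane of focus
    """
    positions = np.asarray(positions, dtype=float)
    center = positions.mean(axis=0)  # (8, 8) for a full 17x17 grid
    acc = np.zeros_like(images[0], dtype=float)
    for img, (r, c) in zip(images, positions):
        # Shift is proportional to the view's offset from the center view.
        # The sign convention is an assumption; flip it if the focus moves
        # in the opposite direction for a given dataset.
        dy = int(round(a * (r - center[0])))
        dx = int(round(a * (c - center[1])))
        # np.roll wraps around the border; a real pipeline might crop or pad.
        acc += np.roll(img, shift=(dy, dx), axis=(0, 1))
    return acc / len(images)
```

With a = 0 no view is shifted, so the function reduces to the plain average from the previous section. Sub-pixel shifts with interpolation would give smoother results than integer rounding, at the cost of an extra dependency.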

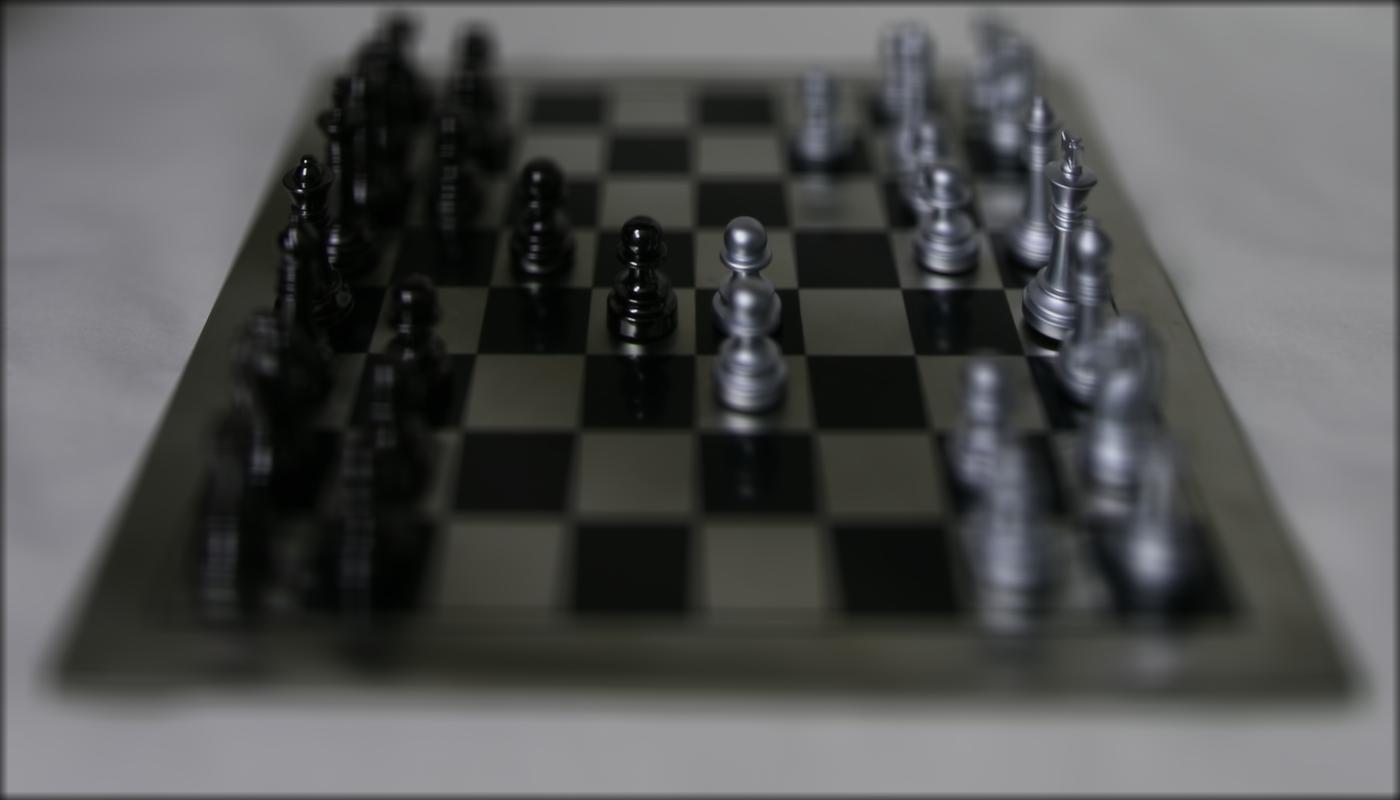

a = 0

a = 1

a = 2

a = 3

Gif of them all together!

Aperture Adjustment

The aperture refers to the size of the opening that allows light into the lens. A very small aperture allows only a few rays of light into the camera, producing a sharp image with a large depth of field. Increasing the aperture blurs the portions of the scene away from the plane of focus, because for a single point in the scene, it essentially averages the light rays coming from different directions.

To implement this, I average different numbers of images to achieve different aperture sizes. Using just the center image (distance 0) produces the smallest aperture. To produce the effect of a larger aperture, I average more and more of the images surrounding the center image. Below I show the results of averaging all images within 1, 2, 3, 4, 5, 6, and 7 grid positions (in the x and y directions) of the center image. As more images, taken farther from the center, are included, the aperture increases and the resulting image becomes blurrier.
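The selection-and-average step described above could look something like the sketch below. The function name `adjust_aperture`, the `(row, col)` position format, and the zero-indexed center of `(8, 8)` for a 17x17 grid are assumptions made for illustration, not details taken from the original implementation.

```python
import numpy as np

def adjust_aperture(images, positions, distance, center=(8, 8)):
    """Average the views within `distance` grid steps of the center view.

    images:    list of arrays with identical shape
    positions: list of (row, col) grid coordinates, one per image (assumed format)
    distance:  half-width of the square window of views to include
    center:    grid coordinate of the center view (assumed zero-indexed)
    """
    selected = [
        img.astype(float)
        for img, (r, c) in zip(images, positions)
        # Keep a view only if it is close enough in both grid directions.
        if abs(r - center[0]) <= distance and abs(c - center[1]) <= distance
    ]
    if not selected:
        raise ValueError("no images fall inside the requested window")
    return np.mean(selected, axis=0)
```

A distance of 0 returns the center image alone, and a distance of 8 on a 17x17 grid would average every view. No shifting is applied here, so the plane of focus stays where the plain average puts it.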

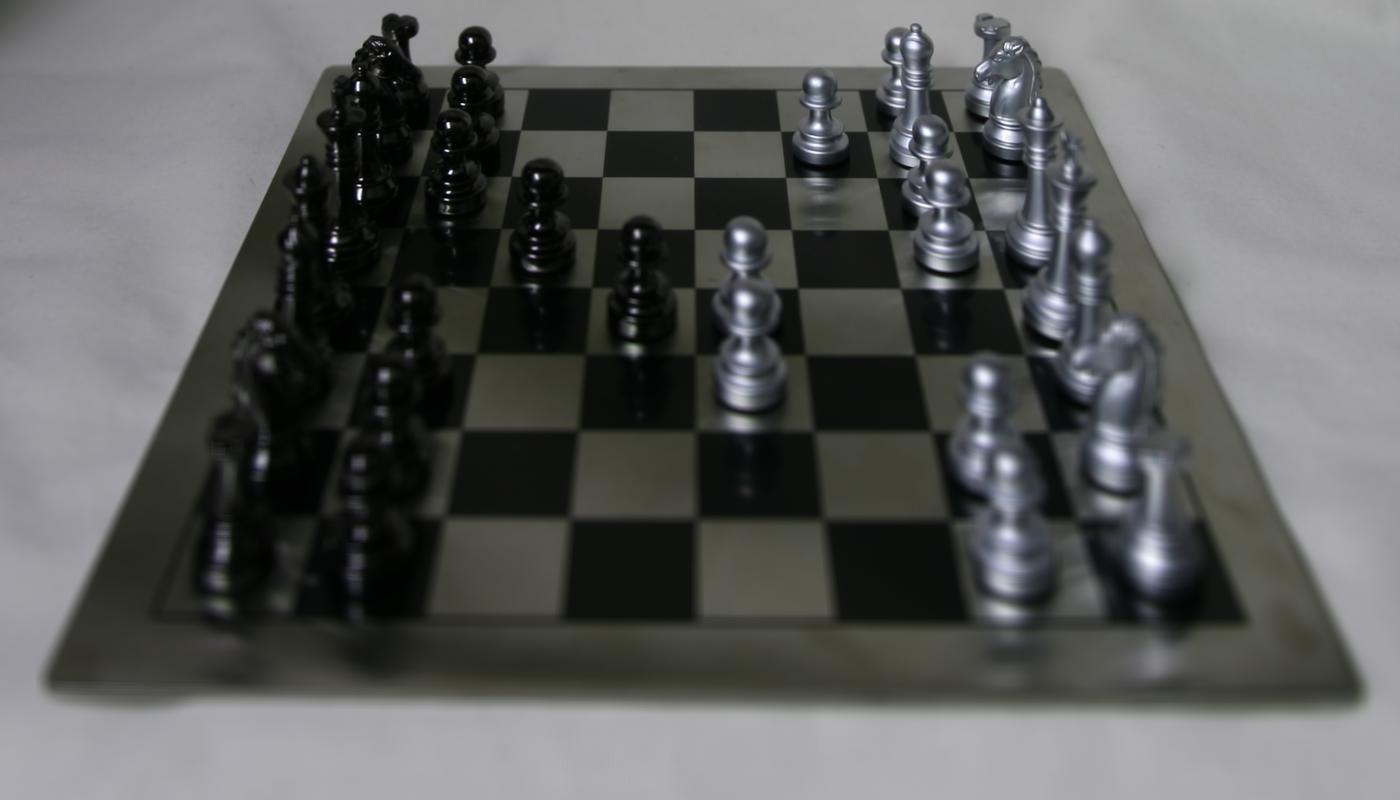

Distance = 0

Distance = 1

Distance = 2

Distance = 3

Distance = 4

Distance = 5

Distance = 6

Distance = 7

Gif of them all together!

Summary

I didn't know anything about light fields before this class, and I found this project really interesting because I had no idea people had thought of capturing 4D data so that image adjustments, like refocusing, could happen later. This project also clarified some camera-related terminology, like aperture and depth of field, as I saw their effects come to life!