Overview

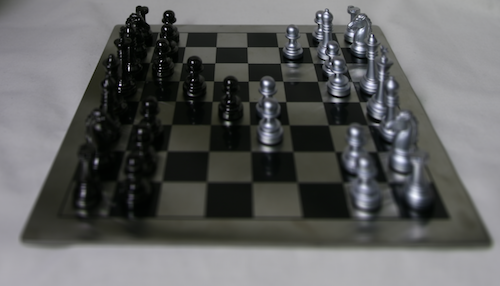

The goal of this project is to reproduce some of the more complex effects in Professor Ren Ng's paper using real lightfield data. We explore depth refocusing and aperture adjustment.

Depth Refocusing

To simulate refocusing, we average all the lightfield images of a scene from Stanford's dataset. Without any shifting, the averaged image is sharp for objects far from the camera and blurry for objects close to it. This is because each image is taken from a slightly different camera position, and an object closer to the camera moves more between views (greater parallax), so averaging smears it more. By shifting each image according to its camera position from the calibration data, scaled by a constant multiplier, before averaging, we can bring either the foreground or the background into focus.
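A minimal shift-and-average sketch of the refocusing step is below. It assumes the images are stacked into a NumPy array of shape (N, H, W, C) and that `positions` is a hypothetical (N, 2) array of per-camera (x, y) offsets read from the calibration data, in units where `multiplier` converts them to pixels. The sign and axis convention are assumptions and may need flipping for a given dataset. It uses integer shifts via `np.roll` for simplicity; sub-pixel interpolation would give smoother results.

```python
import numpy as np

def refocus(images, positions, multiplier):
    """Shift-and-average refocusing (sketch).

    images:     (N, H, W, C) array, one sub-aperture image per camera.
    positions:  (N, 2) array of per-camera (x, y) offsets (assumed layout).
    multiplier: scales each offset into a pixel shift; 0.0 is a plain average.
    """
    # Shift every view toward the mean camera position.
    center = positions.mean(axis=0)
    out = np.zeros(images.shape[1:], dtype=np.float64)
    for img, pos in zip(images, positions):
        dx, dy = multiplier * (center - pos)
        # Integer shift: rows (axis 0) move by dy, columns (axis 1) by dx.
        out += np.roll(img, shift=(int(round(dy)), int(round(dx))), axis=(0, 1))
    return out / len(images)
```

Sweeping `multiplier` (for example from 0.0 to 0.05) moves the plane of focus; with 0.0 this reduces to the unshifted average described above.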

Multiplier values 0.0 to 0.05 (left to right)

Animation

Aperture Adjustment

We also simulate aperture adjustment by keeping the multiplier constant and varying the number of images we average. Averaging more images, whose camera positions span a wider area, mimics a photo taken with a larger aperture; averaging fewer images mimics a photo taken with a smaller aperture.
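One way to sketch this is to keep only the cameras within a given radius of the center camera and then shift-and-average as before. Everything here is an assumption for illustration: `grid_indices` is a hypothetical (N, 2) array of each camera's (row, col) in the grid, the default `center=(8, 8)` assumes a 17x17 grid, and the radius is measured as a square (Chebyshev) window, which may differ from how the results above were produced.

```python
import numpy as np

def adjust_aperture(images, positions, grid_indices, radius, multiplier, center=(8, 8)):
    """Aperture adjustment by averaging a subset of views (sketch).

    images:       (N, H, W, C) array of sub-aperture images.
    positions:    (N, 2) per-camera (x, y) offsets from calibration (assumed).
    grid_indices: (N, 2) integer (row, col) of each camera (hypothetical).
    radius:       cameras within this square window of `center` are kept.
    """
    # Select cameras inside the window; a larger radius acts like a wider aperture.
    dist = np.abs(grid_indices - np.asarray(center)).max(axis=1)
    keep = dist <= radius
    # Shift relative to the full grid's mean position so the focal plane
    # stays fixed while the aperture changes.
    ref = positions.mean(axis=0)
    out = np.zeros(images.shape[1:], dtype=np.float64)
    for img, pos in zip(images[keep], positions[keep]):
        dx, dy = multiplier * (ref - pos)
        out += np.roll(img, shift=(int(round(dy)), int(round(dx))), axis=(0, 1))
    return out / keep.sum()
```

With `radius=0` only the center view survives, which corresponds to the single-image (smallest aperture) case.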

Single Image

Radius values 2 to 8 (in steps of 2)

Animation

Conclusion

Overall, I am impressed that such simple techniques can achieve such interesting results. I am also thankful for the dataset that Stanford has kindly collected and made public. The data curation made our lives much easier.