Depth Refocusing and Aperture Adjustment with Light Field Data

Sheng-Yu Wang (ade)

HTML template thanks to Jacob Huynh

Overview

Focusing is closely tied to depth, so at first glance refocusing may seem to require complicated geometric ray calculations. However, with data-driven techniques such as light fields, we can achieve many interesting effects like refocusing without creating a 3D model. Light fields exploit the fact that light travels in a straight line in free space (we can approximate the atmosphere as free space, or assume it is uniform enough), so photos of the same scene taken from multiple perspectives are sufficient to capture depth. In this project, this idea is used to create virtual refocusing and aperture size changes.

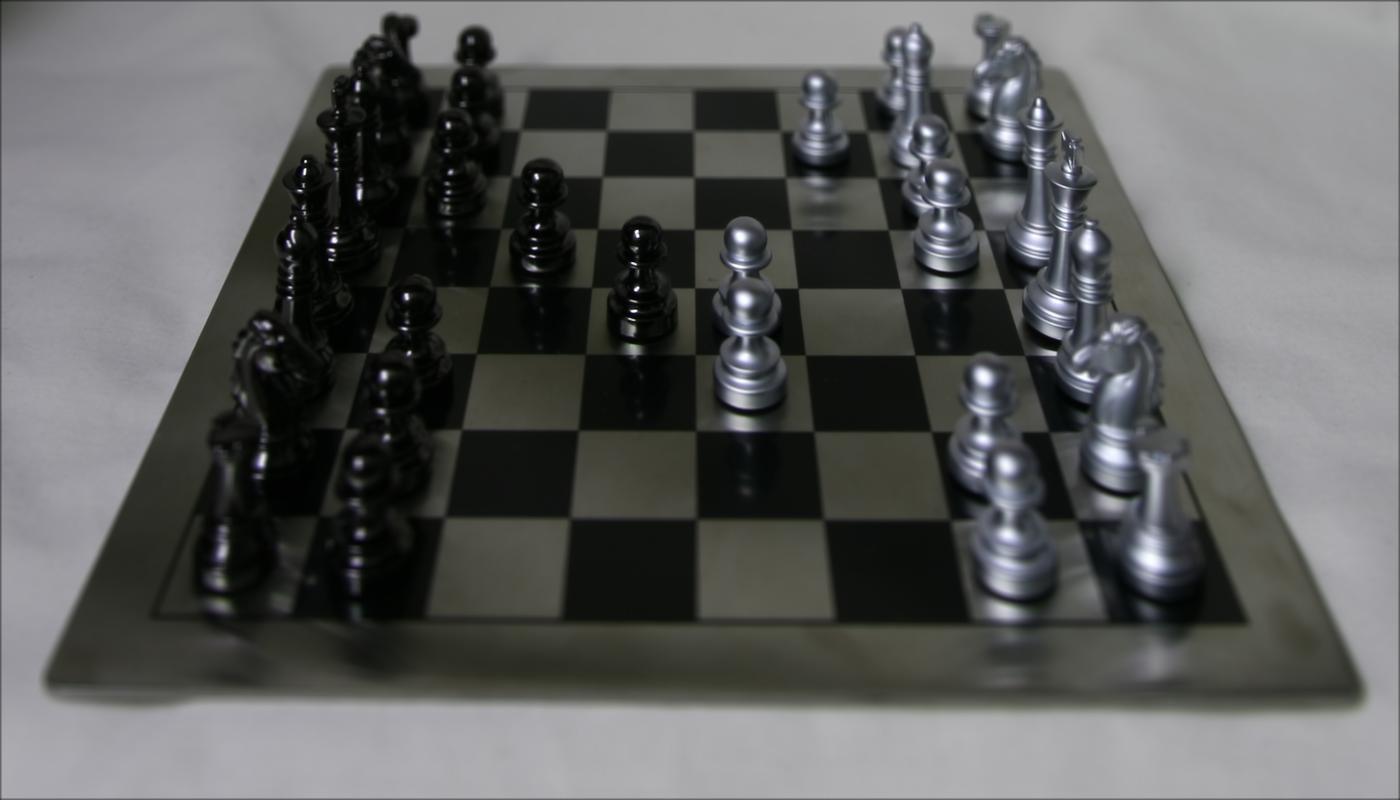

Depth Refocusing

By shifting and averaging the light-field data, the refocusing effect can be achieved. Each image is shifted by an amount proportional to its pinhole position (u, v), and changing the proportionality factor c moves the focus to a different depth. Note that without shifting (c = 0), the average focuses farther away, because distant objects barely move between views and so are already aligned. As the shifting factor c increases, the parts of the images that line up belong to closer objects, so the in-focus region moves toward the front. This creates the refocusing effect.
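The shift-and-average step described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the function name `refocus`, the choice to measure (u, v) relative to the mean pinhole position, the sign of the shift, and the use of integer shifts with wrap-around (`np.roll`) are all assumptions made to keep the example short.

```python
import numpy as np

def refocus(images, positions, c):
    """Shift each sub-aperture image by c * (offset from the mean pinhole
    position), then average. Hypothetical helper, for illustration only.

    images:    array of shape (N, H, W) or (N, H, W, C)
    positions: array of shape (N, 2) holding the (u, v) position of each image
    c:         shifting factor that selects the focal depth
    """
    images = np.asarray(images, dtype=np.float64)
    positions = np.asarray(positions, dtype=np.float64)
    center = positions.mean(axis=0)  # assumption: shifts are relative to the grid center

    acc = np.zeros_like(images[0])
    for img, (u, v) in zip(images, positions):
        # Assumption: integer pixel shifts; edges wrap around with np.roll.
        du = int(round(c * (u - center[0])))
        dv = int(round(c * (v - center[1])))
        acc += np.roll(img, shift=(dv, du), axis=(0, 1))
    return acc / len(images)
```

With c = 0 the function reduces to a plain average of all views. Depending on how (u, v) is oriented in a given dataset, the sign of one or both shifts may need to be flipped, and a sub-pixel interpolating shift would give smoother results than rounding.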

c = 0 | c = 0.5 | c = 1 | c = 1.5 | c = 2.0 | c = 2.5 | c = 3.0

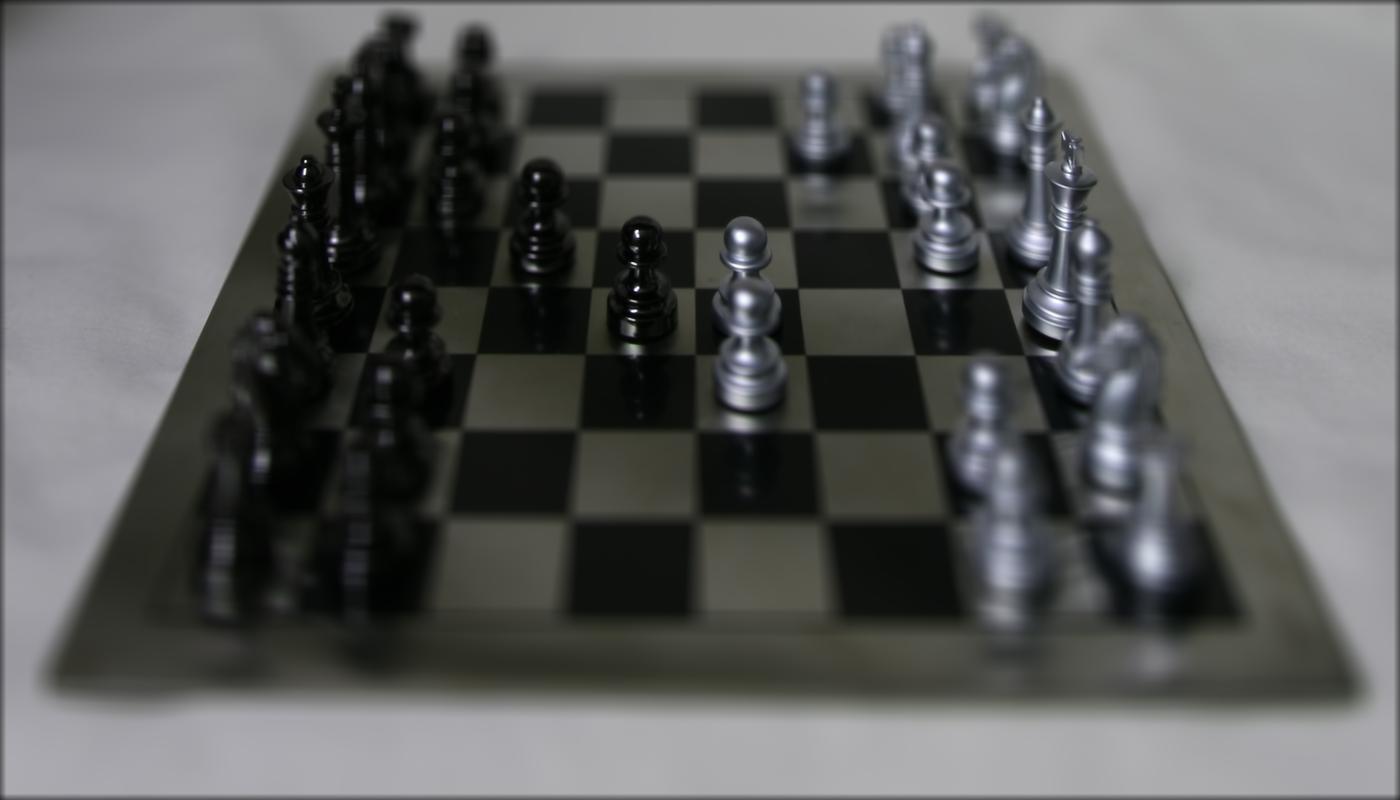

Aperture Adjustment

Aperture size determines the depth of field (DOF): a larger aperture gives a smaller DOF, and vice versa. With a smaller DOF, a narrower range of depths is in focus.

Interestingly, we can change the aperture size virtually by controlling the number of light-field images that are averaged. Averaging more images is similar to having a larger aperture, which gives a smaller DOF.

Note that the refocusing factor c is chosen to be 1 for all images for a better visual effect. The virtual aperture is centered in the middle of the pinhole positions, and we vary its size. The smallest one is a 1x1 square in the middle, the second is 3x3, and so on all the way to 17x17. We can see that, as predicted, the largest aperture size, 17x17, gives the smallest DOF, while the 1x1 square gives the largest DOF.
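The virtual aperture described above can be sketched as a selection step in front of the same shift-and-average. This is a minimal illustration under stated assumptions: the name `aperture_average` and the `half_width` parameter are hypothetical, the pinhole positions are assumed to be integer grid indices (for example 0 to 16 on each axis), and shifts are rounded to whole pixels with wrap-around.

```python
import numpy as np

def aperture_average(images, positions, half_width, c=1.0):
    """Average only the views inside a centered square aperture.
    Hypothetical helper, for illustration only.

    half_width = 0 keeps the 1x1 center view, 1 keeps 3x3, ..., 8 keeps 17x17
    (assuming positions are integer grid indices on a 17x17 grid).
    """
    images = np.asarray(images, dtype=np.float64)
    positions = np.asarray(positions, dtype=np.float64)
    center = positions.mean(axis=0)  # middle of the pinhole positions

    # Keep views whose (u, v) lies within half_width of the center on both axes.
    inside = np.all(np.abs(positions - center) <= half_width, axis=1)
    if not inside.any():
        raise ValueError("no views fall inside the requested aperture")

    acc = np.zeros_like(images[0])
    for img, (u, v) in zip(images[inside], positions[inside]):
        # Same shift-and-average as refocusing, with integer wrap-around shifts.
        du = int(round(c * (u - center[0])))
        dv = int(round(c * (v - center[1])))
        acc += np.roll(img, shift=(dv, du), axis=(0, 1))
    return acc / inside.sum()
```

Calling this with `half_width` set to 0, 2, 4, 6, and 8 would correspond to aperture sizes of 1x1, 5x5, 9x9, 13x13, and 17x17 under the grid assumption above.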

size = 1x1 | size = 5x5 | size = 9x9 | size = 13x13 | size = 17x17

Summary

It is really interesting that data-driven methods can use the core idea of light traveling in a straight line to create effects like refocusing, which at first glance sounds very complicated geometrically. By shifting and averaging, we avoid 3D geometry modeling but still have plenty of freedom to play with depth, which is truly amazing.