The Stanford Light Field Archive provides sample datasets, each consisting of multiple images taken over a regularly spaced grid. In this project, we combine and average the images from the chess dataset in different ways to simulate depth refocusing and aperture adjustment. With different combinations of shifting / scaling / averaging, we can mimic these camera effects in our generated GIFs.

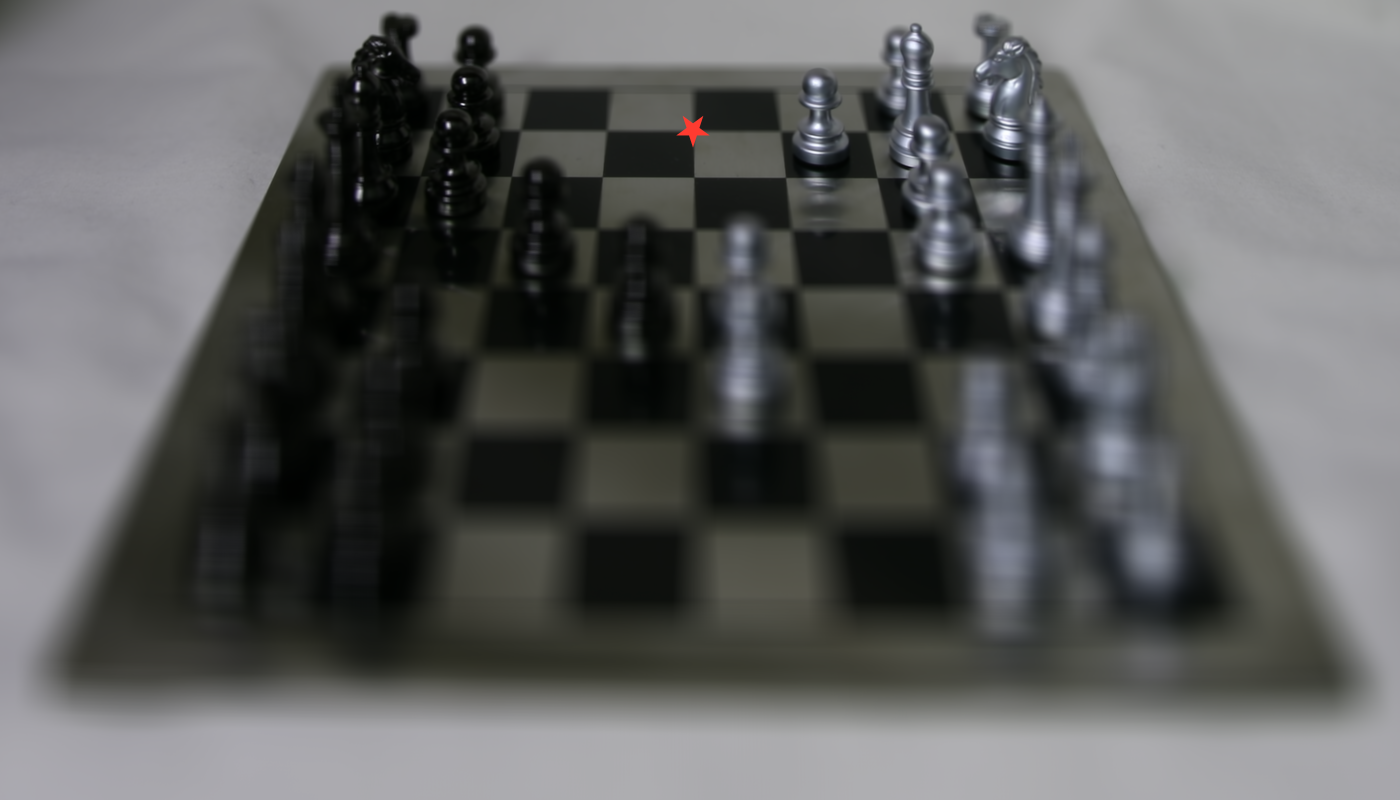

To implement depth refocusing, I shifted each image in proportion to its offset from the center of the image grid and then averaged the shifted images. Scaling the shift amounts by different constants (after some hyperparameter tuning, I settled on constants in the range -4 to 1) brings different depths of the scene into focus. Below are a few select results of applying this procedure:

Chaining together several of these images with different scale amounts leads to a nice GIF that displays shifting camera focus as the scale is varied:
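
As a rough illustration of the shift-and-average refocusing described above, here is a minimal NumPy sketch. It is not the exact code behind the results shown here: the function name `refocus`, the `(row, col)` grid-position convention, the rounding of shifts to whole pixels, and the wrap-around shift via `np.roll` are all assumptions made to keep the example short. The sign of `scale` that focuses near versus far also depends on how the grid is oriented.

```python
import numpy as np

def refocus(images, positions, scale):
    """Shift each view in proportion to its grid offset, then average.

    images:    array of shape (N, H, W) or (N, H, W, C)
    positions: array of shape (N, 2) with each view's (row, col) grid position
    scale:     pixels of shift per unit of grid offset (hypothetical parameter)
    """
    images = np.asarray(images, dtype=np.float64)
    positions = np.asarray(positions, dtype=np.float64)
    center = positions.mean(axis=0)  # center of the image grid

    out = np.zeros_like(images[0])
    for img, pos in zip(images, positions):
        # Assumption: integer shifts with wrap-around, for simplicity.
        dy, dx = np.rint(scale * (pos - center)).astype(int)
        out += np.roll(img, shift=(int(dy), int(dx)), axis=(0, 1))
    return out / len(images)
```

Sweeping `scale` over a range such as -4 to 1 and saving each output as a frame would give the frames for a refocusing GIF.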

To implement aperture adjustment, I specified a radius r and averaged only the images within that radius of the center image in the image grid. Below are some select examples, with the corresponding radius annotated below each. Note how the image gets blurrier as the radius increases; this makes sense, since averaging views from a wider region of the grid mimics a larger aperture, which has a shallower depth of field and so blurs everything away from the plane of focus.

Chaining together the images with different radii leads to the following GIF. Notice how the image gets blurrier as the radius increases!
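
The radius-based averaging above could be sketched as follows. This is only a sketch under assumptions: the name `aperture_average` is hypothetical, and I am assuming "within that radius" means Euclidean distance measured in grid units from the grid center; a different distance measure would select a differently shaped set of views.

```python
import numpy as np

def aperture_average(images, positions, radius):
    """Average only the views whose grid position lies within `radius`
    of the grid center (Euclidean distance in grid units, an assumption).

    images:    array of shape (N, H, W) or (N, H, W, C)
    positions: array of shape (N, 2) with each view's (row, col) grid position
    """
    images = np.asarray(images, dtype=np.float64)
    positions = np.asarray(positions, dtype=np.float64)
    center = positions.mean(axis=0)

    dist = np.linalg.norm(positions - center, axis=1)
    mask = dist <= radius
    if not mask.any():
        raise ValueError("no views fall within the requested radius")
    return images[mask].mean(axis=0)
```

With an odd-sized grid such as 17 x 17 or 3 x 3, `radius = 0` selects only the center image, and larger radii progressively include more of the surrounding views.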

Below is a depth-refocusing GIF generated using a grid of 9 pictures I took by hand of a bag of chocolate.

It seems to have somewhat worked - if you look closely, you can see a degree of refocusing at different points in the image. However, the results aren't great, for two primary reasons. Firstly, there's a degree of inaccuracy since these images were taken by hand, without any rectification or more precise equipment. Secondly, rather than using a 17 x 17 grid of images, I only took a 3 x 3 grid of 9 images. Unfortunately, the smaller sample size clearly shows in the lower quality.

Below are the results for aperture adjustment of the same bag of chocolate, with annotated radii:

This also seems to have worked - in the radius = 1 case, you can see that some areas of the bag of chocolate are more blurred than others (the radius = 0 case is irrelevant here since it's simply a single image, the one in the middle of the grid). However, because the pictures were taken by hand and not rectified like those in the Stanford Light Field Archive, other areas of the image also appear somewhat blurred. Overall, as with depth refocusing on the candy bag, the procedure appears to have worked, but the results are lacking in quality due to poor equipment / small grid size.

To implement interactive refocusing, I enabled passing in custom coordinates on which to align. My algorithm then used a technique similar to the alignment technique used to colorize the Prokudin-Gorskii collection (modified to align around the custom coordinates). Finally, the aligned images were averaged, and the result was an image that was in focus around the selected point and blurry away from it. Below is an example for the selected point (100, 700):
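
One way the align-around-a-point idea could be sketched is shown below. This is an assumption-laden illustration rather than the implementation used here: it assumes an exhaustive search over small integer shifts scored by sum of squared differences (one common choice for Prokudin-Gorskii style alignment), it treats the point as `(row, col)`, and the names `best_shift`, `refocus_at`, `half`, and `search` are hypothetical.

```python
import numpy as np

def best_shift(ref, img, point, half=8, search=6):
    """Find the integer (dy, dx) that best aligns `img` to `ref`
    inside a square window centered on `point` (row, col).

    The point must lie at least `half` pixels away from the image border.
    """
    r, c = point
    window = (slice(r - half, r + half + 1), slice(c - half, c + half + 1))
    target = ref[window]

    best, best_err = (0, 0), np.inf
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            candidate = np.roll(img, (dy, dx), axis=(0, 1))[window]
            err = np.sum((candidate - target) ** 2)  # SSD score (assumed metric)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best

def refocus_at(images, ref_index, point, half=8, search=6):
    """Align every view to the reference view around `point`, then average."""
    images = np.asarray(images, dtype=np.float64)
    ref = images[ref_index]
    out = np.zeros_like(ref)
    for img in images:
        dy, dx = best_shift(ref, img, point, half, search)
        out += np.roll(img, (dy, dx), axis=(0, 1))
    return out / len(images)
```

Because each view is aligned only around the chosen window, content at that depth lines up and stays sharp in the average, while content at other depths is misaligned and blurs.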

Overall, I learned quite a bit about lightfields. I think it's amazing that, using lightfield data, we can mimic a variety of camera effects. The most important takeaway about lightfields from this project (and all the other projects so far, to be honest), in my opinion, is that thinking of computational photography algorithms requires a fine degree of intuition. Though there is a significant mathematical foundation underlying most of them, they seem to be developed initially using a high-level thought process detailing what needs to be done to achieve an effect.

In terms of failures, there were none! Implementing depth refocusing and aperture adjustment turned out to be very straightforward without any hiccups, and the results look absolutely wonderful! I really enjoyed this project, in tandem with the last, and love learning about photography algorithms for video generation using sequences of images. Very aesthetic results! 😍😍😍