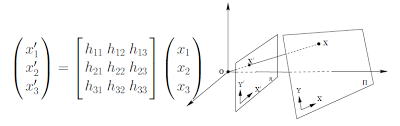

$\begin{bmatrix}
a & b & c \\
d & e & f \\
g & h & 1
\end{bmatrix}
\begin{bmatrix}
x \\
y \\
1
\end{bmatrix}
=
\begin{bmatrix}
wx' \\
wy' \\
w
\end{bmatrix}$
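
Expanding the matrix product row by row shows how the scale factor $w$ is eliminated. This is only a sketch of the intermediate step, using the same symbols as above; the third row gives $w$, and dividing the first two rows by it and clearing the denominator leaves two equations that are linear in $a, \dots, h$:

$\begin{aligned}
w &= gx + hy + 1 \\
x' &= \frac{ax + by + c}{gx + hy + 1} \;\Rightarrow\; ax + by + c - g\,xx' - h\,yx' = x' \\
y' &= \frac{dx + ey + f}{gx + hy + 1} \;\Rightarrow\; dx + ey + f - g\,xy' - h\,yy' = y'
\end{aligned}$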

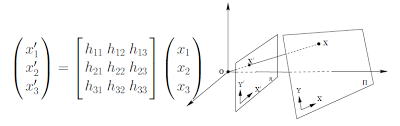

$\begin{bmatrix}
x & y & 1 & 0 & 0 & 0 & -xx' & -yx' \\
0 & 0 & 0 & x & y & 1 & -xy' & -yy'
\end{bmatrix}
\begin{bmatrix}
a \\
b \\
c \\
d \\
e \\
f \\
g \\
h
\end{bmatrix}
=
\begin{bmatrix}
x' \\
y'
\end{bmatrix}$
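
Each point correspondence contributes the two rows above, so four correspondences (no three collinear) give eight equations for the eight unknowns $a, \dots, h$. The following is a minimal sketch of that stacking in Python with NumPy, not a definitive implementation. The function names `estimate_homography` and `apply_homography` and the sample points are hypothetical, chosen only for illustration, and a least-squares solve is assumed so that more than four correspondences can also be used.

```python
import numpy as np


def estimate_homography(src, dst):
    """Estimate the 3x3 matrix [[a, b, c], [d, e, f], [g, h, 1]].

    src, dst: sequences of (x, y) and (x', y') pairs, at least four of each.
    The bottom-right entry is fixed to 1, as in the equations above.
    """
    rows, rhs = [], []
    for (x, y), (xp, yp) in zip(src, dst):
        # Two rows per correspondence, matching the 2x8 system above.
        rows.append([x, y, 1, 0, 0, 0, -x * xp, -y * xp])
        rows.append([0, 0, 0, x, y, 1, -x * yp, -y * yp])
        rhs.extend([xp, yp])
    A = np.array(rows, dtype=float)
    b = np.array(rhs, dtype=float)
    # Least squares: exact for four points, a best fit for more.
    params, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.append(params, 1.0).reshape(3, 3)


def apply_homography(H, pts):
    """Map (x, y) points through H and divide out the scale factor w."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])  # rows of (x, y, 1)
    mapped = homog @ H.T                              # rows of (wx', wy', w)
    return mapped[:, :2] / mapped[:, 2:3]


# Hypothetical sample data: unit square mapped to a square of side 2.
src = [(0, 0), (1, 0), (1, 1), (0, 1)]
dst = [(0, 0), (2, 0), (2, 2), (0, 2)]
H = estimate_homography(src, dst)
```

Fixing the last entry to 1 keeps the system a plain linear solve, but it assumes that entry is not zero (or close to it) in the true transform; a homogeneous formulation solved with an SVD avoids that assumption.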