Introduction

In this project, we are going to create a system for automatically stitching images into a mosaic. We will divide this into several steps:

1. Detecting corner features in an image;
2. Extracting a feature descriptor for each feature point;
3. Matching these feature descriptors between two images;
4. Using a robust method (RANSAC) to compute a homography.

Feature Extraction

In the first part, we use the Harris corner detector with a threshold of 0.1 to detect interest points.
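A minimal NumPy-only sketch of Harris corner detection. The source only gives the threshold, so the following are assumptions: the 0.1 threshold is interpreted as relative to the maximum response, the structure tensor is smoothed with a Gaussian of `sigma = 1`, the Harris constant is `k = 0.05`, and peaks are taken over a 3x3 neighborhood. The function names are hypothetical.

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    return kernel / kernel.sum()


def smooth(img, sigma):
    """Separable Gaussian blur with zero padding (rows, then columns)."""
    kernel = gaussian_kernel_1d(sigma)

    def blur(v):
        return np.convolve(v, kernel, mode="same")

    out = np.apply_along_axis(blur, 1, img)
    return np.apply_along_axis(blur, 0, out)


def harris_corners(img, threshold=0.1, sigma=1.0, k=0.05):
    """Return (row, col) coordinates of Harris corners and the response map.

    Assumption: `threshold` is relative to the maximum response.
    """
    img = np.asarray(img, dtype=float)
    iy, ix = np.gradient(img)          # derivatives along rows (y) and columns (x)
    sxx = smooth(ix * ix, sigma)       # Gaussian-weighted structure-tensor entries
    syy = smooth(iy * iy, sigma)
    sxy = smooth(ix * iy, sigma)
    response = sxx * syy - sxy**2 - k * (sxx + syy) ** 2   # det - k * trace^2
    if response.max() <= 0:
        return np.empty((0, 2), dtype=int), response
    response = response / response.max()

    # Keep pixels that are >= all 8 neighbors (3x3 non-maximum suppression).
    h, w = response.shape
    padded = np.pad(response, 1, mode="constant", constant_values=-np.inf)
    is_peak = np.ones((h, w), dtype=bool)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy or dx:
                is_peak &= response >= padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
    rows, cols = np.nonzero(is_peak & (response > threshold))
    return np.stack([rows, cols], axis=1), response
```

Coordinates come back as (row, col). Because `np.convolve(..., mode="same")` returns the longer of its two inputs, each image side should be at least as long as the kernel (7 pixels for `sigma = 1`).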

|

|

| Original 1 |

Original 2 |

|

|

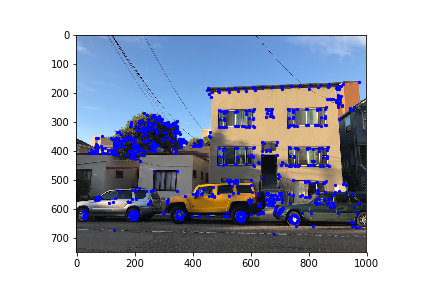

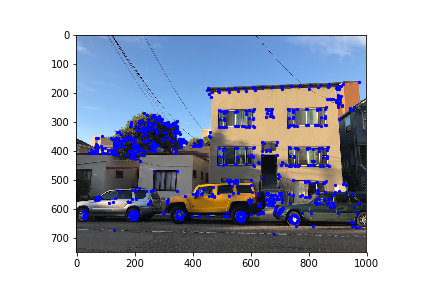

| Harris Corners 1 |

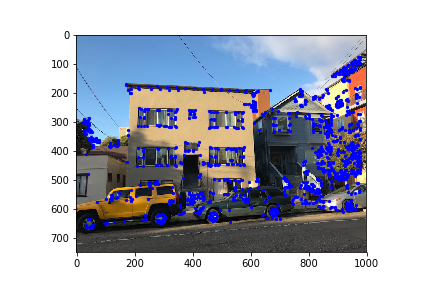

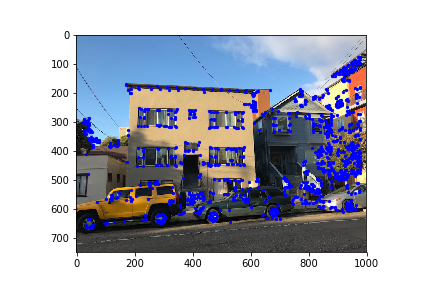

Harris Corners 2 |

Then, we use Adaptive Non-Maximal Suppression (ANMS) to reduce the number of feature points while keeping them spread evenly across the image.

For each point, we compute a suppression radius: the distance to its nearest neighbor with a larger Harris response.

We then sort the points by this radius and keep the 200 points with the largest radii.
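The ANMS step above can be sketched in NumPy as follows. This is an illustration, not the project's code: the function name `anms` is hypothetical, and it uses a plain "strictly larger response" comparison as described in the text (no robustness factor).

```python
import numpy as np


def anms(coords, strengths, num_keep=200):
    """Adaptive non-maximal suppression.

    coords: (N, 2) array of point positions; strengths: (N,) Harris responses.
    Returns the indices of the `num_keep` points with the largest suppression
    radius, plus the radius of every point.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    if len(coords) == 0:
        return np.empty(0, dtype=int), np.empty(0)
    # Pairwise squared distances between all points: shape (N, N).
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = (diff ** 2).sum(axis=-1)
    # stronger[i, j] is True when point j has a larger response than point i.
    stronger = strengths[None, :] > strengths[:, None]
    # Radius of point i = distance to its nearest stronger point (inf if none).
    radii = np.sqrt(np.where(stronger, dist2, np.inf).min(axis=1))
    order = np.argsort(-radii, kind="stable")  # largest radius first
    return order[:num_keep], radii
```

The strengths would typically be the Harris response sampled at each detected corner. The pairwise matrix uses O(N²) memory, so with many thousands of raw corners it is common to keep only the strongest few thousand before running ANMS.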

Suppressed 1 | Suppressed 2

Feature Descriptor Extraction and Feature Matching

Next, for each feature point we sample a larger 40x40 window with a spacing of 5 pixels, producing an axis-aligned 8x8 patch that serves as the point's feature descriptor. We then need to find pairs of features across the two images that look similar and are therefore likely to be good matches.
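A minimal sketch of descriptor extraction and matching. Several details are assumptions, not taken from the source: each 5x5 block is averaged (a cheap anti-aliasing stand-in for blurring before subsampling), descriptors are bias/gain normalized, and matches are selected with a nearest/second-nearest distance ratio test (Lowe's ratio test) using a hypothetical threshold of 0.6. The function names are hypothetical.

```python
import numpy as np


def extract_descriptors(img, points, window=40, spacing=5):
    """Build a (window/spacing)^2 descriptor (8x8 = 64 values by default) per point."""
    img = np.asarray(img, dtype=float)
    half, size = window // 2, window // spacing
    h, w = img.shape
    descs, kept = [], []
    for y, x in points:
        y, x = int(y), int(x)
        if y - half < 0 or x - half < 0 or y + half > h or x + half > w:
            continue  # the window would leave the image; skip this point
        patch = img[y - half:y + half, x - half:x + half]
        # Average each spacing x spacing block: 40x40 -> 8x8 (assumed anti-aliasing).
        small = patch.reshape(size, spacing, size, spacing).mean(axis=(1, 3))
        # Bias/gain normalization (assumption): zero mean, unit standard deviation.
        small = (small - small.mean()) / (small.std() + 1e-8)
        descs.append(small.ravel())
        kept.append((y, x))
    descs = np.array(descs).reshape(len(descs), size * size)
    return descs, np.array(kept, dtype=int).reshape(-1, 2)


def match_descriptors(d1, d2, ratio=0.6):
    """Return (i, j) pairs where d2[j] is the nearest neighbor of d1[i] and
    passes the nearest/second-nearest ratio test (assumed criterion)."""
    if len(d1) == 0 or len(d2) < 2:
        return []
    dists = np.sqrt(((d1[:, None, :] - d2[None, :, :]) ** 2).sum(axis=-1))
    matches = []
    for i, row in enumerate(dists):
        first, second = np.argsort(row)[:2]
        if row[first] < ratio * row[second]:
            matches.append((i, int(first)))
    return matches
```

The ratio test rejects a match when the best candidate is not clearly better than the runner-up, which filters out ambiguous features on repetitive texture before RANSAC sees them.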

RANSAC and Blending

Then, we run RANSAC for 1000 iterations with a sample size of 4: in each iteration, we randomly select 4 pairs of matched correspondences and compute the projective transformation (homography) they define. We then transform the matched points of picture 1 with this homography and count how many land close to their counterparts in picture 2 (the inliers). In the end, we keep the homography with the most inliers, use it to warp picture 1, and blend the result with picture 2 to get the mosaic.
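A minimal NumPy sketch of the RANSAC loop described above: 1000 iterations, 4 random correspondences per iteration, and a homography estimated with the direct linear transform (DLT) solved by SVD. The 2-pixel inlier threshold, the `seed` argument, and all function names are assumptions; the source does not specify them. Points are (x, y).

```python
import numpy as np


def compute_homography(src, dst):
    """Estimate H (3x3) with dst ~ H @ src via DLT; src, dst are (N, 2), N >= 4."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The homography is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]


def apply_homography(h, pts):
    """Map (N, 2) points through H using homogeneous coordinates."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]


def ransac_homography(src, dst, iterations=1000, sample_size=4,
                      threshold=2.0, seed=0):
    """Return the homography with the most inliers and its boolean inlier mask."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    best_h = None
    for _ in range(iterations):
        idx = rng.choice(len(src), size=sample_size, replace=False)
        h = compute_homography(src[idx], dst[idx])
        # Reprojection error of every match under this candidate homography.
        err = np.linalg.norm(apply_homography(h, src) - dst, axis=1)
        inliers = err < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers, best_h = inliers, h
    return best_h, best_inliers
```

A common refinement (not stated in the source) is to re-run `compute_homography` on all inliers of the best model, which averages out noise from the 4-point fit. If the corners come from a detector that reports (row, col), swap the columns to (x, y) before calling these functions.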

|

|

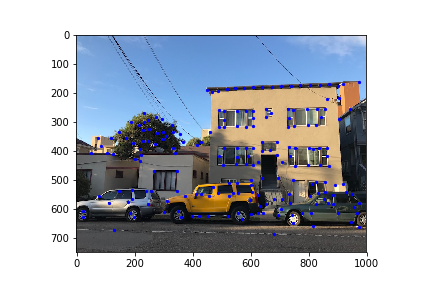

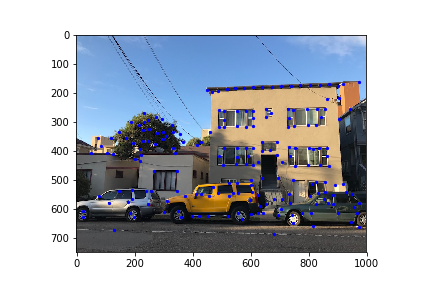

| Manual Result |

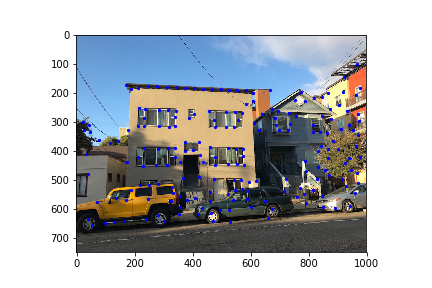

Automatic Result |

Original 1 | Original 2 | Result

|

|

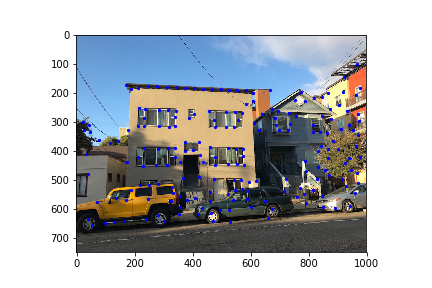

| Original 1 |

Original 2 |

|

| Result |

What have I learned?

The coolest thing I learned from this project is the process of suppressing feature points and matching them together. Combining ANMS, feature descriptor extraction, feature matching, and RANSAC greatly improves the accuracy of matching.