CS194-26 Project 6A: Image Warping and Mosaicing

Maxwell Gerber

Brief Project Overview

In this project we explore a wonderful application of image warping: using homographies to generate mosaics. From point correspondences entered manually by the user, we compute a transformation matrix H, which can then be used to map one image onto the coordinate space of another. We compose scenes made up of several photographs warped together and combined using various blending techniques.

Calculating Homographies
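
As a minimal sketch of how H could be computed from the manually entered correspondences, the snippet below sets up the standard linear system for a homography and solves it with an SVD. It assumes NumPy; the function names `compute_homography` and `apply_homography` are hypothetical and are not taken from the project's actual code, which may solve the system differently.

```python
import numpy as np

def compute_homography(src, dst):
    """Estimate a 3x3 H such that dst ~ H @ src in homogeneous coordinates.

    src, dst: (n, 2) arrays of (x, y) points with n >= 4.
    With more than 4 points this is a least-squares fit.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence contributes two linear constraints on H.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    A = np.array(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    # Normalise so H[2, 2] == 1 (assumes H[2, 2] is not zero).
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map (n, 2) points through H and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]
```

Clicking more than four correspondences and fitting them all at once tends to average out small errors in the hand-picked points.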

Image Rectification

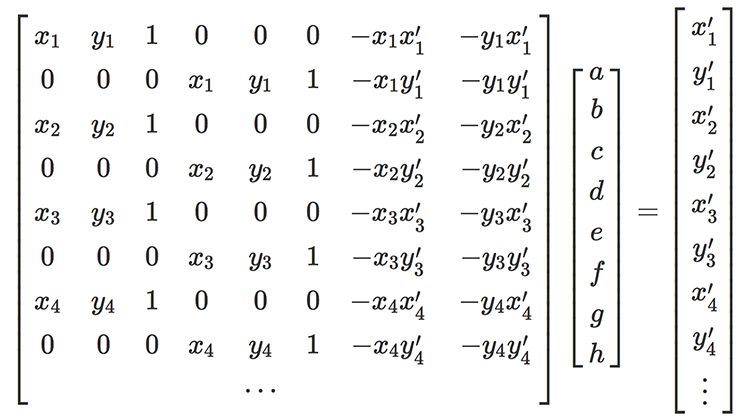

After implementing all of this, we have the ability to 'rectify' artifacts in images taken from different perspectives. This is an image of a poster hanging in my room taken at a pretty steep angle, which makes it hard to read the text. But, thanks to the power of homographies, we can warp the image to make it much easier to read! This shows that our image warping techniques are working well, and that we are calculating H correctly.
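
The warp itself can be sketched as an inverse mapping: for every pixel of the output canvas, look up where it came from in the source image. The snippet below is an illustrative NumPy version with nearest-neighbour sampling; the name `warp_image` and the choice of sampling are assumptions, not the project's actual code.

```python
import numpy as np

def warp_image(img, H, out_shape):
    """Inverse-warp img onto a canvas of shape (rows, cols).

    H maps source (x, y) to output (x, y). Each output pixel is fetched
    from the source through the inverse of H (nearest-neighbour sampling).
    Output pixels that fall outside the source stay zero.
    """
    out_h, out_w = out_shape
    ys, xs = np.mgrid[0:out_h, 0:out_w]
    coords = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)]).astype(float)
    src = np.linalg.inv(H) @ coords
    sx = np.rint(src[0] / src[2]).astype(int)
    sy = np.rint(src[1] / src[2]).astype(int)
    valid = (sx >= 0) & (sx < img.shape[1]) & (sy >= 0) & (sy < img.shape[0])
    out = np.zeros((out_h * out_w,) + img.shape[2:], dtype=img.dtype)
    out[valid] = img[sy[valid], sx[valid]]
    return out.reshape((out_h, out_w) + img.shape[2:])
```

Looping over output pixels rather than source pixels avoids holes in the result, which is why inverse warping is the usual choice for this kind of task. Bilinear interpolation would give smoother results than the rounding used here.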

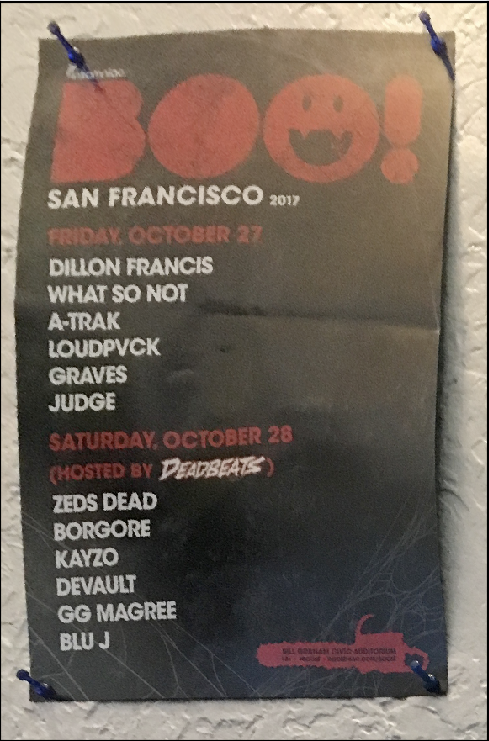

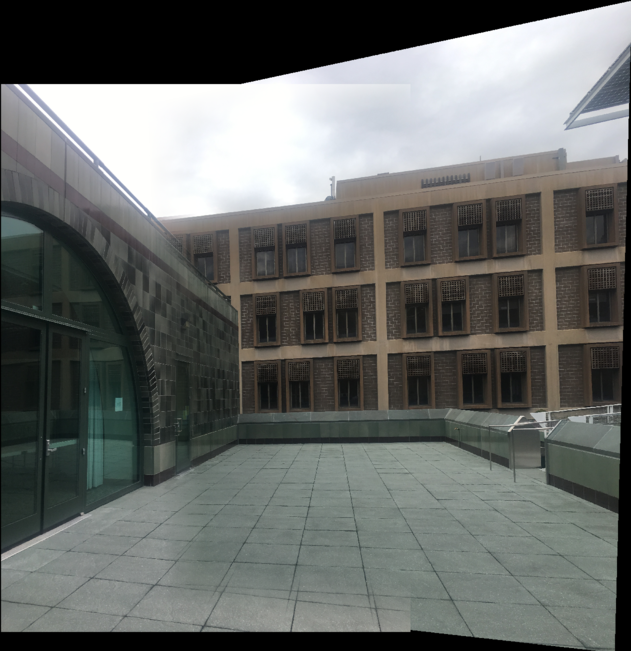

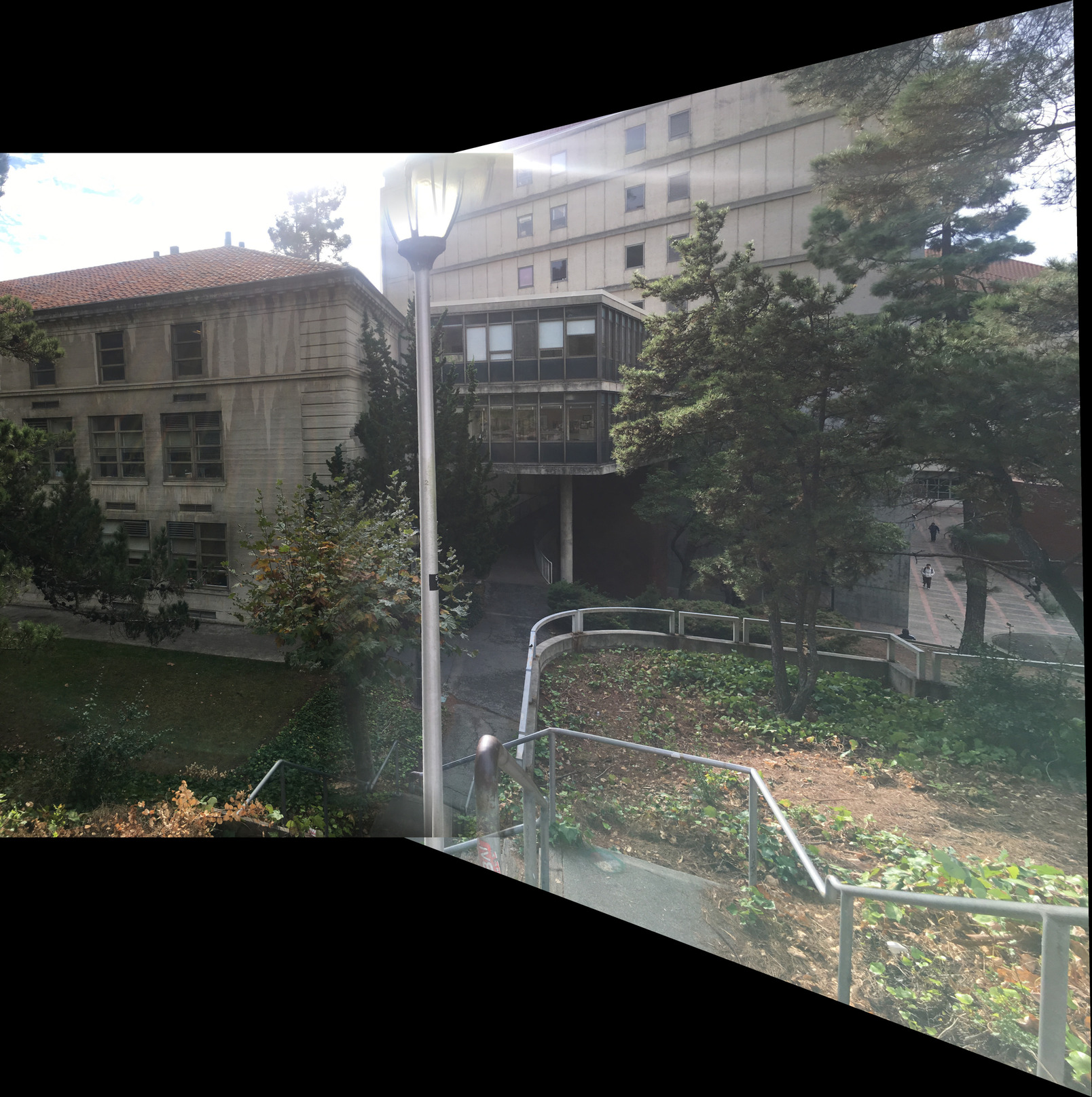

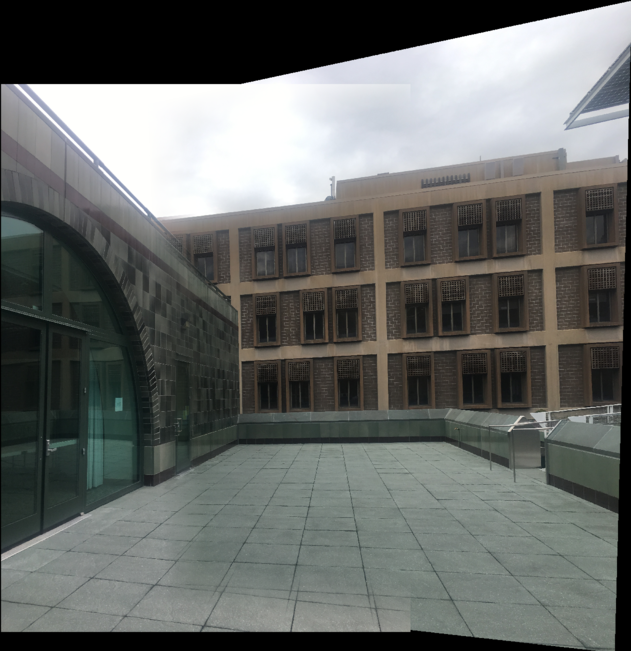

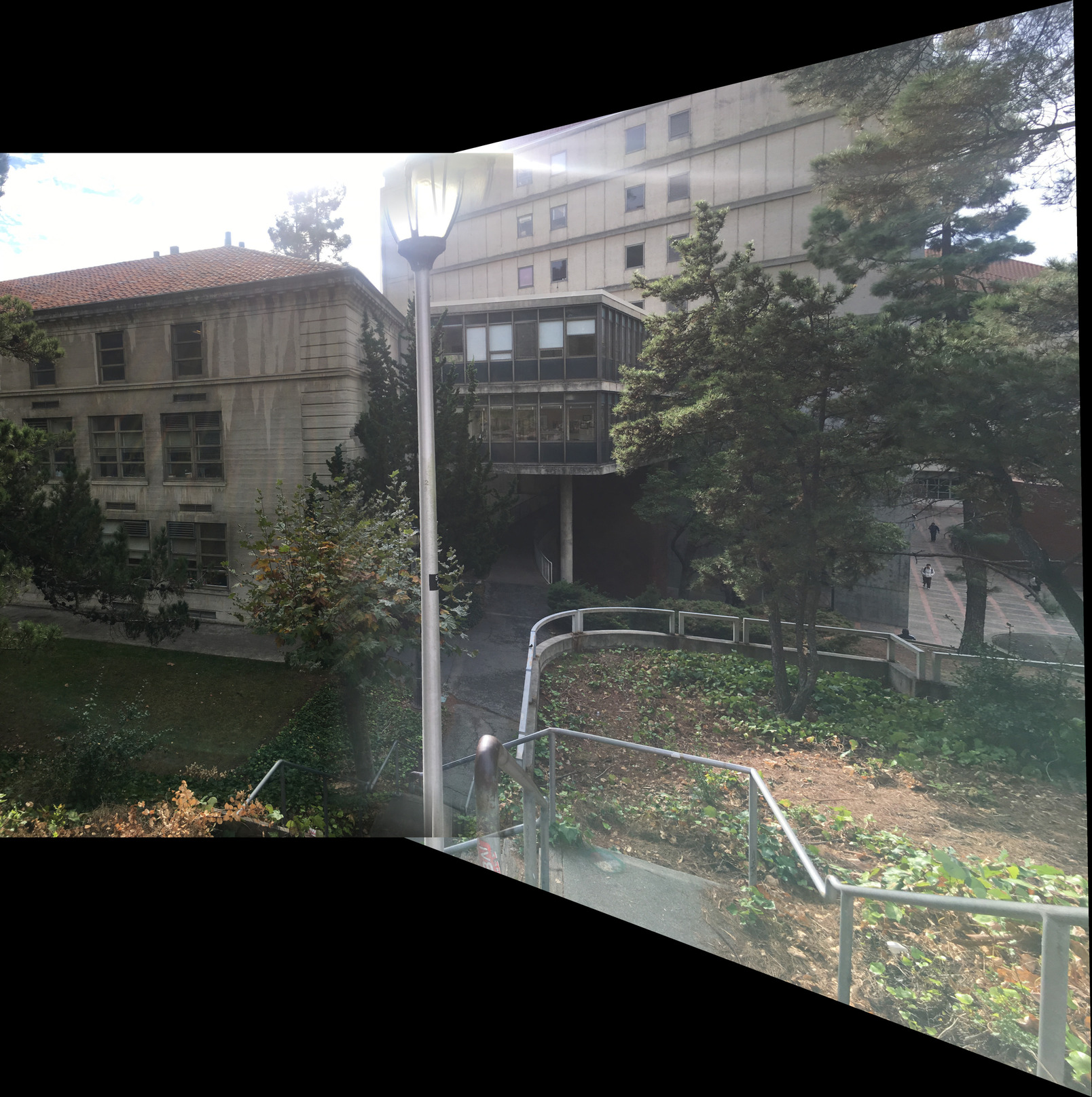

Image Mosaicing

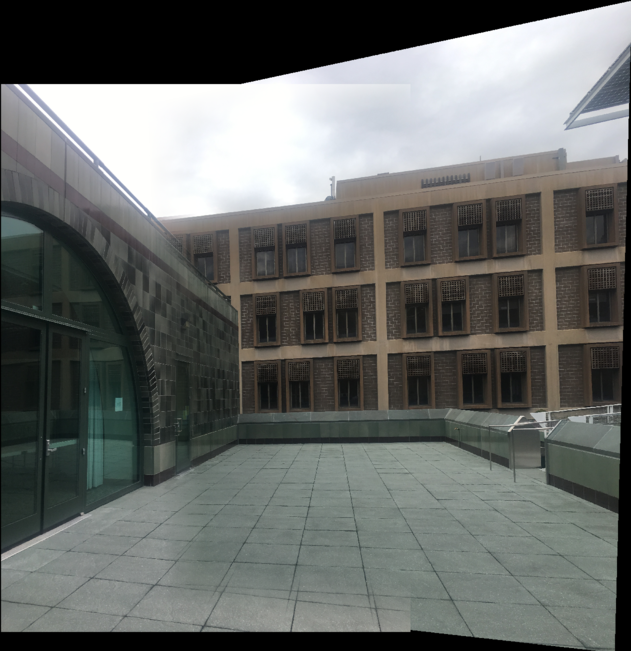

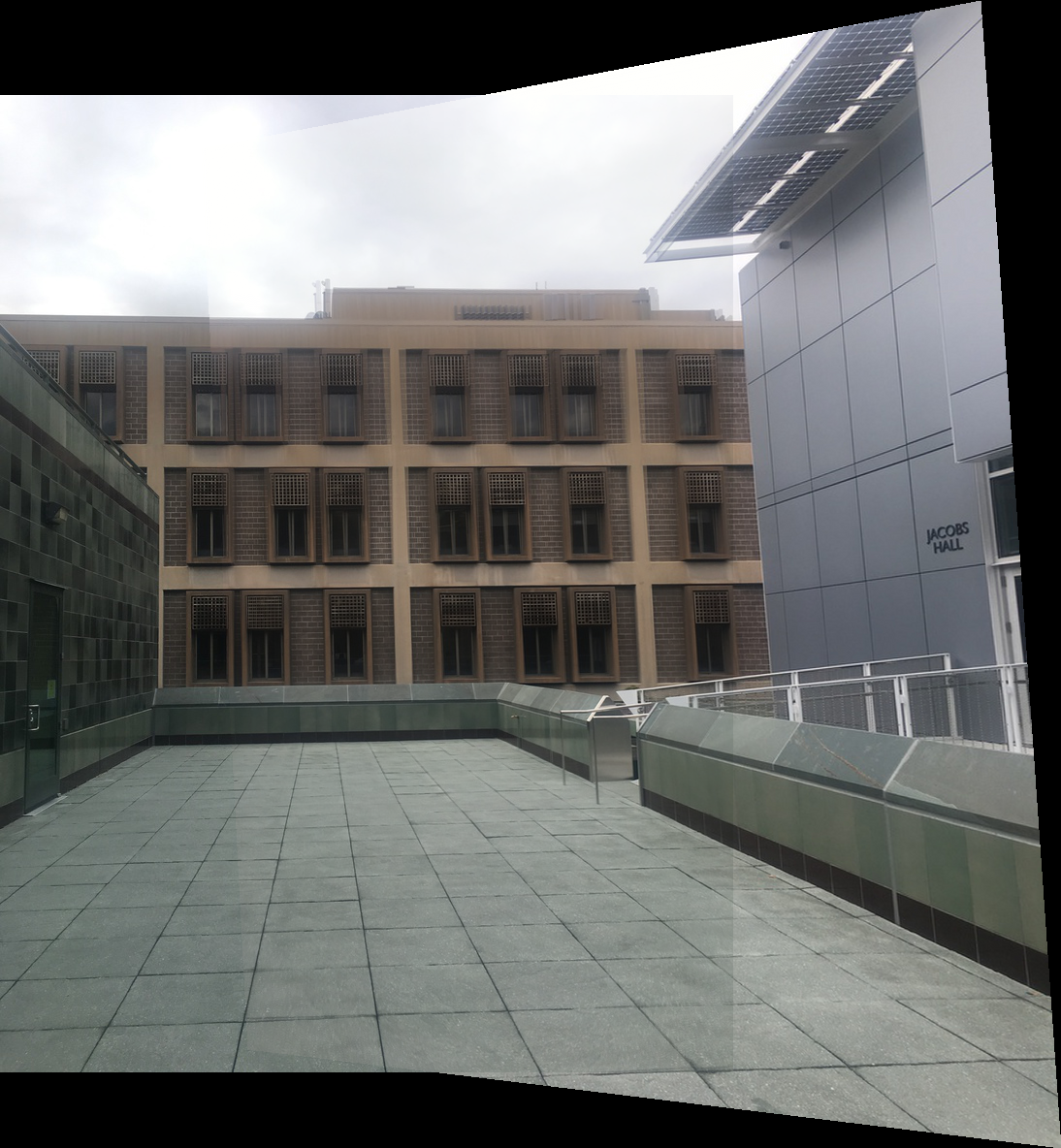

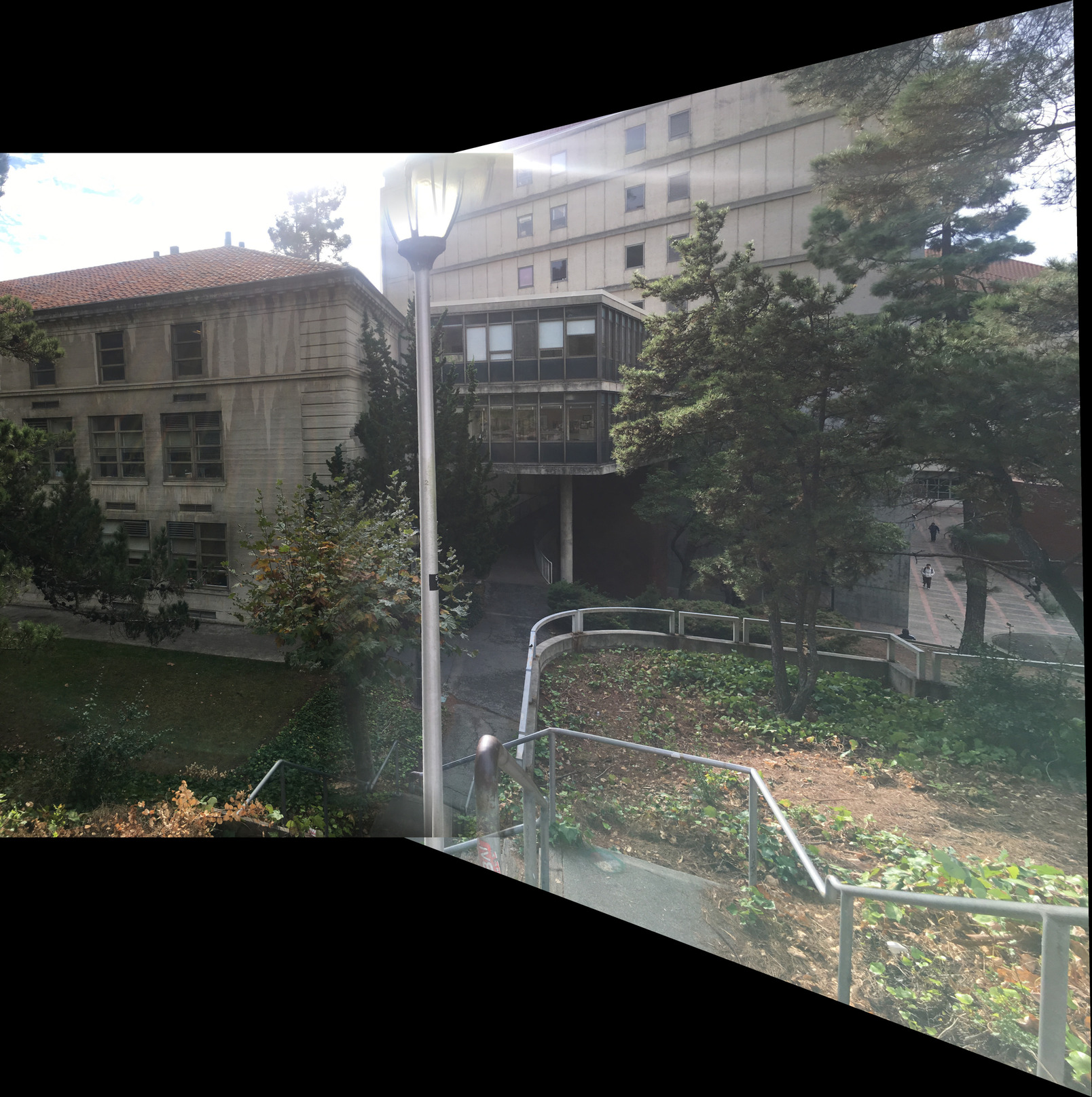

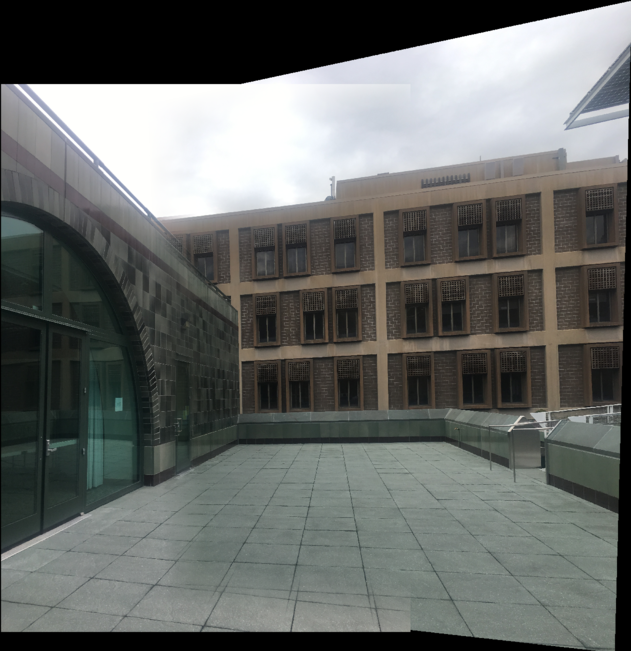

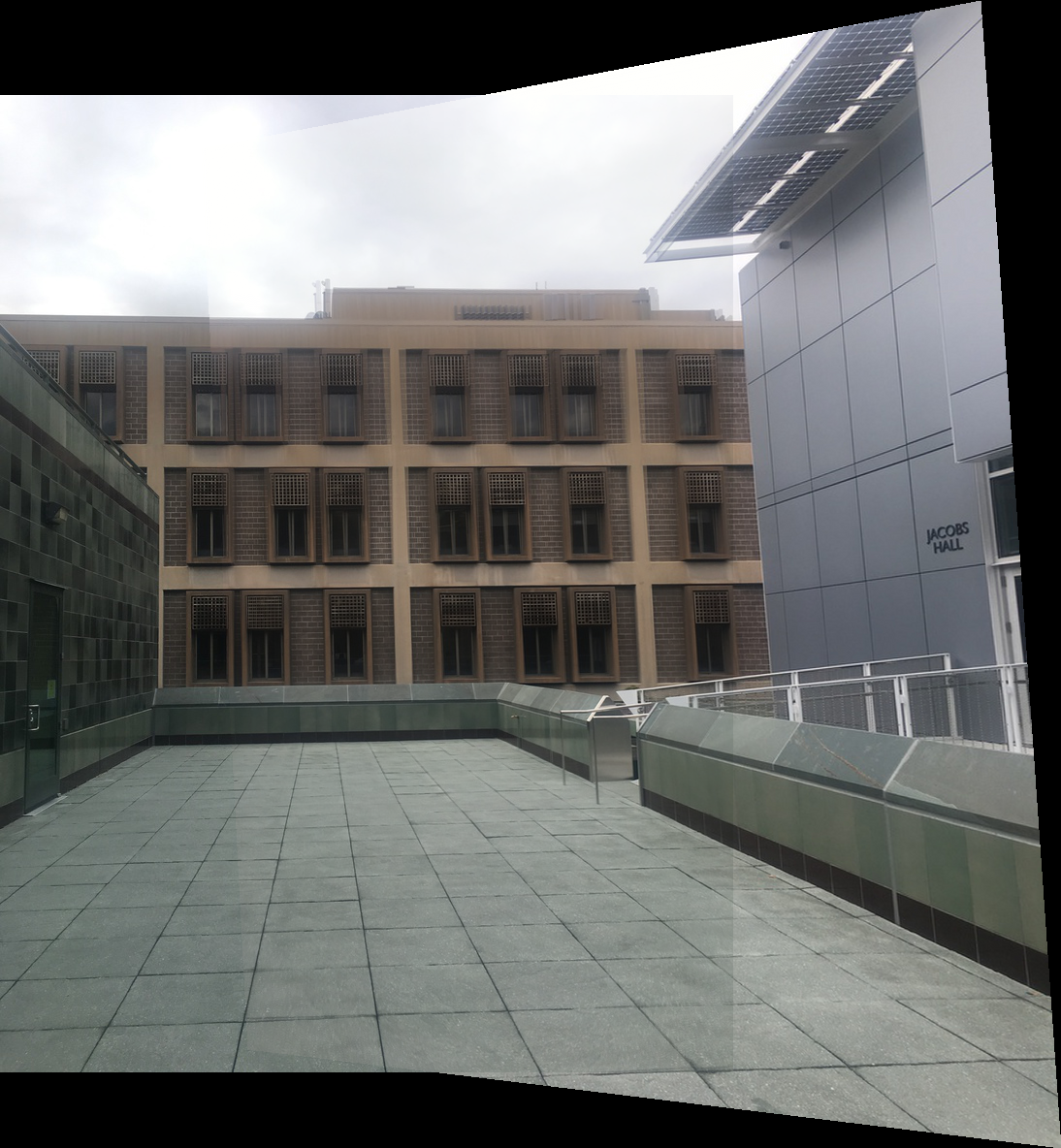

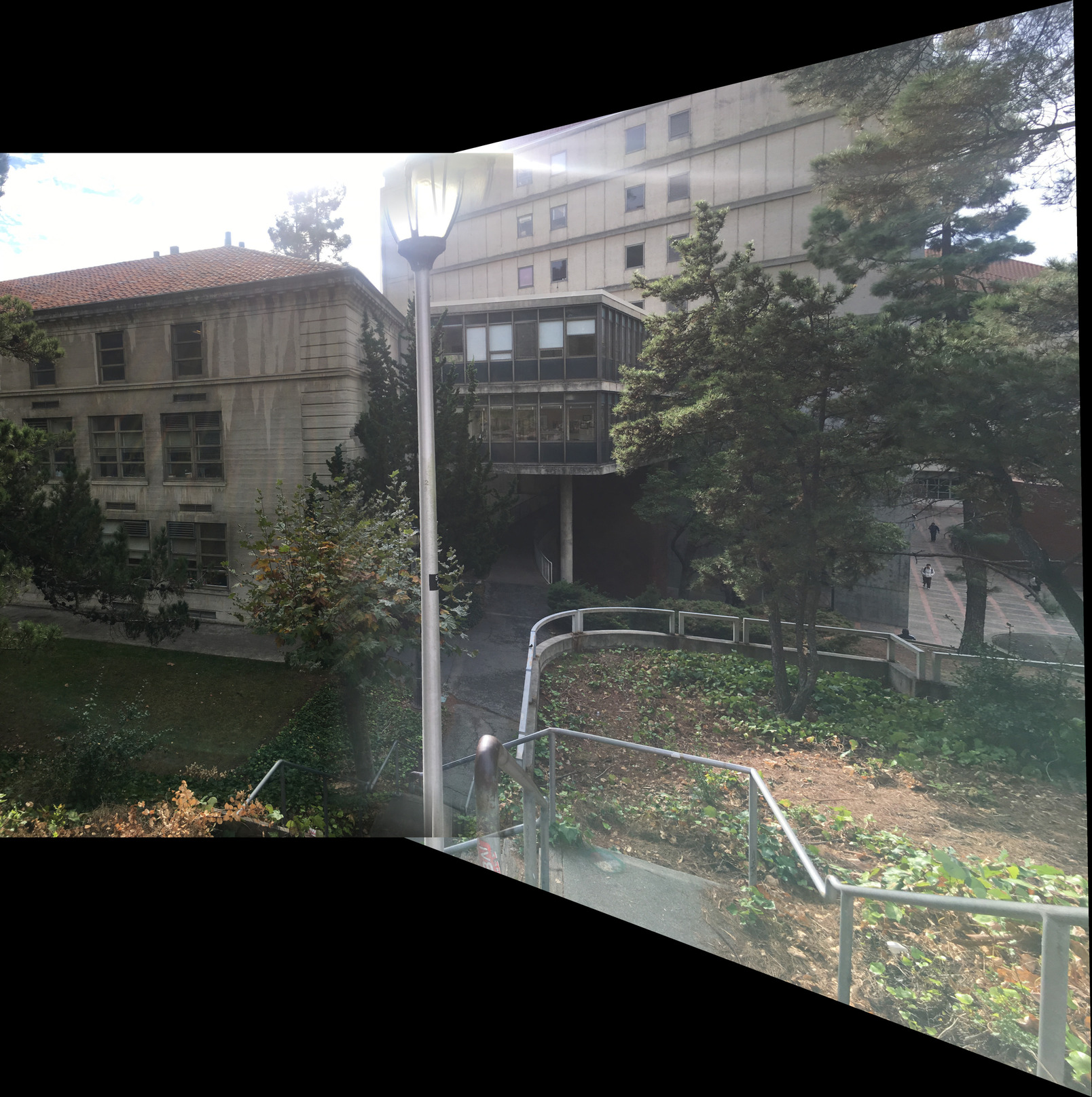

We can now use the tools developed to combine images to create awesome looking mosaics. The photos were taken using my iPhone camera and steadied by hand, which adds a significant amount of noise. It's awesome how well our algorithm can handle the noise produced. In order to make the results look better, I implemented linear blending at the edges. Whenever photos overlap, we blend the pixels proportionally. The closer a point is to the center of an image, the more weight we assign to that pixel. This creates smoother images overall, though small artifacts continue to pop up. Still, I am very happy with the results.
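
The center-weighted blending described above can be sketched roughly as follows. This is an illustrative NumPy version under a few assumptions: the images have already been warped onto a shared canvas, each weight map has been warped the same way and is zero where its image has no data, and the helper names `center_weight` and `blend` are hypothetical.

```python
import numpy as np

def center_weight(h, w):
    """Weight map that peaks at the image centre and falls off linearly
    towards the borders (kept strictly positive at the border pixels)."""
    wy = 1.0 - np.abs(np.linspace(-1.0, 1.0, h + 2)[1:-1])
    wx = 1.0 - np.abs(np.linspace(-1.0, 1.0, w + 2)[1:-1])
    return np.outer(wy, wx)

def blend(images, weights, eps=1e-8):
    """Per-pixel weighted average of same-sized images.

    images:  list of (H, W) or (H, W, C) float arrays on a shared canvas.
    weights: list of (H, W) weight maps, zero where an image has no data.
    """
    num = np.zeros_like(images[0], dtype=float)
    den = np.zeros(images[0].shape[:2], dtype=float)
    for img, wt in zip(images, weights):
        wt_b = wt if img.ndim == 2 else wt[..., None]
        num += img * wt_b
        den += wt
    den_b = den if num.ndim == 2 else den[..., None]
    # eps avoids division by zero where no image covers the canvas.
    return num / np.maximum(den_b, eps)
```

Where only one image covers a pixel, the weighted average reduces to that image's value, so the blending only changes the overlap region.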

Bells and Whistles: Image Mosaicing Across Time (Failure)

I thought it would be really cool to find image correspondences across time, lining up new color photos with old grayscale photos from a long time ago. It turned out to be much harder than expected, because it's really difficult to find two photos taken at different times that line up. Here are two photos of the Golden Gate Bridge and the best resulting mosaic that I got (out of many tries).

Feature Matching for Autostitching

Now that we have the ability to blend and warp images given a homography matrix, we will investigate generating that homography matrix automatically. We will do this by implementing a simplified version of the MOPS paper discussed in class. This will involve implementing Harris Interest Point Detectors, Adaptive Non-Maximal Suppression, Feature Descriptor extraction, and Feature Matching. Once we have aligned features between two images, we can use the 4-point RANSAC algorithm as described in class to compute our homography matrix H, as before.

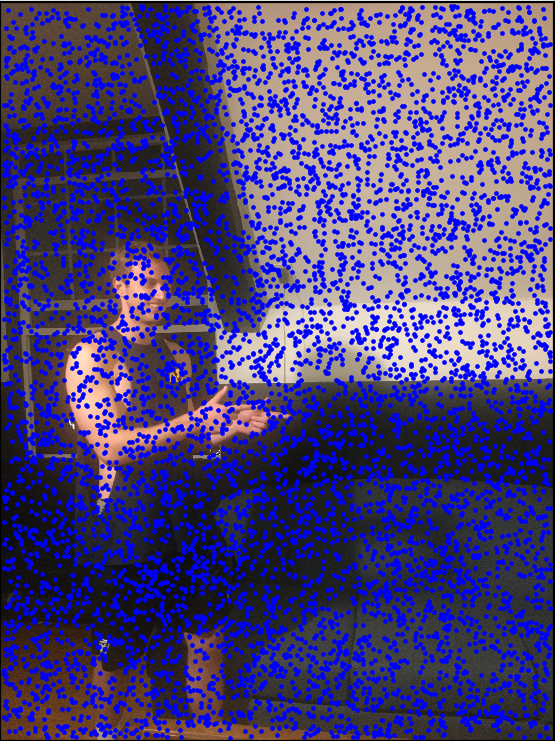

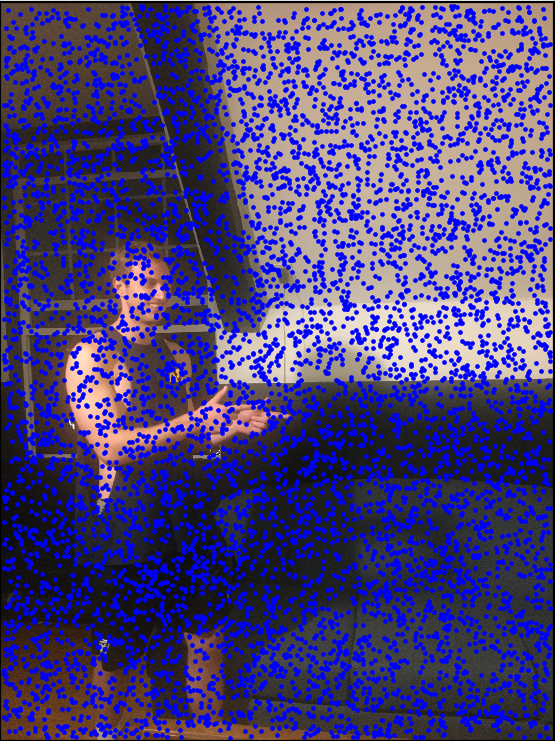

Harris Corner Detection

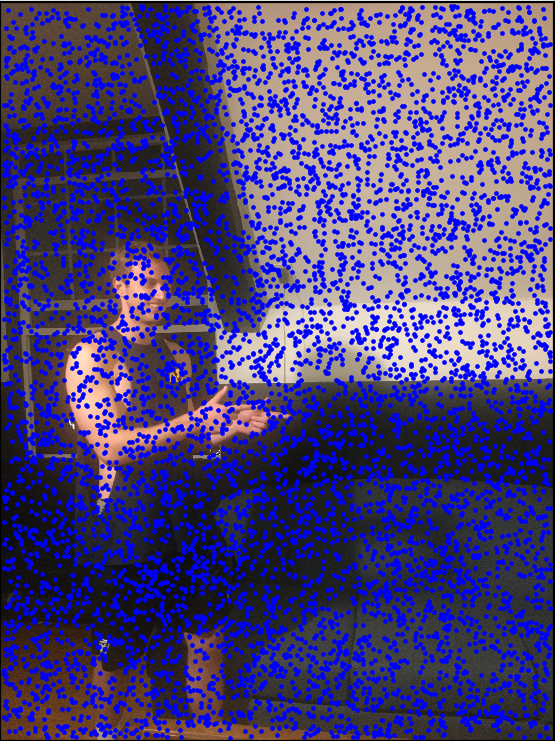

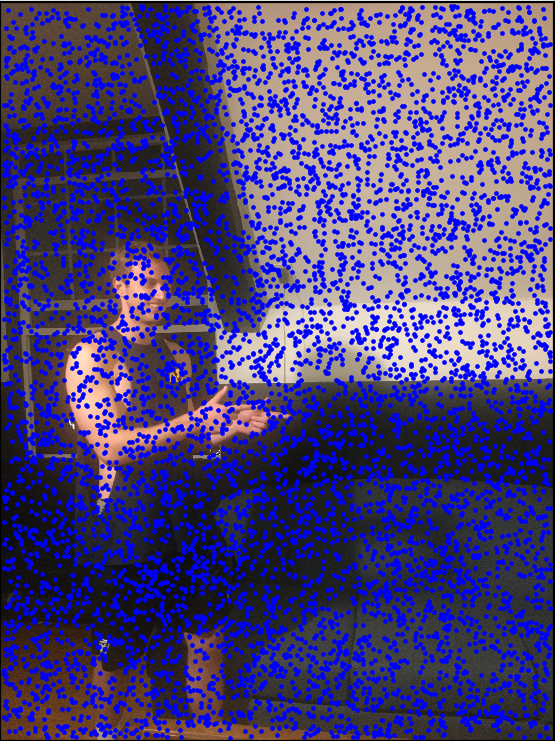

We first use Harris Corner Detection to detect points of interest in the images. Here is an example of Rob with the Harris Corner features overlaid. There are a huge number of Harris Corner features in this image - over 300,000! I have decided to only show 1/50th of the markers to make it easier to look at.
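
For readers who want to see what a Harris-style corner strength looks like in code, here is a rough NumPy-only sketch. It is not the detector actually used for the image above; the helper names, the Gaussian window with `sigma=1.0`, and the determinant-over-trace form of the response are all assumptions made for illustration.

```python
import numpy as np

def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian blur built from 1-D convolutions (zero-padded borders)."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x**2 / (2 * sigma**2))
    k /= k.sum()
    blur_rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, blur_rows)

def harris_response(gray, sigma=1.0, eps=1e-8):
    """Corner strength per pixel from the smoothed structure tensor."""
    iy, ix = np.gradient(gray.astype(float))  # derivatives along rows, columns
    sxx = gaussian_blur(ix * ix, sigma)
    syy = gaussian_blur(iy * iy, sigma)
    sxy = gaussian_blur(ix * iy, sigma)
    det = sxx * syy - sxy**2
    trace = sxx + syy
    # Large only when the gradient varies in two directions (a corner);
    # near zero on flat regions and along straight edges.
    return det / (trace + eps)
```

Interest points would then be taken as local maxima of this response map, which is why a textured photo can easily produce hundreds of thousands of candidates.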

Adaptive Non-Maximal Suppression

In order to thin out the number of points being used, we implement Adaptive Non-Maximal Suppression as described in the MOPS paper. This ensures we get "good" corner points with high corner strength that are scattered roughly evenly throughout the entire image. Here, we pull 100 points from the 300,000 initially given to us.
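
The idea behind the suppression step can be sketched as follows: each point gets a radius equal to its distance to the nearest sufficiently stronger point, and the points with the largest radii are kept. This is an illustrative NumPy version; the function name `anms` and the robustness factor `c_robust=0.9` are assumptions rather than values taken from this project.

```python
import numpy as np

def anms(points, strengths, num_keep=100, c_robust=0.9):
    """Return indices of the num_keep points with the largest suppression radii.

    points:    (n, 2) array of corner coordinates.
    strengths: (n,) array of corner strengths.
    """
    points = np.asarray(points, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Pairwise squared distances. This is O(n^2) in memory, so a very large
    # corner set would need to be thresholded or processed in chunks first.
    diff = points[:, None, :] - points[None, :, :]
    dist2 = (diff**2).sum(axis=-1)
    # Point j suppresses point i when strengths[i] < c_robust * strengths[j].
    suppressed_by = strengths[:, None] < c_robust * strengths[None, :]
    dist2 = np.where(suppressed_by, dist2, np.inf)
    # Suppression radius (squared); the global maximum keeps radius infinity.
    radii = dist2.min(axis=1)
    return np.argsort(-radii)[:num_keep]
```

A weak but isolated corner can therefore beat a strong corner that sits right next to an even stronger one, which is what spreads the selected points across the image.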

Feature Descriptor Extraction and Matching

Next we need to implement Feature Descriptor extraction. From each Harris Corner, we extract a 40px by 40px box, before downsampling to 8px by 8px and normalizing for bias and gain. Once we do that for each image, we can find each patch's nearest neighbor in the other image. In order to further eliminate bad matches, we utilize the Russian Granny algorithm as discussed in class. If a patch closely resembles several other patches, then we assume it doesn't really match any of those and throw it out.
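
A rough sketch of both steps is given below. It assumes NumPy, uses 5x5 block averaging as a simple stand-in for blurring and subsampling, and rejects ambiguous matches by comparing the nearest and second-nearest neighbor distances. The function names and the `ratio=0.6` threshold are assumptions for illustration, not values from this project.

```python
import numpy as np

def extract_descriptor(gray, row, col, patch=40, size=8):
    """Return a bias/gain-normalised size*size descriptor, or None near the border."""
    half = patch // 2
    if row - half < 0 or col - half < 0:
        return None
    if row + half > gray.shape[0] or col + half > gray.shape[1]:
        return None
    window = gray[row - half:row + half, col - half:col + half].astype(float)
    step = patch // size
    # Average each step x step cell: 40x40 -> 8x8 with the defaults.
    small = window.reshape(size, step, size, step).mean(axis=(1, 3))
    small = small - small.mean()                    # remove bias
    return (small / (small.std() + 1e-8)).ravel()   # remove gain

def match_descriptors(desc_a, desc_b, ratio=0.6):
    """Return (i, j) index pairs whose best match is clearly better than the runner-up.

    desc_a: (n, d) array, desc_b: (m, d) array with m >= 2.
    Distances are squared Euclidean distances.
    """
    d2 = ((desc_a[:, None, :] - desc_b[None, :, :]) ** 2).sum(axis=-1)
    order = np.argsort(d2, axis=1)
    rows = np.arange(len(desc_a))
    best = d2[rows, order[:, 0]]
    second = d2[rows, order[:, 1]]
    keep = best < ratio * second
    return [(int(i), int(order[i, 0])) for i in rows[keep]]
```

The normalization makes the descriptor insensitive to overall brightness and contrast changes between the two photos, and the ratio check throws out patches that look like several candidates at once.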

RANSAC and Results

Finally we implement the RANSAC (Random Sample Consensus) method to finalize our results. We randomly select 4 correspondences between the images and construct a homography matrix from them. Then, we count how many of the other correspondences agree with this matrix; that is, applying the transformation to a point in one image lands it on the matching point in the other image to within a reasonable degree of error. These matching points are called inliers. We repeat this sampling step 1000 times to find the matrix that produces the most inliers, and use that for our final warp.
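
The loop described above can be sketched like this. It is a minimal NumPy illustration, not the project's code: the function names, the 2-pixel inlier tolerance `tol`, and the fixed random `seed` are all assumptions.

```python
import numpy as np

def fit_homography(src, dst):
    """Homography from >= 4 correspondences via SVD (defined up to scale)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    _, _, vt = np.linalg.svd(np.array(rows, dtype=float))
    return vt[-1].reshape(3, 3)

def project(H, pts):
    """Apply H to (n, 2) points and divide out the homogeneous coordinate."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, iters=1000, tol=2.0, seed=0):
    """Return the sampled H with the most inliers and the inlier mask."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best_H = np.eye(3)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        # Fit a candidate model to 4 random correspondences.
        idx = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[idx], dst[idx])
        # Degenerate samples can produce divisions by zero; treat those as misses.
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(H, src) - dst, axis=1)
        inliers = err < tol
        if inliers.sum() > best_inliers.sum():
            best_H, best_inliers = H, inliers
    return best_H, best_inliers
```

A common refinement, not shown here, is to refit the homography on all inliers of the winning model rather than using the 4-point estimate directly.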

The final auto-stitched images are shown below, compared to the manual images from before. I think the auto-stitched images seem more "proportional" than the manual ones, though significant ghosting artifacts still remain. I'm definitely happy with the results, though!

What I Learned

This project ended up being waaaay harder than I expected. I realize I'm really starting to hate linear algebra. Maybe that's just the Senioritis talking.