Project 6 Homographies and Automatic Mosaics

In this project we compute homographies between images and use them to perform perspective warps that align the images so they can be stitched into panoramas. Similar to the affine transformations we used in a previous project for face morphs, perspective transformations are defined by pointwise correspondences between matching features in each image. However, a perspective transform has 8 degrees of freedom rather than 6, so it can express warps that an affine map cannot, such as making parallel lines converge.

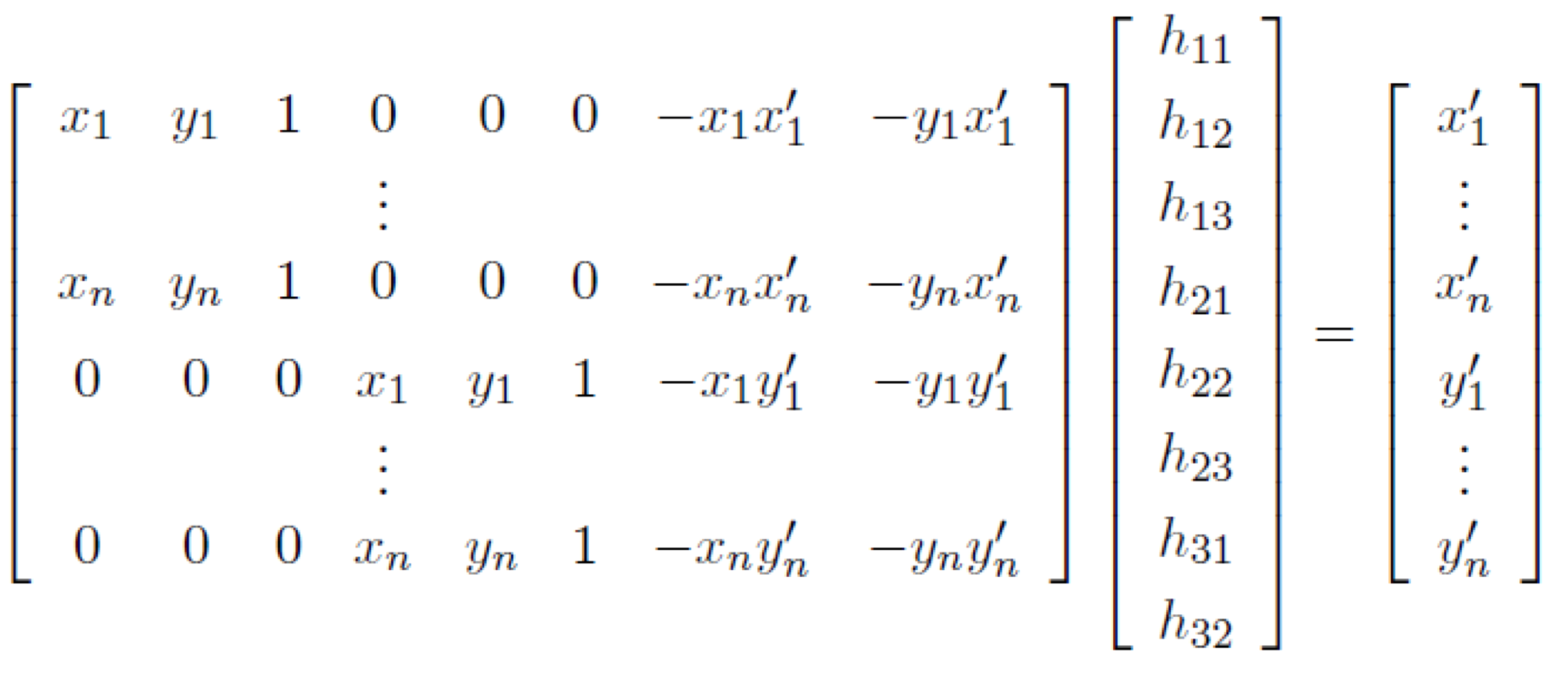

Here is the linear system that we solve to get the 8 unknowns of the final homography H. In part A we choose 4-8 point correspondences manually; with more than 4 the system is overdetermined, so we use the least-squares solution. In part B each RANSAC iteration picks 4 correspondences, which determine the homography exactly, so we can either use a matrix solver with the scale factor i fixed to 1 or take the solution from the V matrix of the SVD of the A matrix below.
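As a rough illustration of the SVD route, here is a minimal NumPy sketch. The function names `compute_homography` and `apply_homography` and the exact row layout of A are my own assumptions for this example, not necessarily the code used for the results on this page. It builds two rows of A per correspondence and takes the right singular vector with the smallest singular value, which handles both the exact 4-point case and the overdetermined case.

```python
import numpy as np

def compute_homography(src, dst):
    """Estimate a 3x3 H with dst ~ H @ src (homogeneous coordinates).

    src, dst: (N, 2) arrays of matching (x, y) points, N >= 4.
    Hypothetical helper for illustration only.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence contributes two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.array(rows)
    # The last row of V^T minimizes ||A h|| subject to ||h|| = 1.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    # Normalize so the bottom-right entry is 1 (assumes it is not close to 0).
    return H / H[2, 2]

def apply_homography(H, pts):
    """Warp (N, 2) points with H and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]
```

With exactly 4 points in general position the recovered H maps them exactly; with more points it is a least-squares fit in the algebraic sense, which is close to but not the same as minimizing pixel error.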

Part A: Homographies

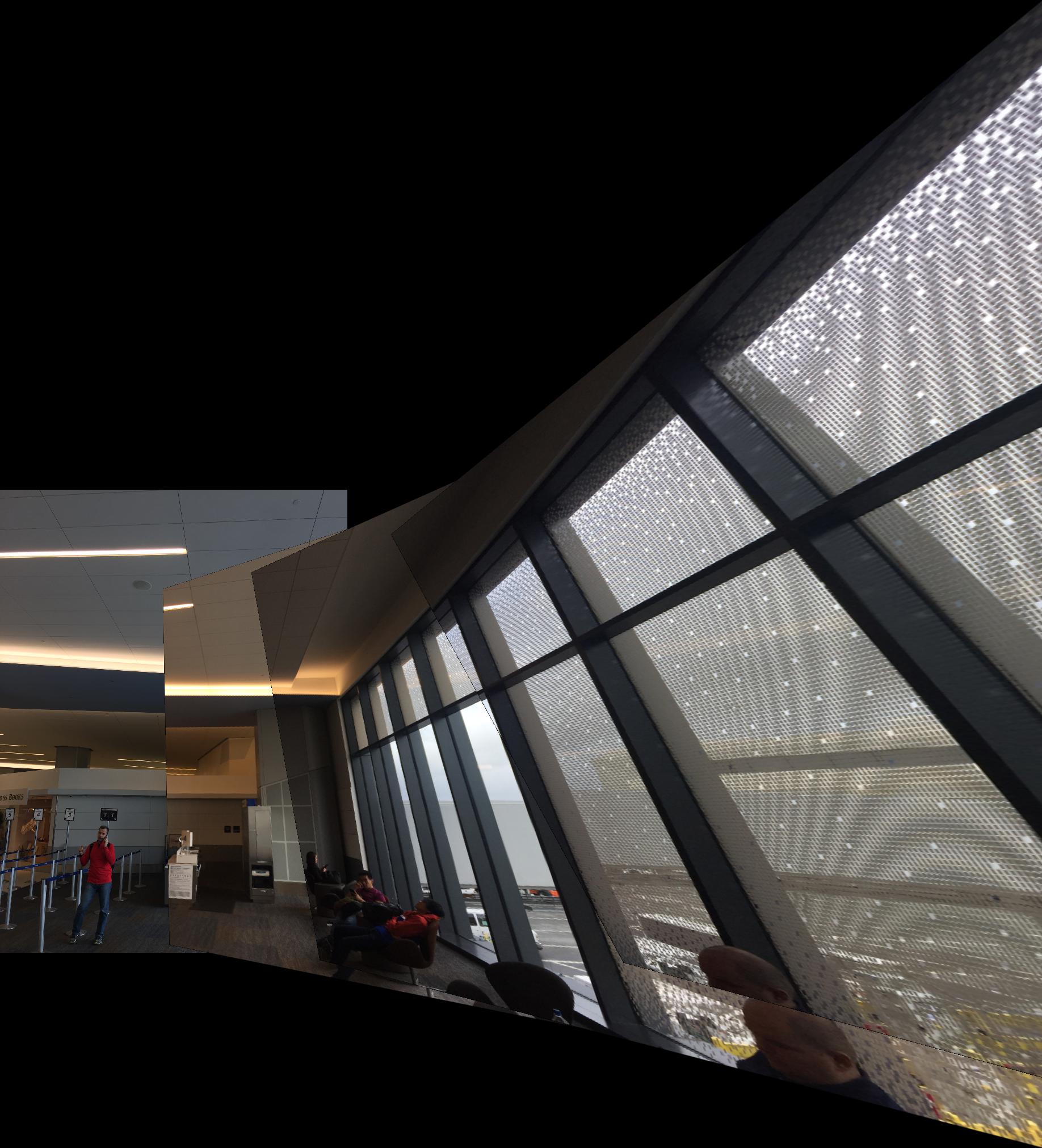

Here are the results of rectifying a couple of images I just took around LA.

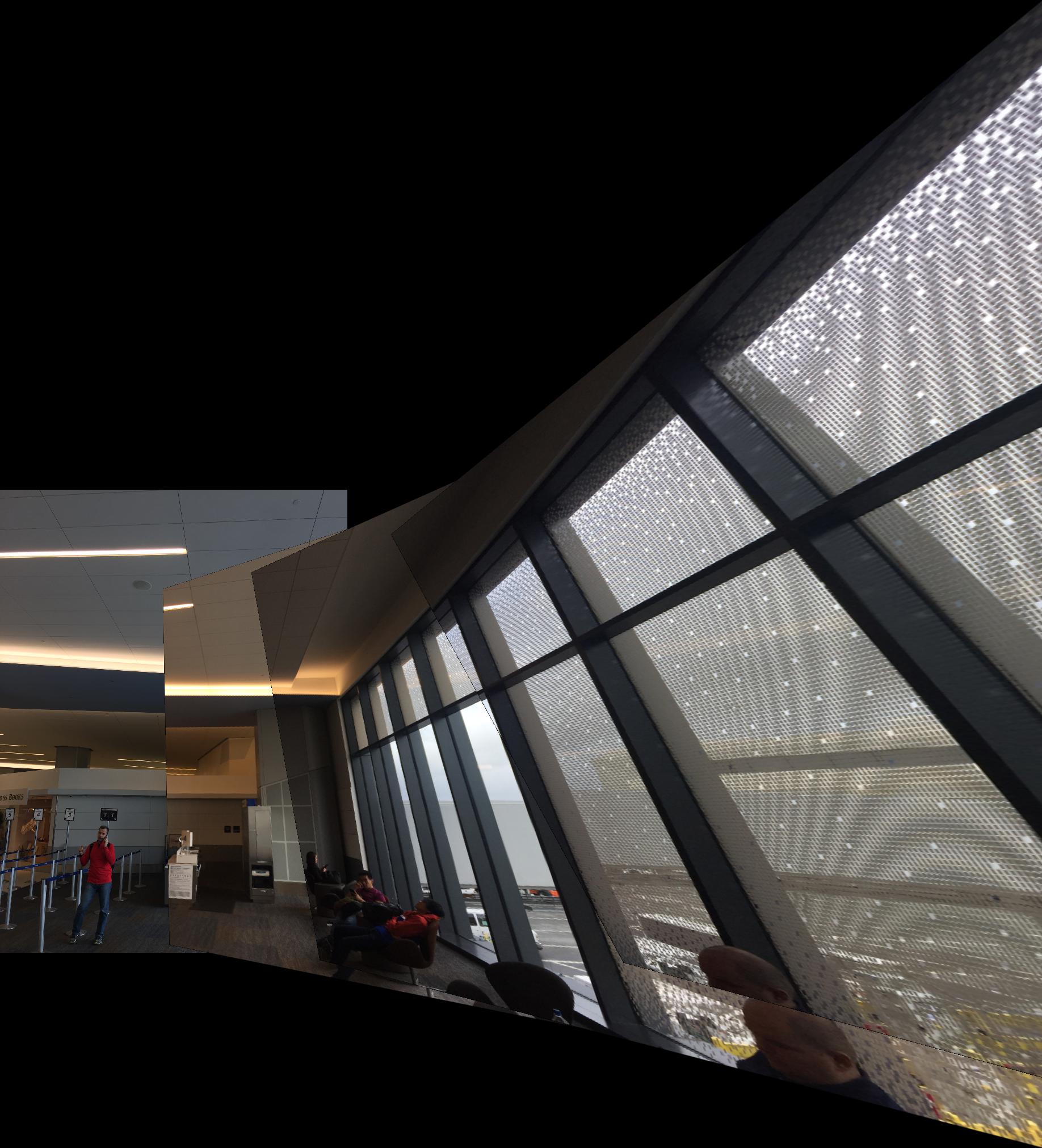

Stitching a Scene

Here are the results of stitching together several pictures in a scene. We apply the same process as used in the rectification iteratively to each subsequent image. I noticed that because I was warping the images in order, the amount of warp compounded for each subsequent image due to the perspective differences and linear projection. This could have been alleviated by starting from the central image and warping every other image onto it instead.

Part B: Feature Extraction and Automatic Alignment

In this part we use several algorithms to find an alignment between images automatically in order to create a mosaic. First, we need to select features automatically in each image and define matches between similar features. Small differences in the homography matrix can produce big variations in the final result, especially if the selected points span a small or degenerate area, so we then use an iterative algorithm (RANSAC) to find the best homography we can.

Harris corner detection

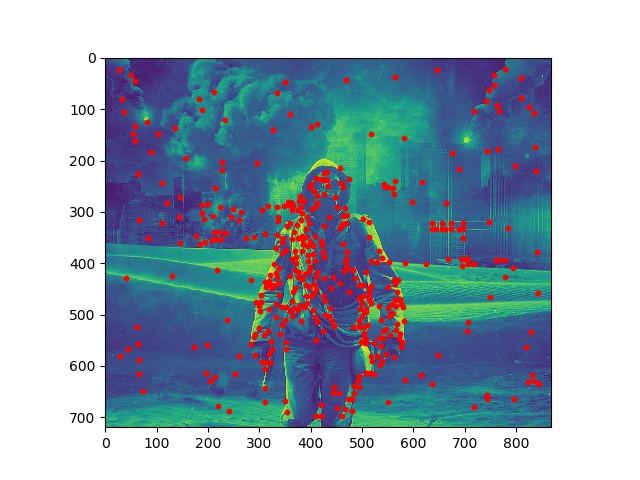

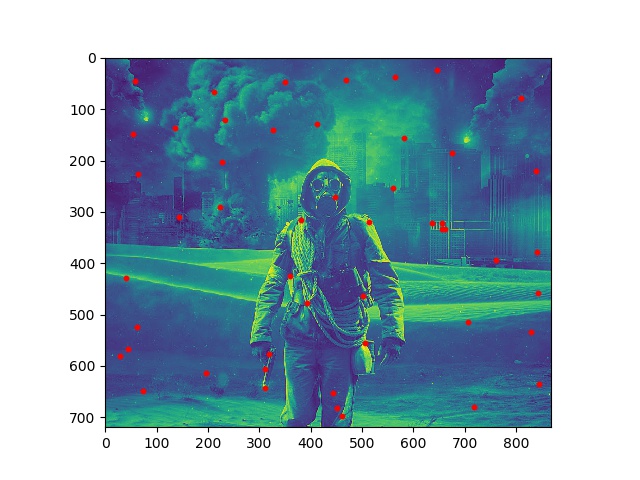

We use the Harris corners algorithm to produce a heat map of corner strength (large image derivatives in both the x and y directions) and then keep the points with the highest response in the map. The figures in this section show the result of some of my tuning of the thresholds.
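To make the heat map concrete, here is a small NumPy-only sketch of a Harris response. It rests on several assumptions that may differ from what produced the figures: the classic response det(M) - k * trace(M)^2 with k = 0.05, a box window instead of a Gaussian, and the hypothetical names `box_blur` and `harris_response`.

```python
import numpy as np

def box_blur(img, radius):
    """Average over a (2*radius+1)^2 window with a separable 1D filter (zero padded)."""
    k = np.ones(2 * radius + 1) / (2 * radius + 1)
    out = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, out)

def harris_response(img, radius=2, k=0.05):
    """Return a corner-strength map with the same shape as img (grayscale)."""
    iy, ix = np.gradient(img.astype(float))  # derivatives along rows (y) and columns (x)
    # Entries of the structure tensor M, summed over a local window.
    sxx = box_blur(ix * ix, radius)
    syy = box_blur(iy * iy, radius)
    sxy = box_blur(ix * iy, radius)
    det = sxx * syy - sxy ** 2
    trace = sxx + syy
    # Positive at corners, negative along straight edges, near zero in flat regions.
    return det - k * trace ** 2
```

Candidate corners would then be the local maxima of this map above a chosen threshold, which is the threshold being tuned.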

ANMS

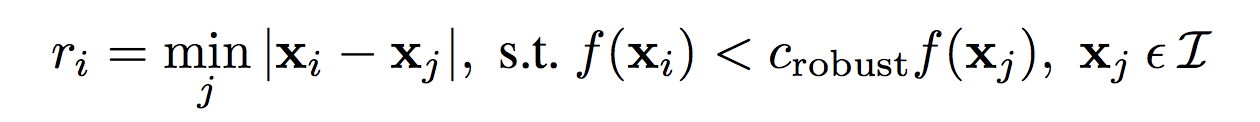

Then we can use the Adaptive Non-Maximal Suppression algorithm (ANMS) as described in the paper to compute the "most important points". For each point, we define the suppression radius as the distance to the nearest other point whose Harris corner strength is larger by some threshold factor.

Therefore, the point with the highest Harris corner strength has the largest suppression radius. Then, we pick the 250 points with the largest radii from the set.
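The suppression radius computation can be sketched as follows. This is a brute-force O(N^2) version under my own assumptions: the function name `anms` is hypothetical, corner strengths are assumed positive, and the robustness factor `c_robust = 0.9` stands in for "some threshold".

```python
import numpy as np

def anms(coords, strengths, num_keep=250, c_robust=0.9):
    """Return indices of the num_keep points with the largest suppression radii.

    coords: (N, 2) point locations; strengths: (N,) positive corner strengths.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Pairwise squared distances between all points, shape (N, N).
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = (diff ** 2).sum(axis=2)
    # stronger[i, j] is True when point j is sufficiently stronger than point i.
    stronger = strengths[:, None] < c_robust * strengths[None, :]
    # Only sufficiently stronger neighbours can suppress a point.
    dist2 = np.where(stronger, dist2, np.inf)
    radii2 = dist2.min(axis=1)  # infinite for the globally strongest point
    order = np.argsort(-radii2)  # largest radius first
    return order[:num_keep]
```

Because the selection is by radius rather than raw strength, the kept points end up spread across the image instead of clustering on the few strongest corners.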

Here's the ANMS-filtered set of points on the same test pic.

Feature extraction and matching

At this point, we have 250 points from each image, but we need to find out which features actually correspond to each other. We take a 40x40 pixel patch centered on each point and downsample it to 8x8 to increase robustness. Then, we compute the SSD between each feature from the left image and every feature from the right. We keep a pair of features as a match only if no other feature from the right set has an SSD close to the lowest SSD, as judged by some given threshold. Finally, we export those matches.
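Here is a minimal sketch of the descriptor and matching steps. The names `extract_descriptor` and `match_features` are hypothetical, the downsampling is done by averaging 5x5 blocks (one of several reasonable choices), and the threshold is expressed as a ratio between the best and second-best SSD with an assumed value of 0.6.

```python
import numpy as np

def extract_descriptor(img, row, col, patch=40, out=8):
    """Flattened out x out descriptor from a patch x patch window around (row, col).

    Assumes the point is at least patch // 2 pixels away from every image border.
    """
    half = patch // 2
    window = img[row - half:row + half, col - half:col + half].astype(float)
    step = patch // out
    # Average each step x step block, which blurs and subsamples in one pass.
    small = window.reshape(out, step, out, step).mean(axis=(1, 3))
    return small.ravel()

def match_features(desc_left, desc_right, ratio=0.6):
    """Return (K, 2) index pairs (left, right) that pass the ratio check."""
    # ssd[i, j] = sum of squared differences between left i and right j.
    diff = desc_left[:, None, :] - desc_right[None, :, :]
    ssd = (diff ** 2).sum(axis=2)
    order = np.argsort(ssd, axis=1)
    idx = np.arange(len(desc_left))
    best, second = order[:, 0], order[:, 1]
    # Keep a match only when the best SSD is clearly lower than the runner-up.
    keep = ssd[idx, best] < ratio * ssd[idx, second]
    return np.stack([idx[keep], best[keep]], axis=1)
```

A lower `ratio` gives fewer but more reliable matches, which leaves RANSAC with fewer outliers to reject.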

RANSAC

Lastly we will run the RANSAC algorithm to compute the best homography between the matching features. At each iteration we do the following:

- Sample 4 matching features from the left and right images.

- Compute an exact homography between the two sets of points.

- Warp all left points with the computed homography and compare SSDs with the right points.

- Count a point as an inlier if the SSD between its warped position and the corresponding right point is below some epsilon threshold, and keep track of the best homography so far (the one producing the most inliers).
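The loop described in the steps above can be sketched like this. The helper names, the iteration count, and the pixel threshold `eps` are assumptions for illustration. The final refit on all inliers of the best model is a common extra step that I have added here; it is not part of the list above.

```python
import numpy as np

def fit_homography(src, dst):
    """Homography (up to scale) from >= 4 correspondences via SVD."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.array(rows, dtype=float))
    return vt[-1].reshape(3, 3)

def warp_points(H, pts):
    """Apply H to (N, 2) points and divide by the homogeneous coordinate."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(left, right, iters=500, eps=2.0, seed=0):
    """Return (H, inlier_mask) for matched (N, 2) point arrays left -> right."""
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(left), dtype=bool)
    for _ in range(iters):
        # Sample 4 matches and fit an exact homography to them.
        sample = rng.choice(len(left), size=4, replace=False)
        H = fit_homography(left[sample], right[sample])
        # Degenerate samples can divide by ~0; those errors become inf/nan.
        with np.errstate(divide="ignore", invalid="ignore"):
            err = ((warp_points(H, left) - right) ** 2).sum(axis=1)
        inliers = err < eps ** 2  # nan compares False, so it is never an inlier
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers.sum() < 4:
        raise ValueError("RANSAC found fewer than 4 inliers")
    # Assumed extra step: refit on every inlier of the best model.
    H = fit_homography(left[best_inliers], right[best_inliers])
    return H / H[2, 2], best_inliers
```

The result depends strongly on `eps`: too small and correct matches are rejected because of pixel noise, too large and wrong matches are counted as inliers.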

Here's a stitching of a random house.

Here are the results compared to the manual extraction:

Conclusion

This was a very cool exploration of several multi-purpose algorithms and it was great to see everything finally working in practice. Given more time I would've definitely tried adding rotation invariance or a more robust measure of the inlier count in the RANSAC algorithm, since the final result was extremely sensitive to that threshold.