This project is about stitching multiple photographs together into larger composite images. We warp and blend images of the same scenery that are slightly shifted from one another, similar to a panorama.

First, we need to recover the parameters of the transformation between each pair of images: a homography H that maps a point p in one image to its corresponding point p′ in the other, p′ = Hp.
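Writing H out entry by entry makes the linear system explicit. Here p = (x, y) and p′ = (x′, y′) are taken in homogeneous coordinates, and the bottom-right entry of H is assumed fixed to 1 (a common normalization), which leaves the eight unknowns a through h:

$$
\begin{bmatrix} w x' \\ w y' \\ w \end{bmatrix} =
\begin{bmatrix} a & b & c \\ d & e & f \\ g & h & 1 \end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
$$

Dividing by $w = gx + hy + 1$ and rearranging gives two equations that are linear in the unknowns:

$$
\begin{aligned}
a x + b y + c - g x x' - h y x' &= x' \\
d x + e y + f - g x y' - h y y' &= y'
\end{aligned}
$$

so each correspondence contributes two rows:

$$
\begin{bmatrix} x & y & 1 & 0 & 0 & 0 & -x x' & -y x' \\ 0 & 0 & 0 & x & y & 1 & -x y' & -y y' \end{bmatrix}
\begin{bmatrix} a & b & c & d & e & f & g & h \end{bmatrix}^\top
= \begin{bmatrix} x' \\ y' \end{bmatrix}
$$

Stacking N correspondences gives a 2N × 8 matrix; four points with no three collinear are the minimum needed to pin down H.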

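H has eight unknowns, a, b, c, d, e, f, g and h (the bottom-right entry is fixed to 1). We solve for them by setting up a system of linear equations, Ah = b, and solving it with least squares. Each point correspondence contributes two rows to A, one for x′ and one for y′, so with N correspondences A is 2N × 8. At least four correspondences are required, and using more makes the estimate less sensitive to noise in the selected points.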
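A minimal NumPy sketch of this least-squares fit follows. The function name `compute_homography` and the `(N, 2)` array layout for the points are assumptions for illustration, not the original project code; `np.linalg.lstsq` does the actual solve.

```python
import numpy as np


def compute_homography(pts1, pts2):
    """Estimate H such that pts2 ~ H @ pts1 in homogeneous coordinates.

    pts1, pts2: (N, 2) arrays of matching (x, y) points, N >= 4.
    Returns a 3x3 matrix whose bottom-right entry is fixed to 1.
    """
    pts1 = np.asarray(pts1, dtype=float)
    pts2 = np.asarray(pts2, dtype=float)
    n = pts1.shape[0]
    if n < 4 or pts2.shape != pts1.shape:
        raise ValueError("need at least 4 matching (x, y) pairs")

    A = np.zeros((2 * n, 8))
    b = np.zeros(2 * n)
    for i, ((x, y), (xp, yp)) in enumerate(zip(pts1, pts2)):
        # x' row: a*x + b*y + c - g*x*x' - h*y*x' = x'
        A[2 * i] = [x, y, 1, 0, 0, 0, -x * xp, -y * xp]
        # y' row: d*x + e*y + f - g*x*y' - h*y*y' = y'
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -x * yp, -y * yp]
        b[2 * i] = xp
        b[2 * i + 1] = yp

    # Least-squares solution of the (possibly overdetermined) system A h = b
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.append(h, 1.0).reshape(3, 3)
```

With exactly four points the system is square and the fit is exact; with more points, least squares spreads the error from imprecise clicks across all correspondences.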

After solving for the transformation matrix H, I rectify an image by inverse warping: for every pixel p′ in the output image, I apply H⁻¹ to find the location p in the original image whose value belongs at that coordinate. To test this, I selected the four corners of flat, rectangular objects in an image and rectified them into a rectangle.
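The inverse-warping step can be sketched in NumPy as below. The name `inverse_warp`, the use of nearest-neighbour sampling (rather than bilinear interpolation) and leaving out-of-bounds pixels at 0 are simplifying assumptions, not necessarily what the original implementation did.

```python
import numpy as np


def inverse_warp(img, H, out_shape):
    """Warp img onto an output canvas of size out_shape = (rows, cols).

    H maps source coordinates p = (x, y, 1) to output coordinates p' = H p.
    For each output pixel p' we look up p = H^-1 p' in the source image using
    nearest-neighbour sampling; pixels that land outside img stay 0.
    """
    rows, cols = out_shape
    ys, xs = np.indices((rows, cols))
    # 3 x M matrix of homogeneous output coordinates (x, y, 1)
    p_out = np.stack([xs.ravel(), ys.ravel(), np.ones(rows * cols)])
    p_src = np.linalg.inv(H) @ p_out
    with np.errstate(divide="ignore", invalid="ignore"):
        sx = np.round(p_src[0] / p_src[2])
        sy = np.round(p_src[1] / p_src[2])
    # NaN/inf coordinates compare as False, so they are excluded here too
    valid = (sx >= 0) & (sx < img.shape[1]) & (sy >= 0) & (sy < img.shape[0])
    out = np.zeros((rows * cols,) + img.shape[2:], dtype=img.dtype)
    out[valid] = img[sy[valid].astype(int), sx[valid].astype(int)]
    return out.reshape((rows, cols) + img.shape[2:])
```

For rectification, H would be fitted from the four clicked corners to the four corners of a chosen target rectangle, and `out_shape` set large enough to hold that rectangle. Iterating over output pixels (rather than pushing source pixels forward) guarantees that every output pixel receives a value, with no holes.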

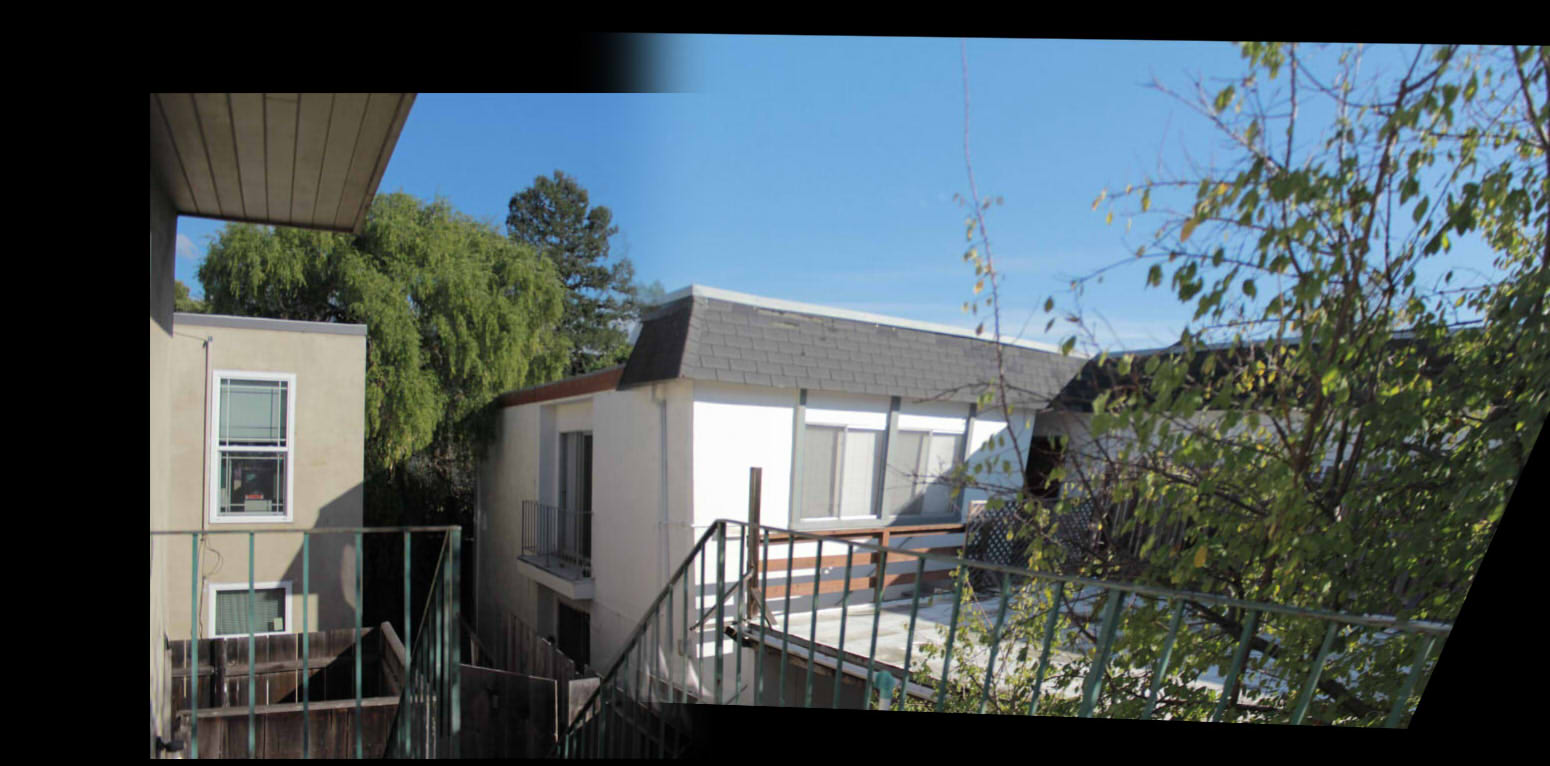

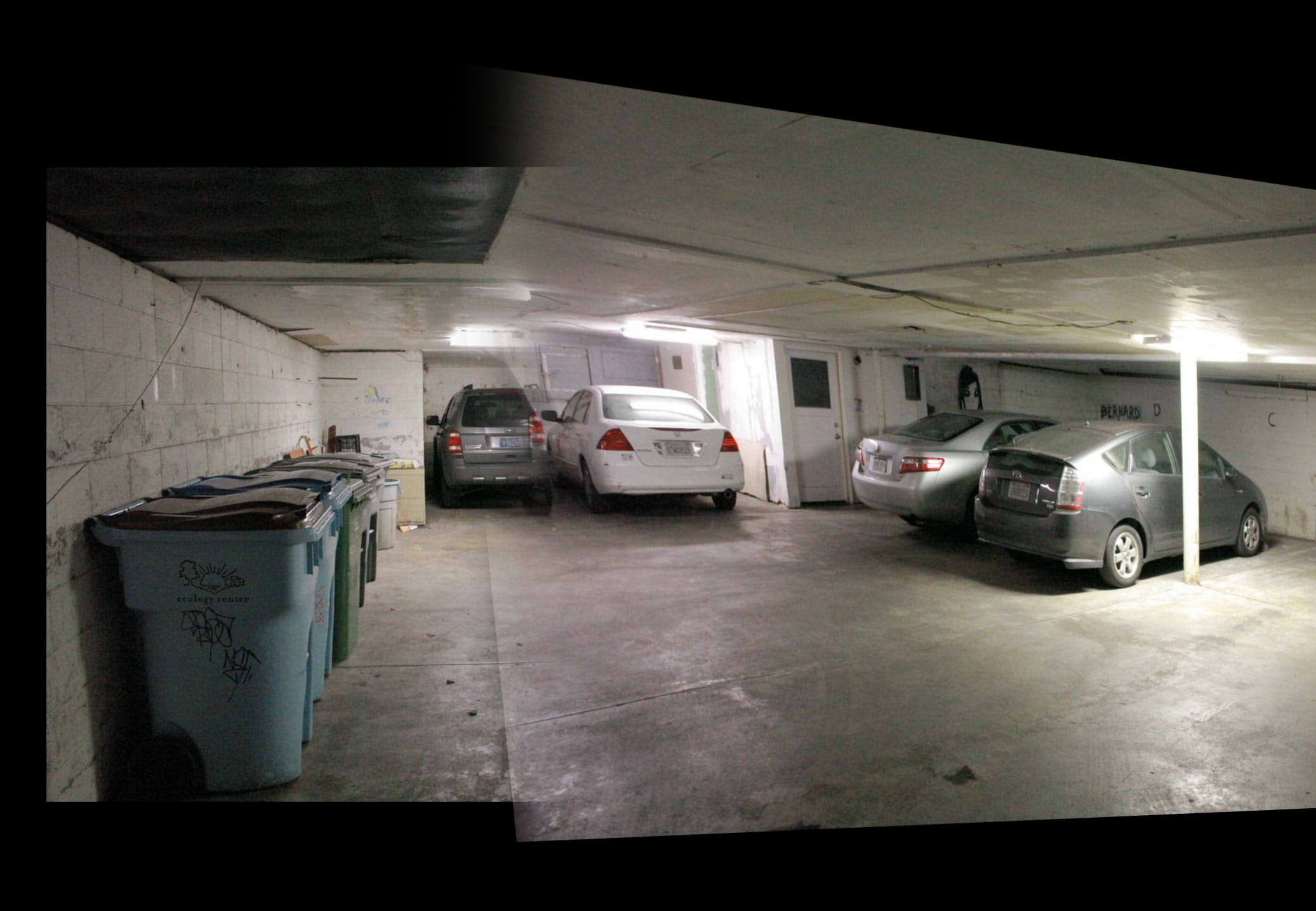

Using this technique, I built an image mosaic. I warped the second image into the first image's coordinate frame, then blended the overlapping pixels with alpha blending to get a smoother transition between the two images.
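A sketch of weighted alpha blending, assuming both images have already been warped onto the same canvas and that each comes with a per-pixel weight map that is 0 outside its footprint. The function name `alpha_blend` and this weight-map interface are illustrative assumptions.

```python
import numpy as np


def alpha_blend(img1, img2, alpha1, alpha2):
    """Blend two images that already live on the same canvas.

    alpha1/alpha2 are per-pixel weights (0 outside each image's footprint).
    Each output pixel is the weight-normalised average, so regions covered by
    one image copy it directly and overlaps get a weighted mix.
    """
    a1 = alpha1.astype(float)
    a2 = alpha2.astype(float)
    if img1.ndim == 3:  # broadcast the 2-D weights over colour channels
        a1 = a1[..., None]
        a2 = a2[..., None]
    total = a1 + a2
    safe = np.where(total > 0, total, 1.0)  # avoid 0/0 where neither image exists
    return (a1 * img1 + a2 * img2) / safe
```

Passing binary footprint masks as the weights averages the overlap equally. Weights that fall off linearly toward each image's edge (feathering) make the seam fade gradually instead, which is what produces the smoother transition.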

I now have a better understanding of how panoramas are implemented, and I am curious what other improvements and optimizations are used in commercial panorama software, such as the panorama mode on iPhones.

Previously, we created a mosaic by manually picking corresponding points. The goal of this part is to choose corresponding points in both images automatically.

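Using the Harris corner detection function provided to us, we detect prominent features in an image. It looks for points where the intensity gradient is strong in both the x and y directions, which distinguishes corners from edges (strong gradient in one direction only) and flat regions (weak gradients).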
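The detector we were given is not reproduced here. As a rough, NumPy-only sketch of what such a detector computes, the function below builds the Harris response R = det(M) − k·trace(M)² from the structure tensor M of the image gradients. The names `box_filter` and `harris_response`, the 3x3 box window (instead of the more usual Gaussian window) and k = 0.05 are all assumptions chosen for brevity.

```python
import numpy as np


def box_filter(img, r):
    """Mean over a (2r+1)x(2r+1) window, with edges padded by replication."""
    padded = np.pad(img, r, mode="edge")
    k = 2 * r + 1
    out = np.zeros(img.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)


def harris_response(img, k=0.05, r=1):
    """Harris corner response for a 2-D grayscale image.

    Large positive values: corners (strong gradients in two directions).
    Negative values: edges. Near zero: flat regions.
    """
    Iy, Ix = np.gradient(img.astype(float))  # derivatives along rows (y), columns (x)
    # Entries of the structure tensor M, averaged over a local window
    Sxx = box_filter(Ix * Ix, r)
    Syy = box_filter(Iy * Iy, r)
    Sxy = box_filter(Ix * Iy, r)
    det = Sxx * Syy - Sxy ** 2
    trace = Sxx + Syy
    return det - k * trace ** 2
```

Feature points would then be taken as local maxima of this response above some threshold.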

Original images

Harris points

The Harris corner detector yields too many feature points, bunched up together. To filter out points that are too close to one another, we use Adaptive Non-Maximal Suppression (ANMS). For each point, we compute its suppression radius: the distance to the nearest neighbor that is sufficiently stronger. I count a neighbor as sufficiently stronger if its strength, scaled down to 90%, is still greater than the point's own strength. I then keep the 250 points with the largest radii, which gives a more even spread across the image.
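A vectorised NumPy sketch of ANMS as described, with the 90% factor as `c_robust = 0.9` and 250 points kept by default. The function name `anms` and its interface (an `(N, 2)` coordinate array plus an `(N,)` strength array, returning indices) are assumptions. It builds an N × N distance matrix, so it suits a few thousand candidate points rather than tens of thousands.

```python
import numpy as np


def anms(coords, strengths, num_points=250, c_robust=0.9):
    """Adaptive non-maximal suppression.

    coords: (N, 2) point positions; strengths: (N,) corner responses.
    Radius r_i = distance to the nearest point j with
    strengths[i] < c_robust * strengths[j]. Returns the indices of the
    num_points points with the largest radii.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Pairwise squared distances, N x N
    diff = coords[:, None, :] - coords[None, :, :]
    d2 = np.sum(diff ** 2, axis=-1)
    # j suppresses i if j is still stronger than i after scaling j by c_robust
    suppresses = strengths[:, None] < c_robust * strengths[None, :]
    d2 = np.where(suppresses, d2, np.inf)
    np.fill_diagonal(d2, np.inf)  # a point never suppresses itself
    radii = np.sqrt(d2.min(axis=1))  # inf for points nothing can suppress
    order = np.argsort(-radii, kind="stable")
    return order[:num_points]
```

The globally strongest point always has an infinite radius and is kept first. A weak point sitting right next to a strong one gets a tiny radius and is dropped early, which is what thins out the clusters.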

We then assign each feature point a feature descriptor. For each feature point, I take a 40x40 patch centered on the point, apply a Gaussian blur, and bias/gain-normalize it. I then downsample the patch by a factor of 5 to an 8x8 patch and flatten it into a vector of length 64, which is normalized to a mean of 0 and a standard deviation of 1 to give the final descriptor.

To find matching features between two images, we compare descriptors using the sum of squared differences (SSD). For each descriptor, we find its two nearest neighbors in the other image, 1-NN and 2-NN, and keep the match only if the ratio of their distances, 1-NN/2-NN, is below a threshold. A small ratio means the best match is much closer than the next-best one; if the two are too close, the match is ambiguous and we discard the feature point. This filters out many more points.
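The descriptor extraction and ratio-test matching can be sketched as follows. All function names are hypothetical. The Gaussian σ = 2, sampling every 5th pixel starting at offset 2 (the centre of each 5x5 block), and the ratio threshold 0.6 applied to SSD values are assumed parameters, since the text does not give them. The sketch normalizes only once, on the final 64-vector. Because bias/gain normalization is an affine change and blurring and downsampling are linear, an earlier normalization of the patch is undone by the final one, so the descriptor comes out the same.

```python
import numpy as np


def gaussian_kernel1d(sigma):
    """Normalised 1-D Gaussian kernel covering +/- 3 sigma."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def blur(patch, sigma):
    """Separable Gaussian blur; edges are padded by replication so the size is unchanged."""
    k = gaussian_kernel1d(sigma)
    r = len(k) // 2
    p = np.pad(patch, r, mode="edge")
    p = np.apply_along_axis(np.convolve, 1, p, k, mode="valid")  # filter each row
    p = np.apply_along_axis(np.convolve, 0, p, k, mode="valid")  # then each column
    return p


def extract_descriptor(img, y, x, size=40, step=5, sigma=2.0):
    """64-D descriptor for grayscale img at (row y, col x), or None near the border."""
    half = size // 2
    if y < half or x < half or y + half > img.shape[0] or x + half > img.shape[1]:
        return None
    patch = blur(img[y - half:y + half, x - half:x + half].astype(float), sigma)
    small = patch[step // 2::step, step // 2::step]  # 40x40 -> 8x8
    vec = small.ravel()
    return (vec - vec.mean()) / (vec.std() + 1e-8)  # bias/gain normalisation


def match_features(desc1, desc2, ratio=0.6):
    """(i, j) pairs where desc2[j] is the SSD nearest neighbour of desc1[i]
    and ssd(1-NN) < ratio * ssd(2-NN). desc2 must have at least two rows."""
    ssd = np.sum((desc1[:, None, :] - desc2[None, :, :]) ** 2, axis=-1)
    order = np.argsort(ssd, axis=1)
    rows = np.arange(len(desc1))
    best = ssd[rows, order[:, 0]]
    second = ssd[rows, order[:, 1]]
    keep = best < ratio * second  # multiplying avoids dividing by a zero SSD
    return [(int(i), int(order[i, 0])) for i in np.flatnonzero(keep)]
```

Stacking the non-`None` descriptors from each image into `(N, 64)` arrays and passing them to `match_features` gives the candidate correspondences that are handed on to RANSAC.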

Many of the remaining feature points are still unusable for auto-stitching, so we use RANSAC to remove the outliers. In each iteration, we randomly select four pairs of corresponding feature points (one point from each image per pair) and compute a homography from them. We then find the inliers: the matches for which the L2 distance between a point and the homography-transformed position of its partner is smaller than a threshold ε. We repeat this many times (1000 iterations in my case) and keep the iteration with the most inliers. These inliers are the corresponding feature points we need to warp the images together as in Part A.

Although I believe I implemented RANSAC correctly, I was unable to finish this part. The function from the previous part that computes a homography from two sets of points seems to fail on these inputs, and I was not able to find the cause in time.
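A self-contained NumPy sketch of the RANSAC loop described above. It includes its own compact least-squares homography fit so that it runs on its own. The names `fit_homography`, `project` and `ransac_homography`, the threshold ε = 2 pixels and the fixed random seed are assumptions; the 1000 iterations come from the text. The final refit on all inliers of the best model is a common extra step that the text does not mention.

```python
import numpy as np


def fit_homography(p1, p2):
    """Least-squares H (bottom-right entry 1) with p2 ~ H p1; p1, p2 are (N, 2), N >= 4."""
    x, y = p1[:, 0], p1[:, 1]
    xp, yp = p2[:, 0], p2[:, 1]
    zeros, ones = np.zeros_like(x), np.ones_like(x)
    rows_x = np.stack([x, y, ones, zeros, zeros, zeros, -x * xp, -y * xp], axis=1)
    rows_y = np.stack([zeros, zeros, zeros, x, y, ones, -x * yp, -y * yp], axis=1)
    A = np.vstack([rows_x, rows_y])
    b = np.concatenate([xp, yp])
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.append(h, 1.0).reshape(3, 3)


def project(H, pts):
    """Apply H to (N, 2) points and convert back from homogeneous coordinates."""
    hom = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return hom[:, :2] / hom[:, 2:3]


def ransac_homography(p1, p2, n_iters=1000, eps=2.0, seed=0):
    """Return (H, inlier_mask) for matched (N, 2) point arrays p1 -> p2."""
    rng = np.random.default_rng(seed)
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    best = np.zeros(len(p1), dtype=bool)
    for _ in range(n_iters):
        sample = rng.choice(len(p1), size=4, replace=False)
        H = fit_homography(p1[sample], p2[sample])
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(H, p1) - p2, axis=1)
        inliers = err < eps  # inliers are matches the model maps CLOSE to their partner
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 4:
        raise ValueError("fewer than 4 inliers found")
    # Refit on every inlier of the best model for a more accurate final H
    return fit_homography(p1[best], p2[best]), best
```

Here `p1` and `p2` would be the matched coordinates that survive the ratio test, with row i of each array forming one correspondence. Note the comparison direction: an inlier is a match whose reprojection error is *below* ε.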