Project 7A: Eulerian Video Magnification

Utkarsh Singhal

Introduction

The goal of this project is to use Laplacian pyramids and bandpass filtering to enhance minute changes in videos. This was a particularly exciting project for me because Eulerian Video Magnification was why I chose computer science as a high school senior. I am very glad to have been able to implement it.

How it works

The main idea is very simple: decompose the video into different spatio-temporal frequency bands, and selectively amplify some of them. This simple idea is remarkably powerful, and can be used to magnify mono-harmonic motions. The spatial frequency bands are created using Laplacian pyramids, and the temporal frequency bands are created using the FFT. Unfortunately, one drawback of the method is its sensitivity to parameters. I spent ~5 hours just trying to tune them.
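The temporal half of this idea can be sketched in a few lines of NumPy. This is a minimal illustration, not the code used for the project: the function names `temporal_bandpass` and `magnify`, the `(time, height, width)` array layout, and the single gain `alpha` are all assumptions. In a full pipeline the same filter would be applied to each level of the Laplacian pyramid rather than to raw frames.

```python
import numpy as np

def temporal_bandpass(frames, fps, low_hz, high_hz):
    """Ideal bandpass along the time axis (axis 0) using the FFT.

    frames: array of shape (time, height, width), an assumed layout.
    """
    n = frames.shape[0]
    freqs = np.fft.rfftfreq(n, d=1.0 / fps)       # frequency of each FFT bin in Hz
    spectrum = np.fft.rfft(frames, axis=0)        # per-pixel temporal spectrum
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    spectrum[~keep] = 0                           # zero every bin outside the passband
    return np.fft.irfft(spectrum, n=n, axis=0)

def magnify(frames, fps, low_hz, high_hz, alpha):
    """Add the amplified bandpassed signal back onto the original frames."""
    return frames + alpha * temporal_bandpass(frames, fps, low_hz, high_hz)
```

For example, `magnify(frames, 30.0, 1.7, 2.7, 10.0)` would boost variations between 1.7 Hz and 2.7 Hz tenfold on a 30 fps clip while leaving everything else untouched.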

My video turned out to be a failure case for color amplification because I could not hold the camera still enough for it to work properly. Interestingly, however, it seemed to amplify motion. For the face and baby amplifications, I used parameters [25,10,0,0,0], while for my own face, I used [15,5,0,0,0].

Bells and Whistles

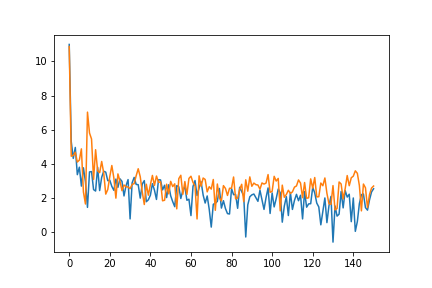

Here is a plot of the spectral powers before and after filtering for pixel (150,250) at 6 pyramid levels. The blue plot corresponds to the original power spectrum, and the orange one corresponds to the filtered spectrum. As one can see, the lower frequency amplitudes are much more amplified, which makes sense since my passband was 1.7-2.7 Hz.
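A power spectrum like the ones plotted above can be computed from a single pixel's time series roughly as follows. This is a sketch under assumptions: the helper name `power_spectrum` is hypothetical, and subtracting the mean (so that the DC term does not dominate the plot) is a choice made here, not something taken from the project code.

```python
import numpy as np

def power_spectrum(signal, fps):
    """Return (frequencies in Hz, power) for a 1-D time series sampled at fps."""
    signal = np.asarray(signal, dtype=float)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fps)
    # Remove the mean so the zero-frequency bin does not swamp the rest.
    power = np.abs(np.fft.rfft(signal - signal.mean())) ** 2
    return freqs, power
```

Calling it once on the original series and once on the magnified series for the same pixel gives the two curves to compare.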

I also implemented motion magnification for the wrist video.

Project 7B: Fake Miniatures

Tilt-Shift photography, also known as "Fake Miniatures", is a popular photography technique on image forums like Flickr. The main idea behind this technique is to make a normal picture look like an image of miniatures by selectively blurring different parts of the image by different amounts. This creates an artificial depth-of-field effect, which looks quite appealing in practice.

How it works

I create a fake depth map, and blur different areas of the image using values from the depth map; i.e., areas that are 'closer' are blurred less. My approach was to threshold the depth at 25 levels and to set the blur as a linear function of the threshold bin. This seemed to work pretty well, although it might be possible to use more sophisticated images for better results. I also increased contrast by adding an offset in HSV value.
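The binning scheme described above can be sketched as follows for a grayscale image. This is an illustration under assumptions rather than the project's implementation: the names `blur` and `fake_miniature`, the Gaussian blur, the `max_sigma` parameter, and the convention that depth lies in [0, 1] with 0 meaning closest are all hypothetical choices. The HSV contrast offset is left out.

```python
import numpy as np

def blur(image, sigma):
    """Separable Gaussian blur with edge padding; sigma <= 0 returns a copy."""
    if sigma <= 0:
        return image.copy()
    radius = max(1, int(3 * sigma + 0.5))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()
    padded = np.pad(image, radius, mode="edge")
    conv = lambda v: np.convolve(v, kernel, mode="valid")
    rows = np.apply_along_axis(conv, 1, padded)   # blur along each row
    return np.apply_along_axis(conv, 0, rows)     # then along each column

def fake_miniature(image, depth, levels=25, max_sigma=5.0):
    """Blur each pixel by an amount that grows linearly with its depth bin."""
    image = np.asarray(image, dtype=float)
    depth = np.clip(depth, 0.0, 1.0)
    bins = np.minimum((depth * levels).astype(int), levels - 1)
    out = np.empty_like(image)
    for b in range(levels):
        mask = bins == b
        if mask.any():
            sigma = max_sigma * b / (levels - 1)  # linear in the bin index
            out[mask] = blur(image, sigma)[mask]
    return out
```

Blurring the whole image once per occupied bin and then masking is wasteful, but it keeps the sketch short and avoids seams from blurring each region in isolation.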

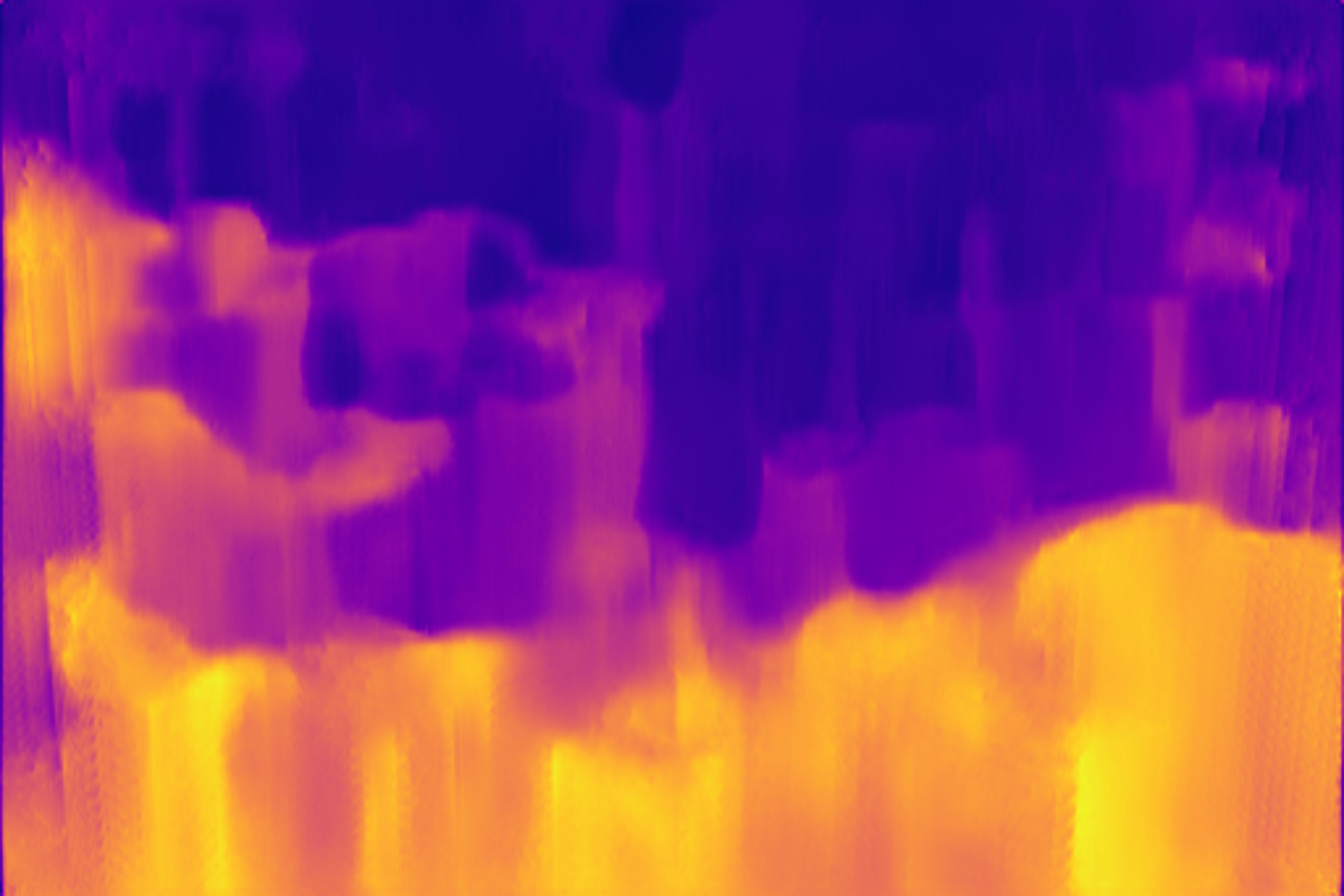

Bells and Whistles: Generating depth maps using a deep net

I used a depth-prediction network implemented in TensorFlow to try to predict the depth maps. Unfortunately, due to the high amount of depth disparity in these images, this approach does not work as well as one would hope. Maybe it can be improved by fine-tuning the network on these kinds of images.

Summary

Overall, this was a fun project that really summarized the class very well. I look forward to doing projects like this again!