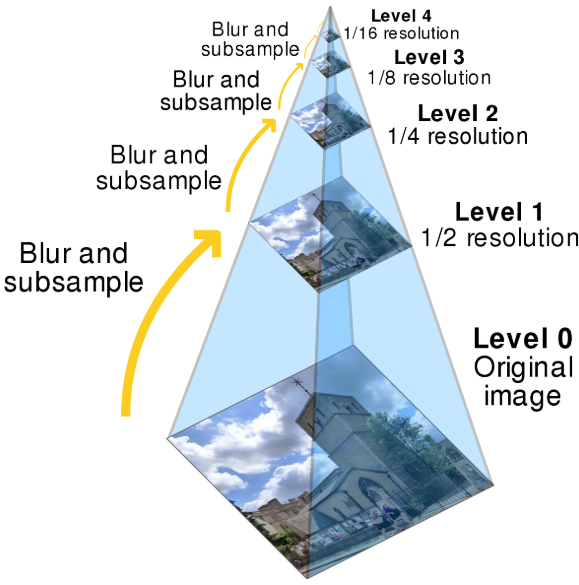

Naive Algorithm

A simple, brute-force approach to this alignment problem is to define a window of possible displacements, search exhaustively through that range, and return the "best" alignment according to some metric. I used the two metrics suggested by the project spec: SSD (sum of squared differences) and NCC (normalized cross-correlation). SSD measures the difference between two images, so we choose the displacement that minimizes it. NCC measures the similarity between two images on a scale from -1 to 1, where 1 means the images match exactly and lower values mean they are less alike, so we choose the displacement that maximizes it. With a displacement window of [-15, 15] pixels, this naive algorithm produces the following results:

Cathedral SSD:

Green [1 -1], Red [7 -1]

Cathedral NCC:

Green [1 -1], Red [7 -1]

Monastery SSD:

Green [-6 0], Red [9 1]

Monastery NCC:

Green [-6 0], Red [9 1]

Nativity SSD:

Green [3 1], Red [7 1]

Nativity NCC:

Green [3 1], Red [7 1]

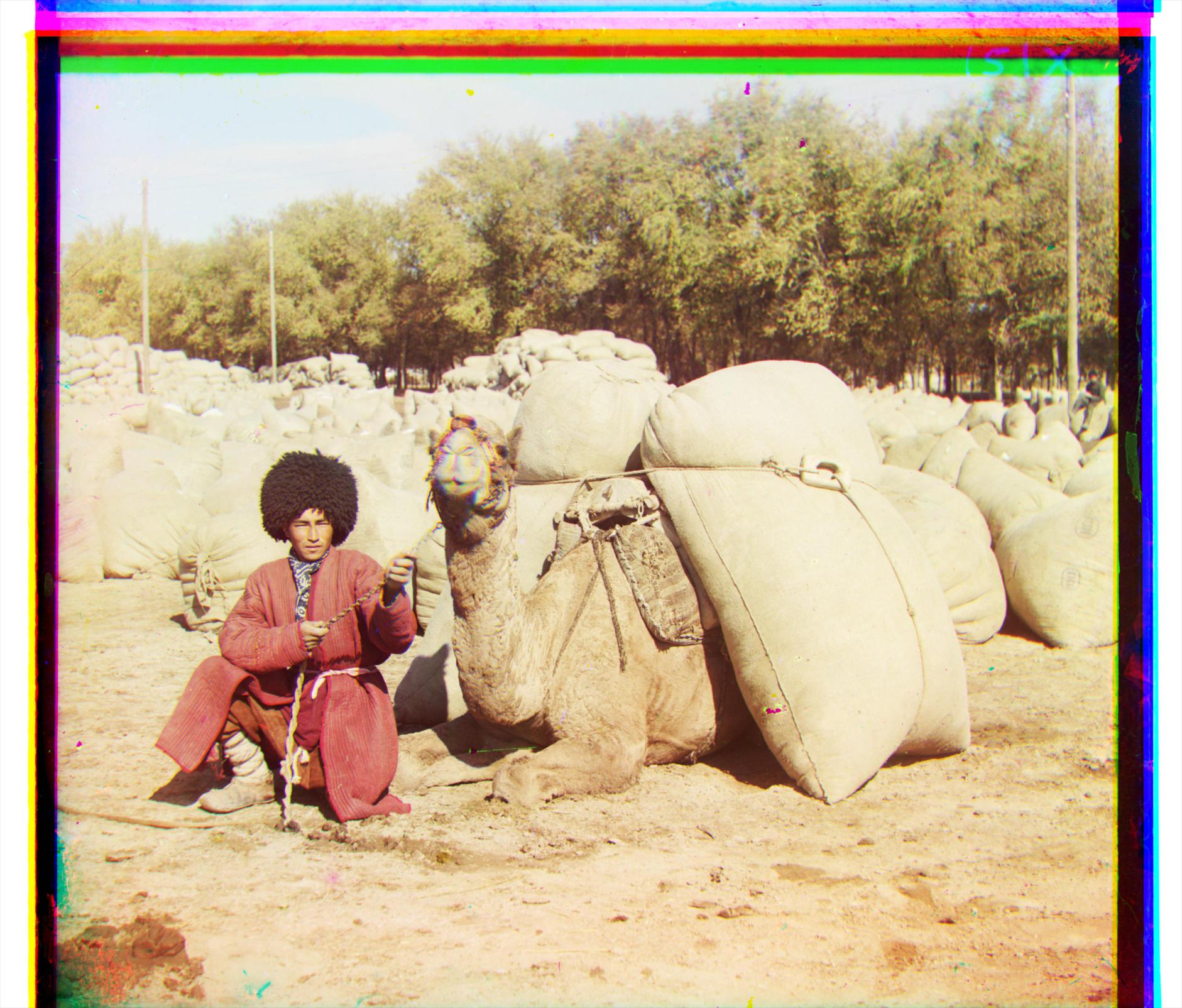

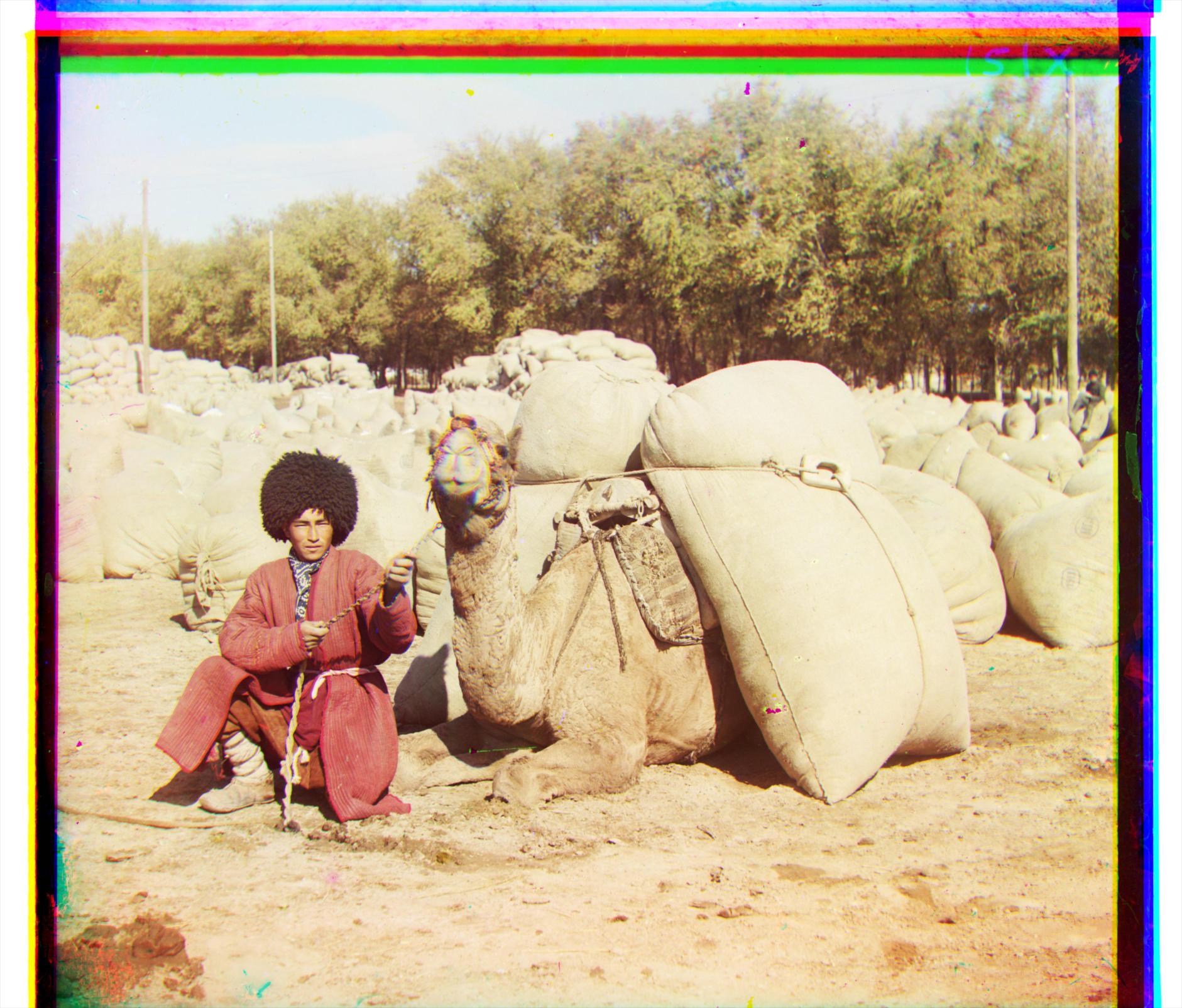

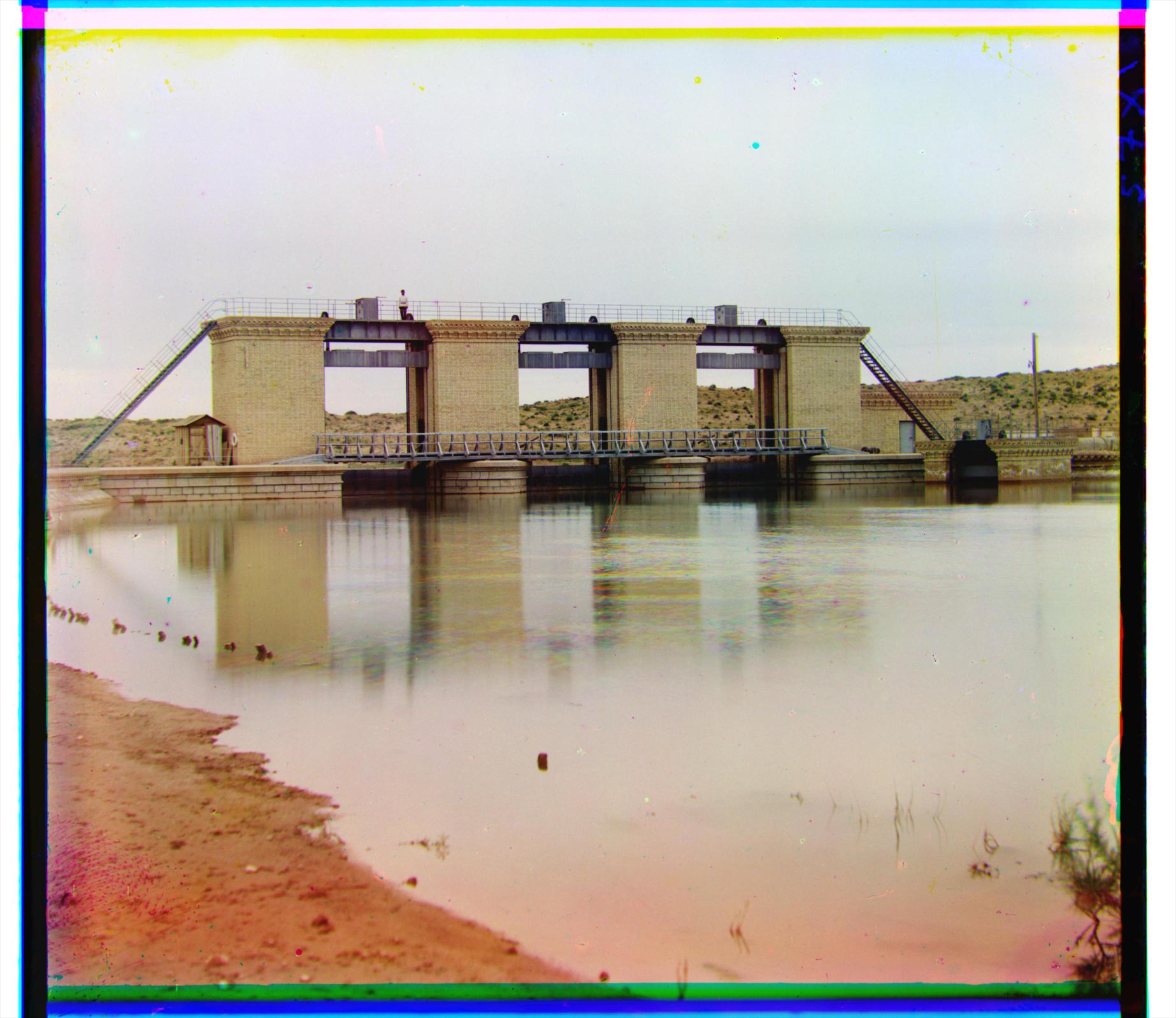

Settlers SSD:

Green [7 0], Red [14 -1]

Settlers NCC:

Green [7 0], Red [14 -1]

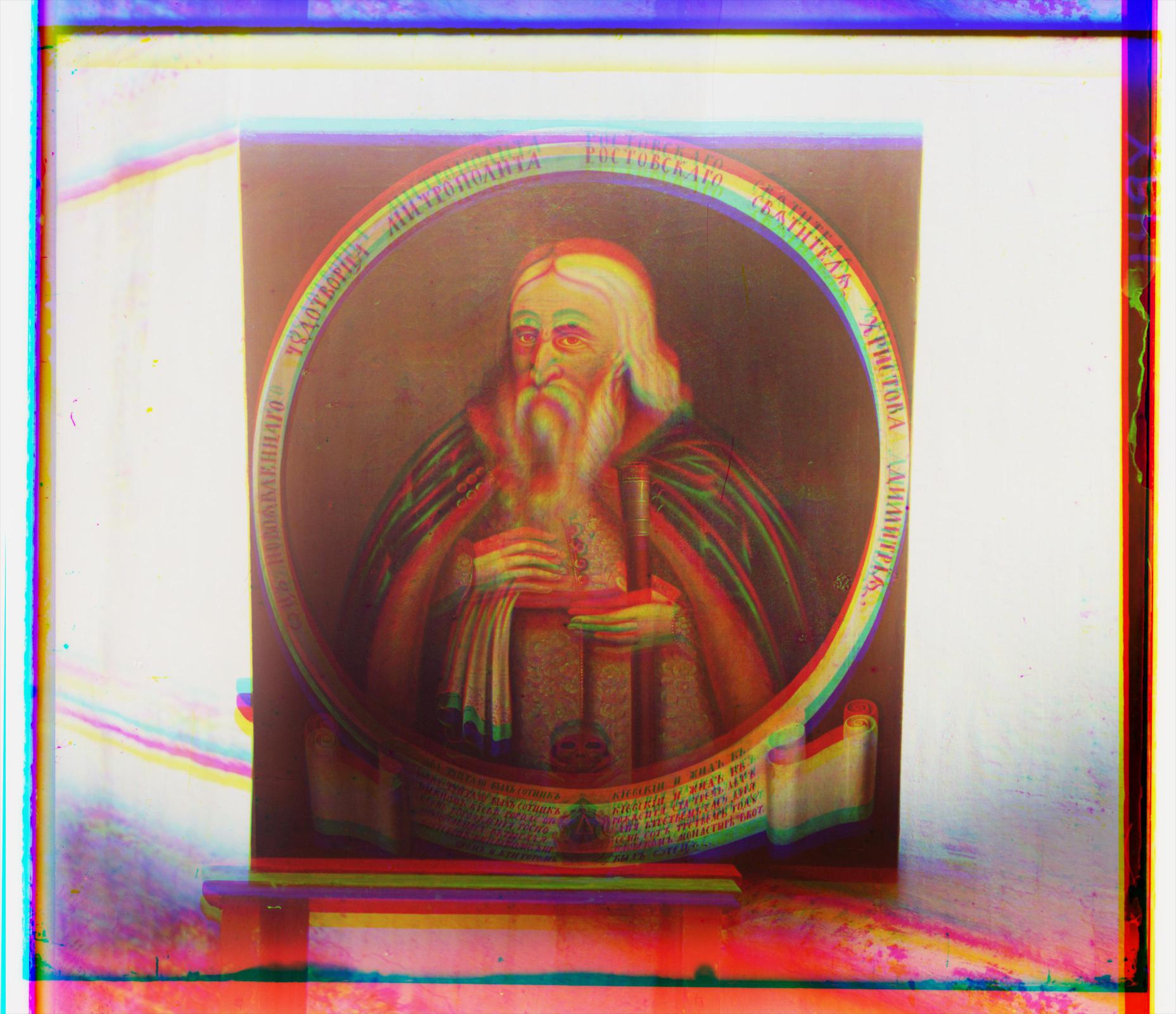
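
For reference, the exhaustive search described at the start of this section can be sketched roughly as follows. This is a minimal illustration, not the exact code used for the results above. The function names (`ssd`, `ncc`, `align`), the use of `np.roll` for shifting (which wraps pixels around the edges), the zero-mean form of NCC, and the `[row, column]` ordering of the returned displacement are all assumptions made for the example.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences: lower means more similar."""
    return float(np.sum((a - b) ** 2))

def ncc(a, b):
    """Zero-mean normalized cross-correlation in [-1, 1]: higher is better."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0

def align(channel, reference, window=15, metric="ssd"):
    """Try every shift in [-window, window] on both axes and keep the best.

    Returns the (row, column) shift to apply to `channel` so that it
    lines up with `reference`. Both inputs are 2-D float arrays.
    """
    best_shift, best_score = (0, 0), None
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            # np.roll is circular: pixels pushed off one edge reappear
            # on the opposite edge (an assumption of this sketch).
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            # Negate SSD so that "larger is better" holds for both metrics.
            if metric == "ssd":
                score = -ssd(shifted, reference)
            else:
                score = ncc(shifted, reference)
            if best_score is None or score > best_score:
                best_shift, best_score = (dy, dx), score
    return np.array(best_shift)
```

With a window of 15, this evaluates 31 × 31 = 961 candidate shifts per channel, which is manageable for small images but grows quickly as the window widens.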

Tweaking the Monastery

The results were fair for most images. However, the Monastery image was not well aligned. A notable feature of this image is that it is predominantly blue, which could affect the alignment of the red and green channels against blue. I first tried aligning the blue and red channels against the green channel instead. The alignment was equally bad, if not worse. I then tried cropping the outer 10% margin of the image and re-aligning the red and green channels to the blue channel. The result was significantly better.

Monastery NCC aligned with green:

Blue [6 0], Red [6 1]

Monastery NCC with 10% cropped:

Green [-3 2], Red [3 2]
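
The cropping step can be sketched as below. This is only an illustration: the helper name `crop_margin` is hypothetical, and it assumes that "outer 10% margin" means removing 10% of the height from the top and bottom and 10% of the width from the left and right.

```python
import numpy as np

def crop_margin(image, fraction=0.10):
    """Return the central region of `image`, dropping `fraction` of the
    height from the top and bottom and of the width from each side."""
    h, w = image.shape[:2]
    dy, dx = int(h * fraction), int(w * fraction)
    return image[dy:h - dy, dx:w - dx]
```

One way to use it is to compute the metric only on the cropped views of the two channels, so that the borders do not influence the score, and then apply the winning displacement to the full, uncropped channel.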