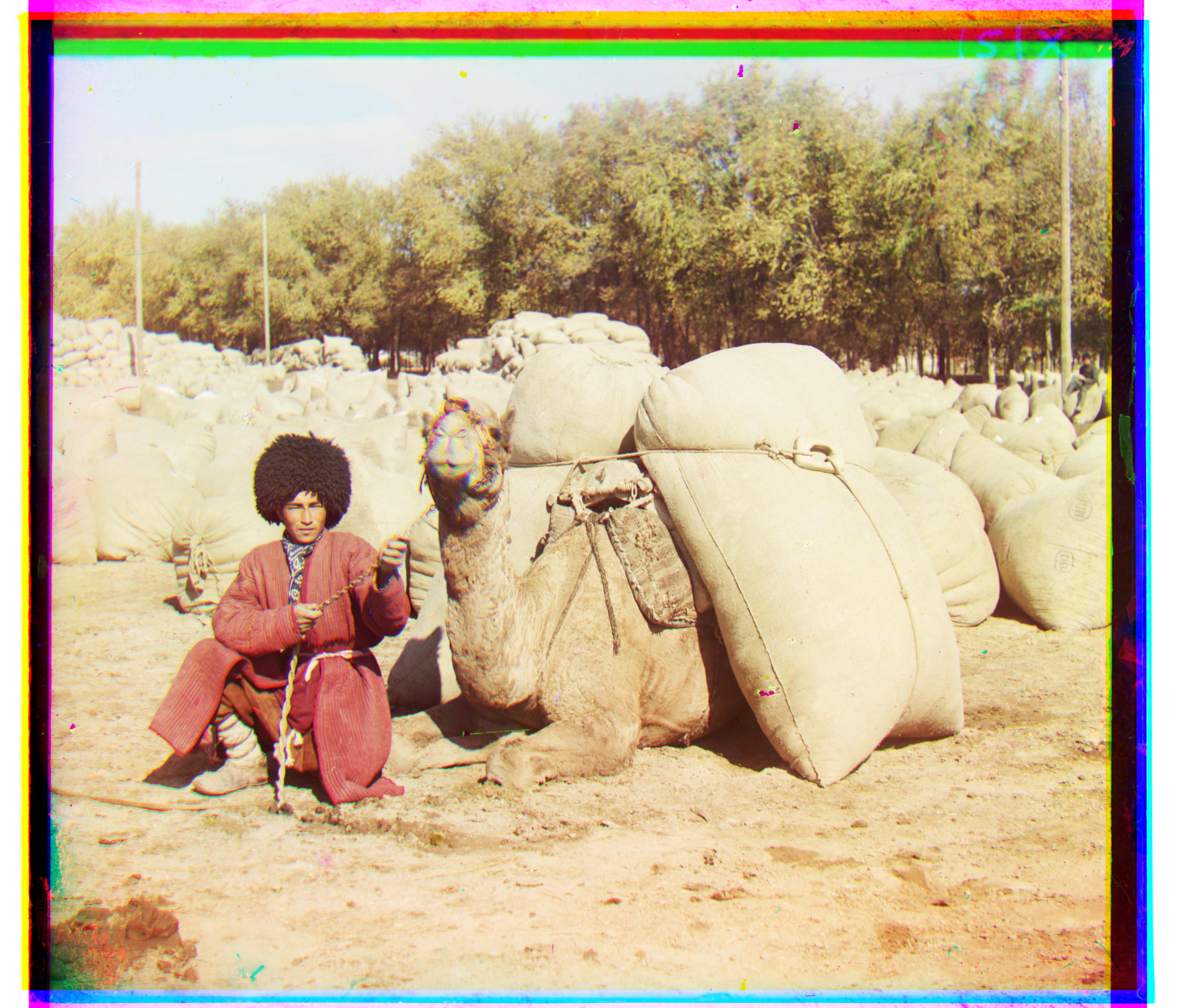

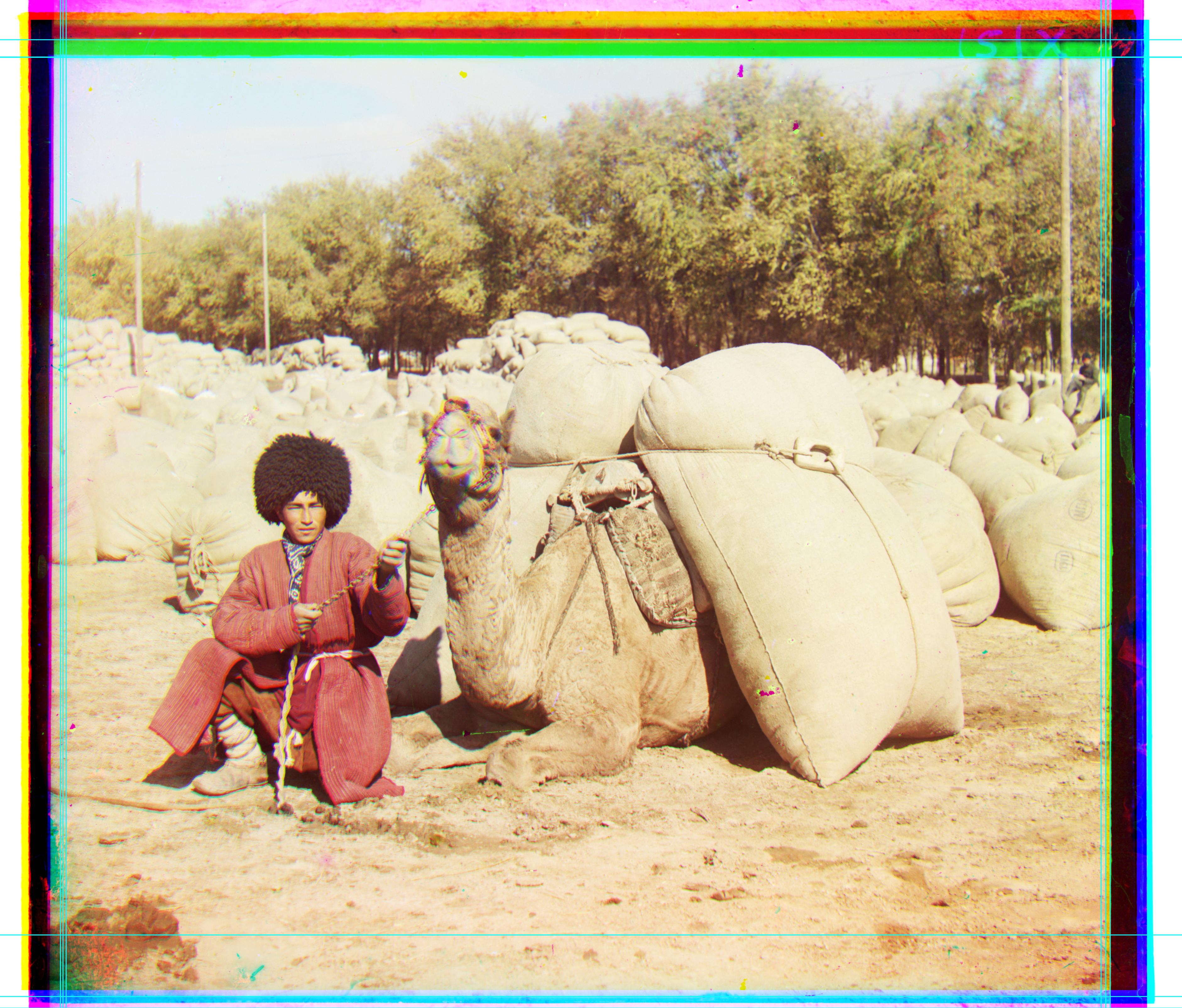

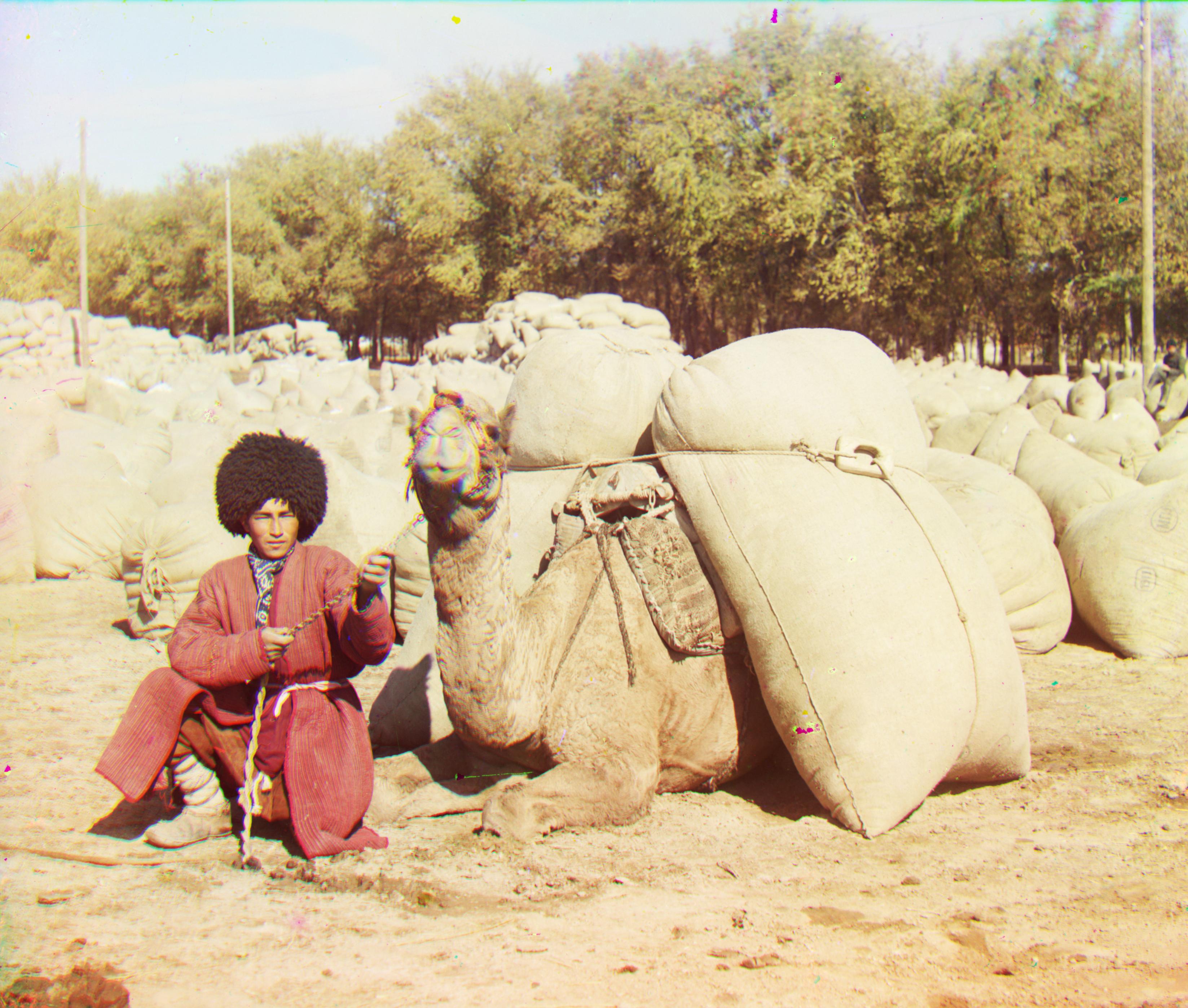

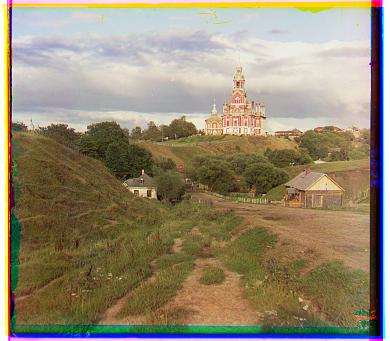

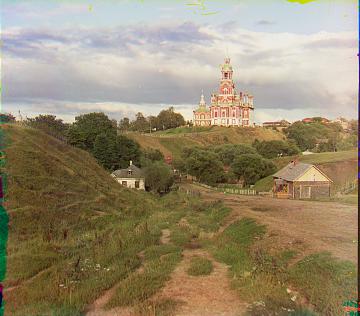

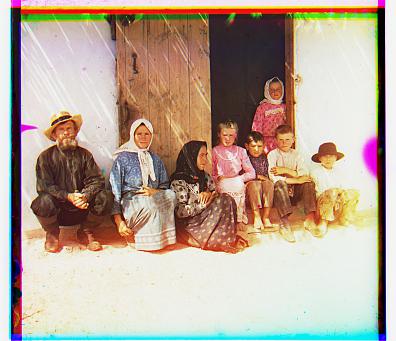

The Prokudin-Gorskii Collection is a set of images, each consisting of three photographs of the same scene taken through a red, green, or blue filter. The goal of this project is to combine these seemingly black-and-white exposures into a single three-channel color image. However, since each shot was taken independently, the color channels are displaced relative to one another, so a metric is required to align them.

The first, naive implementation shifts each color channel over a displacement window of (-15, 15) pixels relative to a reference channel and minimizes an SSD value. The Sum of Squared Differences (SSD) is calculated by taking the element-wise difference between the two channels and summing the squares of those differences. The goal is to minimize this metric so as to reduce the discrepancies between the pixel values.
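A minimal sketch of this exhaustive SSD search, assuming the channels are 2D NumPy arrays of equal size. The names `ssd` and `align_ssd` are illustrative, and `np.roll` (which wraps pixels around the border) stands in for whatever shifting method was actually used.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences between two equally sized arrays."""
    diff = a.astype(np.float64) - b.astype(np.float64)
    return np.sum(diff ** 2)

def align_ssd(channel, reference, window=15):
    """Try every (dy, dx) shift in [-window, window] and keep the lowest SSD."""
    best_shift, best_score = (0, 0), np.inf
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            # np.roll wraps around the border (an assumption of this sketch)
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = ssd(shifted, reference)
            if score < best_score:
                best_shift, best_score = (dy, dx), score
    return best_shift

# Toy check: displace a random image, then recover the shift that undoes it.
rng = np.random.default_rng(0)
reference = rng.random((64, 64))
channel = np.roll(reference, (-3, 5), axis=(0, 1))
print(align_ssd(channel, reference))  # (3, -5)
```

The search costs one full-image comparison per candidate shift, so a window of 15 means 31 x 31 = 961 comparisons per channel.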

The second naive implementation shifts each color channel over the same (-15, 15) window relative to a reference channel and maximizes an NCC value. The Normalized Cross Correlation (NCC) is calculated by normalizing each channel, dividing every pixel by the norm of the channel's values, and then summing the element-wise product of the two normalized channels. Since the values are normalized, better matches yield larger values (values closer to one, since each channel is scaled to unit length).
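As a rough illustration, here is one common way to write NCC in NumPy. It is the zero-mean variant (subtract the mean, divide by the norm, take the dot product), which is an assumption of this sketch and may differ in detail from the normalization described above. The function name `ncc` is illustrative.

```python
import numpy as np

def ncc(a, b):
    """Zero-mean normalized cross correlation of two equally sized arrays."""
    a = a.astype(np.float64).ravel()
    b = b.astype(np.float64).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    # A constant channel has zero norm; treat it as uncorrelated.
    return float(np.dot(a, b) / denom) if denom > 0 else 0.0

x = np.arange(12.0).reshape(3, 4)
same_score = ncc(x, x)               # identical channels
brighter_score = ncc(x, 2 * x + 3)   # same structure, different brightness
inverted_score = ncc(x, -x)          # inverted structure
```

With this formulation the score lies in [-1, 1], and a channel that differs from the reference only in brightness or contrast still scores close to one, which is why the search maximizes NCC rather than minimizing it.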

My initial implementations of both metrics were slow because I iterated through each row and column to calculate the value. After vectorization, the run time was much shorter. Additionally, the original versions of both metrics compared every pixel of the image, but this penalizes the score at the borders (especially with monastery.jpg). I fixed this issue by running the comparison only on the interior of the image, with 20% cut off from each edge.
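The interior crop can be sketched as a single slice. The helper name `crop_interior` and its `fraction` parameter are hypothetical; only the 20% figure comes from the text above.

```python
import numpy as np

def crop_interior(img, fraction=0.2):
    """Drop `fraction` of the height and width from each edge."""
    h, w = img.shape[:2]
    dy, dx = int(h * fraction), int(w * fraction)
    return img[dy:h - dy, dx:w - dx]

image = np.zeros((100, 50))
interior = crop_interior(image)
print(interior.shape)  # (60, 30)
```

Scoring `crop_interior(shifted)` against `crop_interior(reference)` keeps the dark plate borders out of the metric, and the slice is a view rather than a copy, so it adds almost no cost per candidate shift.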

The naive SSD and NCC searches worked well and quickly only for the JPEGs; the much larger TIF files were left running for a long time. The idea behind the image pyramid is to resize the image to be smaller and run SSD or NCC on it so as to bring the channels into rough alignment. The process then repeats with a smaller scaling factor each time, until the image is no longer scaled.

Some optimizations: initially, I kept the displacement window the same for each layer. After some thought, I decided to align only up to the layer where the image is resized to 1/2 of the original, since the remaining offset would be at most 1 pixel. An even better optimization I found later was to give the deepest layer a large window and every subsequent layer a small window, since each prior layer has already brought the image to within roughly a pixel of the correct alignment for the next layer. This yielded results much faster. Also, I initially set the blue channel as my reference and aligned red and green to it; however, as the TA pointed out, the blue channel was too saturated, which caused some of my images to be misaligned, so I switched the reference to green. This was not an issue until I changed the search/displacement window.
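The coarse-to-fine idea can be sketched as a short recursion: a wide search at the smallest layer, then a narrow refinement around the doubled estimate at each larger layer. Everything here is an assumption for illustration: the names `search`, `downsample`, and `pyramid_align`, the 2x2 block-average downsampling, the `min_size`, `coarse_radius`, and `fine_radius` defaults, and the wrap-around shifting via `np.roll`. The toy shift is a multiple of 4 so that both downsampled layers are exact shifts of each other.

```python
import numpy as np

def search(channel, reference, center, radius):
    """Exhaustive SSD search over shifts within `radius` of `center`."""
    best_shift, best_score = center, np.inf
    cy, cx = center
    for dy in range(cy - radius, cy + radius + 1):
        for dx in range(cx - radius, cx + radius + 1):
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = np.sum((shifted - reference) ** 2)
            if score < best_score:
                best_shift, best_score = (dy, dx), score
    return best_shift

def downsample(img):
    """Halve the resolution by averaging 2x2 blocks."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return (img[0::2, 0::2] + img[1::2, 0::2]
            + img[0::2, 1::2] + img[1::2, 1::2]) / 4.0

def pyramid_align(channel, reference, min_size=32,
                  coarse_radius=15, fine_radius=2):
    """Large window at the coarsest layer, small window everywhere else."""
    if min(channel.shape) <= min_size:
        return search(channel, reference, (0, 0), coarse_radius)
    dy, dx = pyramid_align(downsample(channel), downsample(reference),
                           min_size, coarse_radius, fine_radius)
    # A shift of 1 pixel at the coarser layer is 2 pixels at this layer.
    return search(channel, reference, (2 * dy, 2 * dx), fine_radius)

rng = np.random.default_rng(1)
reference = rng.random((128, 128))
channel = np.roll(reference, (-8, 12), axis=(0, 1))
print(pyramid_align(channel, reference))  # (8, -12)
```

In this sketch only the 32 x 32 layer pays for the 31 x 31 search; each larger layer evaluates just 25 candidate shifts, which is where the speedup over a fixed window per layer comes from.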

Alignment using Edge Detection: Instead of aligning the images on raw pixel values (which can vary with saturation and other color-related factors), I used skimage.feature.canny to find edges and aligned on those. This yields slightly better alignment since the edges match much more closely across channels, though the visual impact is hard to notice.
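A minimal sketch of aligning on edge maps rather than intensities, using `skimage.feature.canny` as named above. The helper names `edge_map` and `align_on_edges`, the `sigma` value, the small search window, and the SSD-on-edges scoring are assumptions of this example, not necessarily the settings used for the results here.

```python
import numpy as np
from skimage.feature import canny

def edge_map(channel, sigma=2.0):
    """Binary Canny edge map, returned as floats so it can be scored."""
    return canny(channel.astype(np.float64), sigma=sigma).astype(np.float64)

def align_on_edges(channel, reference, window=6, sigma=2.0):
    """Exhaustive search that compares edge maps instead of raw pixels."""
    ch_edges = edge_map(channel, sigma)
    ref_edges = edge_map(reference, sigma)
    best_shift, best_score = (0, 0), np.inf
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            shifted = np.roll(ch_edges, (dy, dx), axis=(0, 1))
            score = np.sum((shifted - ref_edges) ** 2)
            if score < best_score:
                best_shift, best_score = (dy, dx), score
    return best_shift

# Toy check: a bright square, and a dimmer, displaced copy of it.
reference = np.zeros((64, 64))
reference[20:40, 20:40] = 1.0
channel = 0.6 * np.roll(reference, (3, -4), axis=(0, 1))
shift = align_on_edges(channel, reference)
```

The edge maps are computed once per channel and only the binary maps are shifted, so the edge detection adds a fixed cost rather than one per candidate shift.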

Auto Cropping: My process for cropping borders was to first blur the image with a Gaussian, then run Sobel edge detection in the y and x directions (separately), and finally run a Hough line transform to find the best lines to crop along. Since this yields a large number of lines, I first keep only those that lie within some distance of the edge (0.075*len in my case). I then partition the lines into (left or right) or (top or bottom) borders and, for each border, take the line closest to the center of the image. Since there are three color channels, I run this process for every channel and then take the innermost lines returned so as to encompass all borders. The Gaussian blur, Sobel, and Hough line parameters still need tuning, since there are times when the image is cut off along a straight edge that is not actually a border (say, a door). I think it would help to change the parameter for how long a Hough line should be so that it must span a majority of the image.
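The line-selection step can be sketched on its own, assuming the Hough transform has already been reduced to a list of positions (x for near-vertical lines, y for near-horizontal ones) along one axis. The helper names `pick_borders` and `combine_channels` and the sample numbers are hypothetical; only the 0.075 margin comes from the description above.

```python
def pick_borders(positions, length, margin=0.075):
    """Keep lines near either end of the axis, then take the innermost ones."""
    limit = margin * length
    low = [p for p in positions if p <= limit]            # left or top side
    high = [p for p in positions if p >= length - limit]  # right or bottom side
    start = max(low) if low else 0
    end = min(high) if high else length
    return start, end

def combine_channels(borders):
    """Take the innermost border across the three color channels."""
    return max(s for s, _ in borders), min(e for _, e in borders)

# Hypothetical line positions along a 1000-pixel axis.
one_channel = pick_borders([5, 30, 70, 500, 960, 990], 1000)
crop_range = combine_channels([one_channel, (40, 950), (60, 970)])
print(one_channel, crop_range)  # (70, 960) (70, 950)
```

The line at 500 is discarded by the margin filter, which is the safeguard against cropping along a straight edge in the middle of the scene; lines from features such as a door that fall inside the margin would still pass, which matches the tuning problem noted above.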

What I found interesting was that after I implemented my optimizations, the shift values decreased compared to what I had output in previous iterations. Previously, some shifts were greater than 100, but now most of my shift values are below 100. This is most likely because the reference channel I compare against changed from blue to green. Whereas previously both shifts toward blue were quite large, they are now smaller, since green is a less extreme channel to shift toward: red shifts less and blue shifts in the negative direction.