Project Overview

Jonathan Tan, cs194-26-abl

The goal of this project was to colorize images from the Prokudin-Gorskii photo collection by taking the original plate negatives, each shot in three color channels, and combining the channels into a single color picture.

To do so, we take a negative, divide it into thirds, and stack the thirds on top of each other in the appropriate color channels to produce a color image. Stacking alone leaves the channels mismatched, so we also align the plates programmatically. I used the sum of squared differences (SSD) as the scoring metric: it sums the squared pixel-wise differences between two matrices, so the more the inputs differ, the larger the score. Testing candidate displacements of a plate against a base image and keeping the one with the lowest SSD gives the best alignment. In this project, I aligned the g and r plates to the top plate, b (the plates are ordered b, g, r from top to bottom).
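The split, score, and search steps can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the function names, the `window` size of 15, the `(dy, dx)` ordering of shifts, and the use of `np.roll` (which wraps pixels around the border) are all assumptions.

```python
import numpy as np

def split_plate(plate):
    """Split a stacked plate (b on top, then g, then r) into three equal-height channels."""
    h = plate.shape[0] // 3
    return plate[:h], plate[h:2 * h], plate[2 * h:3 * h]

def ssd(a, b):
    """Sum of squared differences: larger means the inputs differ more."""
    return float(np.sum((a - b) ** 2))

def align(channel, base, window=15):
    """Try every shift in [-window, window] on both axes; return the (dy, dx) with the lowest SSD."""
    best_score, best_shift = np.inf, (0, 0)
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            # np.roll shifts circularly, so pixels pushed off one edge reappear on the other.
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = ssd(shifted, base)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift

def colorize(plate, window=15):
    """Align g and r to b, then stack the channels in RGB order."""
    b, g, r = split_plate(plate.astype(np.float64))
    g_shift = align(g, b, window)
    r_shift = align(r, b, window)
    g_aligned = np.roll(g, g_shift, axis=(0, 1))
    r_aligned = np.roll(r, r_shift, axis=(0, 1))
    return np.dstack([r_aligned, g_aligned, b]), g_shift, r_shift
```

Calling `colorize(plate)` on a grayscale plate array returns the stacked image together with the two shifts, which is the kind of offset pair reported in the results section.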

However, a good number of images wouldn't align properly because of noise along the edges and the black borders around the plates. Cropping those out as a preprocessing step significantly improved colorization. Images processed this way have "cropped" in their filename in the results section. Consequently, the offsets reported for them are only meaningful relative to the cropped TIFFs, which are available upon request.
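The write-up does not say how the borders were cropped, so the following is only one simple way to do it: trim a fixed fraction from every side of a channel before scoring. The helper name `crop_border` and the default `fraction` of 0.1 are assumptions for illustration.

```python
import numpy as np

def crop_border(channel, fraction=0.1):
    """Drop `fraction` of the height and width from each side of the image."""
    h, w = channel.shape[:2]
    dy, dx = int(h * fraction), int(w * fraction)
    return channel[dy:h - dy, dx:w - dx]
```

Note that any offsets computed after a crop like this are relative to the cropped arrays, not to the original plate.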

In particular, emir.tif had a hard time aligning, and still wouldn't align even after cropping. I assume this is because brightness values differ across the channels, so matching on pure SSD of raw pixel values fails. Feature-based matching or edge detection would probably handle this negative better.
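As a rough idea of what an edge-based variant might look like (this was not implemented in the project), one could compute a gradient-magnitude map for each channel and run the same SSD search on those maps instead of on raw intensities. Gradients ignore a constant brightness offset between channels, though this alone would not fix every brightness mismatch. The names `edge_map` and `align_on_edges` and the `window` size are hypothetical.

```python
import numpy as np

def edge_map(img):
    """Gradient magnitude from finite differences (a very basic edge detector)."""
    gy, gx = np.gradient(img.astype(np.float64))
    return np.hypot(gx, gy)

def align_on_edges(channel, base, window=15):
    """SSD search over shifts, scored on edge maps rather than raw pixel values."""
    channel_edges, base_edges = edge_map(channel), edge_map(base)
    best_score, best_shift = np.inf, (0, 0)
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            shifted = np.roll(channel_edges, (dy, dx), axis=(0, 1))
            score = float(np.sum((shifted - base_edges) ** 2))
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```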

I also implemented an image pyramid to handle higher-resolution images. The naive approach searches exhaustively over a relatively large window of displacements, which is only practical for the smaller images, e.g. the ~400x1000 plates. For the larger ~3300x9300 plates, we needed an image pyramid so that colorization would run fast enough.

Basically: scale the input image down to something small and manageable, align it, and then repeat the process on a larger-scale version of the image, using the estimate from the previous scale as the starting point. This lets you search only a small window of displacements at each scale, which matters because each candidate displacement becomes more costly to score as resolution increases. My implementation starts with an image at most 64 pixels wide and doubles the size at each step. One more detail: at the lowest scale, it's fine to test every possible displacement before switching to a smaller window-based search at the larger levels.
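A coarse-to-fine search along these lines can be sketched recursively. This is an illustrative sketch under several assumptions, not the project's code: downscaling is done by averaging 2x2 blocks, shifts are circular via `np.roll`, the refinement radius of 2 pixels is a guess, and all function names are hypothetical. The 64-pixel stopping width comes from the description above.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences between two equally sized arrays."""
    return float(np.sum((a - b) ** 2))

def search(channel, base, center, radius):
    """Best (dy, dx) within `radius` (per axis) of `center`, scored by SSD."""
    best_score, best_shift = np.inf, center
    for dy in range(center[0] - radius[0], center[0] + radius[0] + 1):
        for dx in range(center[1] - radius[1], center[1] + radius[1] + 1):
            score = ssd(np.roll(channel, (dy, dx), axis=(0, 1)), base)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift

def downscale(img):
    """Halve the resolution by averaging 2x2 blocks (a stand-in for a proper resize)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def pyramid_align(channel, base, min_width=64, refine=2):
    """Estimate the (dy, dx) shift of `channel` onto `base`, coarse to fine."""
    if base.shape[1] <= min_width:
        # Coarsest level: small enough to try every circular displacement.
        full = (base.shape[0] // 2, base.shape[1] // 2)
        return search(channel, base, (0, 0), full)
    # Solve the half-resolution problem first, then double that estimate
    # and refine it within a small window at the current resolution.
    dy, dx = pyramid_align(downscale(channel), downscale(base), min_width, refine)
    return search(channel, base, (2 * dy, 2 * dx), (refine, refine))
```

Each level above the coarsest one scores only `(2 * refine + 1) ** 2` candidate shifts, so the cost per level grows with the pixel count rather than with the size of the search window.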

Finally, I colorized some extra images from the collection. One features a fence and one features a monument.

All images are saved as jpgs.

Small Image Results

cathedral:

| unaligned | aligned: g (-1, 1), r (-1, 7) |
|---|---|

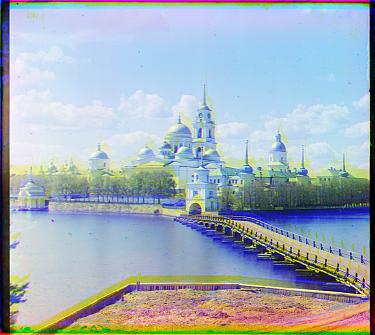

monastery, pre-cropped:

| unaligned | aligned: g (2, -9), r (2, -9) |
|---|---|

tobolsk:

| unaligned | aligned: g (2, 3), r (3, 6) |
|---|---|

Large Image Results

emir.tif aligned: g (7, -3), r (17, 107)

harvesters_cropped.tif aligned: g (15, -54), r (13, -104)

icon.tif aligned: g (16, 42), r (22, 89)


lady.tif aligned: g (-6, 57), r (-17, 123)

melons.tif aligned: g (4, 83), r (7, 176)

onion_church.tif aligned: g (22, 52), r (35, 108)

self_portrait_cropped.tif aligned: g (-2, -29), r (-3, -10)

three_generations.tif aligned: g (5, 52), r (7, 108)

train_cropped.tif aligned: g (0, -68), r (31, -128)

village_cropped.tif aligned: g (12, -54), r (22, -102)

workshop_cropped.tif aligned: g (-1, -56), r (-13, -117)

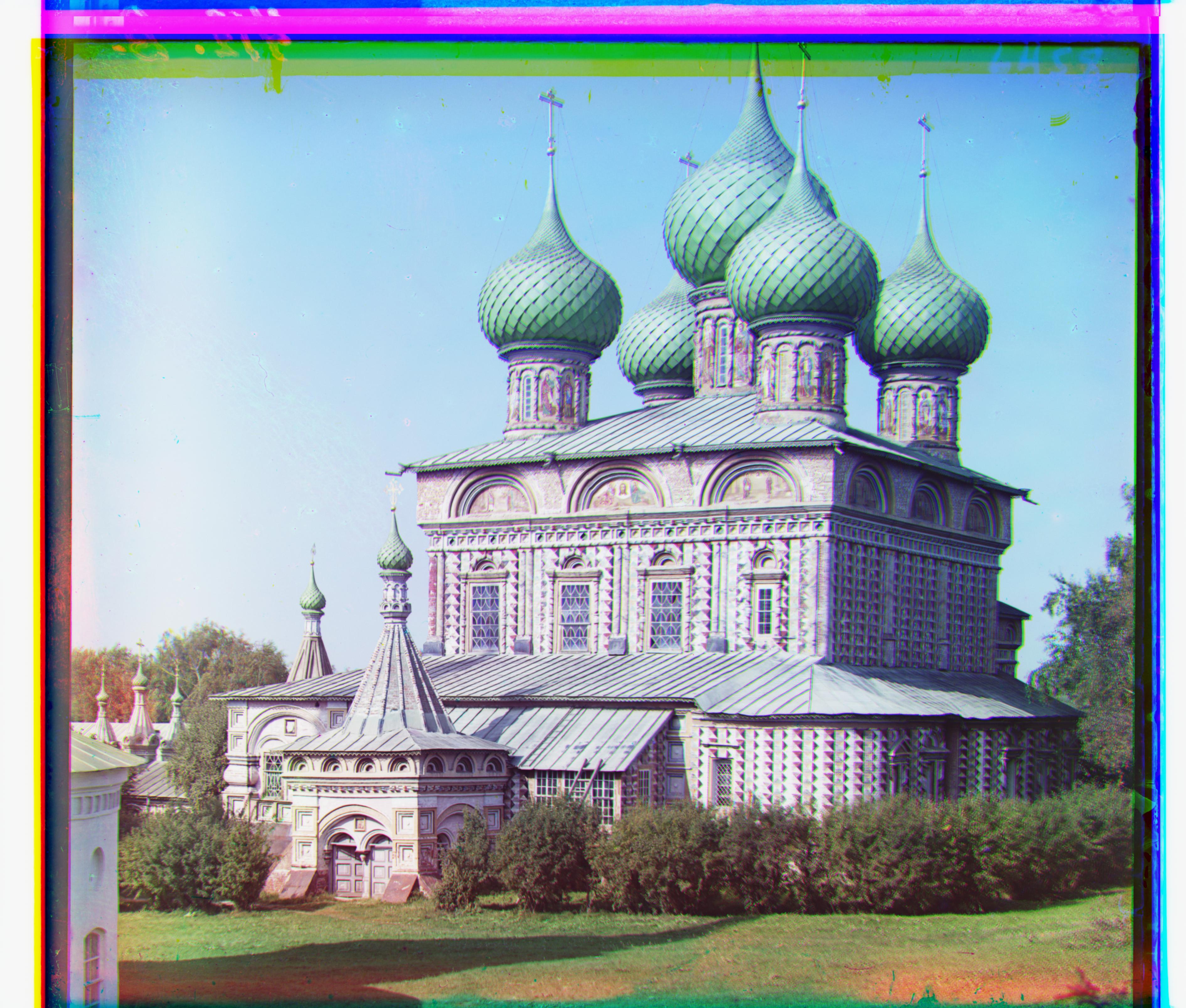

Additional Prokudin-Gorskii colorizations

fence_cropped.tif aligned: g (-1, -66), r (-9, -100)

monument_cropped.tif aligned: g (-16, -97), r (-29, -112)