Overview

"The goal of this assignment is to take the digitized Prokudin-Gorskii glass plate images and, using image processing techniques, automatically produce a color image with as few visual artifacts as possible." To accomplish this, I searched over a window of candidate displacements of one color channel (red or green) and scored each candidate against a fixed reference channel (blue) using NCC (normalized cross-correlation). Because an exhaustive search does not scale to the large scans, I implemented an image pyramid with dynamic search windows: the number of pyramid levels grows only logarithmically with image size, and each level searches a small window. At every recursive level of the pyramid, the picture is rescaled down by a scaling_factor, the search runs on the rescaled image, and the displacement vector is built up from the coarsest level to the finest. Finally, I cropped 5% from both axes to remove unwanted pixels that rolled over the borders during displacement.
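
For reference, here is a minimal sketch of NCC in Python with NumPy. It assumes the mean-centred definition (subtract each channel's mean, then take the cosine of the angle between the two flattened images); some implementations skip the mean subtraction, and this writeup does not say which variant was used. The function name `ncc` is hypothetical.

```python
import numpy as np


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Mean-centred normalized cross-correlation of two same-shape arrays.

    Returns a value in [-1, 1]; 1 means `b` is a positive affine copy of `a`
    (the same pattern up to brightness and contrast).
    """
    # astype makes copies, so the in-place subtraction below leaves the inputs untouched.
    a = a.astype(np.float64).ravel()
    b = b.astype(np.float64).ravel()
    a -= a.mean()
    b -= b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    # A flat (constant) patch has zero norm; report "no correlation" instead of dividing by zero.
    if denom == 0.0:
        return 0.0
    return float(np.dot(a, b) / denom)


rng = np.random.default_rng(0)
x = rng.random((8, 8))
print(round(ncc(x, 2 * x + 3), 6))  # 1.0
print(round(ncc(x, -x), 6))         # -1.0
```

Because the score ignores overall brightness and contrast, it compares the structure of two channels even when one plate was exposed more strongly than another.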

Approach

Naive Approach

I first implemented the naive approach: an exhaustive search over a window of [-15, 15] pixels in each direction, scoring every candidate displacement with NCC. Pixels rolling over from the border edges amplified noise and error, so before calculating the NCC, I cropped the image by 10% on each axis. I kept track of the displacement vector with the best score, shifted each channel by its best displacement, and then stacked the Blue, Green, and Red channel images, respectively, to obtain the colorized image. The cost of this search grows with both the number of pixels and the number of candidate displacements, and larger scans need larger windows, so it was too slow for the high-resolution images; I therefore moved to an image pyramid.
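
The naive search can be sketched as follows, assuming `np.roll` for shifting and reading "cropped by 10% on each axis" as trimming 10% from every border; the helper names (`interior`, `align_exhaustive`, `colorize`) are hypothetical, and the final 5% crop comes from the overview. This is an illustration, not the original code.

```python
import numpy as np


def ncc(a, b):
    """Mean-centred normalized cross-correlation; 0.0 for a flat input."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom else 0.0


def interior(img, frac):
    """Trim `frac` of the height and width from every border (works on 2-D or H x W x C)."""
    dy, dx = int(img.shape[0] * frac), int(img.shape[1] * frac)
    return img[dy:img.shape[0] - dy, dx:img.shape[1] - dx]


def align_exhaustive(channel, reference, radius=15, frac=0.10):
    """Return the (row, col) roll of `channel` that best matches `reference`."""
    ref = interior(reference, frac)
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # np.roll wraps pixels around, which is why both images are trimmed before scoring.
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = ncc(interior(shifted, frac), ref)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def colorize(b, g, r, radius=15, final_crop=0.05):
    """Align G and R to B, stack the channels, and trim the wrapped borders."""
    g_aligned = np.roll(g, align_exhaustive(g, b, radius), axis=(0, 1))
    r_aligned = np.roll(r, align_exhaustive(r, b, radius), axis=(0, 1))
    # (R, G, B) order suits matplotlib; OpenCV expects (B, G, R) instead.
    rgb = np.dstack([r_aligned, g_aligned, b])
    return interior(rgb, final_crop)
```

The double loop evaluates (2 · 15 + 1)² = 961 candidate shifts per channel, each over the whole image, which is why this version is only practical for the small scans.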

Pyramid Approach

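Using recursion, I rescaled the image by factors of two and built up the displacement vector with dynamic windows. The search starts at the coarsest level, with the image rescaled down by a factor of n, then n / 2, then n / 4, and so on until the scaling factor n is 1. At each recursive level, the displacement is the sum of the displacement found at the current level and twice the displacement returned by the next, coarser level:

$$\mathbf{v}(I, J) = \Delta(I, J) + 2\,\mathbf{v}\left(I_{\downarrow 2},\, J_{\downarrow 2}\right)$$

Here $I$ is the fixed blue channel, $J$ is the channel being aligned, $I_{\downarrow 2}$ and $J_{\downarrow 2}$ are those images downscaled by two, and $\Delta(I, J)$ is the best shift from the local window search, performed after $J$ has been moved by the doubled coarse estimate. The recursion bottoms out (the base case) at the most downscaled image, where the initial wide search is run. The coefficient of two converts the coarser level's displacement into pixels at the current resolution: a one-pixel shift on an image downscaled by two is a two-pixel shift at the current level, just as a one-pixel shift on an image downscaled by n is n pixels at full resolution. Furthermore, we do not search over a fixed window of [-15, 15]. We assume the displacement found at the coarsest level is already close to correct, so the adjustments at the finer levels are marginal. Thus, searching over a window of [-20, 20] at the most downscaled image (I used a scaling_factor of 16) followed by windows of [-2, 2] at each finer level was adequate to align most, if not all, images.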
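
One way to structure this recursion is sketched below. Assumptions: downscaling by two is done by averaging 2x2 blocks (a library call such as `skimage.transform.rescale` would also work), `scale` is the remaining downscale factor and must be a power of two, and the [-2, 2] window is used at every level finer than the coarsest. The helper names are hypothetical.

```python
import numpy as np


def ncc(a, b):
    """Mean-centred normalized cross-correlation; 0.0 for a flat input."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom else 0.0


def interior(img, frac):
    """Trim `frac` of the height and width from every border."""
    dy, dx = int(img.shape[0] * frac), int(img.shape[1] * frac)
    return img[dy:img.shape[0] - dy, dx:img.shape[1] - dx]


def downscale2(img):
    """Halve each axis by averaging 2x2 blocks (an odd trailing row/column is dropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def best_shift_near(channel, reference, center, radius, frac):
    """Search shifts within `radius` of `center`, scoring each with NCC."""
    ref = interior(reference, frac)
    best_score, best = -np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = ncc(interior(shifted, frac), ref)
            if score > best_score:
                best_score, best = score, (dy, dx)
    return best


def align_pyramid(channel, reference, scale=16, coarse_radius=20, fine_radius=2, frac=0.10):
    """Coarse-to-fine alignment; `scale` is how many more times (as a power of 2) to downscale."""
    if scale == 1:
        # Base case: the most downscaled image, so run the wide search around (0, 0).
        return best_shift_near(channel, reference, (0, 0), coarse_radius, frac)
    coarse = align_pyramid(downscale2(channel), downscale2(reference),
                           scale // 2, coarse_radius, fine_radius, frac)
    # v = 2 * v(coarser) + delta: double the coarse estimate, then refine it in a small window.
    guess = (2 * coarse[0], 2 * coarse[1])
    return best_shift_near(channel, reference, guess, fine_radius, frac)
```

Under these assumptions the reachable displacement is 20 · 16 + 2 · (8 + 4 + 2 + 1) = 350 pixels in each direction, while each finer level evaluates only 25 candidates instead of hundreds.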

[EXTRA CREDIT]: Canny Edge Detection

However, Emir was the one anomaly that aligned poorly with this approach. Because of heavy blue saturation, the blue channel's brightness did not match the other channels well, making it a poor reference for NCC. To work around these color differences, I used Canny edge detection to preprocess the images before running the alignment. Even this approach has flaws: if an image has densely packed edges and the sigma parameter is poorly chosen, the final picture can be blurry because of unintended noise from the edge detector. I hand-picked the sigma that worked best for this set of images; with more time, choosing sigma adaptively for each image would be the better approach.
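
The idea of aligning edges instead of raw intensities can be sketched with NumPy alone. Note that this is a simplified stand-in, not Canny: it only blurs with a Gaussian of width `sigma` and takes the gradient magnitude, omitting Canny's non-maximum suppression and hysteresis thresholding (`skimage.feature.canny` provides the full detector with a `sigma` parameter). The names `gaussian_kernel`, `blur`, and `edge_map` are hypothetical.

```python
import numpy as np


def gaussian_kernel(sigma):
    """1-D Gaussian weights truncated at 3 sigma and normalized to sum to 1."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def blur(img, sigma):
    """Separable Gaussian blur: convolve every row, then every column (zero padding at the borders)."""
    k = gaussian_kernel(sigma)
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="same"), 1, img)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="same"), 0, rows)


def edge_map(img, sigma=1.0):
    """Gradient magnitude of the blurred image: a simplified, Canny-like edge response."""
    gy, gx = np.gradient(blur(img.astype(np.float64), sigma))
    return np.hypot(gx, gy)
```

The alignment search would then run on `edge_map(r)` and `edge_map(b)` rather than on the raw channels, and the shift it finds is applied to the original red channel. A larger `sigma` suppresses more fine texture, which is the same trade-off described above for choosing Canny's sigma.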

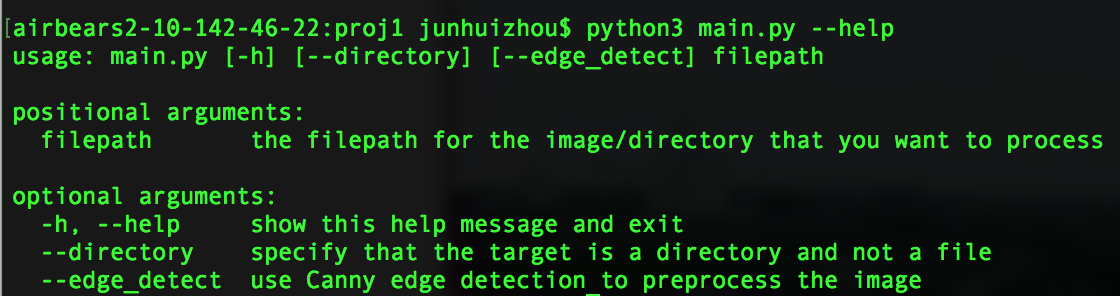

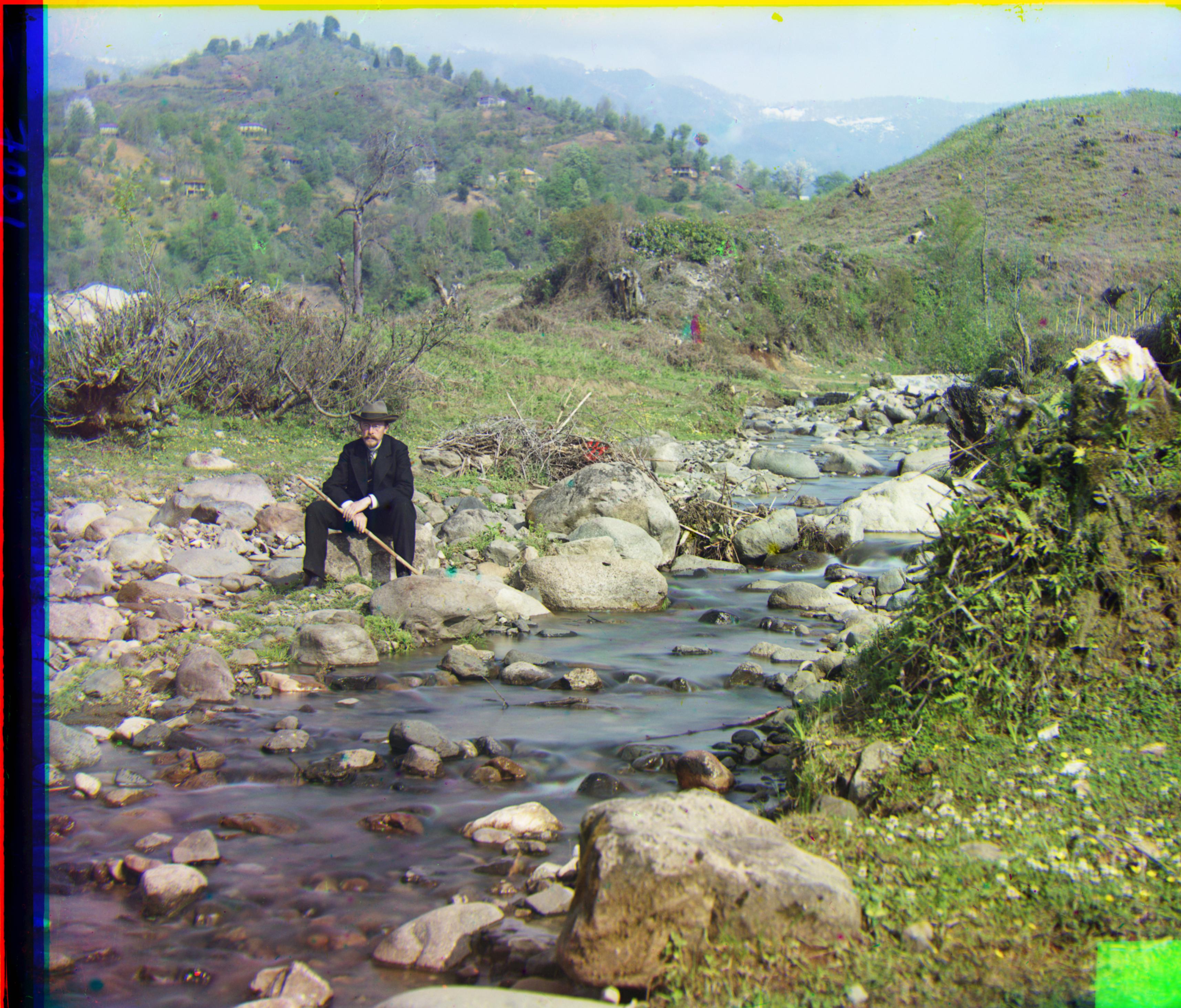

Results

| Image | Red Displacement Vector (x, y) | Green Displacement Vector (x, y) |
|---|---|---|
| | (15, -1) | (7, 0) |
| | (3, 2) | (-3, 2) |
| | (12, 3) | (5, 2) |
| | (7, 0) | (3, 1) |
| | (113, -190) | (49, 24) |
| | (107, 40) | (49, 23) |
| | (123, 15) | (60, 13) |
| | (90, 22) | (38, 16) |
| | (120, 13) | (57, 9) |
| | (175, 37) | (77, 29) |
| | (111, 8) | (55, 12) |
| | (87, 32) | (43, 6) |
| | (117, 29) | (57, 22) |
| | (138, 22) | (67, 13) |

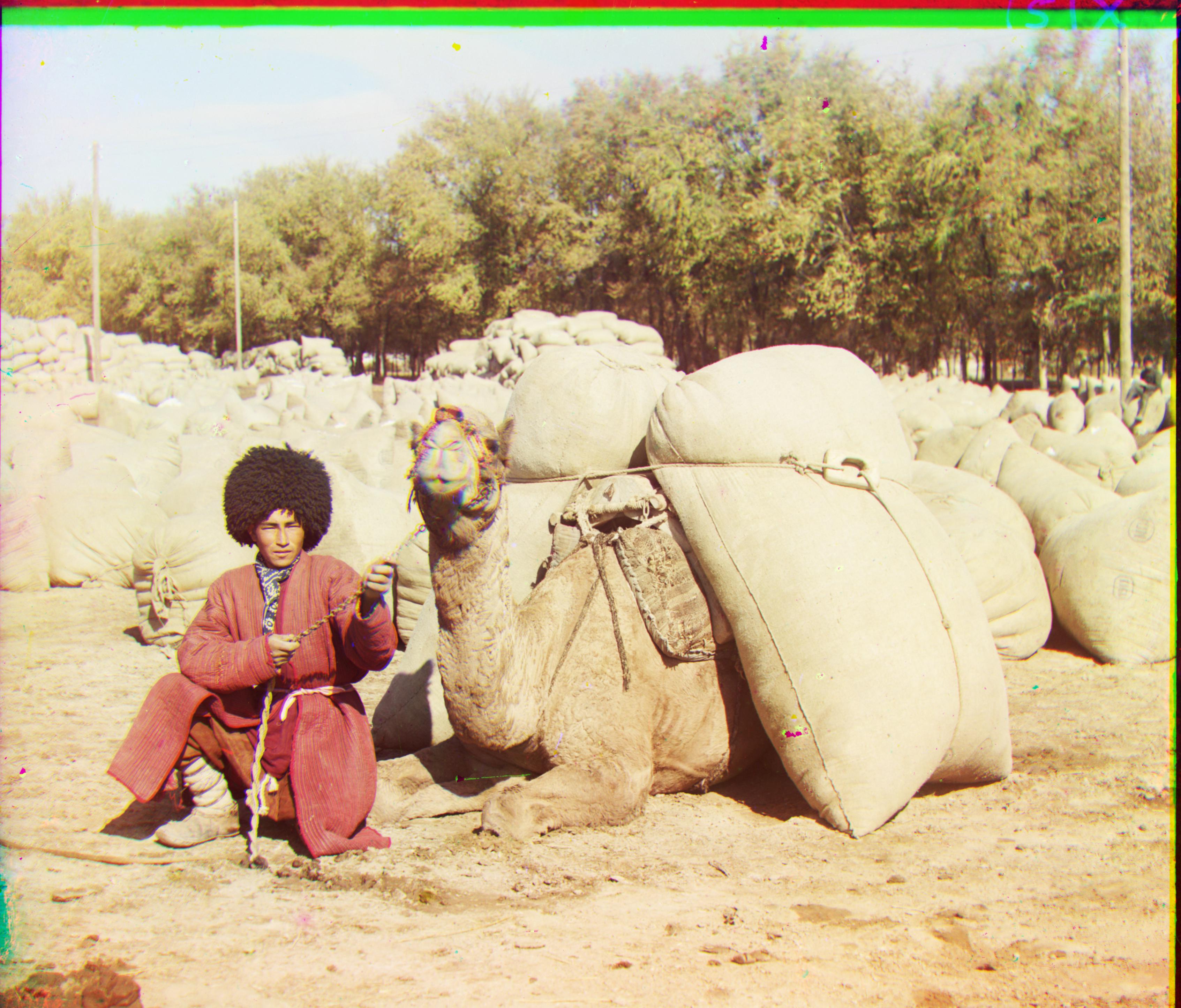

How to Execute Code