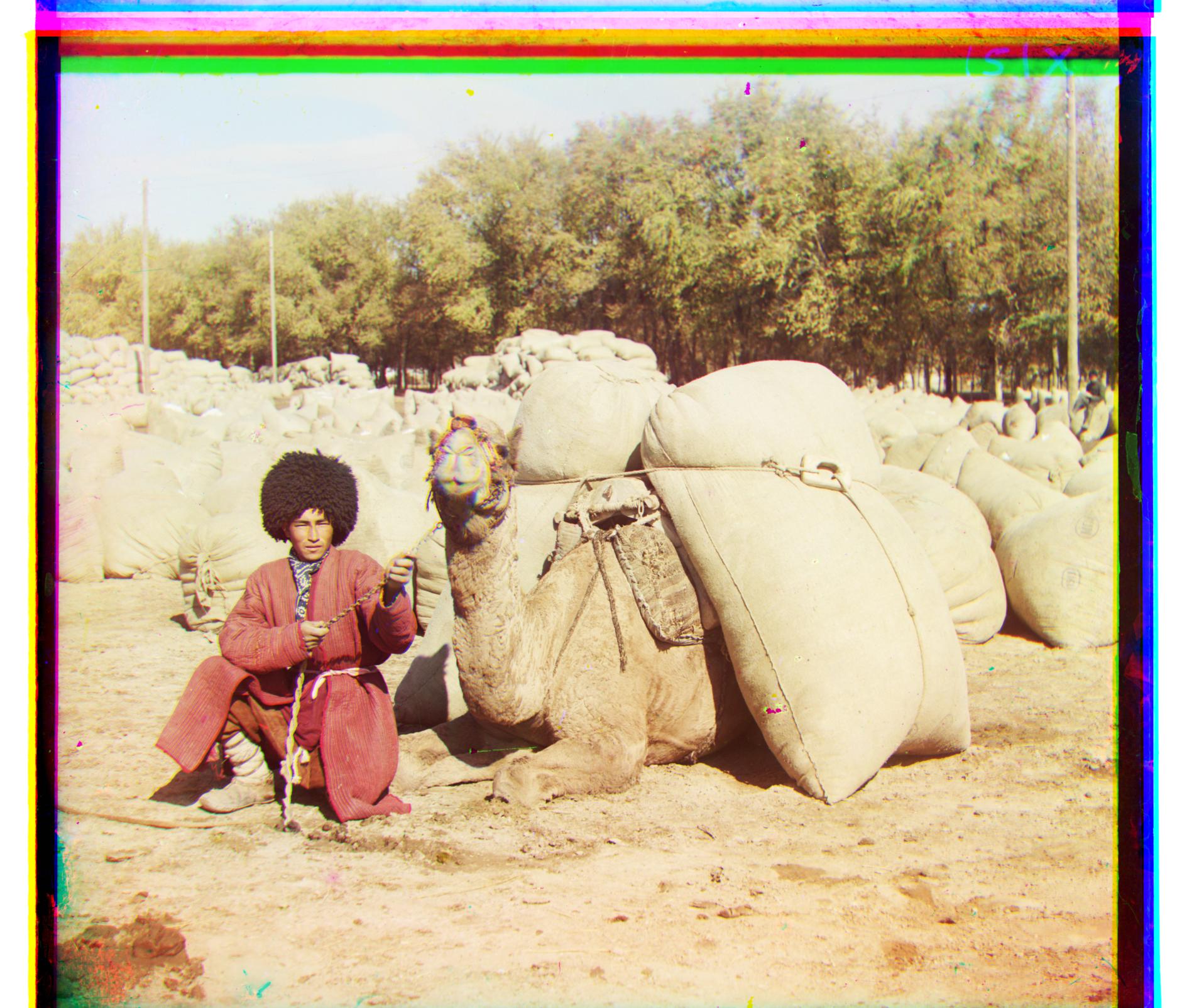

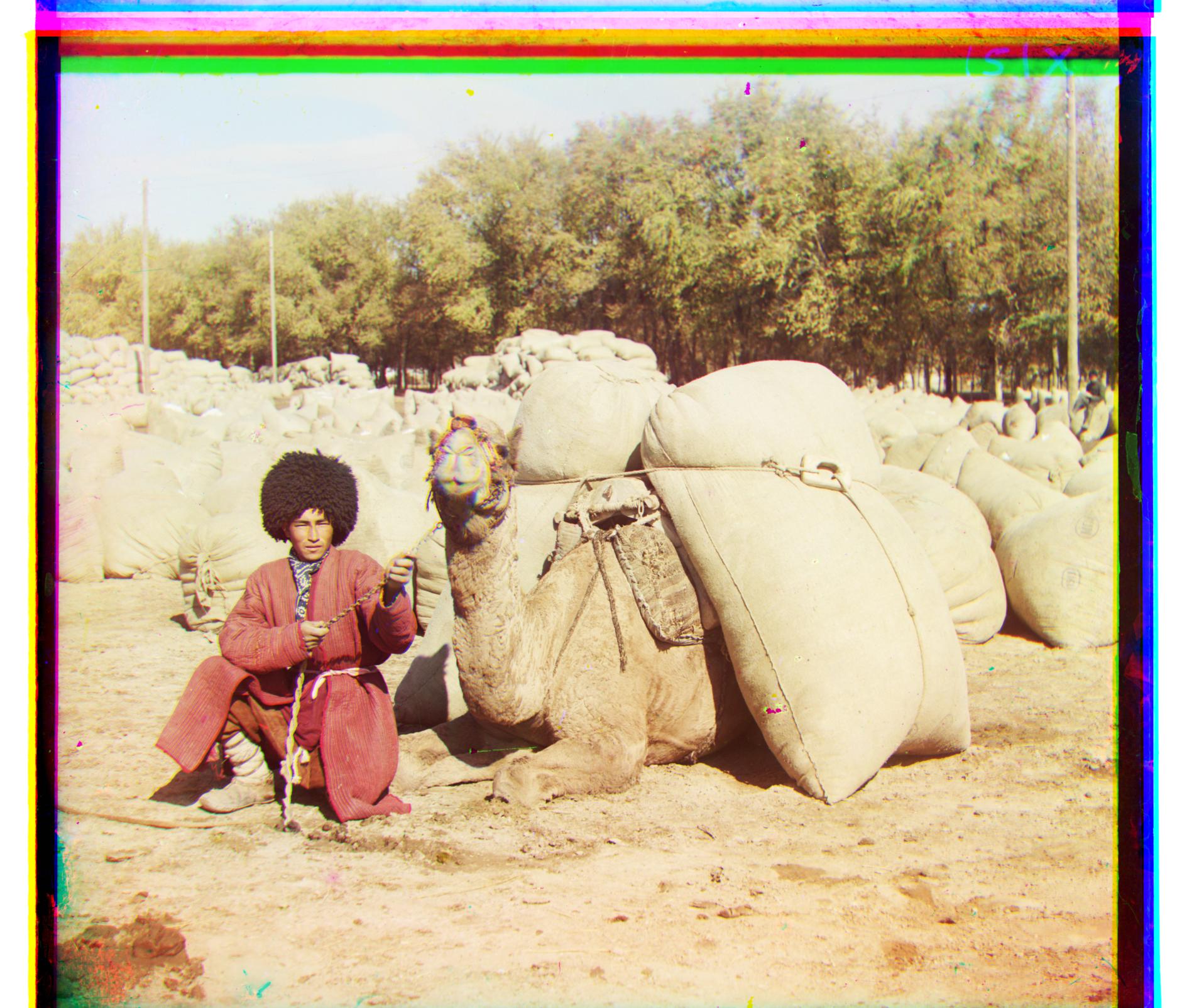

Results on example images

The project's goal is to recover the color photographs taken by Sergei Mikhailovich Prokudin-Gorskii. He did not have a color camera, so he used a black-and-white camera instead: for each scene, he took three pictures through red, green and blue filters respectively. The project produces a single color photograph from each set of three filtered black-and-white photographs.

Since these pictures were taken through red, green and blue filters, we can, as a first approximation, treat the three photos as the R, G and B channels. The algorithm chooses one of the three photos as the base and shifts each of the other two along both the x and y axes to find the best match. It then stacks the aligned photos together. As the matching criterion, I used normalized cross-correlation and picked the displacement that maximizes it. I used a search range of 30 pixels, which handles all of the small pictures shown below.
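A minimal sketch of the single-scale search follows. It assumes each channel is a 2D NumPy float array of the same shape, that shifts are applied with `np.roll` (wrap-around), and that the search range of 30 means -30 to +30 pixels on each axis. The names `ncc` and `align_exhaustive` are hypothetical and not taken from the original code.

```python
import numpy as np


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two same-shape arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def align_exhaustive(moving, base, radius=30):
    """Try every (dy, dx) in [-radius, radius] x [-radius, radius] and return
    the shift that maximizes NCC between the rolled `moving` and `base`."""
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = ncc(shifted, base)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

Stacking then amounts to rolling each non-base channel by its returned shift and combining the three channels with `np.dstack`.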

The algorithm described above handles small photos efficiently. For large photos, however, the displacement can be large, so an exhaustive two-dimensional search becomes slow. For large photos, I therefore used an image pyramid, which applies the small-image search at every level of a series of progressively downscaled copies. After the displacement is computed at a smaller scale, it is carried over to the next larger scale and refined there. In this way, the search interval needed at each scale is drastically reduced.
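The coarse-to-fine idea can be sketched as a recursion. This is a hypothetical sketch, not the original implementation. Downscaling here uses 2x2 block averaging in place of a library resize, and the names `align_pyramid`, `min_size`, `coarse_radius` and `radius` and their default values are illustrative assumptions.

```python
import numpy as np


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two same-shape arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def search(moving, base, center, radius):
    """Exhaustive NCC search over shifts within +/- radius of `center`."""
    best_score, best_shift = -np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            score = ncc(np.roll(moving, (dy, dx), axis=(0, 1)), base)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def downsample(img):
    """Halve the resolution by averaging 2x2 blocks (drops an odd last row/col)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def align_pyramid(moving, base, min_size=64, coarse_radius=15, radius=4):
    """Coarse-to-fine alignment: solve at half resolution, double that shift,
    then refine it with a small search at the current resolution."""
    if min(base.shape) <= min_size:
        # Coarsest level: plain exhaustive search around zero.
        return search(moving, base, (0, 0), coarse_radius)
    dy, dx = align_pyramid(downsample(moving), downsample(base),
                           min_size, coarse_radius, radius)
    return search(moving, base, (2 * dy, 2 * dx), radius)
```

Each finer level only searches a few pixels around twice the coarser estimate, so the work per level stays small even when the true displacement is large.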

1. When the entire image was used to search for the best displacement, some of the photos did not align well. The reason is that the borders of the image get rolled into positions where those pixels cannot be matched with the other photos. Therefore, I only used the central part of the image (from 1/4 to 3/4 along each side) for matching, and the results look good.

2. Initially, with the algorithm above, I could not align the photo 'self portrait' correctly. I resolved this by increasing the final output resolution. This works because the photo contains many small, detailed patterns in the bushes. If the image is scaled down too much, too many details are lost to get a successful match, while at a relatively high resolution the photo can be matched successfully.

3. Initially, the image 'emir' was not produced correctly. The reason is that I used the photo taken with the blue filter as the base. The man in the picture wears highly saturated blue clothing, so using blue as the base is a poor choice: the low red and green values in that region are hard to match against the high blue values. I resolved this problem by using the photo taken with the green filter as the base. The problem can also be resolved by matching on edge images, which is discussed later.
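The fixes from items 1 and 3 can be combined as follows. NCC is computed only on the central region (1/4 to 3/4 along each side), and the green channel serves as the base. This is a hypothetical sketch: `center`, `align_center` and `colorize` are illustrative names, and shifting with `np.roll` is an assumption.

```python
import numpy as np


def center(img):
    """Central region of a 2D array: rows and columns from 1/4 to 3/4."""
    h, w = img.shape
    return img[h // 4 : 3 * h // 4, w // 4 : 3 * w // 4]


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two same-shape arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def align_center(moving, base, radius=15):
    """Exhaustive search scored only on the central regions. Pixels wrapped
    around from the borders stay outside the scored region as long as
    radius < h/4 and radius < w/4."""
    base_c = center(base)
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = ncc(center(shifted), base_c)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def colorize(r, g, b, radius=15):
    """Align red and blue onto green (the base) and stack into an RGB image."""
    r_aligned = np.roll(r, align_center(r, g, radius), axis=(0, 1))
    b_aligned = np.roll(b, align_center(b, g, radius), axis=(0, 1))
    return np.dstack([r_aligned, g, b_aligned])
```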

My auto-crop algorithm scans the border iteratively from the outside in, looking for a sudden increase in average brightness. When an increase in brightness is detected, the image is cropped at that position. The picture is rotated four times so that the algorithm is applied to all four edges. The algorithm works on about 80% of the edges tested. When the brightness of a border is similar to that of the picture inside, or when the change is too gradual, the edge becomes hard to detect. In these cases, a fixed-width margin is cropped instead.
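The border scan can be sketched under several assumptions. The image is a NumPy array with values in [0, 1], and only the outer 10% of each side is scanned. A "sudden increase" is taken to mean the row mean rising by more than 0.1 over the previous row, and the fallback removes 3% of the side. These thresholds and the names `crop_top` and `auto_crop` are hypothetical, because the original values are not stated.

```python
import numpy as np


def crop_top(img, max_frac=0.1, jump=0.1, fallback_frac=0.03):
    """Number of rows to remove from the top edge.

    Walks inward from row 0 and stops at the first row whose mean brightness
    exceeds the previous row's by more than `jump`. If no such jump is found
    within the outer `max_frac` of the rows, a fixed `fallback_frac` is cut.
    """
    h = img.shape[0]
    row_means = img.mean(axis=tuple(range(1, img.ndim)))
    limit = max(2, int(h * max_frac))
    for i in range(1, limit):
        if row_means[i] - row_means[i - 1] > jump:
            return i
    return int(h * fallback_frac)


def auto_crop(img, **kwargs):
    """Crop all four edges: crop the top, rotate 90 degrees, repeat 4 times.
    After four rotations the image is back in its original orientation."""
    for _ in range(4):
        img = np.rot90(img[crop_top(img, **kwargs):])
    return img
```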

I found that the smallest pixel value in these pictures is already almost 0 and the largest is almost 1, so rescaling the pixel values to span the 0 to 1 range would not help much. Instead, I implemented an S-curve gamma function that spreads out the mid-range values, which increases the contrast of the photos.
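The exact curve used is not specified. One common S-shaped form, assumed here, applies a power curve separately to each half of the range around 0.5. With `gamma > 1`, the slope at 0.5 equals `gamma`, so mid-tones are stretched while shadows and highlights are compressed, and 0, 0.5 and 1 map to themselves. The function name `s_curve` and the default `gamma` are hypothetical.

```python
import numpy as np


def s_curve(img, gamma=1.5):
    """Symmetric S-curve on [0, 1] built from two mirrored power curves.

    Below 0.5: 0.5 * (2x)^gamma; above: 1 - 0.5 * (2(1 - x))^gamma.
    gamma > 1 increases mid-tone contrast; gamma = 1 is the identity.
    """
    x = np.clip(img, 0.0, 1.0)
    low = 0.5 * (2 * x) ** gamma
    high = 1 - 0.5 * (2 * (1 - x)) ** gamma
    return np.where(x < 0.5, low, high)
```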

For edge detection, I used the Sobel filter from the skimage package. The three pictures above are the output of the Sobel filter on the input photos. The edge images of the blue- and red-filtered photos are then matched to the edge image of the green-filtered photo with normalized cross-correlation, using the same search described in the 'Approach' section.
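The following is a sketch of edge-based alignment. To keep it dependency-free, the hypothetical helper `sobel_magnitude` computes the Sobel gradient magnitude with NumPy, using edge-replicated padding. `skimage.filters.sobel` provides an equivalent edge map, which may differ by a constant scale factor and in border handling; NCC ignores the constant scale. The names and the search radius are assumptions.

```python
import numpy as np


def sobel_magnitude(img):
    """Sobel gradient magnitude of a 2D array (edge-replicated padding)."""
    p = np.pad(img, 1, mode="edge")
    # Horizontal derivative: kernel [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]].
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[1:-1, :-2] - p[2:, :-2])
    # Vertical derivative: kernel [[-1, -2, -1], [0, 0, 0], [1, 2, 1]].
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[:-2, 1:-1] - p[:-2, 2:])
    return np.hypot(gx, gy)


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two same-shape arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def align_on_edges(moving, base, radius=15):
    """Exhaustive NCC search on Sobel edge maps instead of raw intensities."""
    em, eb = sobel_magnitude(moving), sobel_magnitude(base)
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            score = ncc(np.roll(em, (dy, dx), axis=(0, 1)), eb)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

The gradient magnitude is unchanged when brightness is inverted (x to 1 - x). Edge maps can therefore still match channels whose raw intensities disagree, which is the situation described for 'emir'.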