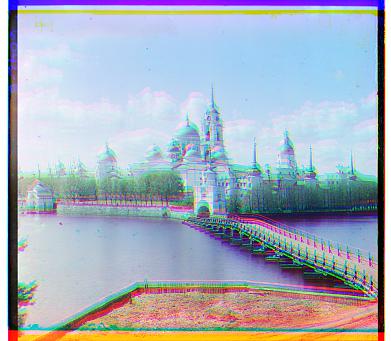

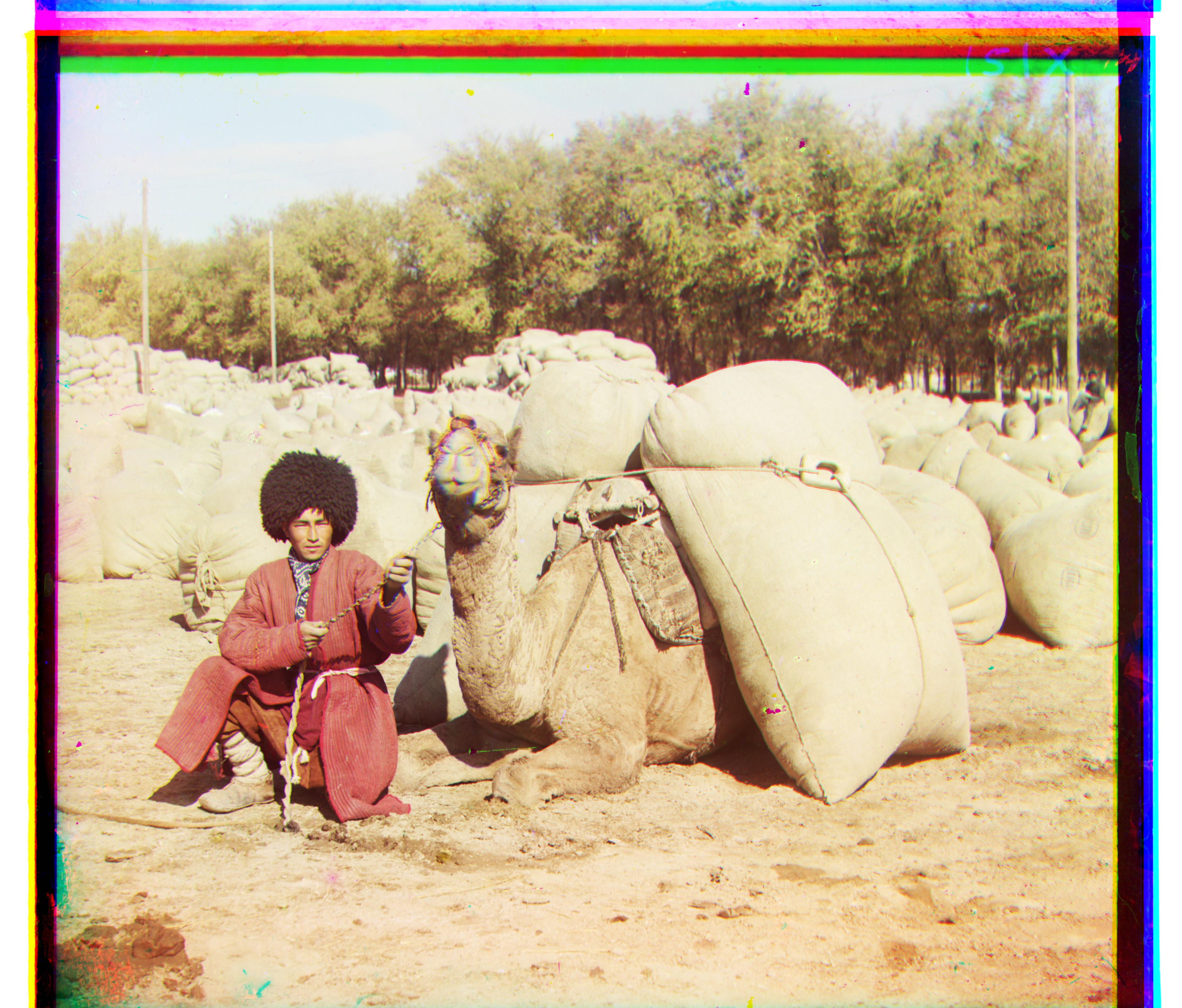

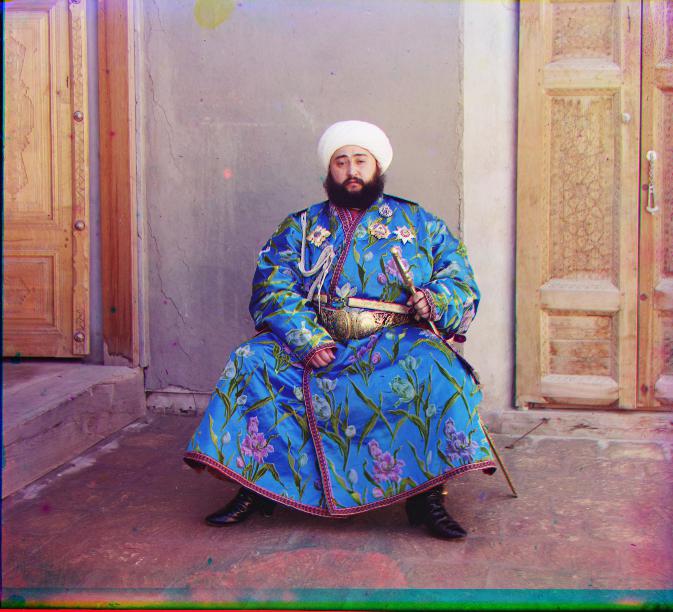

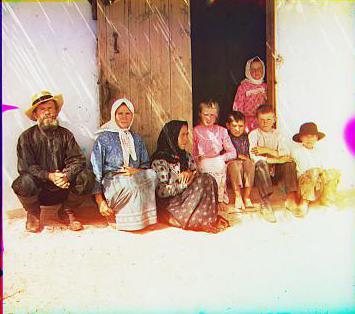

The goal of the assignment is to create a program that takes Prokudin-Gorskii glass plate negatives and aligns their color channels so that the resulting color image has as few visual artifacts as possible. Each glass plate negative is split into its Blue, Green, and Red color channels (top to bottom), as shown below:

After loading each image, the first step was to split it into three equal parts to get the plate's respective B, G, and R channels. This is simple and is already done in the starter code: the image is divided into three parts, each one third of its original height. The next step was to choose a metric for measuring the similarity between two images. To keep it simple, I considered only the two recommended metrics: the Sum of Squared Differences (SSD) and the normalized cross-correlation (NCC). In the end, I chose the NCC because it worked slightly better on the images, albeit a bit slower. I also excluded 10% of the image (the edges) from the NCC calculation, since the borders contribute nothing useful to aligning the channels.
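The splitting step and the two metrics can be sketched as follows. This is a minimal illustration rather than the starter code itself: the function names are hypothetical, and the 10% margin is assumed to be removed from each side of the image.

```python
import numpy as np

def split_channels(plate):
    """Split a stacked plate (B on top, then G, then R) into three equal-height parts."""
    h = plate.shape[0] // 3
    return plate[:h], plate[h:2 * h], plate[2 * h:3 * h]

def crop_interior(img, frac=0.10):
    """Drop a margin of `frac` of the height/width on every side (assumed reading of '10%')."""
    h, w = img.shape
    dy, dx = int(h * frac), int(w * frac)
    return img[dy:h - dy, dx:w - dx]

def ssd(a, b):
    """Sum of Squared Differences: lower means more similar."""
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.sum(diff ** 2))

def ncc(a, b):
    """Normalized cross-correlation: higher means more similar, 1.0 is a perfect match."""
    a = a.astype(np.float64).ravel()
    b = b.astype(np.float64).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0:
        return 0.0  # a constant image carries no alignment information
    return float(np.dot(a, b) / denom)
```

Because this version of the NCC subtracts the mean and divides by the norms, it is unaffected by a uniform change in brightness or contrast between channels, which is one plausible reason it can outperform SSD on these plates.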

Using the B channel as the base, I first exhaustively searched for the best displacement vectors for the G and R channels of each JPG file. It turns out that searching x-y displacements in the range [-15, 15] was sufficient for these files. For each x-y pair, I used np.roll to displace the G/R pixel matrices, calculated the NCC between the translated matrix and the B matrix, and returned the shifted matrix whose (x, y) displacement produced the largest NCC. Combining the newly aligned G and R matrices with the original B matrix produced the following results:
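A minimal sketch of this exhaustive search is shown below. The function names, the (rows, columns) ordering of the returned shift, and the per-side 10% margin are assumptions made for illustration, not necessarily how the original code is organized.

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation of two equally sized arrays."""
    a = a.astype(np.float64).ravel()
    b = b.astype(np.float64).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.dot(a, b) / denom) if denom > 0 else 0.0

def align_exhaustive(channel, base, radius=15, margin=0.10):
    """Try every shift in [-radius, radius]^2 and keep the one with the largest NCC.

    Returns ((dy, dx), shifted_channel). Only the interior of the image is
    scored, so the plate borders (and the pixels np.roll wraps around) are ignored.
    """
    h, w = base.shape
    my, mx = int(h * margin), int(w * margin)
    ref = base[my:h - my, mx:w - mx]
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            moved = np.roll(channel, (dy, dx), axis=(0, 1))
            score = ncc(moved[my:h - my, mx:w - mx], ref)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift, np.roll(channel, best_shift, axis=(0, 1))
```

With aligned channels `g_aligned` and `r_aligned`, the color image could then be assembled with something like `np.dstack([r_aligned, g_aligned, b])`.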

Displacement vectors: g - [5, 2]; r - [12, 3]

Displacement vectors: g - [-3, 2]; r - [3, 2]

Displacement vectors: g - [3, 1]; r - [7, 1]

Displacement vectors: g - [7, 0]; r - [14, -1]

Displacement vectors: g - [49, 24]; r - [103, 43]

Displacement vectors: g - [60, 16]; r - [124, 14]

Displacement vectors: g - [40, 17]; r - [89, 23]

Displacement vectors: g - [55, 8]; r - [110, 12]

Displacement vectors: g - [79, 29]; r - [175, 34]

Displacement vectors: g - [55, 13]; r - [112, 27]

Displacement vectors: g - [42, 6]; r - [87, 32]

Displacement vectors: g - [57, 20]; r - [114, 27]

Displacement vectors: g - [65, 12]; r - [138, 23]

Displacement vectors: g - [60, 3]; r - [137, 4]

Displacement vectors: g - [19, -2]; r - [116, -9]

Displacement vectors: g - [36, 10]; r - [83, 7]

Overall, the image pyramid algorithm did a decent job aligning all of the images.
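The writeup does not spell out the pyramid itself, so the following is only a sketch of one common coarse-to-fine scheme, not the author's implementation. It assumes 2x2 block averaging for downsampling, a full search at the coarsest level, and a small refinement window at each finer level; all names and default parameters are hypothetical.

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation of two equally sized arrays."""
    a = a.astype(np.float64).ravel()
    b = b.astype(np.float64).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.dot(a, b) / denom) if denom > 0 else 0.0

def downsample2(img):
    """Halve the resolution by averaging 2x2 blocks (an assumed downsampling choice)."""
    h, w = img.shape
    h2, w2 = h - h % 2, w - w % 2
    img = img[:h2, :w2].astype(np.float64)
    return img.reshape(h2 // 2, 2, w2 // 2, 2).mean(axis=(1, 3))

def best_shift(channel, base, center, radius, margin=0.10):
    """Search shifts within `radius` of `center`, scoring interior pixels with NCC."""
    h, w = base.shape
    my, mx = int(h * margin), int(w * margin)
    ref = base[my:h - my, mx:w - mx]
    best_score, best = -np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            moved = np.roll(channel, (dy, dx), axis=(0, 1))
            score = ncc(moved[my:h - my, mx:w - mx], ref)
            if score > best_score:
                best_score, best = score, (dy, dx)
    return best

def align_pyramid(channel, base, min_size=64, coarse_radius=15, refine_radius=2):
    """Coarse-to-fine alignment; returns the (dy, dx) shift at full resolution."""
    if min(base.shape) <= min_size:
        # Coarsest level: the image is small enough for a full search.
        return best_shift(channel, base, (0, 0), coarse_radius)
    dy, dx = align_pyramid(downsample2(channel), downsample2(base),
                           min_size, coarse_radius, refine_radius)
    # A shift found at half resolution doubles at this level; refine around it.
    return best_shift(channel, base, (2 * dy, 2 * dx), refine_radius)
```

The appeal of this design is cost: a displacement of over 100 pixels, like several of those reported above, would need a very large search window at full resolution, whereas here each level only evaluates a few dozen candidate shifts beyond the coarsest one.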

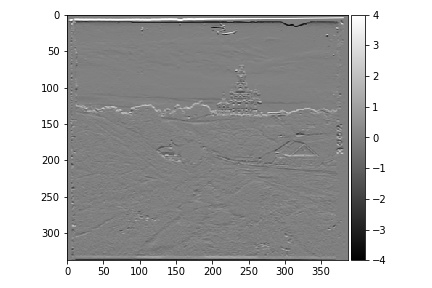

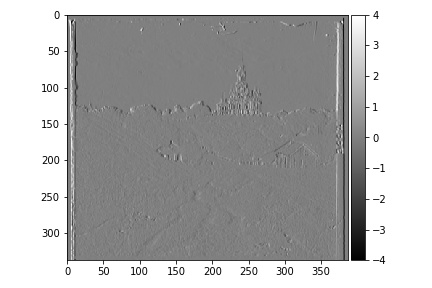

To deal with the black and white borders of the images, I applied horizontal and vertical Sobel filters to the image for edge detection. Below is the result of applying the horizontal and vertical filters to the blue channel of the cathedral.jpg image:
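For reference, the two Sobel responses can be computed with plain NumPy as in the sketch below. The helper name `filter3x3` is hypothetical, the kernels are applied as a cross-correlation (no kernel flip), and edge padding is an assumed boundary choice; a library routine would work equally well.

```python
import numpy as np

# Standard 3x3 Sobel kernels.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)  # horizontal gradient: responds to vertical edges
SOBEL_Y = SOBEL_X.T                                 # vertical gradient: responds to horizontal edges

def filter3x3(img, kernel):
    """Cross-correlate `img` with a 3x3 kernel, padding by repeating edge pixels."""
    padded = np.pad(img.astype(np.float64), 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * padded[i:i + h, j:j + w]
    return out
```

With these sign conventions, a dark-to-bright transition from left to right gives a positive `SOBEL_X` response and a bright-to-dark transition gives a negative one, which is what makes arg-max and arg-min useful for locating the border transitions.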

Determining where the white and black borders of each image lie from the above is relatively straightforward. Considering only pixels in the outer 1/5 of the image, finding the arg-min of pixel intensities for the left and top edges and the arg-max of pixel intensities for the right and bottom edges gives a generally good approximation. To keep things simple, I only looked at pixel values along the 4 quarters of the image for each position, took the max of the candidates for the left and top edges and the min for the right and bottom edges, and used those values as the crop bounds. Below are before and after pictures of the auto-cropping in action:
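The left/right half of this procedure might look like the sketch below. It is one possible reading of the description, not the original code: the scan lines are assumed to sit at the quarter points of the image height, `gx` is assumed to be a horizontal-gradient response (such as a Sobel output), and the function name is hypothetical.

```python
import numpy as np

def crop_bounds_lr(gx, frac=0.2, scan=(0.25, 0.5, 0.75)):
    """Estimate left/right crop columns from a horizontal-gradient image `gx`.

    Only the outer `frac` of the width is searched on each side. On the left the
    strongest negative response (arg-min) is taken, on the right the strongest
    positive response (arg-max). Across scan lines, the most conservative
    (innermost) estimate wins: max for the left edge, min for the right edge.
    """
    h, w = gx.shape
    band = max(1, int(w * frac))
    rows = [int(h * s) for s in scan]  # assumed scan-line positions
    left = max(int(np.argmin(gx[r, :band])) for r in rows)
    right = min(w - band + int(np.argmax(gx[r, w - band:])) for r in rows)
    return left, right
```

The top and bottom bounds follow by symmetry: calling the same function on the transposed vertical-gradient image, `crop_bounds_lr(gy.T)`, yields the top and bottom rows.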

One of the main issues with my approach is that it worked poorly for cropping the bottom border. This is because I used the blue channel to find the crop bounds, since the red and green channels are aligned to it anyway. The problem with using the blue channel is that its bottom border is only black and very thin. I therefore decided not to crop the bottom of the image, which still produced sufficient results. The consequence is that the oddly colored borders in the lower part of the image remain.