The goal of this project is to align three separately captured color channels (red, green, and blue) into a single RGB image. The challenge is that the channels differ from one another in brightness and can contain large distortions. The approach keeps one channel fixed and shifts the other two so that, once all three are stacked, the result looks like a normal RGB image.

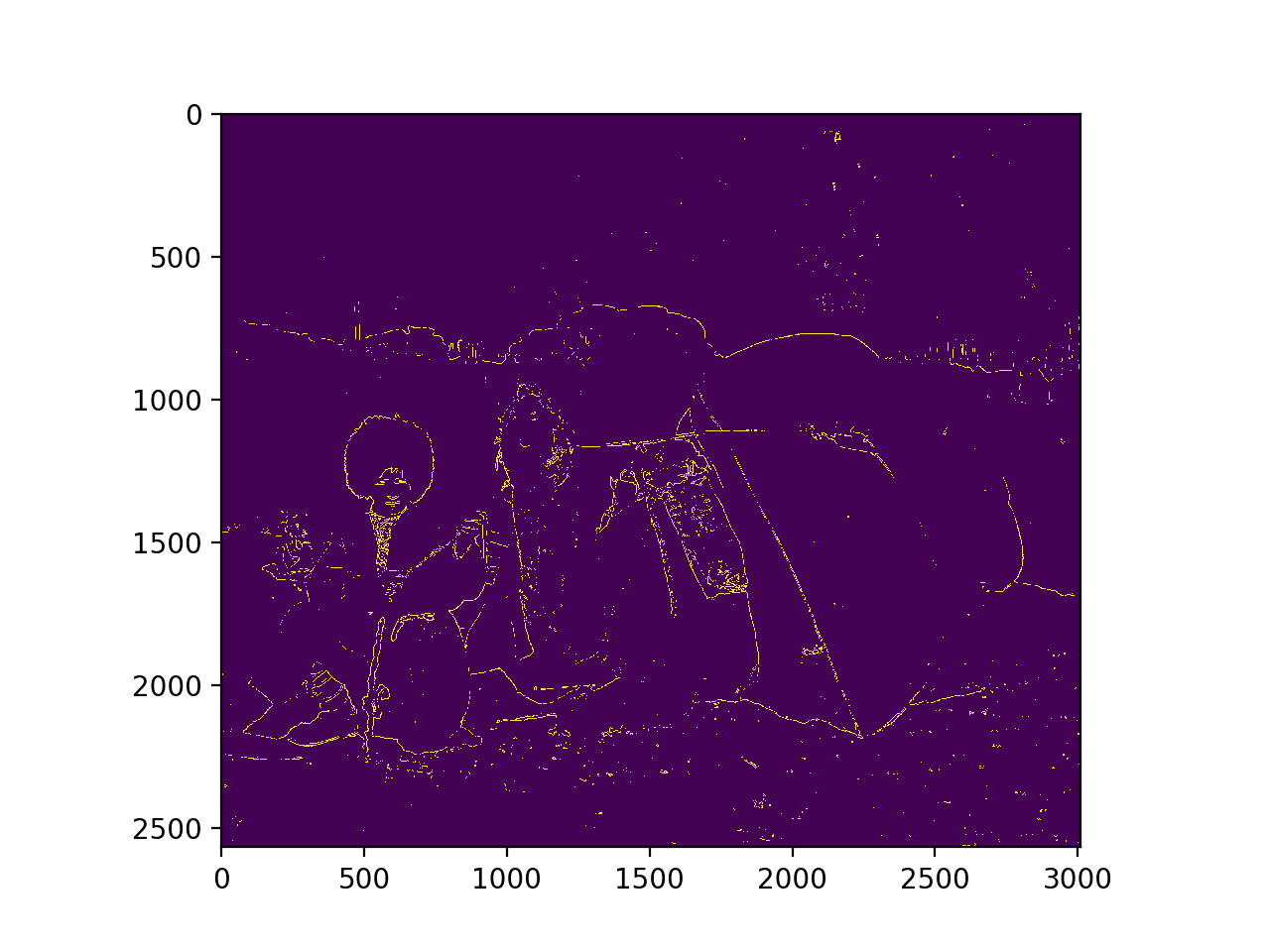

The initial approach is an exhaustive search over a window of candidate shifts. First, the image is cropped to remove the borders and other distortions that would throw off the alignment. Next, a Sobel filter is applied: edges are strong features to align on, and comparing edges rather than raw intensities ignores the different luminances of each channel. The best shift within a horizontal and vertical range of -15 to 15 pixels is then found by minimizing the sum of squared differences (SSD) between the two channels. Finally, each channel is shifted by its corresponding amount and the three channels are stacked into one image.
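The single-scale search can be sketched in NumPy as follows. This is a minimal sketch under several assumptions that the text does not state: channels are float arrays of equal shape, the border crop is 10% per side, blue is the fixed reference (the table below reports only green and red offsets), and shifts are applied with `np.roll`. The helper names `sobel_magnitude`, `crop_border`, `best_shift`, and `align_and_stack` are hypothetical.

```python
import numpy as np

def sobel_magnitude(img: np.ndarray) -> np.ndarray:
    """Gradient magnitude from the 3x3 Sobel kernels (pure NumPy, edge padding)."""
    p = np.pad(img.astype(np.float64), 1, mode="edge")
    # Horizontal gradient: right column minus left column, weighted 1-2-1.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[1:-1, :-2] - p[2:, :-2])
    # Vertical gradient: bottom row minus top row, weighted 1-2-1.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[:-2, 1:-1] - p[:-2, 2:])
    return np.hypot(gx, gy)

def crop_border(img: np.ndarray, frac: float = 0.1) -> np.ndarray:
    """Drop `frac` of each side; the 10% default is an assumed value."""
    h, w = img.shape
    dh, dw = int(h * frac), int(w * frac)
    return img[dh:h - dh, dw:w - dw]

def best_shift(moving, reference, radius=15, frac=0.1):
    """Exhaustive (dy, dx) search in [-radius, radius]^2 minimising SSD of Sobel edge maps."""
    m = sobel_magnitude(crop_border(moving, frac))
    r = sobel_magnitude(crop_border(reference, frac))
    k = radius + 1  # skip the band that np.roll wraps around
    r_inner = r[k:-k, k:-k]
    best, best_ssd = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(m, (dy, dx), axis=(0, 1))
            ssd = np.sum((shifted[k:-k, k:-k] - r_inner) ** 2)
            if ssd < best_ssd:
                best, best_ssd = (dy, dx), ssd
    return best

def align_and_stack(b, g, r, radius=15):
    """Align G and R to B (assumed reference) and stack the result as RGB."""
    g_shift = best_shift(g, b, radius)
    r_shift = best_shift(r, b, radius)
    g_aligned = np.roll(g, g_shift, axis=(0, 1))
    r_aligned = np.roll(r, r_shift, axis=(0, 1))
    return np.dstack([r_aligned, g_aligned, b]), g_shift, r_shift
```

With `radius=15` the search evaluates (2·15 + 1)² = 961 candidate shifts, which is why this brute-force version only suits small images. Because `np.roll` wraps pixels around the edges, the SSD ignores a band of `radius + 1` pixels on each side so wrapped pixels never enter the score.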

For larger images, whose offsets can far exceed the -15 to 15 pixel window, an image pyramid is constructed. This is a recursive process that applies the previous approach to successively smaller copies of the image, each downscaled by a factor of 2. The function first recurses until the image reaches a small, pre-specified size (200 pixels), where it finds a coarse but large-scale shift. As the recursion unwinds to each larger scale, it doubles the shift returned by the recursive call and adds a finer-tuned shift found at the current scale. To make this more efficient, the shift window is larger at smaller scales, since ideally the larger images only need to find fine-grained shifts. All of the images aligned without problems. Small images take ~0.15 seconds to align and large images take ~7 seconds.
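The recursion can be sketched as below. This is a hedged, self-contained sketch, not the project's code: the 200-pixel stopping size comes from the text, while the window radii (15 at the coarsest level, 2 at finer levels), the 2×2 block-average downsampling, the 10% crop, and the use of `np.gradient` as a lightweight stand-in for the Sobel filter are all assumptions. The names `edge_map`, `search`, `downsample`, and `pyramid_shift` are hypothetical.

```python
import numpy as np

def edge_map(img):
    """Gradient magnitude via np.gradient (a lightweight stand-in for Sobel)."""
    gy, gx = np.gradient(img.astype(np.float64))
    return np.hypot(gx, gy)

def search(moving, reference, radius, frac=0.1):
    """Exhaustive SSD search over (dy, dx) in [-radius, radius]^2 on cropped edge maps."""
    h, w = reference.shape
    dh, dw = int(h * frac), int(w * frac)  # assumed 10% border crop
    m = edge_map(moving[dh:h - dh, dw:w - dw])
    r = edge_map(reference[dh:h - dh, dw:w - dw])
    k = radius + 1  # skip the band that np.roll wraps around
    candidates = [(dy, dx) for dy in range(-radius, radius + 1)
                  for dx in range(-radius, radius + 1)]
    ssd = [np.sum((np.roll(m, s, axis=(0, 1))[k:-k, k:-k] - r[k:-k, k:-k]) ** 2)
           for s in candidates]
    return candidates[int(np.argmin(ssd))]

def downsample(img):
    """Halve resolution by averaging 2x2 blocks (an odd trailing row/column is dropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def pyramid_shift(moving, reference, min_size=200, coarse_radius=15, fine_radius=2):
    """Coarse-to-fine shift: wide search when small, narrow refinement when large."""
    if min(reference.shape) <= min_size:
        return search(moving, reference, coarse_radius)
    cy, cx = pyramid_shift(downsample(moving), downsample(reference),
                           min_size, coarse_radius, fine_radius)
    cy, cx = 2 * cy, 2 * cx  # a shift at half resolution doubles at this scale
    pre = np.roll(moving, (cy, cx), axis=(0, 1))
    dy, dx = search(pre, reference, fine_radius)  # fine-tune around the estimate
    return cy + dy, cx + dx
```

For an 800×800 channel this searches 961 candidates at 200×200 and only 25 at each of the 400×400 and 800×800 levels, instead of covering a window large enough for 100-pixel offsets at full resolution.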

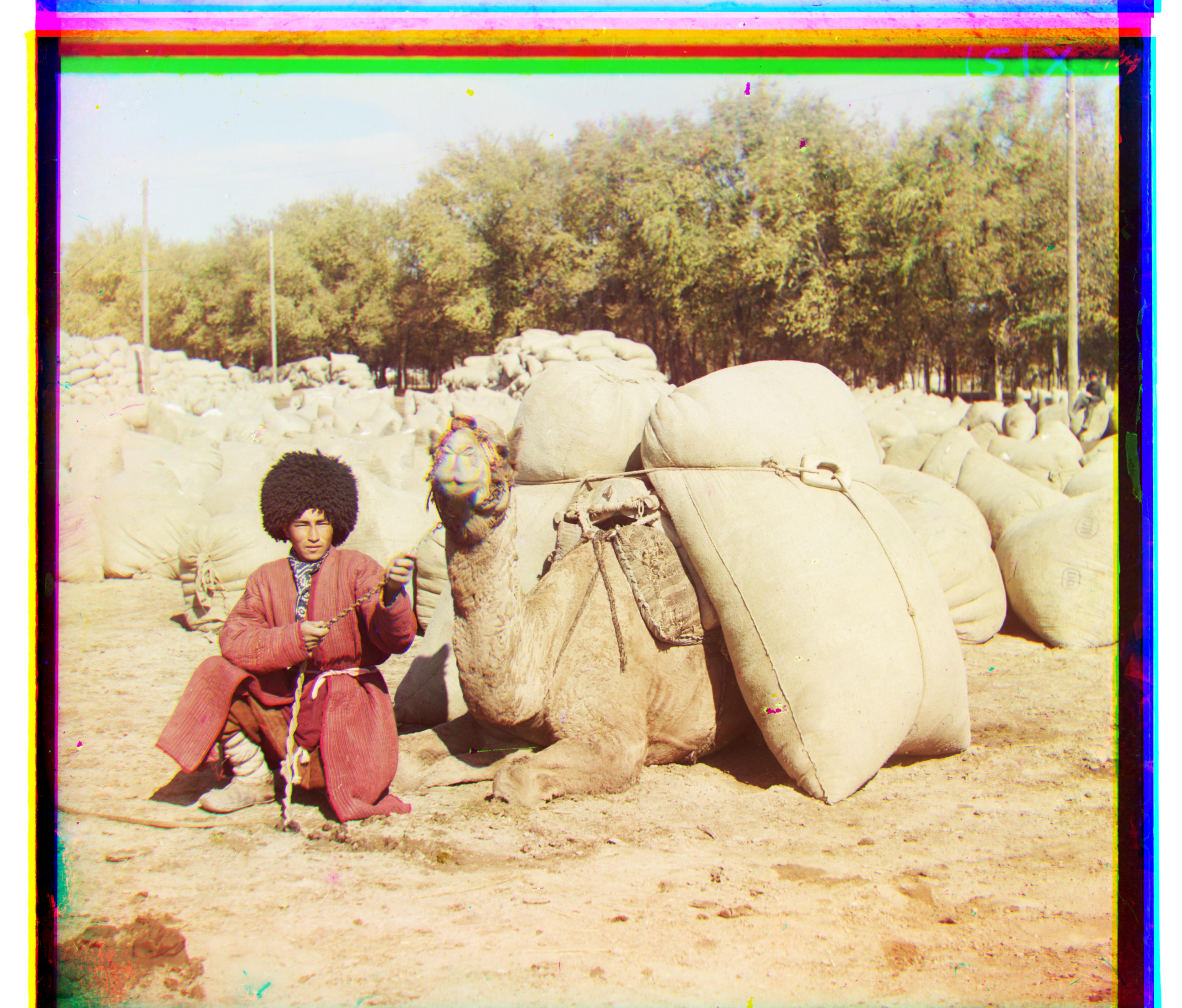

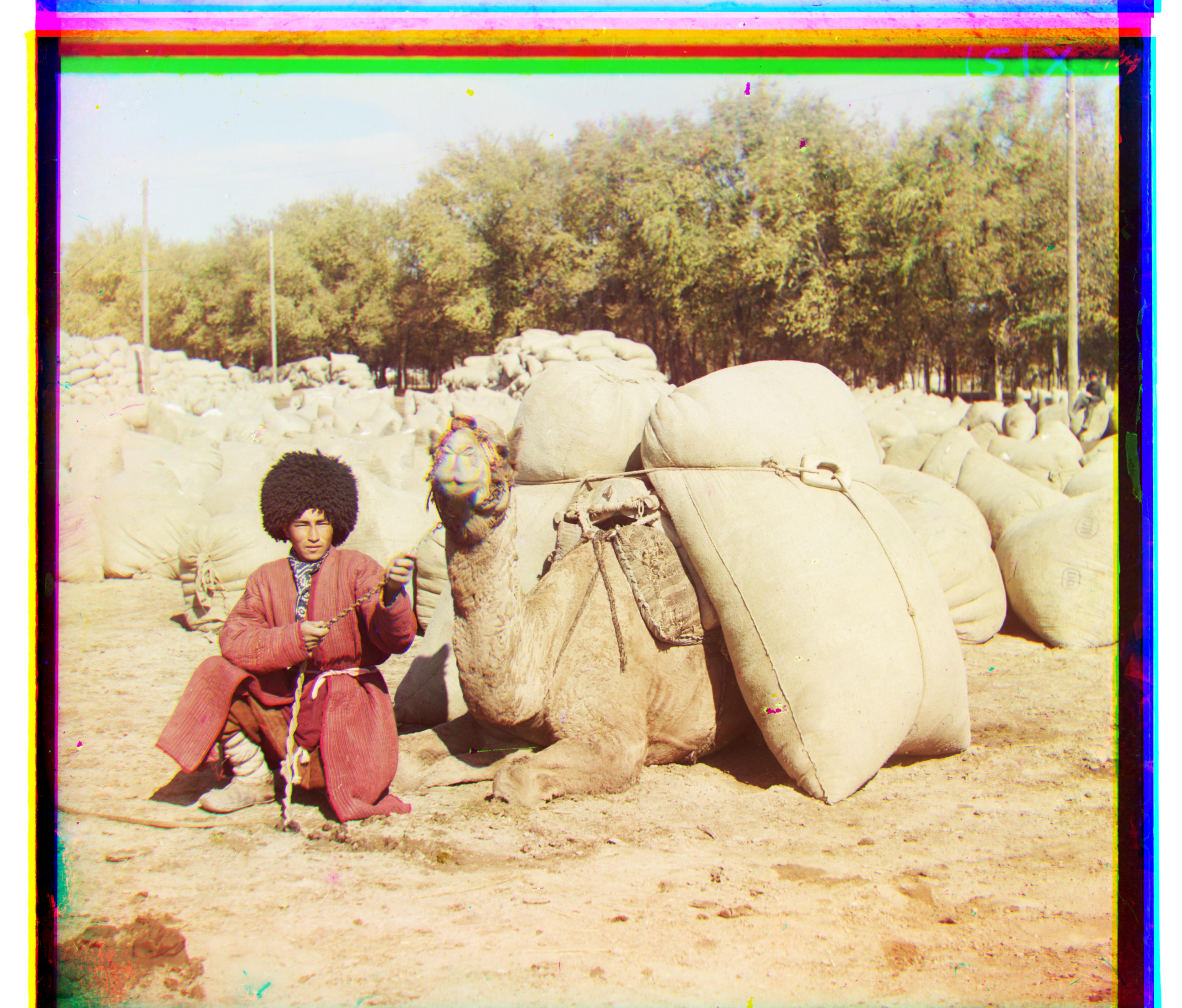

The results include the 13 provided images, followed by 3 extra images at the end.

The table below lists each image along with the offsets found for its green and red channels.

| Image name | Green x-shift | Green y-shift | Red x-shift | Red y-shift |

|---|---|---|---|---|

| cathedral.jpg | 2 | 5 | 3 | 12 |

| monastery.jpg | 2 | -3 | 2 | 3 |

| nativity.jpg | 1 | 3 | 0 | 8 |

| settlers.jpg | 0 | 7 | -1 | 14 |

| emir.tif | 24 | 49 | 40 | 107 |

| harvesters.tif | 17 | 60 | 14 | 124 |

| icon.tif | 17 | 42 | 23 | 90 |

| lady.tif | 9 | 56 | 13 | 120 |

| self_portrait.tif | 29 | 78 | 37 | 176 |

| three_generations.tif | 12 | 54 | 9 | 111 |

| train.tif | 2 | 41 | 29 | 85 |

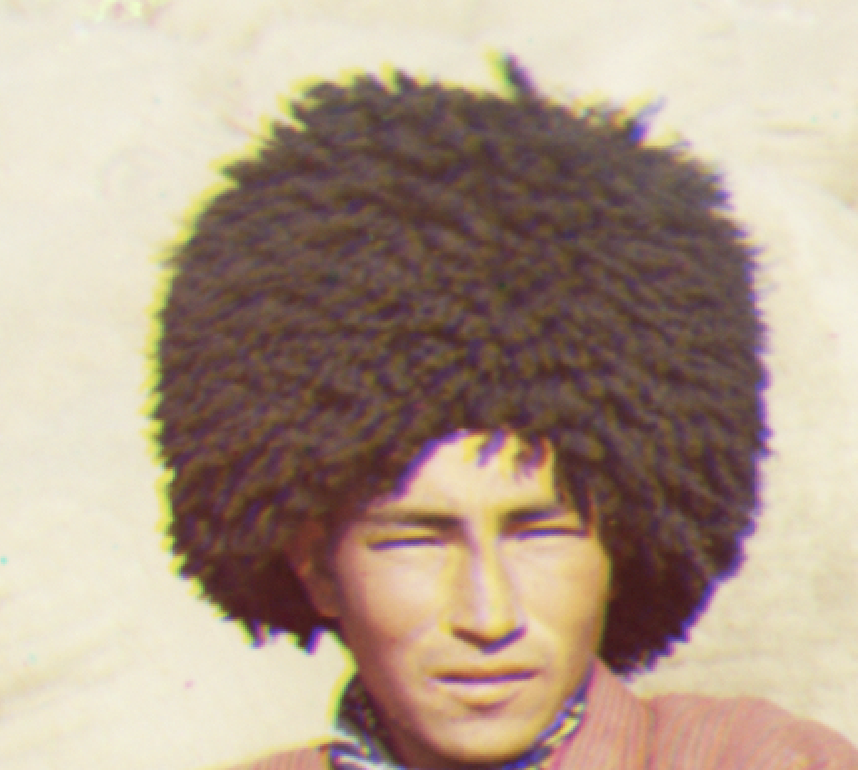

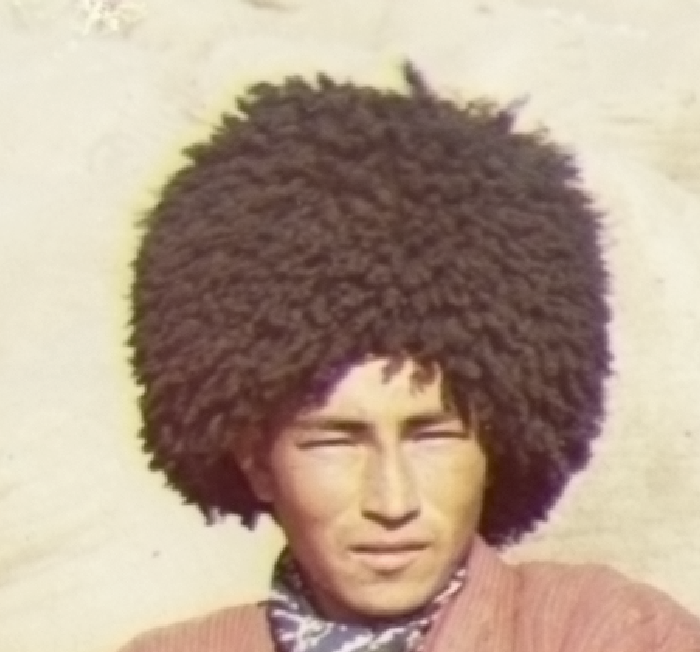

| turkmen.tif | 22 | 57 | 28 | 117 |

| village.tiff | 10 | 64 | 21 | 137 |

| extra_house.jpg | 1 | 2 | 1 | 7 |

| extra_monument.jpg | 1 | 1 | 2 | 3 |

| extra_water.jpg | 0 | 1 | 0 | 2 |

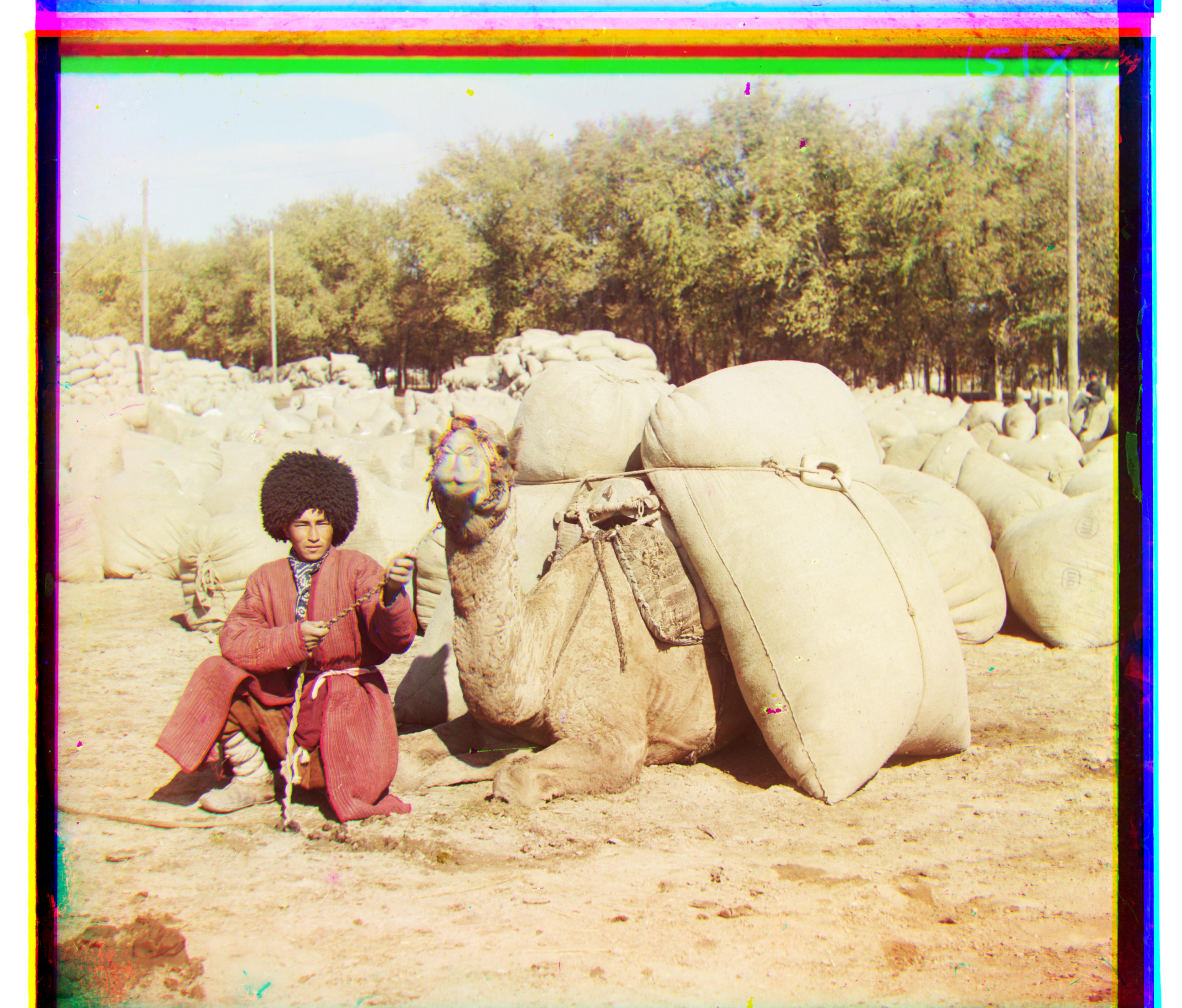

One issue with this dataset is that the distortion between channels is non-linear: channels may overlap well in one part of the image but poorly in another. Another well-known camera issue is chromatic aberration, where the lens has a different refractive index for different wavelengths of light. This typically produces edge effects in which object edges take on a magenta or greenish fringe, and it may be another reason why some parts of an image do not look aligned. To reduce this problem, chroma blurring is applied: the aligned image is converted to the LAB color space, a slight Gaussian blur is applied to the a and b (chroma) channels, and the image is converted back to RGB. This improves the quality of some fine-grained details. An example is shown below.

Here is a segment of one of the aligned images above. Notice the boundary effects.

While the boundary effects are reduced, this post-processing technique does make the image slightly blurrier in color (the luminance channel itself is not blurred).
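The chroma-blur step can be sketched with NumPy alone. This sketch assumes float sRGB input in [0, 1] and uses the standard D65 sRGB to XYZ to CIELAB formulas; the blur `sigma=1.5` is a hypothetical value for a "slight" blur, and the names `rgb_to_lab`, `lab_to_rgb`, `gaussian_blur`, and `chroma_blur` are illustrative. In practice, `skimage.color.rgb2lab`/`lab2rgb` and `scipy.ndimage.gaussian_filter` could replace the hand-written helpers.

```python
import numpy as np

# Standard sRGB (D65) -> XYZ matrix and D65 reference white.
_M = np.array([[0.4124564, 0.3575761, 0.1804375],
               [0.2126729, 0.7151522, 0.0721750],
               [0.0193339, 0.1191920, 0.9503041]])
_M_INV = np.linalg.inv(_M)
_WHITE = np.array([0.95047, 1.0, 1.08883])
_D = 6 / 29

def rgb_to_lab(rgb):
    """sRGB in [0, 1] -> CIELAB (L in [0, 100])."""
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = lin @ _M.T / _WHITE
    f = np.where(xyz > _D ** 3, np.cbrt(xyz), xyz / (3 * _D ** 2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)

def lab_to_rgb(lab):
    """CIELAB -> sRGB, clipped to [0, 1]."""
    fy = (lab[..., 0] + 16) / 116
    f = np.stack([fy + lab[..., 1] / 500, fy, fy - lab[..., 2] / 200], axis=-1)
    xyz = np.where(f > _D, f ** 3, 3 * _D ** 2 * (f - 4 / 29)) * _WHITE
    lin = xyz @ _M_INV.T
    srgb = np.where(lin <= 0.0031308, 12.92 * lin, 1.055 * np.abs(lin) ** (1 / 2.4) - 0.055)
    return np.clip(srgb, 0.0, 1.0)

def gaussian_blur(ch, sigma):
    """Separable Gaussian blur of a 2-D array with reflect padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    k /= k.sum()
    for axis in (0, 1):
        pad = [(radius, radius) if a == axis else (0, 0) for a in (0, 1)]
        p = np.pad(ch, pad, mode="reflect")
        n = ch.shape[axis]
        # Weighted sum of shifted windows = 1-D convolution along `axis`.
        ch = sum(w * np.take(p, np.arange(i, i + n), axis=axis) for i, w in enumerate(k))
    return ch

def chroma_blur(rgb, sigma=1.5):
    """Blur only the a and b channels in LAB, leaving L untouched."""
    lab = rgb_to_lab(rgb)
    lab[..., 1] = gaussian_blur(lab[..., 1], sigma)
    lab[..., 2] = gaussian_blur(lab[..., 2], sigma)
    return lab_to_rgb(lab)
```

Because the L channel passes through unchanged, fine luminance detail is kept while color fringes are softened; the final clip to [0, 1] can alter a pixel slightly when the blurred chroma falls outside the sRGB gamut.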