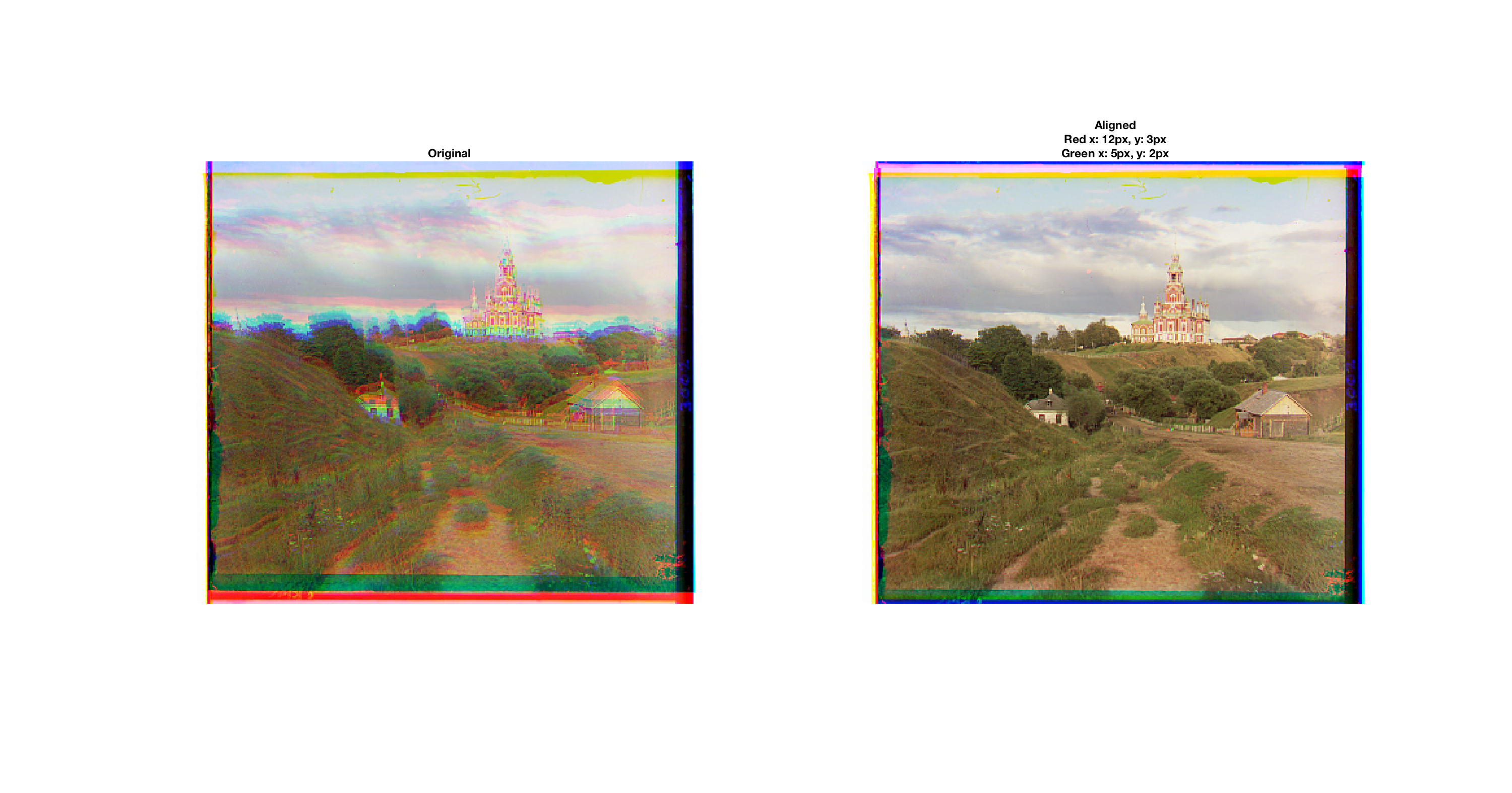

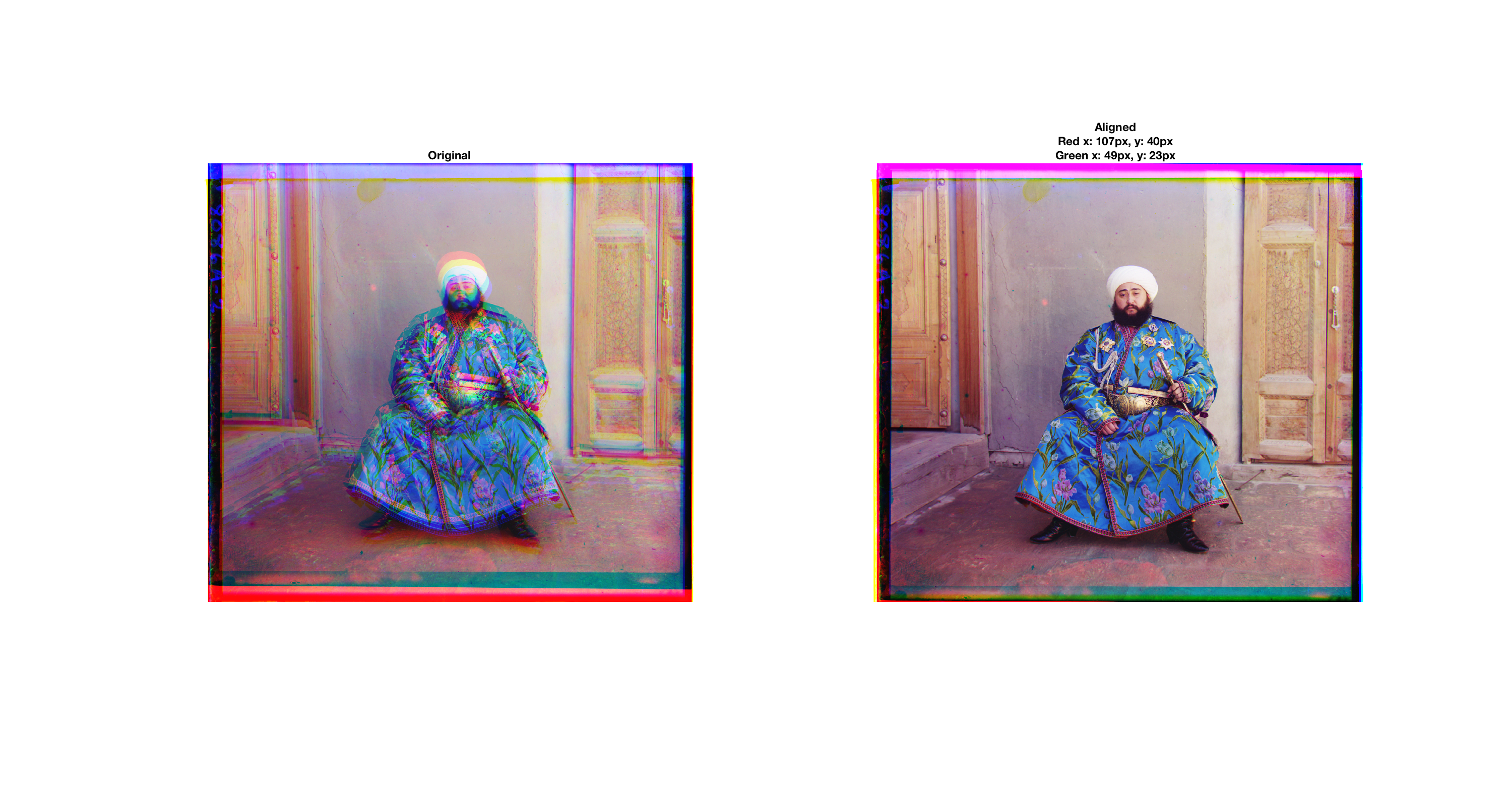

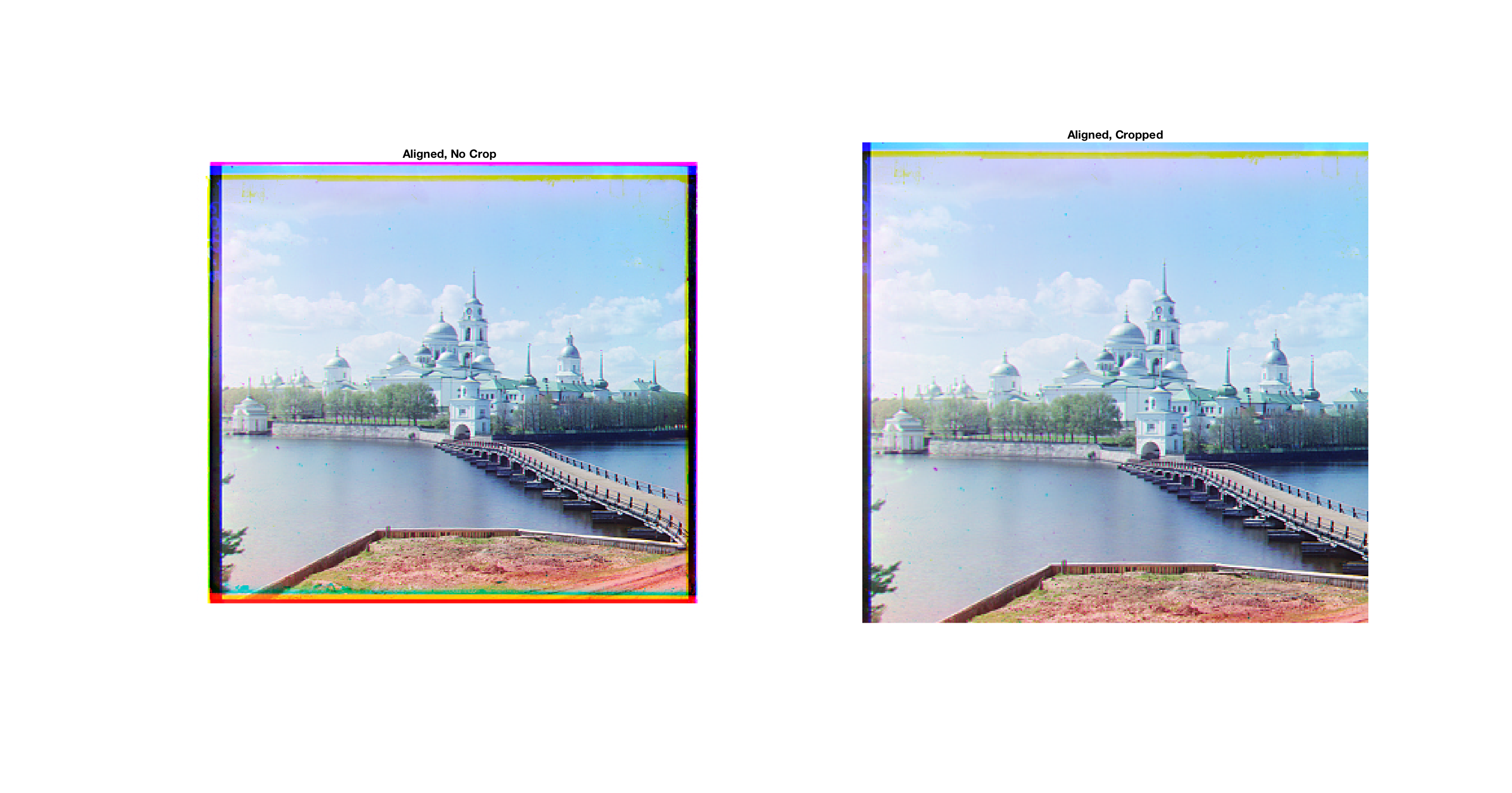

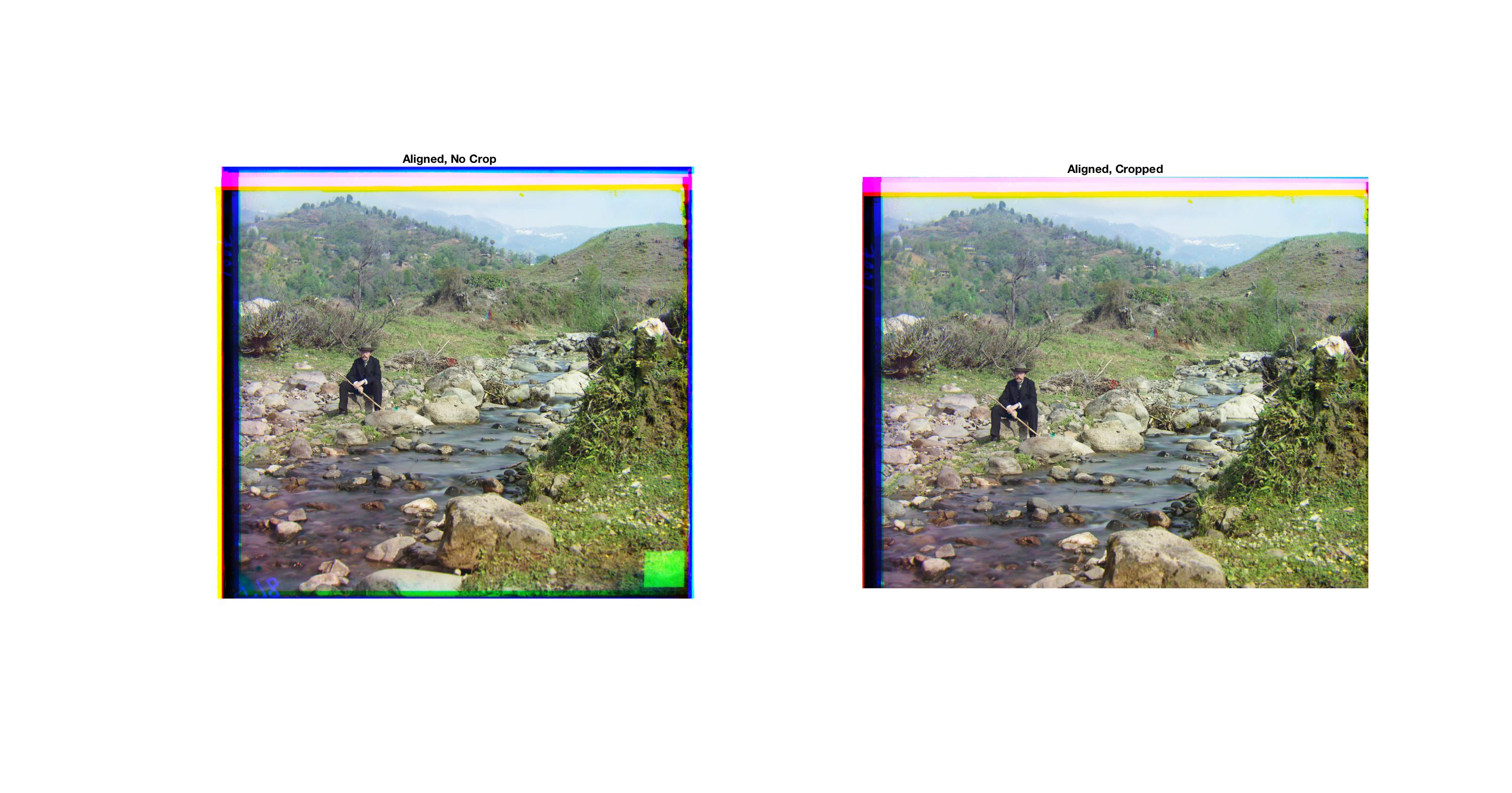

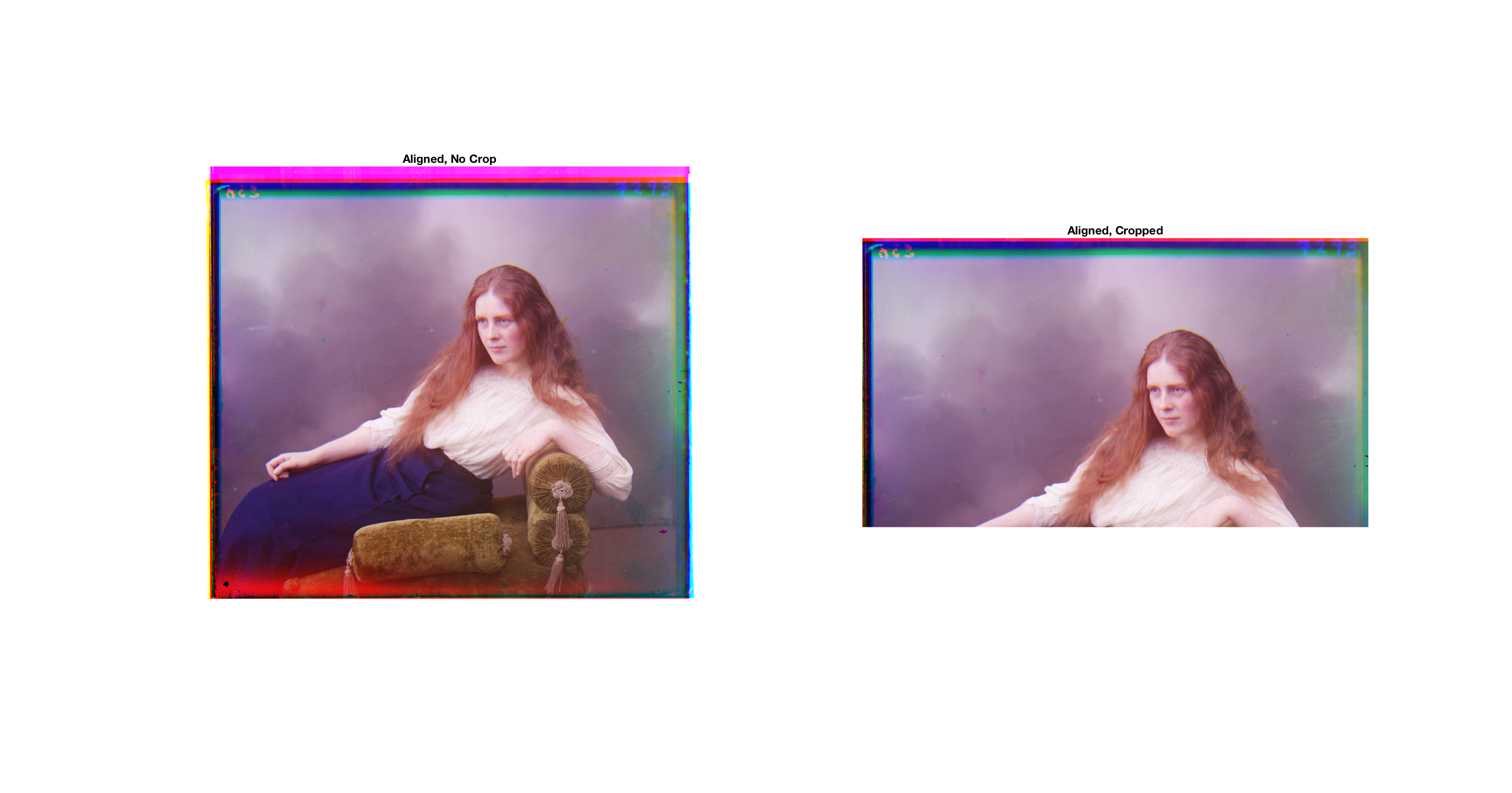

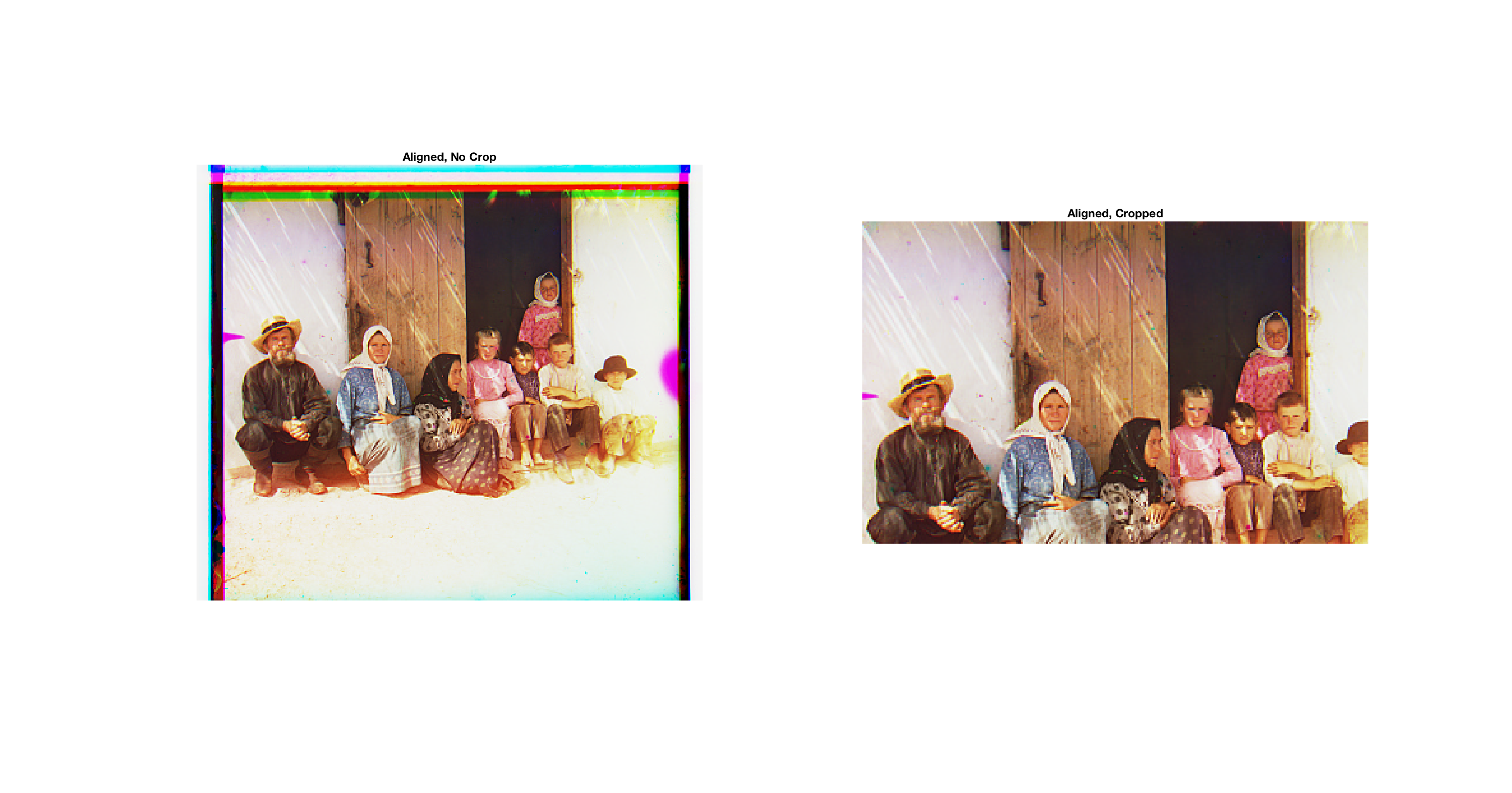

Sergei Mikhailovich Prokudin-Gorskii (1863-1944) photographed various scenes in the Russian Empire as three grayscale exposures, each taken through a different color filter: red, green, and blue. The Library of Congress has digitally scanned the negatives and published them in its online archive. Because the camera and scene were not perfectly still between the three exposures, naively stacking the RGB color channels on top of each other does not produce a clean full-color image. This project aims to spatially align the three color channels to produce a high-quality color image with few artifacts.

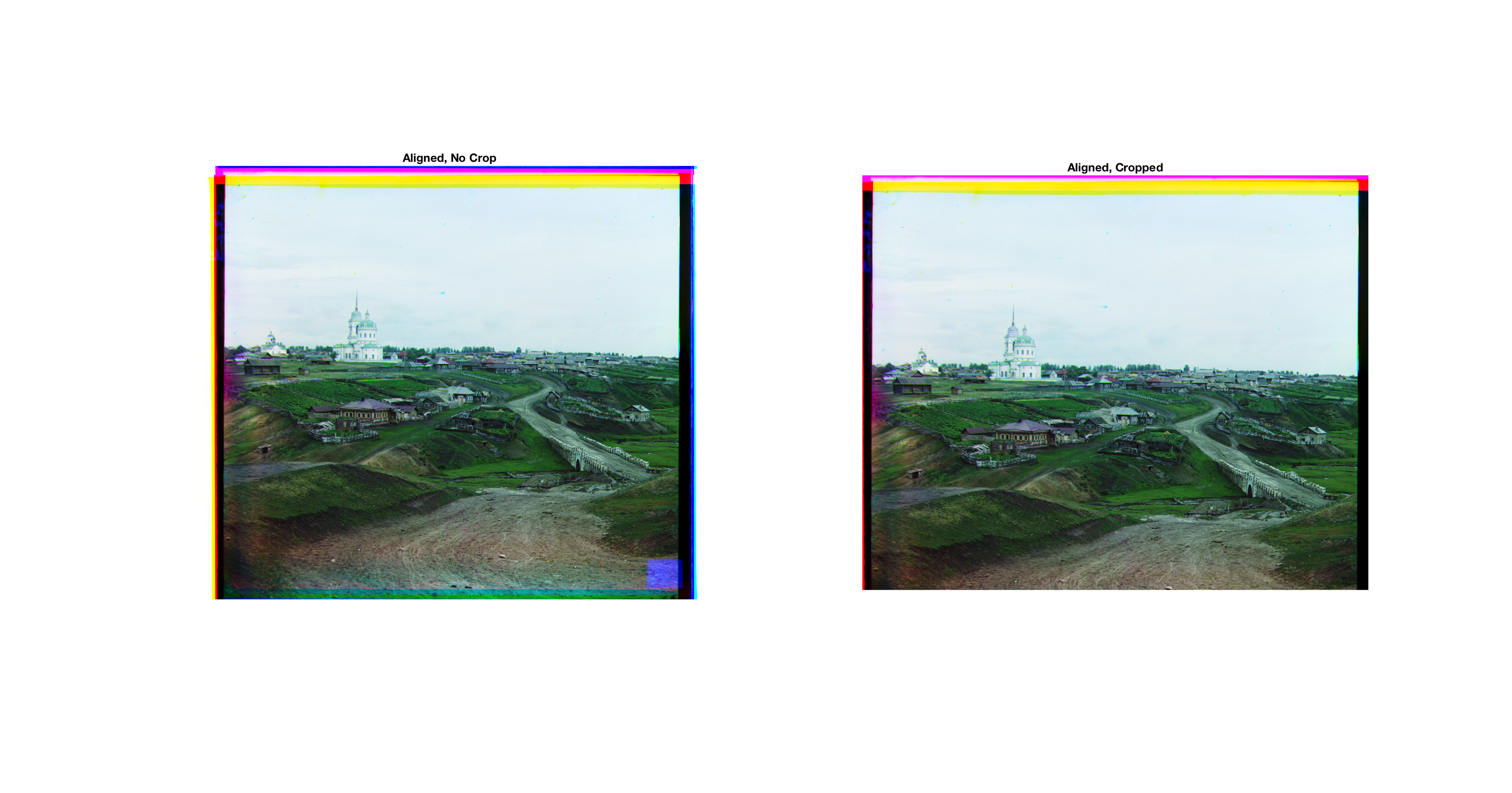

The main idea is to circularly shift the red and green color channels in the x and y directions so that each channel's normalized cross-correlation (NCC) score with the blue channel is maximized. The NCC is defined as `dot(image1 ./ ||image1||, image2 ./ ||image2||)`, where image1 and image2 are the vectorized (flattened) versions of the two 2D grayscale images. This quantity is often called the "cosine similarity" between two vectors. Because the scans have colored borders that are not part of the photograph, only the central 70% of the image along each axis is used when computing the NCC score.
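As a minimal sketch, assuming NumPy and single-channel float arrays, the scoring and the brute-force search over circular shifts could look like the following. The helper names (`center_crop`, `ncc`, `align_exhaustive`) and the default search radius of 15 pixels are illustrative assumptions, not the original implementation. The shift is returned as `(dy, dx)`, the row/column order that `np.roll` expects.

```python
import numpy as np


def center_crop(img: np.ndarray, keep: float = 0.7) -> np.ndarray:
    """Keep the central `keep` fraction of the image along each axis."""
    h, w = img.shape
    dh, dw = int(round(h * (1 - keep) / 2)), int(round(w * (1 - keep) / 2))
    return img[dh:h - dh, dw:w - dw]


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two images flattened to vectors."""
    a, b = a.ravel().astype(np.float64), b.ravel().astype(np.float64)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


def align_exhaustive(channel, reference, radius=15, keep=0.7):
    """Try every circular shift in [-radius, radius]^2; return the best (dy, dx)."""
    ref = center_crop(reference, keep)
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            # Score only the central region so the scan borders are ignored.
            score = ncc(center_crop(shifted, keep), ref)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

Every candidate shift rescores the whole cropped image, which is why this version becomes slow on the large scans.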

The algorithm should output the circular shift in x and y that maximizes this score, separately for the red and green channels. A brute-force search is unreasonably slow on the large images: searching a window of [-dx, dx] × [-dy, dy] means scoring (2dx + 1)(2dy + 1) candidate shifts, each of which requires an NCC over the whole image, so the cost grows quadratically with the search radius.

To solve this, I used an image pyramid. Most of the shift is estimated on a coarse (read: small, subsampled) image. At the next level of the pyramid, the larger image is shifted by the scaled-up estimate from the previous level and searched again with a smaller window, and so on down to the full-resolution image. The shift found at each level is scaled by the inverse of that level's downscale factor, and all the scaled shifts are accumulated into a single x and y shift that is applied to the original image. In other words, as the algorithm moves from the top of the pyramid to the bottom, the search window shrinks by the same factor by which the image grows. Although the search at each level is still exhaustive, this greatly reduces the wall-clock run time: each small image takes under 1 s to align, and each large image takes under 8 s (including the edge detection described below).
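The coarse-to-fine search can be sketched as below, again assuming NumPy. The 2x2 block-average `downscale`, the four pyramid levels, and the starting radius of 16 are hypothetical choices for illustration. Rather than physically shifting the image before each refinement, this version centers the small search window on the doubled estimate from the coarser level, which accumulates the per-level shifts in the same way.

```python
import numpy as np


def center_crop(img: np.ndarray, keep: float = 0.7) -> np.ndarray:
    """Keep the central `keep` fraction of the image along each axis."""
    h, w = img.shape
    dh, dw = int(round(h * (1 - keep) / 2)), int(round(w * (1 - keep) / 2))
    return img[dh:h - dh, dw:w - dw]


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two images flattened to vectors."""
    a, b = a.ravel().astype(np.float64), b.ravel().astype(np.float64)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


def search(channel, reference, center, radius, keep=0.7):
    """Exhaustively test circular shifts within `radius` of `center`."""
    ref = center_crop(reference, keep)
    best_score, best = -np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = ncc(center_crop(shifted, keep), ref)
            if score > best_score:
                best_score, best = score, (dy, dx)
    return best


def downscale(img: np.ndarray) -> np.ndarray:
    """Halve the resolution by averaging 2x2 blocks (an odd last row/column is dropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])


def align_pyramid(channel, reference, levels=4, radius=16):
    """Coarse-to-fine alignment; returns the (dy, dx) roll that aligns `channel`."""
    chans, refs = [channel], [reference]
    for _ in range(levels - 1):
        chans.append(downscale(chans[-1]))
        refs.append(downscale(refs[-1]))
    shift = (0, 0)
    # Walk from the coarsest level (end of the lists) to full resolution.
    for level, (c, ref) in enumerate(zip(reversed(chans), reversed(refs))):
        if level > 0:
            # The next level has twice the resolution, so the estimate doubles.
            shift = (2 * shift[0], 2 * shift[1])
        shift = search(c, ref, shift, radius)
        # The window shrinks by the same factor the image grows.
        radius = max(radius // 2, 1)
    return shift
```

The returned `(dy, dx)` can be applied with `np.roll(channel, (dy, dx), axis=(0, 1))`, after which the aligned red, green, and blue channels can be stacked into a color image with `np.dstack`.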

You may view all of them here.

Note: The results shown use Canny edge maps, rather than raw pixel intensities, as the features for computing the NCC, as described below. All images except emir.tif align well without edge features, but I omitted those results since the difference in image quality is imperceptible.

Because edges depend on changes in intensity rather than on absolute pixel values, which differ from one color channel to another, the binary edge image produced above makes for a much more reliable scoring metric.
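The Canny detector itself is not reproduced here. As a hedged sketch of why binary edge maps help, the snippet below substitutes a much simpler edge map (a thresholded gradient magnitude with no non-maximum suppression or hysteresis) and uses a made-up example in which two channels show the same square with opposite contrast. The relative threshold of 0.2 is an assumption. With scikit-image installed, `skimage.feature.canny(image, sigma=...)` returns a boolean edge map that could be used in place of `edge_map`.

```python
import numpy as np


def edge_map(img: np.ndarray, rel_threshold: float = 0.2) -> np.ndarray:
    """Binary edges from thresholded gradient magnitude (a simplified stand-in for Canny)."""
    gy, gx = np.gradient(img.astype(np.float64))
    mag = np.hypot(gx, gy)
    if mag.max() == 0:
        return np.zeros(img.shape, dtype=bool)
    # The threshold is relative, so overall brightness and contrast do not matter.
    return mag > rel_threshold * mag.max()


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two images flattened to vectors."""
    a, b = a.ravel().astype(np.float64), b.ravel().astype(np.float64)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


# Hypothetical example: the same square appears bright in one channel
# and dark (at a different brightness) in the other.
blue = np.zeros((40, 40))
blue[10:30, 10:30] = 1.0
red = 1.0 - 0.5 * blue

raw_score = ncc(red, blue)                       # low: intensities disagree
edge_score = ncc(edge_map(red), edge_map(blue))  # ~1.0: edges coincide
```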

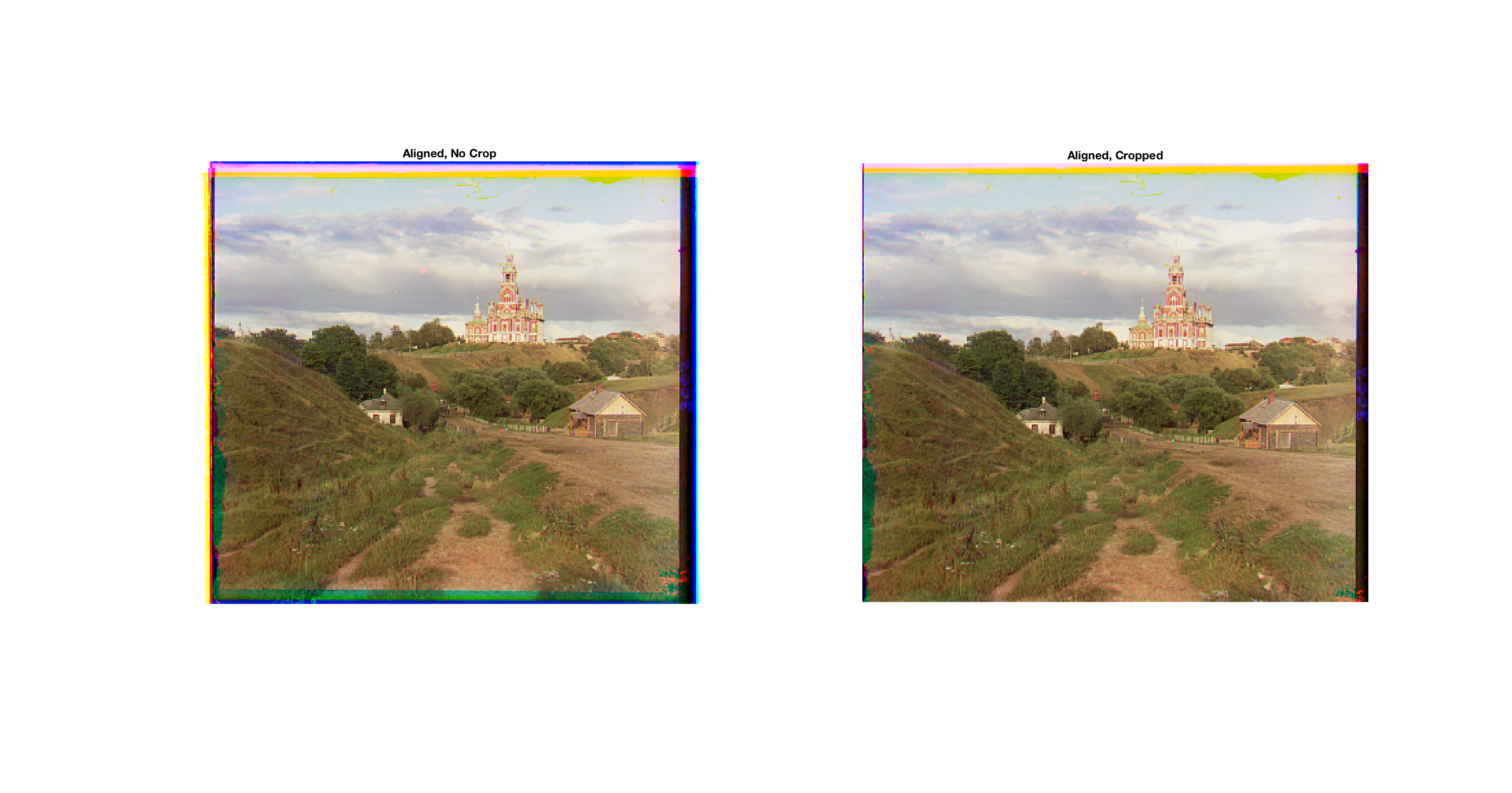

Of course, this algorithm assumes that 1) the maximum error occurs at the borders, and 2) the errors past that maximum are negligible.

Most of the bad results crop the image too far in, so I clearly need a better method for locating the borders. If I were to approach this problem again, I would try identifying the borders by searching for straight lines in the color image.