Sergei Mikhailovich Prokudin-Gorskii was one of the pioneers of color photography.
He used his technique to capture a wide range of scenes in early twentieth-century Russia.
To capture color, Prokudin-Gorskii took three exposures of the same subject,
each through a different colored filter: red, green, or blue. Each exposure
was recorded on a glass plate, and the three could later be recombined into a color image.

Reconstructing those color images is essentially the goal of this project. We sought to develop an
algorithm that takes a digitized version of a glass plate image and outputs a single
color image. This involved separating the glass plate image file into its three color
channel images, aligning them, and then stacking them to produce one
RGB color image.
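
The separate-then-stack steps can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes the digitized plate is a single grayscale array with the three exposures stacked vertically in blue, green, red order from top to bottom, and the helper names `split_plate` and `stack_rgb` are hypothetical.

```python
import numpy as np

def split_plate(plate):
    """Split a vertically stacked plate scan into (blue, green, red) channels.

    Assumption: the exposures are stacked top to bottom as blue, green, red.
    """
    height = plate.shape[0] // 3  # drop any leftover rows so all thirds match
    blue = plate[:height]
    green = plate[height:2 * height]
    red = plate[2 * height:3 * height]
    return blue, green, red

def stack_rgb(red, green, blue):
    """Stack three (already aligned) channels into one H x W x 3 RGB image."""
    return np.dstack([red, green, blue])

# Tiny synthetic "plate": 7 rows (one leftover row) and 2 columns.
plate = np.arange(14, dtype=float).reshape(7, 2)
b, g, r = split_plate(plate)
rgb = stack_rgb(r, g, b)
print(rgb.shape)  # (2, 2, 3)
```

In the real pipeline the alignment step would run between `split_plate` and `stack_rgb`.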

I started my project by implementing the single-scale search procedure using for loops,
as suggested by the spec. For the metric, I initially used the sum of squared differences (SSD),
keeping track of the minimum SSD and its corresponding horizontal and vertical offset as I searched
the [-15, 15] window of displacements. I tested on the cathedral image, and it worked as expected.
After browsing Piazza and seeing recommendations to use normalized cross-correlation (NCC) instead,
I implemented a function to compute the NCC and used it as the metric, with no changes to the
search procedure.
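
A minimal sketch of this kind of exhaustive search is shown below. It is an illustration under assumptions rather than the original implementation: the function names are hypothetical, shifts are applied with `np.roll` (which wraps pixels around the edges), and the NCC here subtracts the mean before normalizing, which may differ from the variant used in the project.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences: lower means more similar."""
    return np.sum((a - b) ** 2)

def ncc(a, b):
    """Zero-mean normalized cross-correlation: higher means more similar."""
    a = a - a.mean()
    b = b - b.mean()
    return np.sum(a * b) / (np.linalg.norm(a) * np.linalg.norm(b))

def single_scale_search(image, base, radius=15):
    """Return the (dx, dy) shift of `image` that best matches `base` under NCC."""
    best_score, best_offset = -np.inf, (0, 0)
    for dx in range(-radius, radius + 1):
        for dy in range(-radius, radius + 1):
            # np.roll takes (row shift, column shift), i.e. (dy, dx).
            shifted = np.roll(image, shift=(dy, dx), axis=(0, 1))
            score = ncc(shifted, base)
            if score > best_score:
                best_score, best_offset = score, (dx, dy)
    return best_offset

# Synthetic check: displace a random image, then recover the correcting shift.
rng = np.random.default_rng(0)
base = rng.random((40, 40))
image = np.roll(base, shift=(-3, 5), axis=(0, 1))  # moved 3 rows up, 5 columns right
print(single_scale_search(image, base))  # (-5, 3)
```

Swapping in SSD only requires minimizing `ssd(shifted, base)` instead of maximizing `ncc(shifted, base)`; the loops stay the same.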

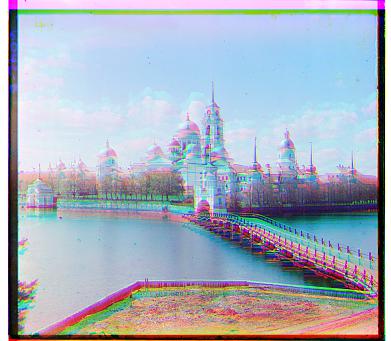

The single-scale alignment process worked well for all the jpg images except monastery.
Upon examining the final image, I saw that much of it was blue, so using the blue channel as the base
was problematic because it skewed my metric. In the comparison, the image on the left, which
uses the blue channel as the base, is misaligned. The image on the right, which uses the green
channel as the base, is successfully aligned.
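
Using green as the base means the green channel stays fixed while red and blue are shifted onto it. A rough sketch of that composition step follows; it assumes the offsets have already been found by the search, and the function name `compose_with_green_base` is hypothetical.

```python
import numpy as np

def compose_with_green_base(red, green, blue, red_offset, blue_offset):
    """Shift red and blue onto the fixed green channel and stack as RGB.

    Offsets are (dx, dy) pairs, i.e. [horizontal offset, vertical offset].
    """
    red_aligned = np.roll(red, shift=(red_offset[1], red_offset[0]), axis=(0, 1))
    blue_aligned = np.roll(blue, shift=(blue_offset[1], blue_offset[0]), axis=(0, 1))
    return np.dstack([red_aligned, green, blue_aligned])

# Synthetic channels: red and blue are displaced copies of green.
green = np.arange(12, dtype=float).reshape(3, 4)
red = np.roll(green, shift=(1, -1), axis=(0, 1))   # 1 row down, 1 column left
blue = np.roll(green, shift=(0, 2), axis=(0, 1))   # 2 columns right
rgb = compose_with_green_base(red, green, blue, red_offset=(1, -1), blue_offset=(-2, 0))
print(np.array_equal(rgb[..., 0], rgb[..., 1]))  # True
```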

Implementing the image pyramid search method took more thought than the single-scale search.
After making sure that all the jpg images worked with the metric I had chosen, I wrote a recursive
function that effectively forms a pyramid of images at different scales to speed up the search.
At each layer, the function calls itself, passing in both the image and the base image scaled to
half size. When the image it receives is less than 250px wide, the function performs a single-scale
search at that layer in a [-15, 15] window. When the layer above receives this offset, it multiplies
it by 2 (because of the scaling factor), shifts the image accordingly, searches in a [-1, 1] window,
and returns the optimal offset at the current layer.
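
The recursion described above might look something like the sketch below. It is written under several assumptions: the helper names are hypothetical, downscaling uses plain 2x2 block averaging as a stand-in for whatever resize routine the project used, and the refinement searches a [-1, 1] window centered on the doubled offset, which is equivalent to shifting first and then searching around zero.

```python
import numpy as np

def ncc(a, b):
    """Zero-mean normalized cross-correlation: higher means more similar."""
    a = a - a.mean()
    b = b - b.mean()
    return np.sum(a * b) / (np.linalg.norm(a) * np.linalg.norm(b))

def search(image, base, center, radius):
    """Exhaustive NCC search over (dx, dy) in a square window around `center`."""
    best_score, best_offset = -np.inf, center
    for dx in range(center[0] - radius, center[0] + radius + 1):
        for dy in range(center[1] - radius, center[1] + radius + 1):
            score = ncc(np.roll(image, shift=(dy, dx), axis=(0, 1)), base)
            if score > best_score:
                best_score, best_offset = score, (dx, dy)
    return best_offset

def halve(img):
    """Downscale by 2 using 2x2 block averaging (assumed resize method)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2]) / 4.0

def pyramid_align(image, base, min_width=250):
    """Return the (dx, dy) shift of `image` that aligns it to `base`."""
    if image.shape[1] < min_width:
        # Coarsest layer: full single-scale search in a [-15, 15] window.
        return search(image, base, center=(0, 0), radius=15)
    coarse = pyramid_align(halve(image), halve(base), min_width)
    doubled = (2 * coarse[0], 2 * coarse[1])  # undo the factor-of-2 scaling
    # Refine in a [-1, 1] window around the doubled coarse estimate.
    return search(image, base, center=doubled, radius=1)

# Synthetic check with a small threshold so the recursion runs on a tiny image.
rng = np.random.default_rng(1)
base = rng.random((128, 128))
image = np.roll(base, shift=(8, -12), axis=(0, 1))  # 8 rows down, 12 columns left
print(pyramid_align(image, base, min_width=40))  # (12, -8)
```

The payoff is that only the coarsest layer pays for the full 31 x 31 window; every larger layer evaluates just nine candidate offsets.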

With the pyramid search, I was initially unable to get most of the larger images to align, even
after checking my metrics and function for correctness. Finally, after a hint from Piazza,
I realized that the dark borders of the images were throwing off the alignment process because
the NCC metric rates overlapping dark regions highly. I then cropped the tif files by 200px on each
side and was able to output all the images correctly. I went back and added cropping (10% from each
side) for the smaller jpg images. For some images there was no change, but for others, like the
cathedral image below, it improved the final color image.
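
Both cropping variants amount to simple array slicing. The sketch below is illustrative only: the function names are hypothetical, and it assumes the crop is applied to each channel before the alignment metric is computed.

```python
import numpy as np

def crop_fraction(img, fraction=0.10):
    """Trim `fraction` of the height and of the width from each side."""
    dy = int(img.shape[0] * fraction)
    dx = int(img.shape[1] * fraction)
    return img[dy:img.shape[0] - dy, dx:img.shape[1] - dx]

def crop_pixels(img, pixels=200):
    """Trim a fixed number of pixels from each side."""
    return img[pixels:img.shape[0] - pixels, pixels:img.shape[1] - pixels]

small = np.zeros((100, 80))
large = np.zeros((1000, 900))
print(crop_fraction(small).shape)  # (80, 64)
print(crop_pixels(large).shape)    # (600, 500)
```

Removing the borders keeps the dark plate edges out of the score, so the metric is driven by actual image content.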

**Note: offsets are in the format [horizontal offset, vertical offset]**

cathedral.jpg |

monastery.jpg | ||

settlers.jpg |

nativity.jpg | ||

emir |

harvesters | ||

|

icon |

lady | ||

self_portrait |

three_generations | ||

train |

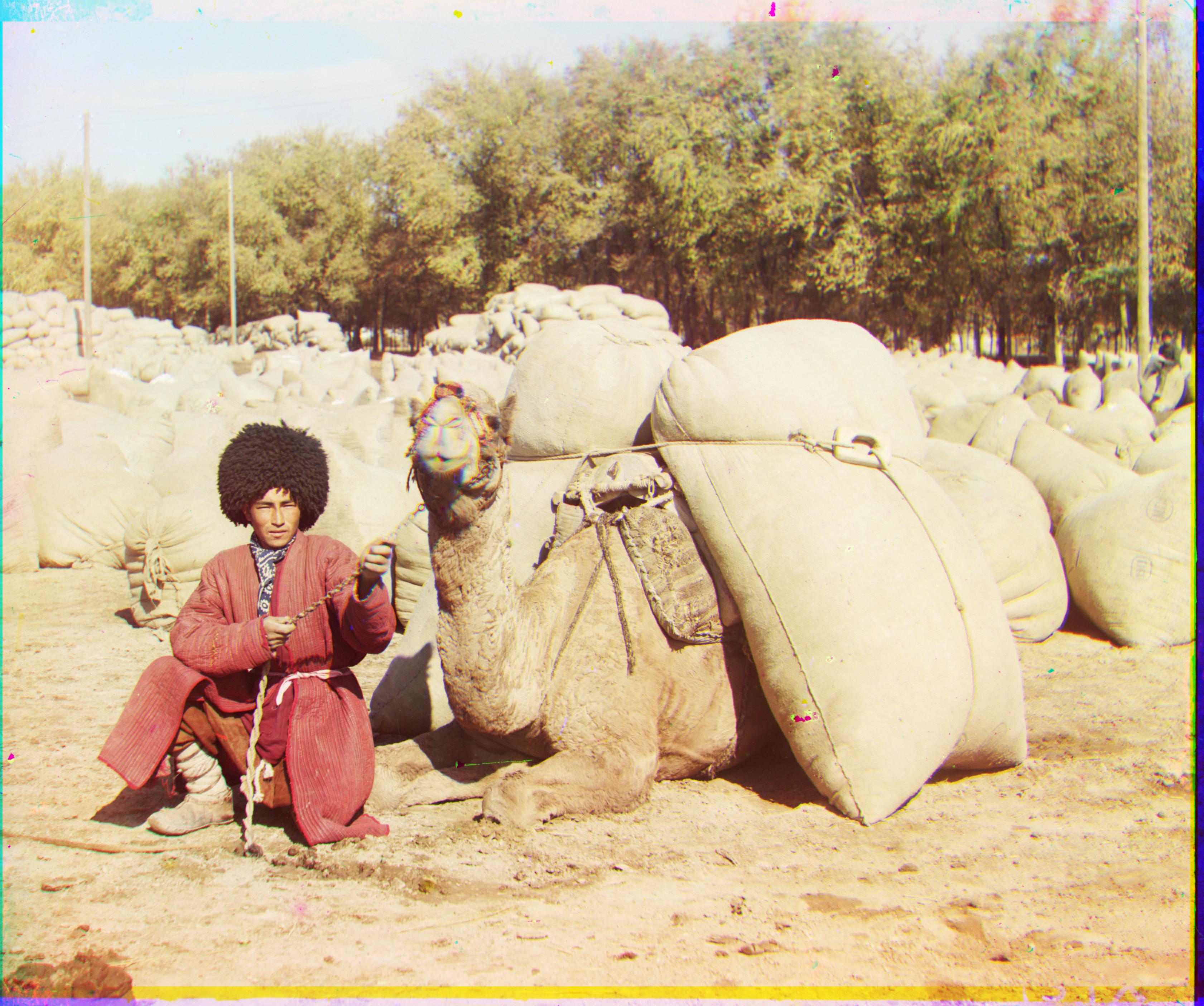

turkmen | ||

village |

flowers (chosen example) | ||

boy (chosen example) |

boat (chosen example) |