CS194-26 Proj 1: Images of the Russian Empire

Brian Aronowitz: 3032201719

First pass

I started by implementing basic SSD (sum of squared differences) and normalized cross-correlation (NCC) matching between the JPG images: the G and B channels are each compared against the R channel over an exhaustive [-15, 15] pixel window of shifts. The R channel is never shifted.

I added one optimization: the comparison only looks at the center third of the image, in an effort to ignore the black borders of the images.
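The first-pass search described above could be sketched roughly as follows. This is a minimal illustration, not my exact code: the function names (`center_third`, `ssd`, `neg_ncc`, `align_exhaustive`) are hypothetical, the channels are assumed to be 2D float arrays of equal size, shifts are applied with `np.roll` (which wraps around at the borders), and the returned offset is assumed to be ordered (x, y).

```python
import numpy as np

def center_third(img):
    """Return the central third of a 2D image in both dimensions."""
    h, w = img.shape
    return img[h // 3 : 2 * h // 3, w // 3 : 2 * w // 3]

def ssd(a, b):
    """Sum of squared differences: lower means a better match."""
    return np.sum((a - b) ** 2)

def neg_ncc(a, b):
    """Negated normalized cross-correlation, so lower is still better."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return -np.sum(a * b) / denom if denom > 0 else 0.0

def align_exhaustive(moving, reference, radius=15, metric=ssd):
    """Try every shift in [-radius, radius]^2 and return the best (dx, dy)."""
    ref_crop = center_third(reference)
    best, best_score = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # np.roll wraps pixels around the border (an assumption of this sketch)
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = metric(center_third(shifted), ref_crop)
            if score < best_score:
                best_score, best = score, (dx, dy)
    return best
```

With the three channels loaded as `r`, `g`, `b`, this would be called as `align_exhaustive(g, r)` and `align_exhaustive(b, r)`, since the R channel stays fixed.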

Below are some results from this implementation.

Cathedral: G: (-1.0,-7.0) B: (-3.0,-12.0)

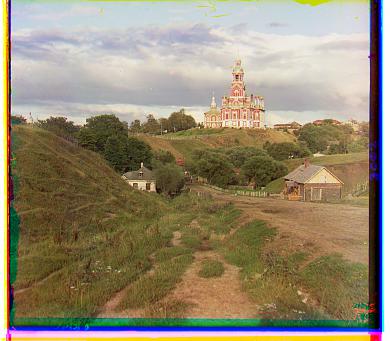

Monastery: G: (-1.0,-6.0) B: (-2.0,-3.0)

Nativity: G: (1.0,-5.0) B: (0.0,-8.0)

Settlers: G: (1.0,-8.0) B: (1.0,-15.0)

Second pass

Unfortunately, the naive search, even with the center-third optimization, produces terrible-looking alignments for the larger images. These images need to be shifted relatively far to reach a good alignment, and covering such large shifts with the exhaustive search would be extremely computationally inefficient.

I implemented an image pyramid to deal with this. It is dynamically sized: the images are repeatedly reduced by a constant factor until they reach a 300-pixel threshold that I set. On average, about seven levels are created for the larger images. At each level of the pyramid, the alignment routine passes its computed best offset to the next (finer) level. That offset is applied to the images there, and then the offset for that level is computed. The offsets are computed iteratively in this way and accumulated into a running sum, which is applied to the original images at the end.
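A coarse-to-fine search of this kind could be sketched as below. This is a simplified illustration under several assumptions, not my exact implementation: the reduction factor is fixed at 2 (the write-up does not state the factor), downsampling is plain 2x2 block averaging, the refinement radius of 2 pixels per level is arbitrary, shifts use `np.roll` (which wraps at the borders), and the names `best_shift`, `downsample`, and `pyramid_align` are hypothetical.

```python
import numpy as np

def best_shift(moving, reference, radius):
    """Exhaustive SSD search over the central third; returns (dx, dy)."""
    h, w = reference.shape
    rows, cols = slice(h // 3, 2 * h // 3), slice(w // 3, 2 * w // 3)
    best, best_score = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = np.sum((shifted[rows, cols] - reference[rows, cols]) ** 2)
            if score < best_score:
                best_score, best = score, (dx, dy)
    return best

def downsample(img):
    """Halve the resolution by averaging 2x2 blocks (assumed factor of 2)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def pyramid_align(moving, reference, min_size=300, radius=15, refine=2):
    """Coarse-to-fine alignment; returns the total (dx, dy) at full resolution."""
    # Base case: the image is small enough for a full exhaustive search.
    if min(reference.shape) <= min_size:
        return best_shift(moving, reference, radius)
    # Solve the problem at the coarser level first.
    cdx, cdy = pyramid_align(downsample(moving), downsample(reference),
                             min_size, radius, refine)
    # A shift of one pixel at the coarse level is two pixels at this level.
    dx, dy = 2 * cdx, 2 * cdy
    pre_shifted = np.roll(moving, (dy, dx), axis=(0, 1))
    # Only a small correction is needed after applying the coarse estimate.
    rdx, rdy = best_shift(pre_shifted, reference, refine)
    return dx + rdx, dy + rdy
```

The point of the design is that the expensive wide search only ever runs on the smallest images, while each finer level searches just a few pixels around the estimate it inherits.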

Below are some results of this image pyramid optimization.

Harvesters: G: (8.0,-351.0) B: (-78.0,-676.0)

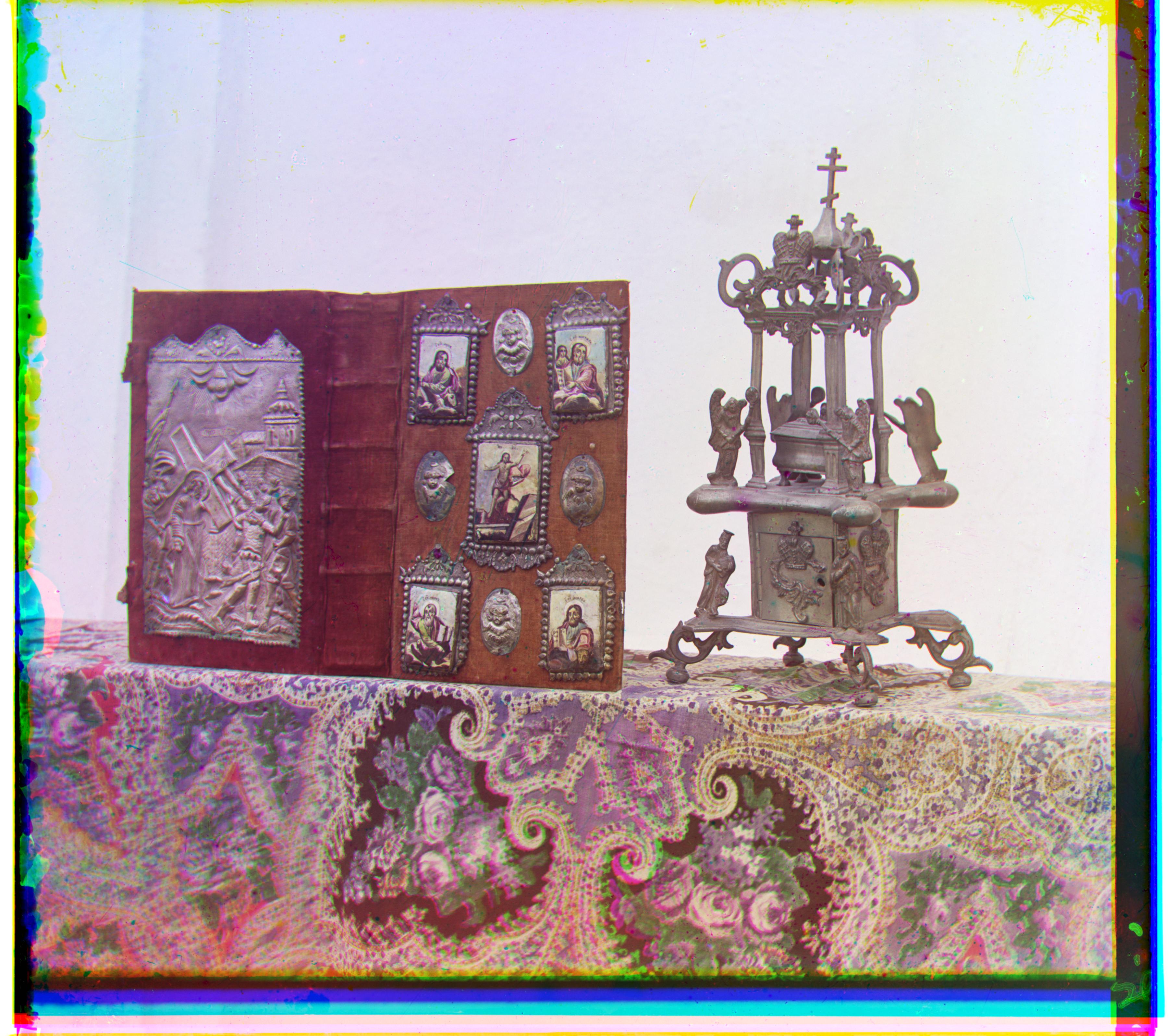

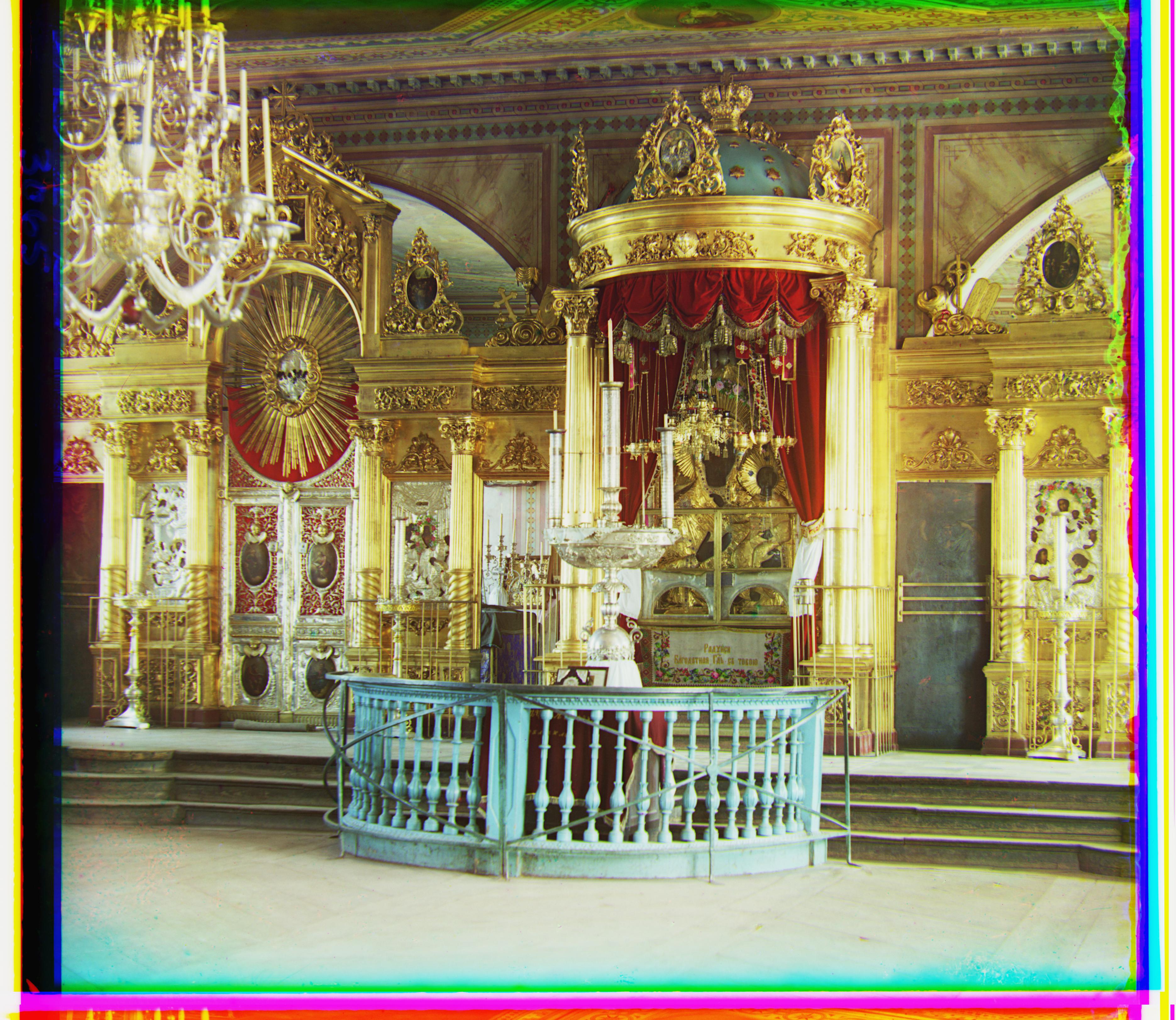

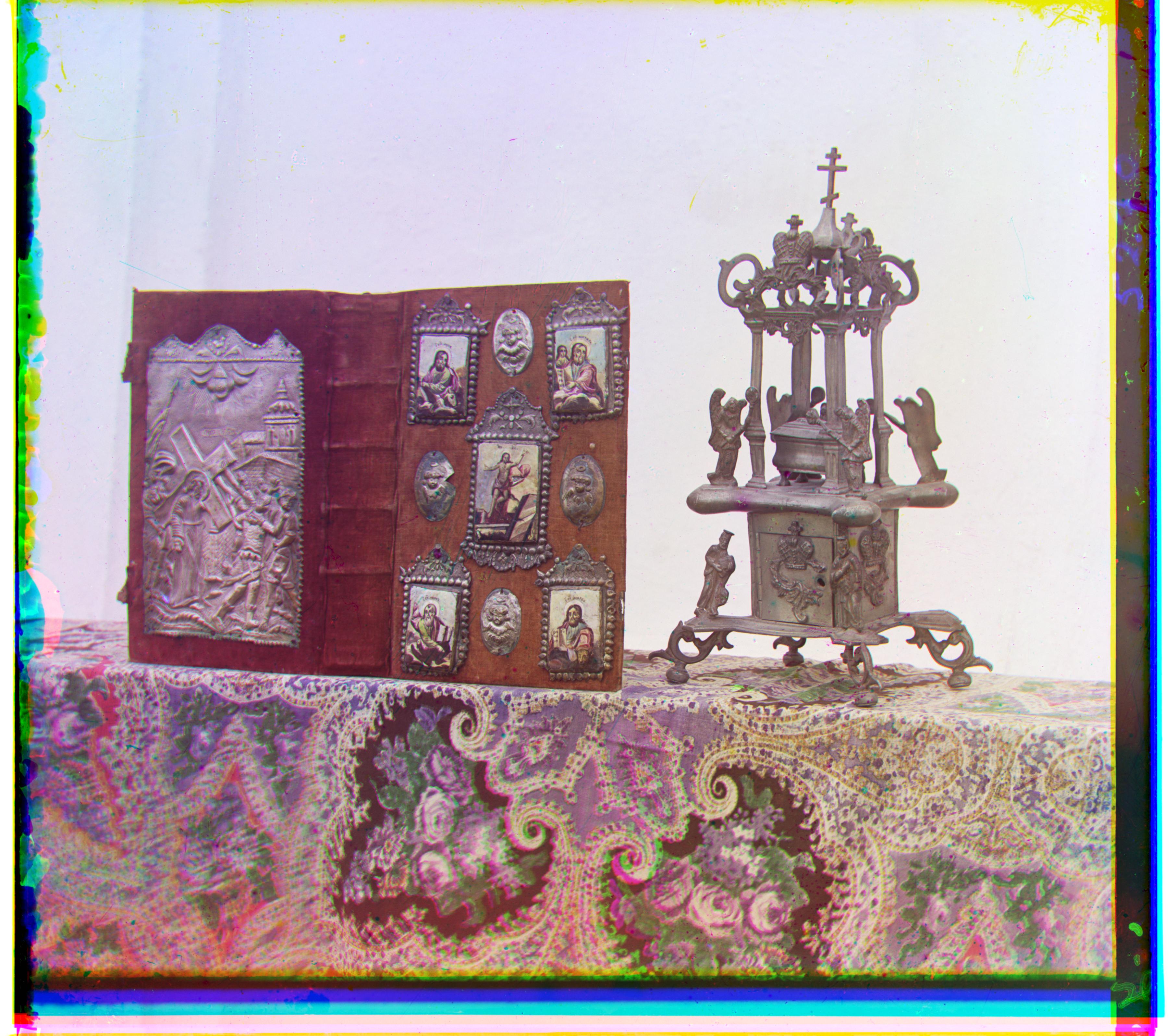

Icon: G: (-31.0,-273.0) B: (-122.0,-495.0)

Self Portrait: G: (-49.0,-544.0) B: (-155.0,-825.0)

Lady: G: (-11.0,-330.0) B: (-55.0,-614.0)

Three generations: G: (10.0,-328.0) B: (-56.0,-604.0)

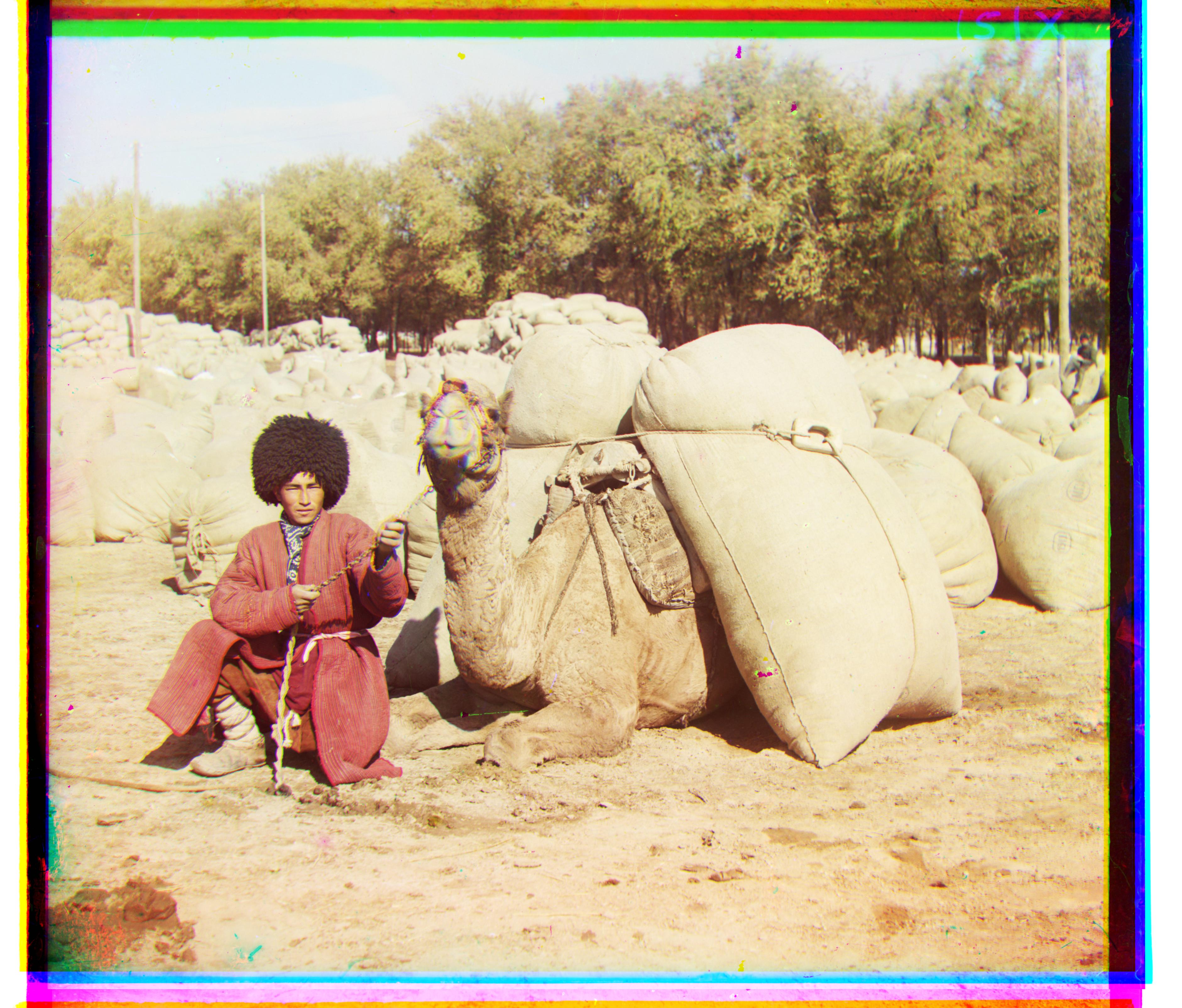

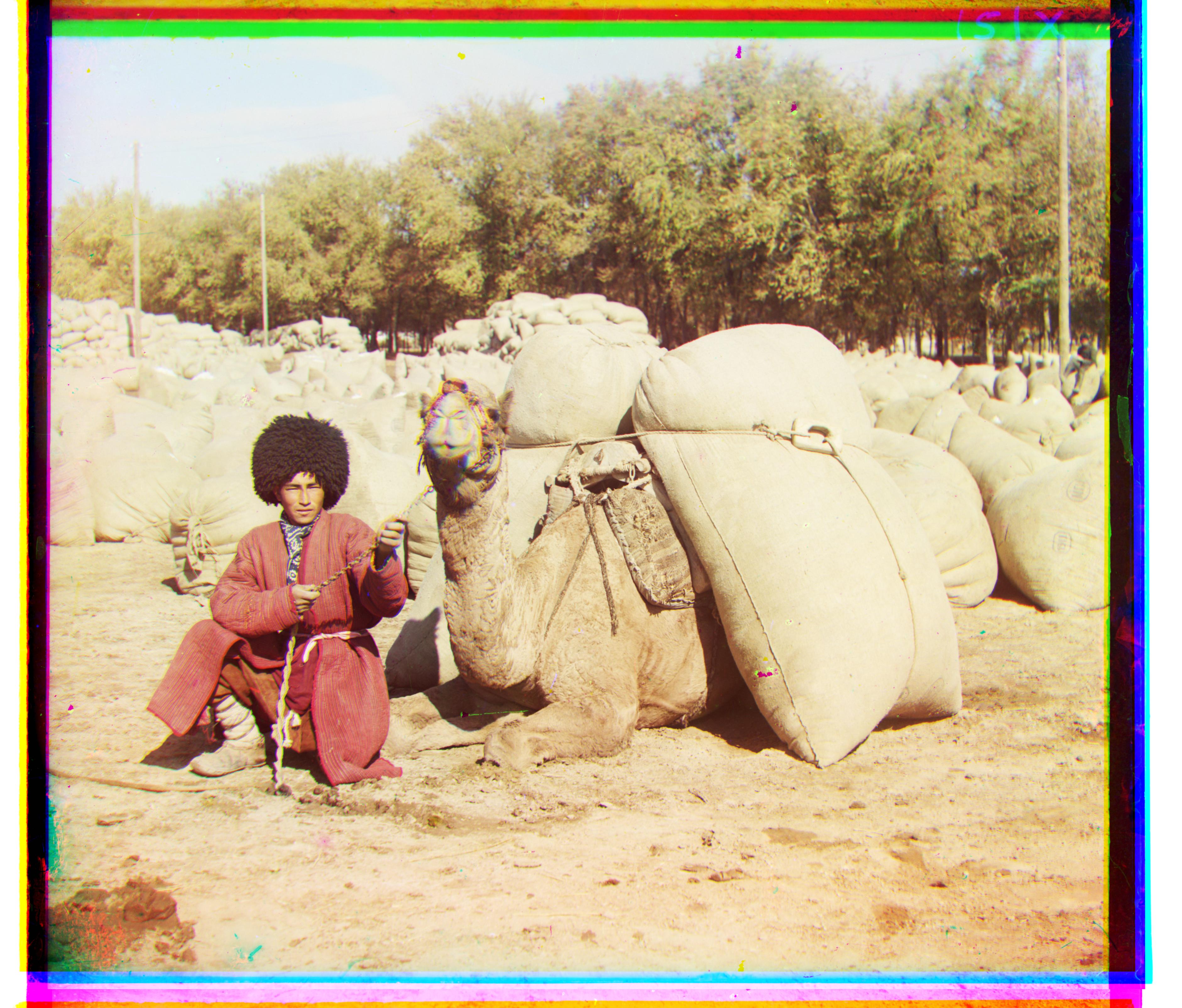

Turkmen: G: (-39.0,-330.0) B: (-161.0,-644.0)

Train: G: (-145.0,-232.0) B: (-174.0,-473.0)

Village: G: (-55.0,-393.0) B: (-116.0,-755.0)

As shown below, the algorithm fails in some cases. For Emir, the image pyramid, which uses SSD, simply breaks and gives a horrifyingly ugly result. The dense patterns make the center-searching algorithm freak out and misalign the image.

Emir unaligned: G: (-102.0,-321.0) B: (154.0,770.0)

Bell and whistle 1: Emir alignment solution

To deal with this, I implemented edge detection on the R, G, and B channels, reasoning that pure white pixels surrounded by blackness would allow for more precise alignment of the images.

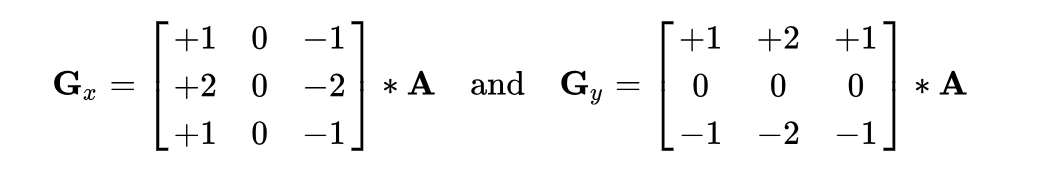

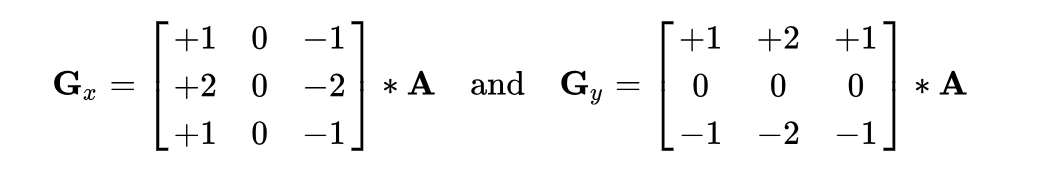

The edge detection algorithm is an implementation of Sobel edge detection. It works by first convolving a 2D, normalized gradient-detection matrix over the whole image to detect gradients in the x direction.

Unnormalized Sobel gradient operators

It then convolves the 90 degree rotated version of the first matrix over the image to detect gradients in the y direction.

The two resulting gradient images are squared and added, and the square root of the sum is taken. The resulting image (which holds the image gradient information) is multiplied against the original image to extract its edges.
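The steps above can be sketched as follows. This is an illustrative version rather than my exact code: it uses the standard unnormalized 3x3 Sobel kernel, applies it as a correlation (no kernel flip, which only changes the sign of the gradient and not its magnitude), pads the borders by edge replication, and assumes a 2D float image. The names `filter3x3`, `gradient_magnitude`, and `sobel_edges` are hypothetical.

```python
import numpy as np

# Standard unnormalized Sobel kernel for gradients in the x direction.
SOBEL_X = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])
# Rotating the kernel by 90 degrees gives the y-direction operator.
SOBEL_Y = np.rot90(SOBEL_X)

def filter3x3(img, kernel):
    """Slide a 3x3 kernel over a 2D image (edge-replicated borders)."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    out = np.zeros((h, w), dtype=float)
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * padded[i : i + h, j : j + w]
    return out

def gradient_magnitude(img):
    """Square the x and y gradients, add them, and take the square root."""
    gx = filter3x3(img, SOBEL_X)
    gy = filter3x3(img, SOBEL_Y)
    return np.sqrt(gx ** 2 + gy ** 2)

def sobel_edges(img):
    """Weight the original image by its gradient magnitude, as described above."""
    return gradient_magnitude(img) * img
```

The edge images for each channel would then be fed to the alignment routine in place of the raw channels.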

Monastery, extracted edges [enhanced in photoshop]

Settlers extracted edges [enhanced in photoshop]

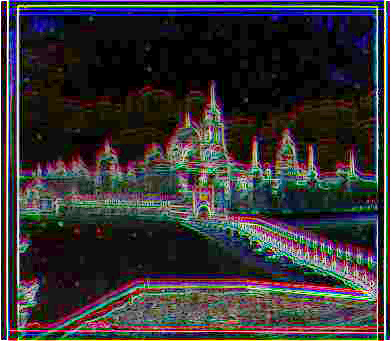

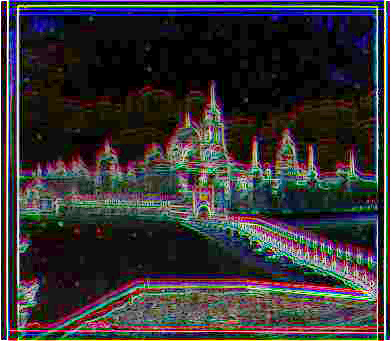

Emir extracted edges [enhanced in photoshop]

Emir aligned: G: (-55.0,-398.0) B: (-116.0,-758.0)

The end

Urn: G: (-47.0,-148.0) B: (-112.0,-203.0)

Glass: G: (-9.0,-218.0) B: (-106.0,-329.0)

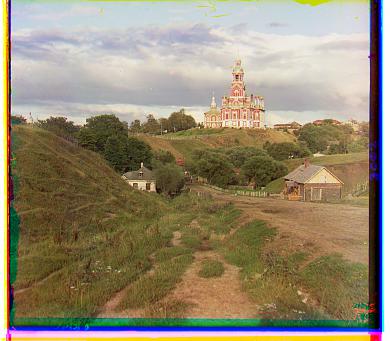

Temple: G: (46.0,-329.0) B: (20.0,-601.0)

River: G: (-45.0,-291.0) B: (-110.0,-513.0)
