Overview

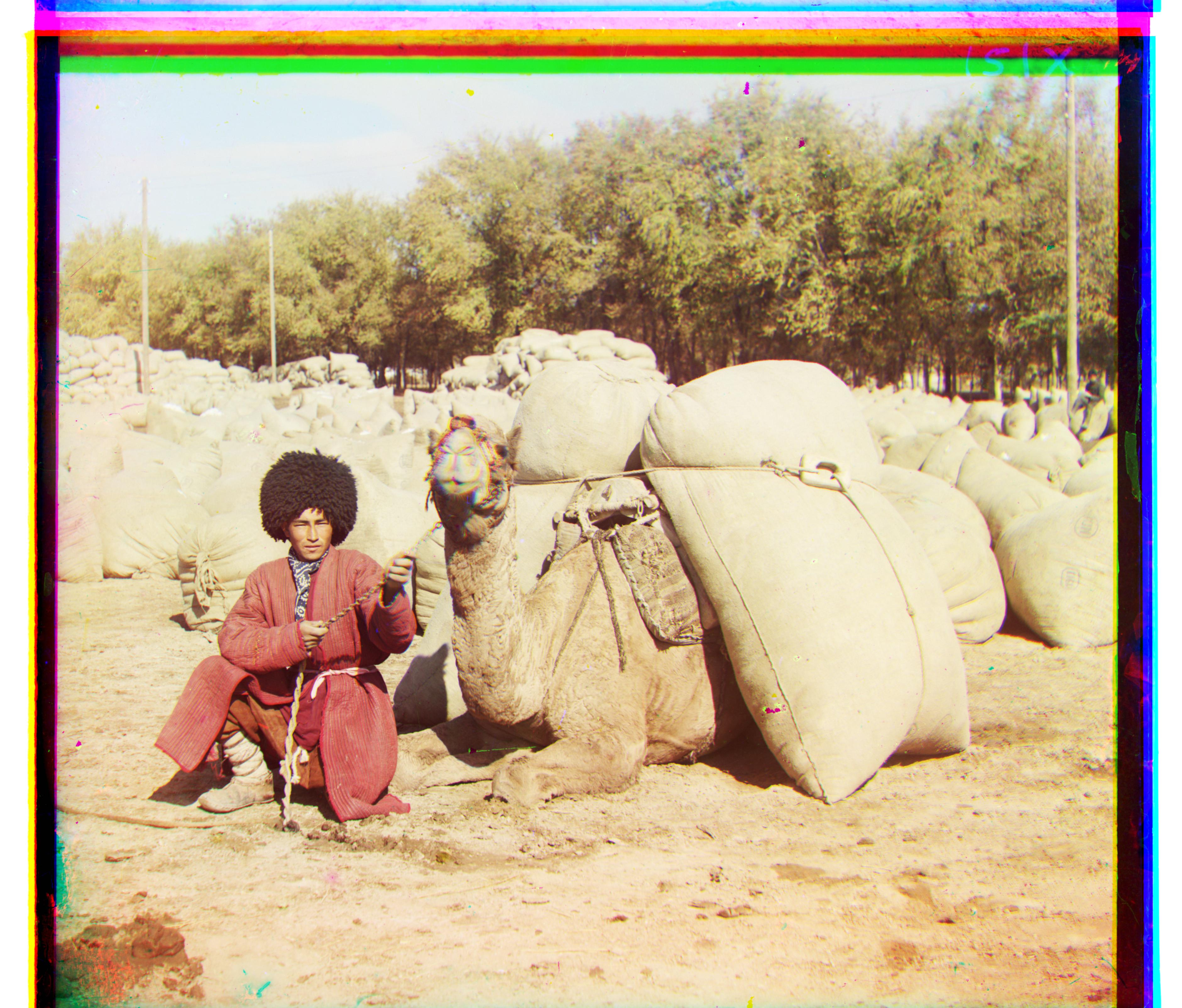

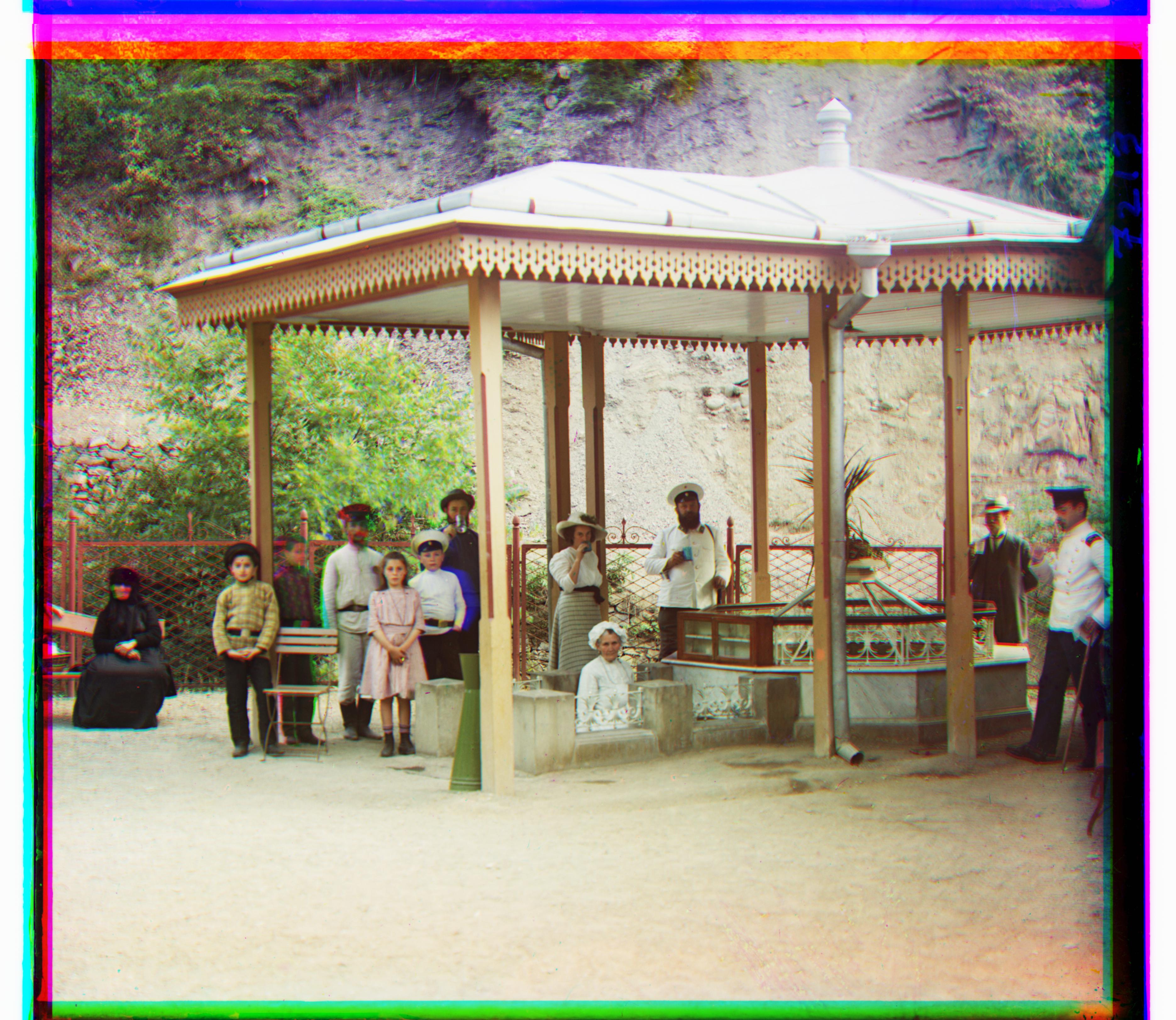

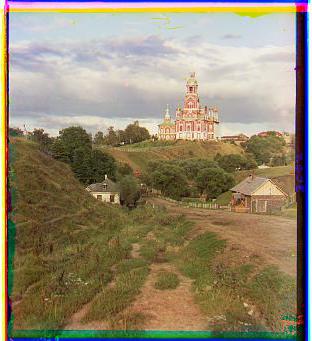

In this project, we worked with images from the Prokudin-Gorskii collection. Each photograph comes as a set of three black-and-white images, where the brightness of each image gives the value of the red, green, or blue channel at that pixel. Unfortunately, the three images in each set are slightly misaligned. The goal of the project is to write an algorithm that finds the correct alignment between the channels in order to reconstruct the original color image.

Section I: Results

Part 1: Given Images

Smaller Images: Approach

To align the images, we first used a naive exhaustive search over x and y displacements in the range [-15, 15]. We aligned the red channel to the blue channel, and then the green channel to the blue channel. At each displacement, we compared the two channels with one of two similarity metrics and kept the displacement with the best score.

The two similarity metrics we used were the Sum of Squared Differences (SSD), which is the sum of the squared differences between corresponding pixel values (lower is better), and the Normalized Cross-Correlation (NCC), which is the dot product of the two images after each has been normalized (higher is better).
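
As a rough illustration, both metrics fit in a few lines of NumPy. This is a sketch, not the code used for the results in this writeup; the names `ssd` and `ncc` are our own, and the NCC below uses the common variant that subtracts each image's mean before normalizing, which is an assumption.

```python
import numpy as np


def ssd(a, b):
    """Sum of squared differences; 0 for identical images, lower is better."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.sum((a - b) ** 2))


def ncc(a, b):
    """Normalized cross-correlation; 1.0 for a perfect match, higher is better.

    Each image is flattened, its mean is subtracted (an assumption; some
    versions skip this step), and it is scaled to unit length before the
    dot product is taken.
    """
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    a = a - a.mean()
    b = b - b.mean()
    return float(np.dot(a / np.linalg.norm(a), b / np.linalg.norm(b)))
```

Subtracting the mean and normalizing makes NCC unaffected by a uniform change in brightness or contrast between two channels, which can help because the same object may appear lighter or darker in each channel. SSD has no such invariance.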

For the smaller images, this search was enough to align them fully, and it took only a few seconds.
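
A minimal sketch of the exhaustive search, assuming the channels are equally sized 2D NumPy arrays. The hypothetical `align_exhaustive` helper shifts the channel with `np.roll` (which wraps pixels around the border), scores each shift with SSD, and keeps the lowest.

```python
import numpy as np


def align_exhaustive(channel, reference, radius=15):
    """Return the (dy, dx) shift of `channel` that best matches `reference`.

    Every shift in [-radius, radius] x [-radius, radius] is tried and scored
    with the sum of squared differences (lower is better). np.roll wraps
    pixels around the border; cropping the borders before scoring would
    make the comparison more robust on real scans.
    """
    channel = np.asarray(channel, dtype=float)
    reference = np.asarray(reference, dtype=float)
    best_score, best_shift = np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = np.sum((shifted - reference) ** 2)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

For example, with hypothetical arrays `red` and `blue`, `align_exhaustive(red, blue)` returns the shift to apply to `red` with `np.roll` so that it lines up with `blue`. With a radius of 15 this scores 31 × 31 = 961 candidate shifts, which is cheap for small images.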

Here are the results for the smaller images we were given.

Larger Images: Approach

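For the larger images, an exhaustive search would take too long: the displacements span many more pixels, so the search window has to grow, and each comparison is also more expensive. Therefore, we used an image pyramid to calculate the offsets.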

The image pyramid works recursively. It starts with a small, low-resolution version of the image and aligns that, then uses that alignment as a baseline and searches for small adjustments at the next scale up, repeating until it reaches the full image resolution. We found that a scale factor of 2 between levels worked best, that the range [-15, 15] still worked well for searching the lowest-resolution level, and that searching for adjustments within a range of about twice the scale factor at each finer level was enough to align the images.
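
The recursion can be sketched as follows, assuming a factor-of-2 pyramid built by averaging 2 × 2 blocks, a full [-15, 15] search at the coarsest level, and a ±4 pixel refinement (about twice the scale factor) at each finer level. The names `align_pyramid`, `search`, `downsample`, and the `min_size` cutoff for the coarsest level are hypothetical, and scoring uses SSD for brevity.

```python
import numpy as np


def ssd(a, b):
    """Sum of squared differences (lower is better)."""
    return float(np.sum((a - b) ** 2))


def search(channel, reference, center, radius):
    """Exhaustively score every shift within `radius` of `center` with SSD."""
    best_score, best_shift = np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            score = ssd(np.roll(channel, (dy, dx), axis=(0, 1)), reference)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def downsample(img):
    """Halve the resolution by averaging 2x2 blocks (an odd last row/column is dropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])


def align_pyramid(channel, reference, min_size=64, coarse_radius=15, fine_radius=4):
    """Coarse-to-fine alignment with a factor-of-2 image pyramid; returns (dy, dx)."""
    channel = np.asarray(channel, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if min(channel.shape) <= min_size:
        # Coarsest level: full exhaustive search over [-coarse_radius, coarse_radius].
        return search(channel, reference, (0, 0), coarse_radius)
    # Align the half-resolution images first, then double that estimate
    # and refine it with a small search at this resolution.
    dy, dx = align_pyramid(downsample(channel), downsample(reference),
                           min_size, coarse_radius, fine_radius)
    return search(channel, reference, (2 * dy, 2 * dx), fine_radius)
```

Because each coarser estimate is doubled before refinement, the cost is one full search on the smallest image plus a fixed 9 × 9 window per finer level, instead of a window that grows with the image size.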

Here are the results for the larger images we were given, computed with the image pyramid described above. These took longer to run, but still finished in well under 30 seconds.

Here are the results for some extra images, using the same image pyramid process.

Section II: Bells and Whistles

Intensity Rescale

We tried rescaling the intensity of the images to increase contrast. To do this, we found the 10th and 90th percentile values in the image, then linearly scaled the whole image so that those values became the lowest and highest values, clipping anything outside that range. This increases the contrast of the middle range of intensities, at the cost of clipping anything that is too light or too dark.
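
A sketch of this percentile stretch in NumPy, assuming a floating-point image and an output range of [0, 1]. The function name `stretch_percentiles` and its parameters are our own labels.

```python
import numpy as np


def stretch_percentiles(img, low_pct=10, high_pct=90):
    """Linearly map the low/high percentiles to 0 and 1 and clip everything else."""
    img = np.asarray(img, dtype=float)
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi <= lo:
        # Nearly flat image: there is no range to stretch.
        return np.zeros_like(img)
    return np.clip((img - lo) / (hi - lo), 0.0, 1.0)
```

One design choice is whether to compute the percentiles over the whole color image, which preserves the balance between channels, or separately per channel, which stretches each channel fully but can shift the colors.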

CLAHE (Contrast Limited Adaptive Histogram Equalization)

We also tried Contrast Limited Adaptive Histogram Equalization (CLAHE) to change the contrast of the images, using OpenCV's histogram equalization functions. Histogram equalization increases contrast by spreading out the histogram of pixel values so that it covers the full intensity range, effectively "equalizing" the histogram of the image's pixel values. CLAHE does this separately on small tiles of the image and clips each tile's histogram to limit how much contrast (and noise) is amplified. This is useful for images whose pixel values are all relatively similar, such as the vault image below.

We used this tutorial for help: https://docs.opencv.org/3.1.0/d5/daf/tutorial_py_histogram_equalization.html
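
In OpenCV, CLAHE is created with `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` and then applied to an 8-bit single-channel image with `.apply()`. To show the idea underneath, here is a sketch of plain global histogram equalization in NumPy, which maps each intensity through the normalized cumulative histogram. CLAHE performs this mapping per tile, with a clipped histogram and interpolation between tiles, which this sketch does not do. The function name `equalize_histogram` is hypothetical.

```python
import numpy as np


def equalize_histogram(img):
    """Global histogram equalization for an 8-bit grayscale image.

    Each intensity is mapped through the normalized cumulative histogram
    (CDF), which spreads the occupied intensities across 0..255.
    """
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]  # CDF value at the darkest occupied intensity
    total = cdf[-1]
    if total == cdf_min:
        # Only one intensity is present: nothing to equalize.
        return img.copy()
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)  # lookup table: old -> new intensity
    return lut[img]
```

On a faded image whose values sit in a narrow band, this stretches the band to cover the full range, which is the effect seen on the vault image. Applying it globally to an image that already has bright and dark regions can oversaturate, which is one reason CLAHE adds the clip limit and works locally.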

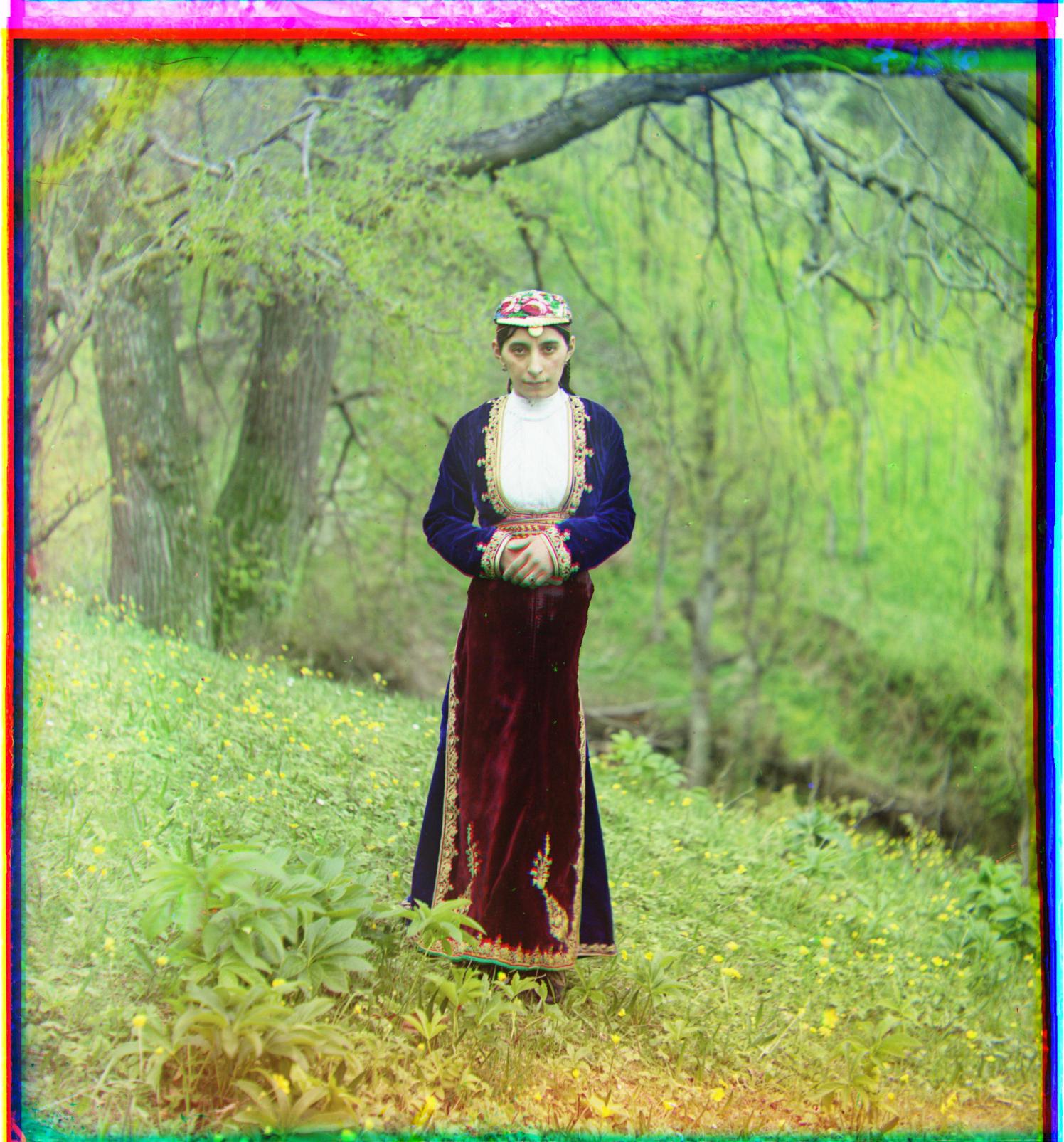

This method worked especially well for the vault image, which makes sense: the image is faded, and the contrast increase brings out the paintings on the walls. However, it oversaturates the emir image, and I have to add that the increased contrast makes the lady look a bit creepy.

Rotation As A Metric

We implemented rotation in much the same way as the original image pyramid, adding small rotation changes as a third search dimension alongside the x and y displacements.

We ran into the problem that all the images we had were already well aligned at 0 rotation, so we could not test our algorithm. For example, the lady image aligns to exactly the same shift with or without the rotation search.

Therefore, we intentionally rotated the green channel of the lady image by 15 degrees and used that to test the algorithm. The recovered alignment is not perfect, but it is fairly accurate given how large the rotation is. The third value in the output shift is the angle.
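
A sketch of the joint search at a single resolution, using NumPy only. `rotate_bilinear` is a hypothetical helper that rotates about the image center with bilinear sampling (a library routine such as `scipy.ndimage.rotate` could be used instead), and the hypothetical `align_with_rotation` tries every angle in a given list together with every (dy, dx) shift in a small window. Scores use NCC on a central crop so that the empty corners created by rotation and the pixels wrapped by `np.roll` are ignored. In a pyramid version, this search would take the place of the plain shift search at each level.

```python
import numpy as np


def rotate_bilinear(img, angle_deg):
    """Rotate a 2D image about its center with bilinear sampling.

    Output pixels whose source location falls outside the image are set to 0.
    """
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = np.deg2rad(angle_deg)
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    oy, ox = ys - cy, xs - cx
    # Inverse mapping: find where each output pixel comes from in the source.
    sy = -np.sin(t) * ox + np.cos(t) * oy + cy
    sx = np.cos(t) * ox + np.sin(t) * oy + cx
    y0, x0 = np.floor(sy).astype(int), np.floor(sx).astype(int)
    fy, fx = sy - y0, sx - x0
    valid = (y0 >= 0) & (y0 < h - 1) & (x0 >= 0) & (x0 < w - 1)
    y0, x0 = np.clip(y0, 0, h - 2), np.clip(x0, 0, w - 2)
    out = ((1 - fy) * (1 - fx) * img[y0, x0] + (1 - fy) * fx * img[y0, x0 + 1]
           + fy * (1 - fx) * img[y0 + 1, x0] + fy * fx * img[y0 + 1, x0 + 1])
    return np.where(valid, out, 0.0)


def ncc(a, b):
    """Mean-subtracted normalized cross-correlation (higher is better)."""
    a = a.ravel() - a.mean()
    b = b.ravel() - b.mean()
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def align_with_rotation(channel, reference, angles, radius=4, crop=0.2):
    """Search jointly over angle and shift; return (dy, dx, angle).

    The channel is rotated by `angle` degrees, then shifted by (dy, dx)
    with np.roll. Only the central region (a `crop` fraction is trimmed
    from each side) is scored.
    """
    channel = np.asarray(channel, dtype=float)
    reference = np.asarray(reference, dtype=float)
    h, w = reference.shape
    my, mx = int(h * crop), int(w * crop)
    inner = (slice(my, h - my), slice(mx, w - mx))
    best_score, best = -np.inf, (0, 0, 0.0)
    for angle in angles:
        rotated = rotate_bilinear(channel, angle)
        for dy in range(-radius, radius + 1):
            for dx in range(-radius, radius + 1):
                cand = np.roll(rotated, (dy, dx), axis=(0, 1))
                score = ncc(cand[inner], reference[inner])
                if score > best_score:
                    best_score, best = score, (dy, dx, float(angle))
    return best
```

Each extra angle multiplies the number of candidates, so a coarse list of angles at low resolution, refined at finer levels, keeps this affordable.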

Seam Carving

We also applied seam carving, also known as content-aware image resizing, to remove the least important parts of the images. Seam carving finds a connected path (a seam) from the top of the image to the bottom, taking one pixel from each row, with the lowest total "energy." A pixel's energy is low when it differs little from the pixels around it, as measured by horizontal and vertical convolutional filters. The lowest-energy seam is found with dynamic programming over a 2D array of cumulative energies; that seam is then removed from the image, and the process repeats.

We used this tutorial for help: https://karthikkaranth.me/blog/implementing-seam-carving-with-python/
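
A compact sketch of vertical seam removal in NumPy, assuming a grayscale or RGB float image. As a stand-in for the horizontal and vertical filters, the energy here is the sum of absolute central differences computed with `np.gradient`; the filters used for the results above may differ. All function names are hypothetical.

```python
import numpy as np


def energy(gray):
    """Energy map: absolute vertical plus horizontal central differences."""
    return np.abs(np.gradient(gray, axis=0)) + np.abs(np.gradient(gray, axis=1))


def find_vertical_seam(energy_map):
    """Dynamic programming: cumulative minimum energy, then backtrack."""
    h, w = energy_map.shape
    cost = energy_map.astype(float).copy()
    back = np.zeros((h, w), dtype=int)  # best column in the row above
    for i in range(1, h):
        for j in range(w):
            lo, hi = max(j - 1, 0), min(j + 2, w)
            k = lo + int(np.argmin(cost[i - 1, lo:hi]))
            back[i, j] = k
            cost[i, j] += cost[i - 1, k]
    seam = np.zeros(h, dtype=int)
    seam[-1] = int(np.argmin(cost[-1]))
    for i in range(h - 1, 0, -1):
        seam[i - 1] = back[i, seam[i]]
    return seam


def remove_vertical_seam(img, seam):
    """Delete one pixel per row (the seam), keeping any color axis."""
    h, w = img.shape[:2]
    keep = np.ones((h, w), dtype=bool)
    keep[np.arange(h), seam] = False
    return img[keep].reshape(h, w - 1, *img.shape[2:])


def carve_columns(img, n):
    """Narrow the image by n pixels, recomputing the energy after each removal."""
    img = np.asarray(img, dtype=float)
    for _ in range(n):
        gray = img.mean(axis=2) if img.ndim == 3 else img
        img = remove_vertical_seam(img, find_vertical_seam(energy(gray)))
    return img
```

For example, `carve_columns(img, 50)` would narrow an image by 50 pixels. Recomputing the energy after every removal keeps each seam choice based on the current image, but the Python loop in `find_vertical_seam` makes this slow on large images.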
