Overview

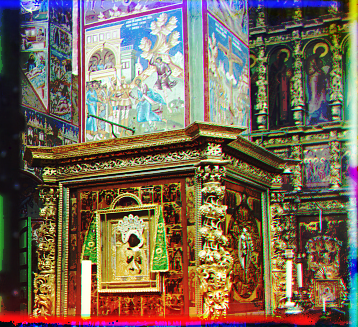

In this project, we take black and white images from the digitized Prokudin-Gorskii collection. Each image is the result of photographing the same scene three times: once with a red filter, once with a green filter, and once with a blue filter. From these three channels we can reconstruct a full color image.

Process

First, we divide the black and white plate into three equal parts, each representing a single color channel. We then crop 25% off the edges of each channel so that the borders do not dominate the alignment. Next, we use a signal matching metric (in this case, normalized cross-correlation, or NCC) to align the channels. We want the optimal alignment: the shift within a [-15, 15] pixel window where the NCC is highest.
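
A minimal sketch of this single-scale search in Python with NumPy follows. It assumes the plate is a 2D array with the channels stacked blue, green, red from top to bottom, reads "25% off the edges" as trimming that fraction from each side, and uses the zero-mean form of NCC. The function names (`split_plate`, `crop_fraction`, `ncc`, `align`, `colorize`) are hypothetical, not taken from the project code.

```python
import numpy as np


def split_plate(plate):
    """Split a vertically stacked plate into three equal-height channels.

    Assumption: the top-to-bottom order is blue, green, red.
    """
    h = plate.shape[0] // 3
    return plate[:h], plate[h:2 * h], plate[2 * h:3 * h]


def crop_fraction(img, frac=0.25):
    """Trim `frac` of the height and width from every edge (assumed reading of '25%')."""
    h, w = img.shape[:2]
    dh, dw = int(h * frac), int(w * frac)
    return img[dh:h - dh, dw:w - dw]


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equally sized arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def align(moving, reference, radius=15, frac=0.25):
    """Try every shift in [-radius, radius]^2 and return the (dy, dx) with the highest NCC."""
    ref = crop_fraction(reference, frac)
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            # Score only the interior, so borders (and wrapped-around pixels,
            # as long as the crop margin exceeds the shift) are ignored.
            score = ncc(crop_fraction(shifted, frac), ref)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def colorize(plate, radius=15):
    """Align green and red to blue, then stack the channels into an RGB image."""
    b, g, r = split_plate(plate.astype(np.float64))
    g_shift = align(g, b, radius)
    r_shift = align(r, b, radius)
    g = np.roll(g, g_shift, axis=(0, 1))
    r = np.roll(r, r_shift, axis=(0, 1))
    return np.dstack([r, g, b]), g_shift, r_shift
```

Blue is used as the fixed reference here only for illustration; aligning to green instead would work the same way.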

For images where a [-15, 15] window is not a large enough search space, and enlarging the window would be computationally unreasonable, we implement an image pyramid. We downscale the image to a small size, search a [-15, 15] window at that level, then use the best-scoring shift (scaled to the next level's resolution) as the starting point for the next, larger level.
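
The coarse-to-fine idea can be sketched as follows, again assuming NumPy. Halving the resolution at each level by averaging 2x2 blocks, the stopping size `min_size=64`, and all function names are illustrative assumptions; the write-up does not specify how the image is downscaled.

```python
import numpy as np


def crop_fraction(img, frac=0.25):
    """Trim `frac` of the height and width from every edge."""
    h, w = img.shape[:2]
    dh, dw = int(h * frac), int(w * frac)
    return img[dh:h - dh, dw:w - dw]


def ncc(a, b):
    """Zero-mean normalized cross-correlation."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def align(moving, reference, radius=15, center=(0, 0), frac=0.25):
    """Search a [-radius, radius] window around `center`; return the best (dy, dx)."""
    ref = crop_fraction(reference, frac)
    best_score, best_shift = -np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = ncc(crop_fraction(shifted, frac), ref)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def downscale2(img):
    """Halve the resolution by averaging 2x2 blocks (an assumed downscaling choice)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def pyramid_align(moving, reference, radius=15, min_size=64):
    """Coarse-to-fine alignment: solve on a half-size copy, then refine around the doubled shift."""
    if min(reference.shape) <= min_size:
        # Coarsest level: plain window search around zero.
        return align(moving, reference, radius)
    coarse_dy, coarse_dx = pyramid_align(downscale2(moving), downscale2(reference), radius, min_size)
    # A one-pixel shift at the coarse level is a two-pixel shift at this level.
    return align(moving, reference, radius, center=(2 * coarse_dy, 2 * coarse_dx))
```

Doubling the coarse result before using it as the center of the next [-15, 15] search is what lets a fixed-size window recover shifts much larger than 15 pixels.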

Results

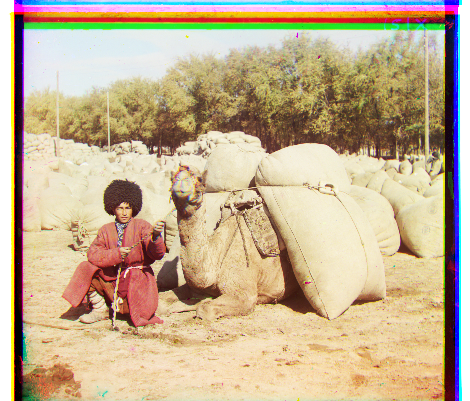

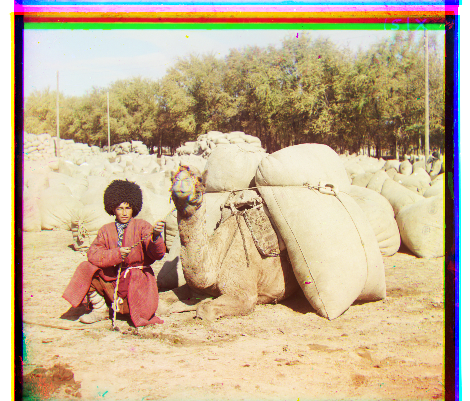

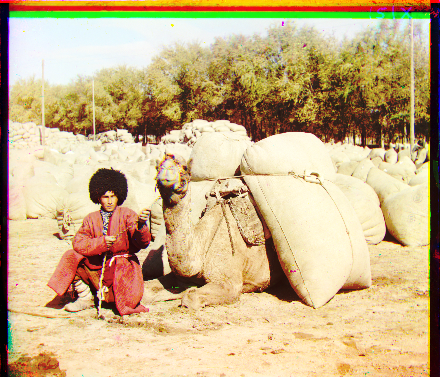

The following images were created with an image pyramid and alignment by normalized cross-correlation on the raw black and white plates.

Bells and Whistles: Aligning using Different Features (Edge Detection)

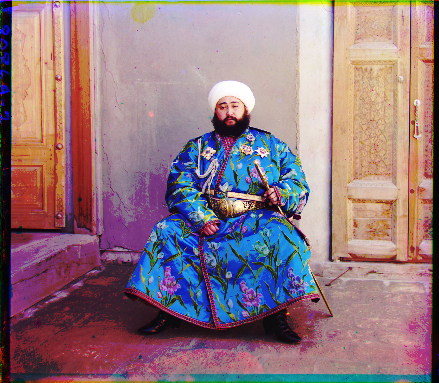

Some images have one strongly dominant color among red, green, and blue. Consider, for example, the plates for 'emir'. Our naive algorithm has difficulty aligning them because the pixel values differ so much between channels. However, if we align using the shapes in the image rather than the raw pixel values, we may achieve better results. Below are the images aligned by first applying the Sobel filter to each channel (transforming it into an 'edge image') and then running NCC. At this compressed resolution, the benefit is noticeable mainly on the Emir image.
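
A sketch of edge-based alignment, assuming NumPy: the Sobel gradient magnitude is computed with hand-written 3x3 kernels (scikit-image's `skimage.filters.sobel` is a library alternative), and the same exhaustive NCC search is then run on the edge images. The function names are hypothetical.

```python
import numpy as np


def sobel_magnitude(img):
    """Gradient magnitude from the 3x3 Sobel kernels, with edge-replicated padding."""
    p = np.pad(np.asarray(img, dtype=np.float64), 1, mode="edge")
    # Horizontal derivative: right column minus left column, rows weighted 1-2-1.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical derivative: bottom row minus top row, columns weighted 1-2-1.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)


def crop_fraction(img, frac=0.25):
    """Trim `frac` of the height and width from every edge."""
    h, w = img.shape[:2]
    dh, dw = int(h * frac), int(w * frac)
    return img[dh:h - dh, dw:w - dw]


def ncc(a, b):
    """Zero-mean normalized cross-correlation."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def align(moving, reference, radius=15, frac=0.25):
    """Exhaustive [-radius, radius] search maximizing NCC."""
    ref = crop_fraction(reference, frac)
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = ncc(crop_fraction(shifted, frac), ref)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def edge_align(moving, reference, radius=15):
    """Align on Sobel edge images instead of raw pixel values."""
    return align(sobel_magnitude(moving), sobel_magnitude(reference), radius)
```

Because each Sobel kernel sums to zero, inverting a channel's brightness leaves its gradient magnitude unchanged, so the edge images can still agree even when the raw pixel values are anti-correlated.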

Bells and Whistles: Automatic Contrasting and Border Cropping

We can also apply some naive automatic contrast adjustment to the pixel values of our images. The approach was very simple: pixel values under 100 were multiplied by 0.6, and those over 100 were multiplied by 1.05. This has the effect of making "the darks darker, and the lights lighter". In general, this makes the images look sharper and crisper in both color and form, especially images with large black areas (such as Train). However, Lady is a clear failure case: the cloudy pattern in its background yields many artifacts. This could be improved by using the CDF-based histogram equalization approach discussed in lecture, or by tuning the parameters of our simple algorithm.
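
The contrast rule is a piecewise scaling of pixel values. A minimal NumPy sketch follows, assuming 8-bit channels in the 0-255 range (so that the threshold of 100 makes sense), leaving values exactly equal to 100 unchanged, and clipping the result back to [0, 255]; `naive_contrast` and its parameter names are hypothetical.

```python
import numpy as np


def naive_contrast(img, threshold=100, dark_gain=0.6, light_gain=1.05):
    """Darken values below `threshold` and brighten values above it.

    Assumes an 8-bit image (any shape); values equal to the threshold are kept.
    """
    x = np.asarray(img, dtype=np.float64)
    out = x.copy()
    out[x < threshold] *= dark_gain   # "the darks darker"
    out[x > threshold] *= light_gain  # "the lights lighter"
    # Round and clip so brightened values cannot overflow 255.
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

Since the rule is applied element-wise, the same call works on a single channel or on a full (H, W, 3) color image.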

We can also attempt naive automatic border cropping to clean up the edges of our image. We trim rows and columns at the edges of the image whose mean value is close to white or close to black. Beyond the plain white border of the image, this did not work as well as hoped: the black border has many rough edges, which hindered this approach. Another idea would be to look for rows or columns in which most pixels have the same or nearly the same value. For example, if over 80% of the pixels in a row fall within a range of 5 of one another, we might assume that row is part of the border and crop it. Our approach failed most visibly on Settlers, which has a large white area at the bottom that was cropped off as a "border".
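
A minimal sketch of this mean-based border trimming, assuming a float image scaled to [0, 1] with shape (H, W) or (H, W, C). The "close to black" and "close to white" thresholds (`low=0.1`, `high=0.9`) and the function names are hypothetical values chosen for illustration.

```python
import numpy as np


def is_border(line, low, high):
    """A row/column counts as border if its mean is near black or near white."""
    if line.size == 0:
        return False
    m = line.mean()
    return m <= low or m >= high


def auto_crop(img, low=0.1, high=0.9):
    """Peel off outer rows, then columns, while they look like border."""
    img = np.asarray(img, dtype=np.float64)
    top, bottom = 0, img.shape[0]
    left, right = 0, img.shape[1]
    while top < bottom and is_border(img[top, left:right], low, high):
        top += 1
    while bottom > top and is_border(img[bottom - 1, left:right], low, high):
        bottom -= 1
    while left < right and is_border(img[top:bottom, left], low, high):
        left += 1
    while right > left and is_border(img[top:bottom, right - 1], low, high):
        right -= 1
    return img[top:bottom, left:right]
```

Because it only looks at each row's or column's mean, a genuinely white region of the photo is indistinguishable from a border, which is the failure seen on Settlers.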

The following images were aligned using edge detection, with automatic contrasting and border cropping applied in post-processing. The shifts are the same as those in the edge-detection results above.

Extra Pictures!
