This project involves blending images with Laplacian pyramids and Poisson blending.

A nice picture of a cat, but there is this weird human shape in the background. Let's enhance the cat.

Alg: Subtracted the Gaussian-blurred image from the original image to get a difference image. Result is the original image plus the difference image. The Gaussian blur was done with a standard deviation of 3.
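The sharpening step above can be sketched in a few lines. This is a minimal illustration, not the project's actual code: it assumes a grayscale float image in [0, 1], uses SciPy's `gaussian_filter` for the blur, and the function name `unsharp_mask` and the final clipping are assumptions added here for display purposes.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def unsharp_mask(image, sigma=3.0):
    """Sharpen a 2-D float image by adding back its high-frequency detail."""
    blurred = gaussian_filter(image, sigma=sigma)
    difference = image - blurred  # the difference image (high frequencies only)
    # Clipping is an assumption for display; the write-up does not mention it.
    return np.clip(image + difference, 0.0, 1.0)
```

On a flat image the difference image is zero, so the output equals the input; near an edge, the bright side gets brighter and the dark side gets darker.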

Alg: Applied a Gaussian blur with sigma1 to the first image. This image was then normalized to have mean 0.5 and stddev 0.33. For the second image, obtained a difference image as in the unsharp filter, using a Gaussian blur with sigma2. Result is the mean of the normalized and difference images.
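A rough sketch of this hybrid-image recipe, assuming two aligned grayscale float images of the same shape. The helper names `normalize` and `hybrid_image` are hypothetical, and the output is left unclipped, so it may need clipping before display.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def normalize(image, mean=0.5, std=0.33):
    """Shift and scale an image to the requested mean and standard deviation."""
    spread = image.std()
    if spread == 0:  # a flat image has no contrast to rescale
        return np.full_like(image, mean, dtype=float)
    return (image - image.mean()) / spread * std + mean


def hybrid_image(far_image, near_image, sigma1, sigma2):
    """Low frequencies of far_image averaged with high frequencies of near_image."""
    low = normalize(gaussian_filter(far_image, sigma=sigma1))
    high = near_image - gaussian_filter(near_image, sigma=sigma2)
    return (low + high) / 2.0
```

With the parameters from the first example below, a call would look like `hybrid_image(derek, cat, sigma1=10, sigma2=20)`, where `derek` and `cat` stand for the two loaded images.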

Derek and cat. sigma1=10, sigma2=20.

Happy and sad man. sigma1=7.5, sigma2=7.5.

Putin and a bear. sigma1=4, sigma2=4. Note that the result is suboptimal since Putin and the bear are not well-aligned: Putin is still visible even when viewing from up close. However, increasing sigma1 to further blur Putin makes him very difficult to see from farther away.

Fourier Transform (FT) plots for Derek and cat. First row: Derek FT and cat FT. Second row: Derek FT after filtering, cat FT after filtering, and the hybrid image's FT.

Alg: Subtracted the Gaussian-blurred image from the original image to get a difference image. Repeated this process for the Gaussian-blurred image to get a second difference image, etc. The last Gaussian-blurred image was kept as-is. For visualization purposes, the difference images were normalized to mean 0.5 and stddev 0.5.
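The stack construction can be sketched as follows. The number of levels is not stated in the write-up, so `levels` is an assumed parameter, and `laplacian_stack` is a hypothetical name.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def laplacian_stack(image, sigma, levels):
    """Return `levels` difference images followed by the final blurred image."""
    stack = []
    current = image
    for _ in range(levels):
        blurred = gaussian_filter(current, sigma=sigma)
        stack.append(current - blurred)  # detail removed by this blur
        current = blurred                # repeat on the blurred image
    stack.append(current)                # last Gaussian-blurred image, kept as-is
    return stack
```

Because the differences telescope, summing every entry of the stack gives back the original image, which is a handy sanity check.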

Dali's Lincoln and Gala painting. For the Gaussian blurs, used a filter with sigma=5.0.

Derek and cat hybrid image. For the Gaussian blurs, used a filter with sigma=4.0.

Alg: Created a Laplacian pyramid for both images by resizing the Laplacian stacks created by the above algorithm. Then, for each pixel, determined the coefficient for blending the images based on distance from the mask and using the same feathering width for all scales. The images were then blended at each scale according to these coefficients, and combined to form the full-size result image.
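One possible reading of this procedure is sketched below. Several details are assumptions rather than the project's method: level k is resized by a factor of 2^-k with linear interpolation, the blending coefficient is a linear ramp over the signed distance to the mask edge, and the function names are hypothetical. The mask is a boolean array that is True where the first image should dominate.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt, gaussian_filter, zoom


def laplacian_stack(image, sigma, levels):
    stack, current = [], image
    for _ in range(levels):
        blurred = gaussian_filter(current, sigma=sigma)
        stack.append(current - blurred)
        current = blurred
    return stack + [current]


def resize(image, shape):
    """Linearly resample a 2-D array to the given (rows, cols) shape."""
    factors = (shape[0] / image.shape[0], shape[1] / image.shape[1])
    return zoom(image, factors, order=1, mode="nearest")


def feather_alpha(mask, width):
    """Ramp from 0 to 1 across the mask edge (assumed linear in distance)."""
    inside = distance_transform_edt(mask)    # distance to nearest outside pixel
    outside = distance_transform_edt(~mask)  # distance to nearest inside pixel
    return np.clip(0.5 + (inside - outside) / (2.0 * width), 0.0, 1.0)


def pyramid_blend(image_a, image_b, mask, sigma, levels, feather_width):
    full_shape = image_a.shape
    stack_a = laplacian_stack(image_a, sigma, levels)
    stack_b = laplacian_stack(image_b, sigma, levels)
    result = np.zeros(full_shape)
    for k, (band_a, band_b) in enumerate(zip(stack_a, stack_b)):
        # Level k is stored at 1/2^k of the full resolution.
        shape = (max(2, full_shape[0] >> k), max(2, full_shape[1] >> k))
        small_mask = resize(mask.astype(float), shape) > 0.5
        # Same feathering width (in pixels) at every scale.
        alpha = feather_alpha(small_mask, feather_width)
        blended = alpha * resize(band_a, shape) + (1.0 - alpha) * resize(band_b, shape)
        result += resize(blended, full_shape)  # combine at full size
    return result
```

Since the feathering width is fixed in pixels, it covers a wider region of the full-size image at coarse levels, so low frequencies blend over a broad band while fine detail switches over quickly near the seam.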

Apple and orange. Mask was the left half of the image. Used a feathering width of 2px and a filter with sigma=4.0 for the Laplacian pyramid.

Lion and tiger. Mask was the left half of the image. Used a feathering width of 6px and a filter with sigma=2.0 for the Laplacian pyramid. Note that the lion and tiger could not be completely aligned, resulting in artifacts near the mouth despite the rest of the image being aligned.

Meatball and galaxy. Mask was a circle of radius 80 centered at (y, x) = (145, 125). Used a feathering width of 2px and a filter with sigma=2.0 for the Laplacian pyramid.

Blending at multiple scales of the pyramid for the meatball and galaxy.

When we wish to combine images, it is perceptually beneficial to try to preserve the gradients, rather than the exact pixel values. In Poisson blending, this is achieved by solving a least-squares problem for the new pixel values: the differences between neighboring pixels in the result should match the gradients of the source image, while pixels next to the mask boundary are tied to the values of the target image.

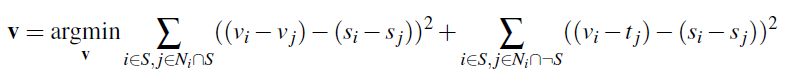

Alg: minimize the above quantity, where s is the ordered set of pixels from the source image, t is the ordered set of pixels from the target image, N_i denotes the 4-neighborhood of pixel i, and v is the ordered set of pixels in the mask for which we are optimizing values.
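A minimal sketch of this least-squares setup for a single channel, with one equation per (pixel, neighbor) pair. It assumes the source and target are already aligned arrays of the same shape and that the mask does not touch the image border. The function name `poisson_blend` and the choice of a sparse matrix with SciPy's `lsqr` solver are assumptions, not necessarily what was used for the results below.

```python
import numpy as np
from scipy.sparse import csr_matrix
from scipy.sparse.linalg import lsqr


def poisson_blend(source, target, mask):
    """Solve for pixels v inside `mask` so that gradients follow `source`."""
    ys, xs = np.nonzero(mask)
    index = np.full(mask.shape, -1)
    index[ys, xs] = np.arange(len(ys))  # position of each mask pixel in v

    rows, cols, vals, b = [], [], [], []
    eq = 0
    for y, x in zip(ys, xs):
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):  # 4-neighborhood N_i
            ny, nx = y + dy, x + dx
            gradient = source[y, x] - source[ny, nx]       # s_i - s_j
            rows.append(eq); cols.append(index[y, x]); vals.append(1.0)
            if mask[ny, nx]:
                # Neighbor is also unknown: v_i - v_j = s_i - s_j
                rows.append(eq); cols.append(index[ny, nx]); vals.append(-1.0)
                b.append(gradient)
            else:
                # Neighbor is fixed to the target: v_i = (s_i - s_j) + t_j
                b.append(gradient + target[ny, nx])
            eq += 1

    A = csr_matrix((vals, (rows, cols)), shape=(eq, len(ys)))
    v = lsqr(A, np.array(b), atol=1e-10, btol=1e-10)[0]

    result = target.astype(float)  # astype returns a copy
    result[ys, xs] = v
    return result
```

For a color image, the same solve would be run once per channel. Each row of `A` has at most two nonzero entries, so a sparse representation keeps memory roughly proportional to the number of mask pixels instead of its square.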

Note: my images (especially the source image masks) are rather small. This is not due to runtime constraints, but rather due to the fact that I have an old computer and I get out-of-memory errors for the NumPy array if I make them any larger.

Sanity check: the toy problem and solution.

A penguin joins hikers on a mountain.

A worm eats an apple, and changes color to match camouflage!

Compare with directly pasting the source region into the target image!

A duck looms on the horizon. Will they collide? Note that the horizon line disappears near the duck, since it was not present in the original image. This could be improved by better masking, but cannot be entirely avoided.

A whale in the sky? This time, it is a texture from the source image that is being introduced to the target image: the bands of sunlight in the ocean do not belong in the sky. Again, this could be improved by better masking.

Meatball and galaxy. Here, the Laplacian pyramid blending (3rd image) outperforms the Poisson blending (4th image), since the gradient constraint causes color artifacts below the meatball. In general, Poisson blending should outperform Laplacian when color preservation is not important and the textures in the source and target images are similar. Conversely, Laplacian outperforms Poisson when textures are dissimilar and the colors are roughly equal between the source and target images at the boundary regions.