Unsharp masking "sharpens" a photo by blurring it with a Gaussian filter and subtracting the blurred copy from the original, which leaves a mask of the edges and fine details. This detail layer is then scaled by a factor alpha and added back to the original image, which enhances the edges and produces the sharpening effect.
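The steps above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the exact code used for these results: the helper names `gaussian_blur` and `unsharp_mask` are hypothetical, the image is assumed to be a 2-D float array with values in [0, 1], and the blur uses a hand-rolled separable kernel truncated at about 3 sigma.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D float image (edge-padded)."""
    radius = max(1, int(3 * sigma))          # truncate the kernel at ~3 sigma
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()                   # normalize so brightness is preserved
    padded = np.pad(img, radius, mode="edge")
    # Filter along rows first, then along columns.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def unsharp_mask(img, sigma=10.0, alpha=0.5):
    """Sharpen by adding back alpha times the detail layer (original - blurred)."""
    detail = img - gaussian_blur(img, sigma)
    # Clip so the result stays a valid image in [0, 1].
    return np.clip(img + alpha * detail, 0.0, 1.0)
```

For a color image, one option is to call `unsharp_mask` on each channel separately and stack the results back together.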

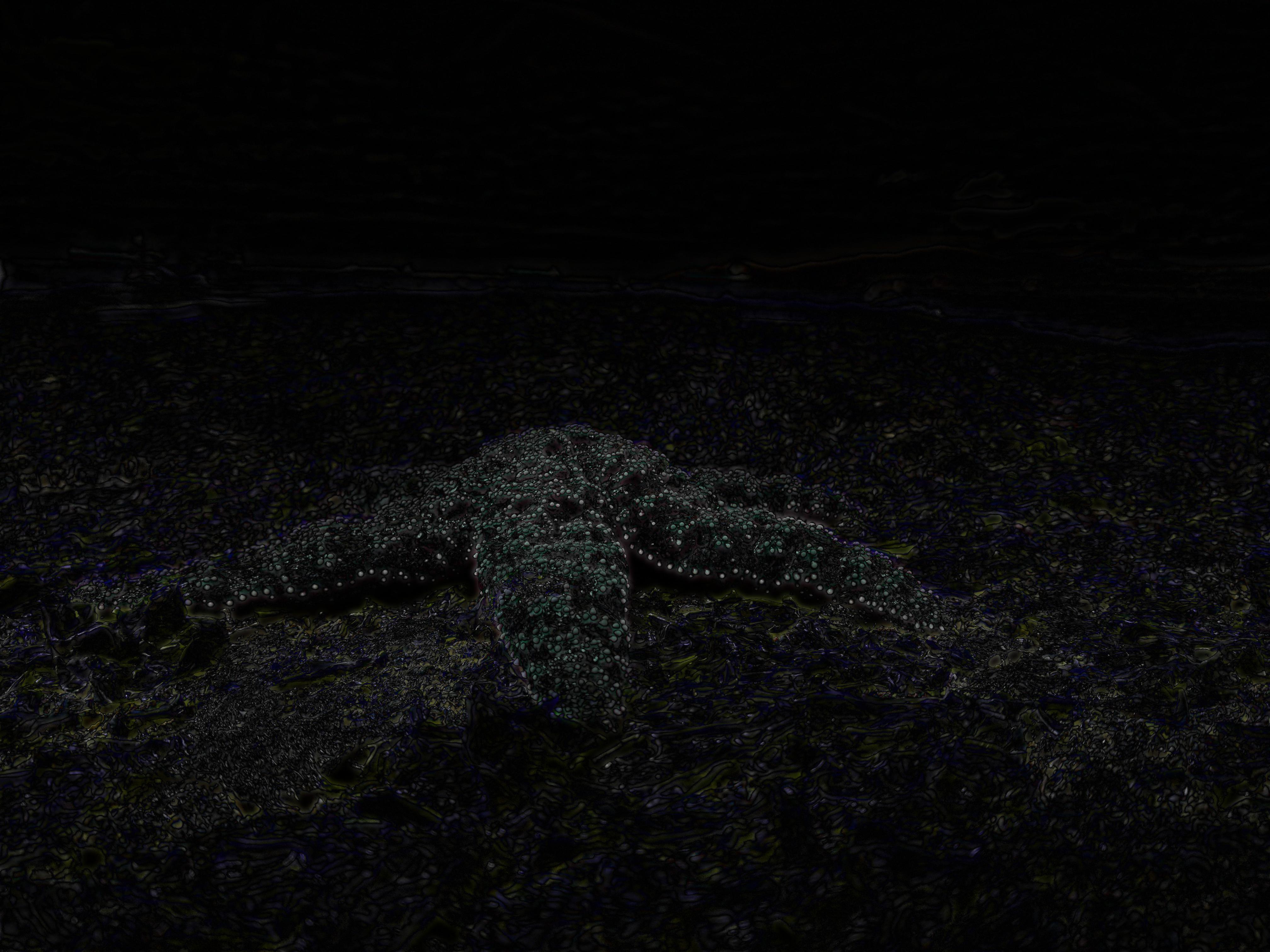

As you can see below, this technique works for both grayscale and color images. In both cases, the edges have been enhanced so that they are easier to distinguish. For all of these images, I used a Gaussian kernel with sigma = 10 and alpha = 0.5.

Hybrid images are images that can be interpreted in two different ways depending on the viewer's distance from the image. This is done by combining the details, or the high frequencies, of one image with a blurred version, or the low frequencies, of another. When up close to the image, the viewer sees the high frequencies more clearly, but when farther away, the details are less visible, with the main focus being on the low frequencies.

To get the high frequencies, I used the same process as in the unsharp mask technique above: I blurred the image and subtracted the blurred copy from the original to get the details. For the low frequencies, I simply used a Gaussian filter to blur the other image. I then added the two together to get the final hybrid image.
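As a rough sketch of this process, the snippet below builds a hybrid from two grayscale images. The names `hybrid_image`, `near_img`, `far_img`, `sigma_high`, and `sigma_low` are hypothetical, and the use of two separate sigma values is an assumption for illustration; both images are assumed to be aligned 2-D float arrays of the same shape.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D float image (edge-padded)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def hybrid_image(near_img, far_img, sigma_high, sigma_low):
    """Combine the details of near_img with a blurred version of far_img."""
    high = near_img - gaussian_blur(near_img, sigma_high)  # details seen up close
    low = gaussian_blur(far_img, sigma_low)                # shapes seen from afar
    return low + high
```

The sum can fall slightly outside [0, 1], so it may need to be clipped or rescaled before it is displayed or saved.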

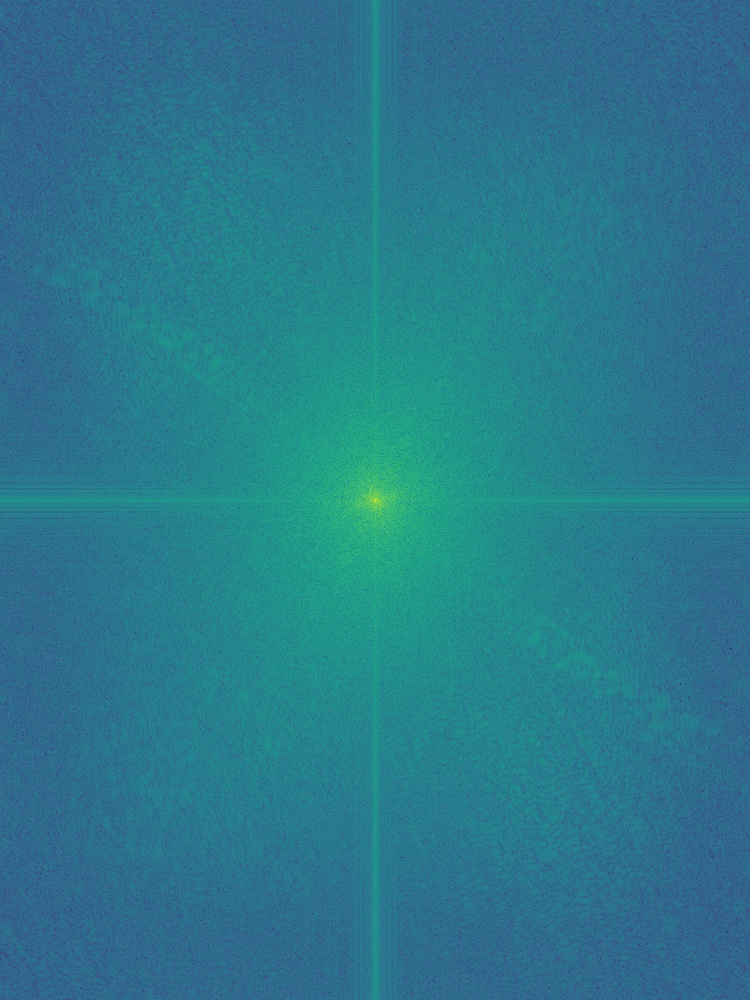

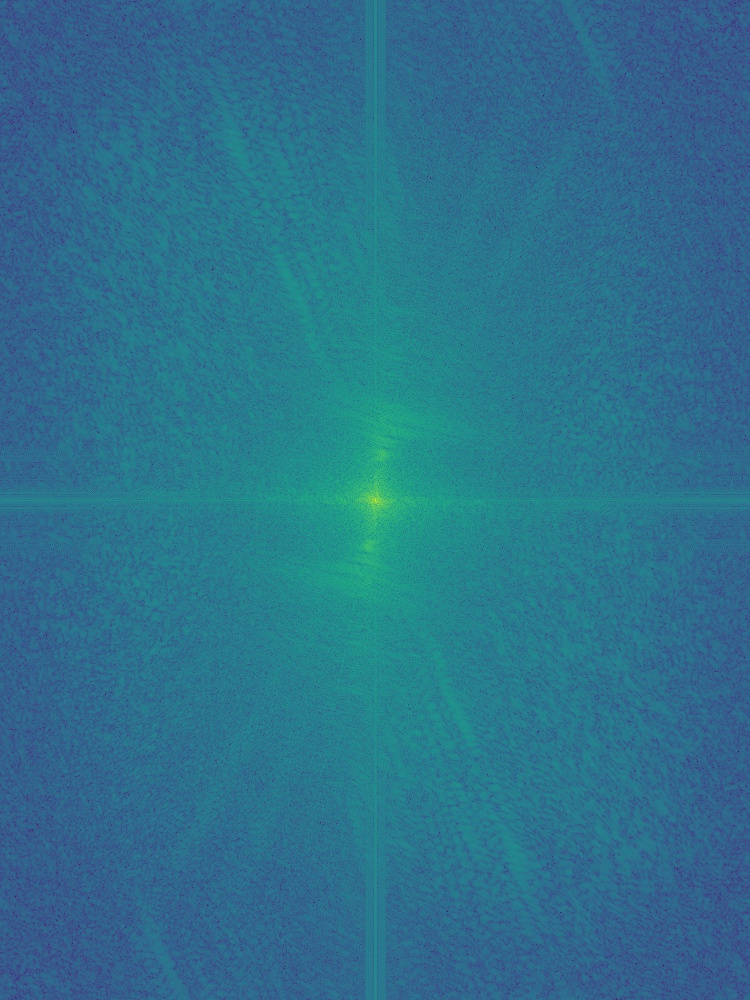

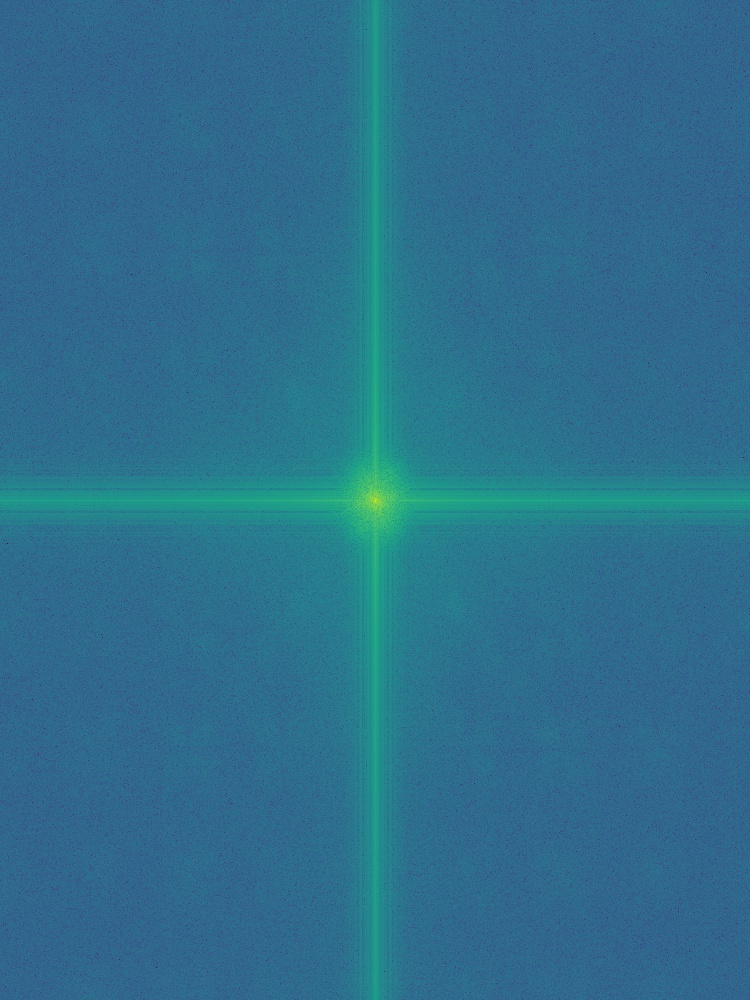

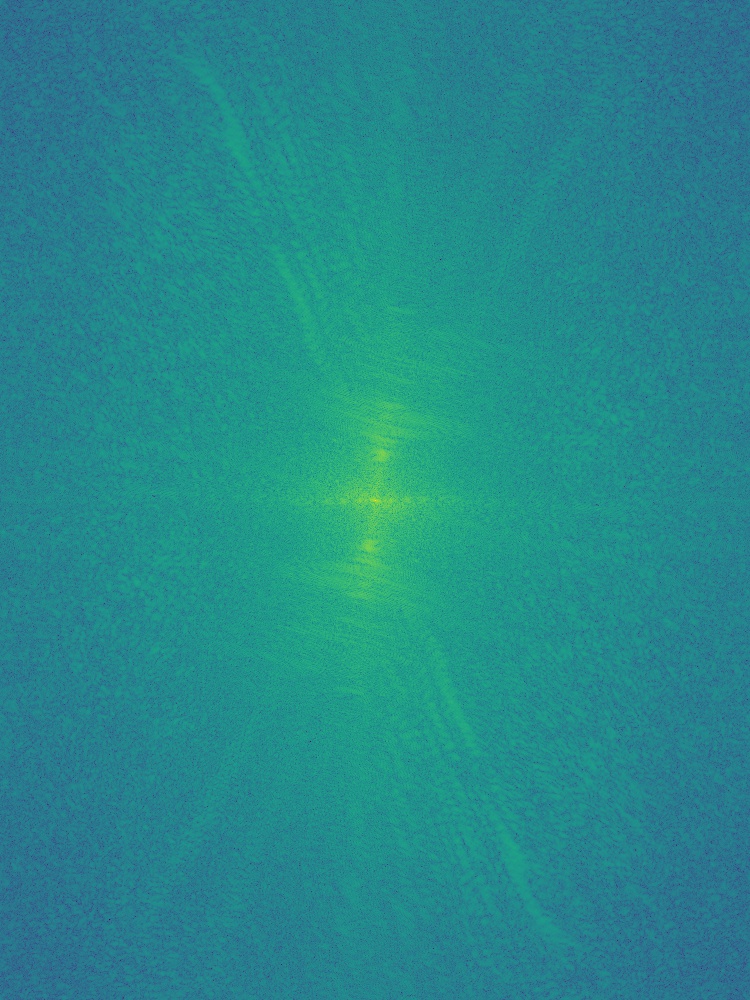

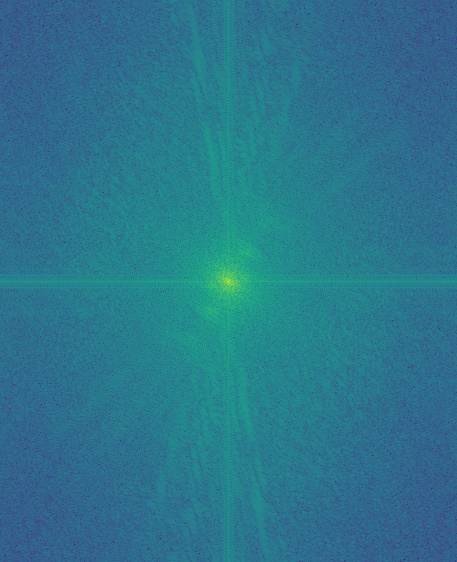

Below I have documented the log magnitude of the Fourier transform of the input images, the filtered images, and the hybrid image for the goat-raccoon hybrid image set.

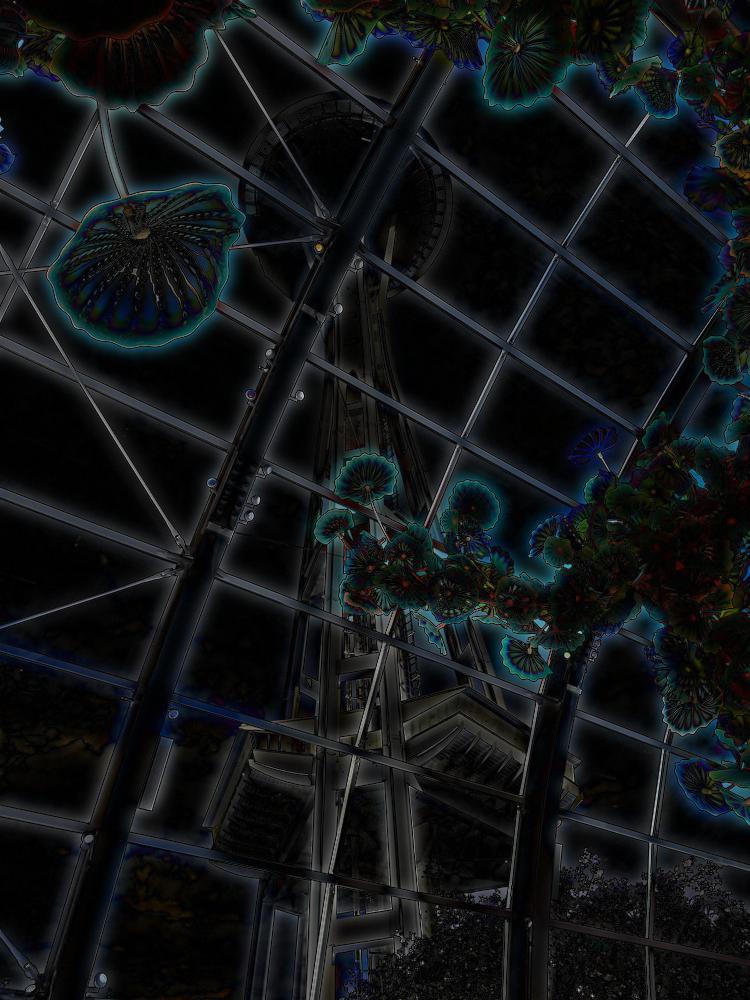

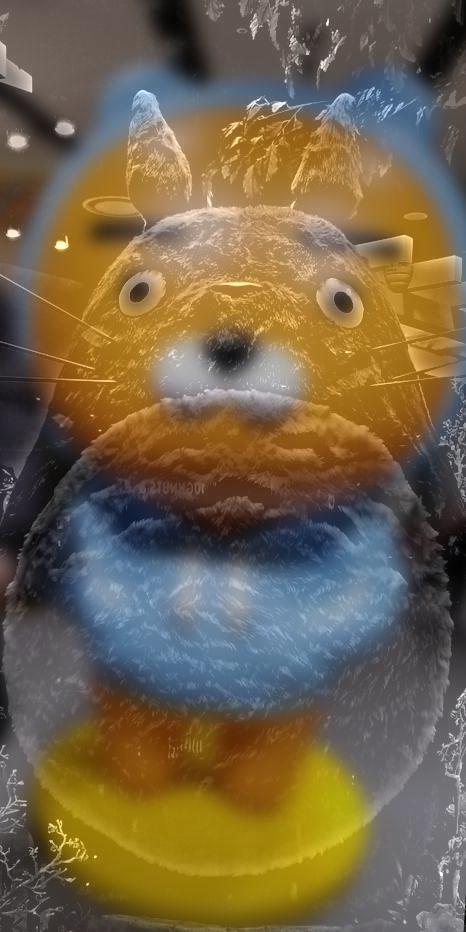

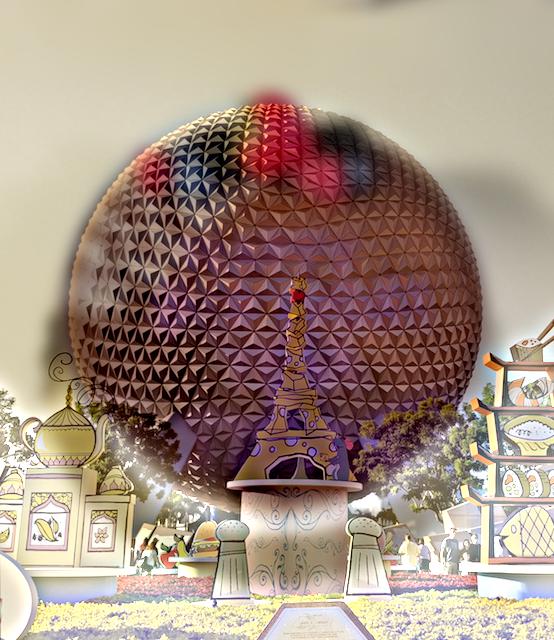
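Spectra like the ones above can be computed with NumPy's FFT routines. This is a small sketch, assuming a 2-D grayscale float image; the function name `log_magnitude_spectrum` and the small epsilon added before the logarithm are my own choices for illustration.

```python
import numpy as np

def log_magnitude_spectrum(img, eps=1e-8):
    """Log magnitude of the 2-D Fourier transform, with the DC term centered."""
    spectrum = np.fft.fftshift(np.fft.fft2(img))  # move zero frequency to the center
    return np.log(np.abs(spectrum) + eps)         # eps avoids log(0)
```

In such a plot, a low-pass filtered image keeps its energy near the center, while a high-pass filtered image loses most of the energy there.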

I experimented with color to see if it can enhance the effect of the hybrid images. I tried putting both images in color, just the low frequency in color, and just the high frequency in color, and then compared them to the original grayscale hybrid image.

I found that the best combination is to keep the high frequency image in color and the low frequency image in grayscale. This lets the details of the high frequency image stand out when the image is viewed up close. If the low frequency image is also in color, its colors overpower the high frequency image and make it harder to see. That said, the hybrid images are fairly clear in all of the cases, and color does not always make much of a difference, especially when the high frequency image does not have much color to begin with.

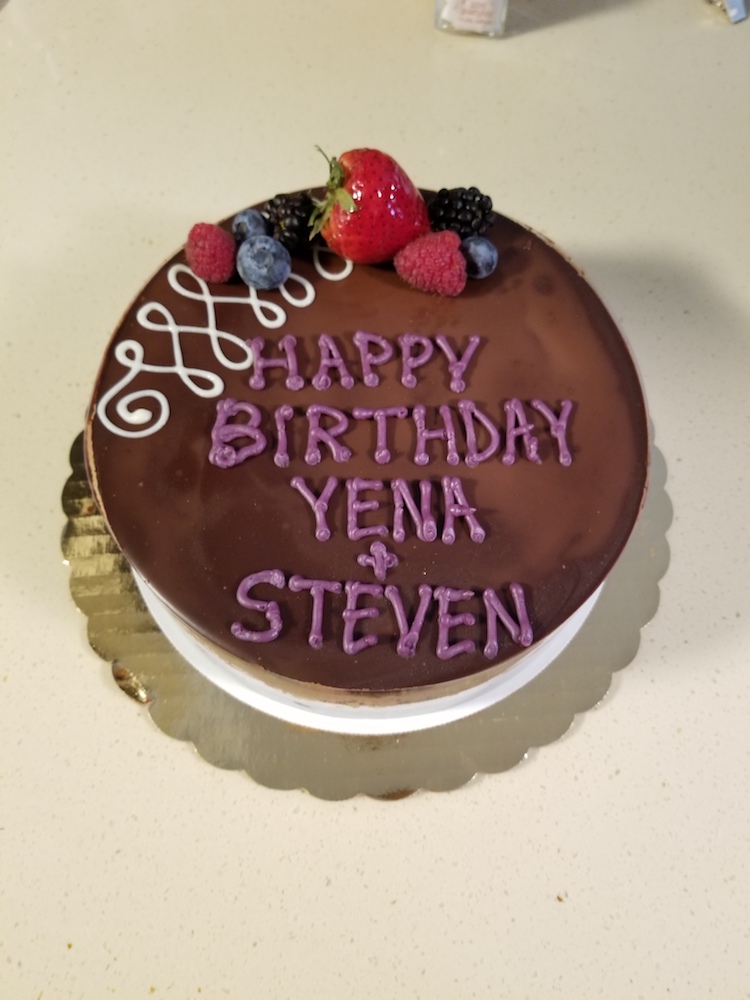

The effects of color also depend on how distinguishable the low frequency image is without color. In the last set with the cake and the Epcot ball, the cake is not super distinguishable in grayscale, so the combinations with the cake in color actually look better.

I consider the hybrid image set below to be a failure.

A Gaussian stack is built by blurring an image with a Gaussian filter, then blurring the resulting image again, and repeating this for each layer of the stack. The Gaussian filter acts as a low-pass filter, so each layer removes a further set of high frequencies.

A Laplacian stack, on the other hand, is built from the Gaussian stack. At each layer i, the image at layer i + 1 of the Gaussian stack is subtracted from the image at layer i, and the difference becomes layer i of the Laplacian stack. Each image in the Laplacian stack therefore holds the high frequencies, or details, that were cut out at the corresponding layer of the Gaussian stack. None of the images should look the same, since each one covers a different band of frequencies.
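The two stacks can be sketched as follows. This is an illustration under a few assumptions that are mine rather than stated above: layer 0 of the Gaussian stack is the unblurred input, the same sigma is used at every layer, and the last Gaussian layer is appended to the Laplacian stack as a low-frequency residual so that the layers sum back to the original image. The function names are hypothetical.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D float image (edge-padded)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def gaussian_stack(img, levels, sigma):
    """Return levels + 1 images: the input, then repeated blurs of it."""
    stack = [np.asarray(img, dtype=float)]
    for _ in range(levels):
        stack.append(gaussian_blur(stack[-1], sigma))  # blur the previous layer again
    return stack

def laplacian_stack(g_stack):
    """Layer i is G[i] - G[i + 1]; the final entry is the residual G[-1]."""
    lap = [g_stack[i] - g_stack[i + 1] for i in range(len(g_stack) - 1)]
    lap.append(g_stack[-1])  # assumption: keep the residual so the sum is exact
    return lap
```

Because the differences telescope, adding up every layer of the Laplacian stack returns the original image, which is a handy sanity check.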

We can use the Gaussian and Laplacian stacks described above to blend images together. The idea is to separate the two images into different frequency bands (their Laplacian stacks), blend each band separately, and then combine all of the layers. As part of the process, a mask denotes where the images are blended. We build a Gaussian stack for this mask and use each of its layers to blend the corresponding layers of the two Laplacian stacks. Once every layer is blended, we combine them all to get a final image that has been blended separately at each frequency level.
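Here is a compact sketch of this blending procedure for grayscale images. It is an illustration, not the exact code behind the results: the function names and the default `levels` and `sigma` values are hypothetical, the mask is assumed to be a float array in [0, 1] (1 where `img_a` should show), and the Laplacian stacks are assumed to keep the last Gaussian layer as a residual.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D float image (edge-padded)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def gaussian_stack(img, levels, sigma):
    """The input followed by `levels` successively blurred copies."""
    stack = [np.asarray(img, dtype=float)]
    for _ in range(levels):
        stack.append(gaussian_blur(stack[-1], sigma))
    return stack

def laplacian_stack(g_stack):
    """Band-pass layers G[i] - G[i + 1], plus the residual G[-1]."""
    return [a - b for a, b in zip(g_stack[:-1], g_stack[1:])] + [g_stack[-1]]

def blend(img_a, img_b, mask, levels=4, sigma=2.0):
    """Blend each frequency band with a progressively softer mask, then sum."""
    lap_a = laplacian_stack(gaussian_stack(img_a, levels, sigma))
    lap_b = laplacian_stack(gaussian_stack(img_b, levels, sigma))
    mask_stack = gaussian_stack(mask, levels, sigma)
    return sum(m * a + (1.0 - m) * b
               for m, a, b in zip(mask_stack, lap_a, lap_b))
```

The sharp mask is applied to the finest details and the blurriest mask to the coarsest band, which is what hides the seam.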

Below I have blended images in color. This involved running the above process separately on each of the three channels of the RGB images.

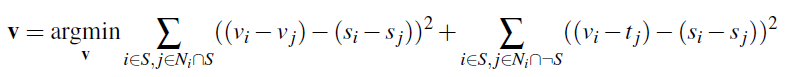

In this part of the project, I'll be blending images together using Poisson blending. This technique focuses on getting the gradients of both the target and source images to match, rather than just blending without consideration of the other image. I look at the gradients of both images and try to minimize their difference while also trying to keep the colors equal. I have a source image s that I want to blend with a target image t. I want to solve the following linear least squares problem in order to find the minimized gradient differences:
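Written out, an objective of this kind is commonly stated as below. This is the standard discrete Poisson blending formulation, which I am assuming matches the problem described here; the symbols $v$ (the unknown pixel values of the result), $S$ (the masked region of the source), and $N_i$ (the 4-neighbors of pixel $i$) are notation introduced for this sketch.

$$
\mathbf{v} = \arg\min_{\mathbf{v}} \sum_{i \in S,\; j \in N_i \cap S} \big( (v_i - v_j) - (s_i - s_j) \big)^2 \;+\; \sum_{i \in S,\; j \in N_i,\; j \notin S} \big( (v_i - t_j) - (s_i - s_j) \big)^2
$$

The first sum asks the gradients inside the region to match the gradients of the source $s$. The second sum handles pixels on the border of the region, where the neighbor outside the mask is fixed to the target value $t_j$.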

To show that my implementation is accurate, I'll reconstruct an image using this technique. When the Poisson blending technique is applied to an image and a blank image, it should return the image itself, since the solution that minimizes the gradient differences is simply the original image. As you can see below, I was able to reconstruct the image.
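A reconstruction check of this kind can be sketched as a small least squares problem. The setup here is an assumption on my part, not necessarily the exact one used for the result: every x and y gradient of the output is asked to match the input's gradient, and a single pixel is pinned to its original value so that the solution is unique. The function name `reconstruct_from_gradients` is hypothetical, and the dense solver is only practical for tiny images.

```python
import numpy as np

def reconstruct_from_gradients(img):
    """Recover a 2-D image from its gradients plus one known pixel value."""
    h, w = img.shape
    idx = np.arange(h * w).reshape(h, w)   # maps (y, x) to an unknown's index
    rows, rhs = [], []

    def add_equation(terms, value):
        row = np.zeros(h * w)
        for j, coeff in terms:
            row[j] = coeff
        rows.append(row)
        rhs.append(value)

    # Horizontal gradients: v(y, x+1) - v(y, x) = s(y, x+1) - s(y, x)
    for y in range(h):
        for x in range(w - 1):
            add_equation([(idx[y, x + 1], 1.0), (idx[y, x], -1.0)],
                         img[y, x + 1] - img[y, x])
    # Vertical gradients: v(y+1, x) - v(y, x) = s(y+1, x) - s(y, x)
    for y in range(h - 1):
        for x in range(w):
            add_equation([(idx[y + 1, x], 1.0), (idx[y, x], -1.0)],
                         img[y + 1, x] - img[y, x])
    # Pin the top-left pixel so the overall brightness is determined.
    add_equation([(idx[0, 0], 1.0)], img[0, 0])

    solution, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return solution.reshape(h, w)
```

For full-size images, the same system is usually assembled as a sparse matrix and handed to a sparse least squares solver instead.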

This part applies the technique described above to two different images, using a mask to mark where they are blended together.

The blending of the bird and sushi images below did not turn out very well. The sushi looks transparent, and the background of the source image is clearly visible and does not fit in with the background of the target image. This shows that, for good results, the two backgrounds should be of similar color, both so that the source image keeps its original colors and so that the blend is more seamless.

In the following case, the images blended together very well with Poisson blending. However, the point of this blended image was to show Seattle at both day and night, and since Poisson blending tries to keep the colors the same, the dark sky became a lot lighter. This was not the case for the Laplacian blending, in which the left side is clearly night and keeps its original colors. However, the divide between the two images is very clear and distinct.