CS194-26: Project 3

Rachael Wu (cs194-26-acr)

Overview

The goal of this project was to create hybrid images by combining the high- and low-frequency portions of images, blend images across multiple resolutions using Gaussian and Laplacian stacks, and blend objects from a source image into a target image using Poisson blending.

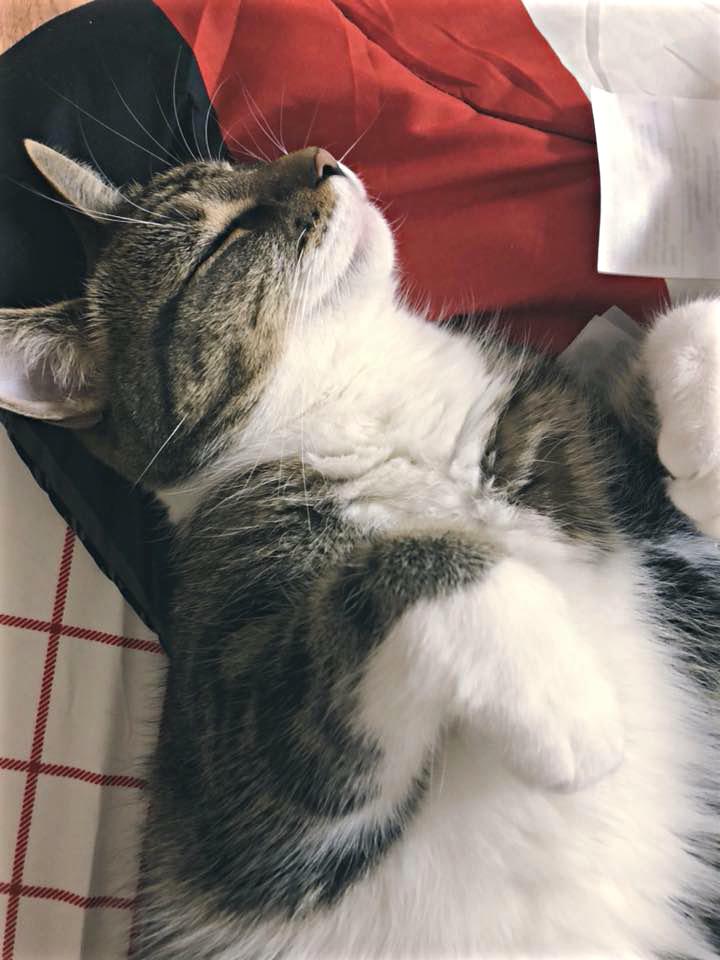

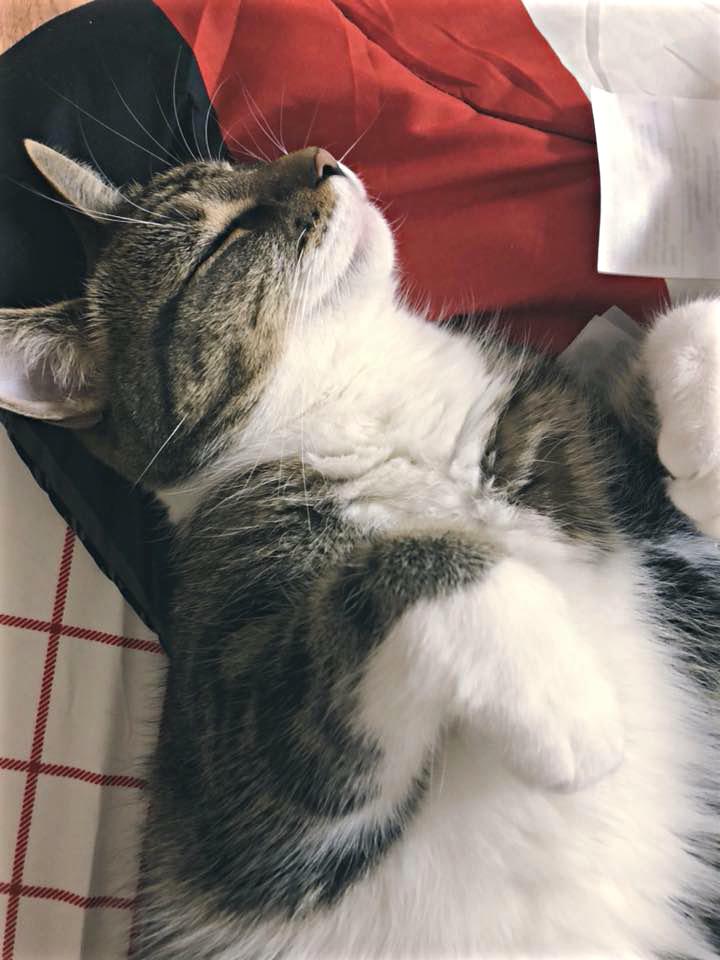

Part 1.1: Warmup

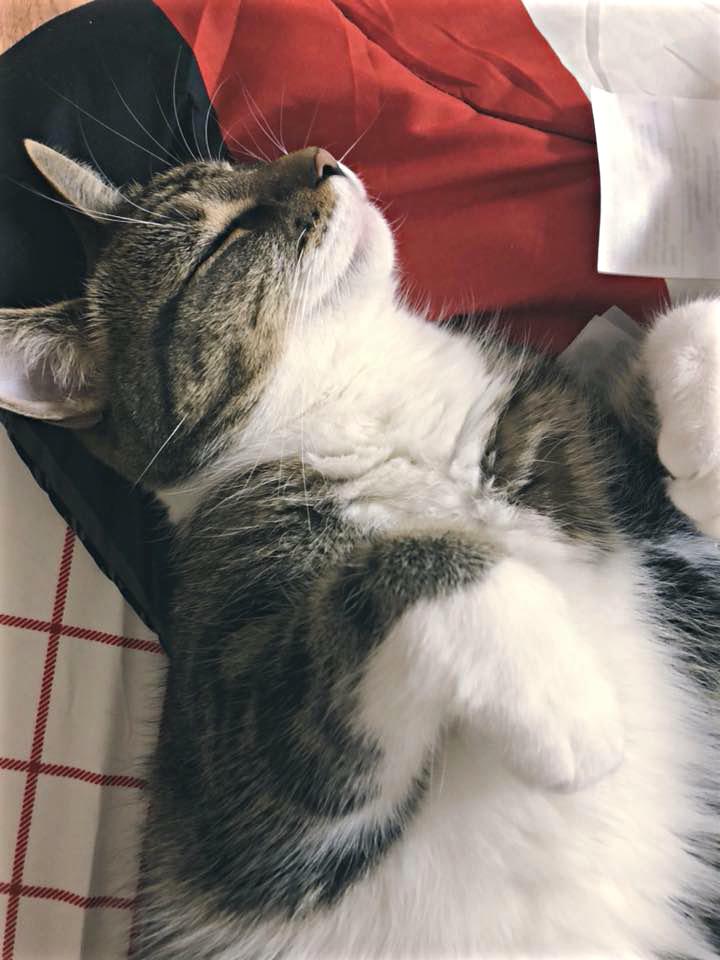

The first part of the project was to sharpen an image using the unsharp masking technique. More specifically, for an image f and a Gaussian filter g, we have a blurred image f * g and a sharpened image f + a(f - f * g), where * denotes convolution and a (alpha) controls the sharpening strength. For this project, we chose a = 0.3, a kernel size of 70, and a sigma of 8 for the Gaussian filter. Below is an example of our result:

From left to right: Original image, filtered image, and sharpened image of a cat
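As a rough illustration of the formula f + a(f - f * g), here is a minimal NumPy sketch. The helper names (`gaussian_kernel_1d`, `gaussian_blur`, `unsharp_mask`), the edge-replicating padding, the assumption that pixel values are floats in [0, 1], and the final clipping step are choices made for this example, not details taken from the original implementation.

```python
import numpy as np

def gaussian_kernel_1d(size, sigma):
    # Sampled 1D Gaussian, normalized so the weights sum to 1.
    x = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def gaussian_blur(img, size, sigma):
    # Separable blur: filter along rows, then along columns.
    # Edge padding is an assumption made for this sketch.
    k = gaussian_kernel_1d(size, sigma)
    before = size // 2
    after = size - 1 - before
    out = np.asarray(img, dtype=float)
    for axis in (0, 1):
        pad = [(0, 0)] * out.ndim
        pad[axis] = (before, after)
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(
            lambda v: np.convolve(v, k, mode="valid"), axis, padded
        )
    return out

def unsharp_mask(img, alpha=0.3, size=70, sigma=8):
    # sharpened = f + alpha * (f - f * g), with values assumed in [0, 1].
    img = np.asarray(img, dtype=float)
    blurred = gaussian_blur(img, size, sigma)
    return np.clip(img + alpha * (img - blurred), 0.0, 1.0)
```

The defaults mirror the values quoted above (alpha = 0.3, kernel size 70, sigma 8). A flat image passes through unchanged, since f - f * g is zero everywhere.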

Part 1.2: Hybrid Images

The second part of the project was to create hybrid images from the high-frequency and low-frequency content of two images. More specifically, we combined the high-pass portion of one image (i.e., a Laplacian f - f * g) with the low-pass portion of another image (i.e., a blurred image f * g). For this part of the project, we used a Gaussian kernel of size 50, with sigma = 6 for the high-pass image and sigma = 3 for the low-pass image. Additionally, instead of simply averaging the high-pass and low-pass images, we multiplied the high-pass image by 2 before averaging in order to make it more prominent. This method created the following hybrid images:

Image of Derek, Nutmeg, and the resulting hybrid image

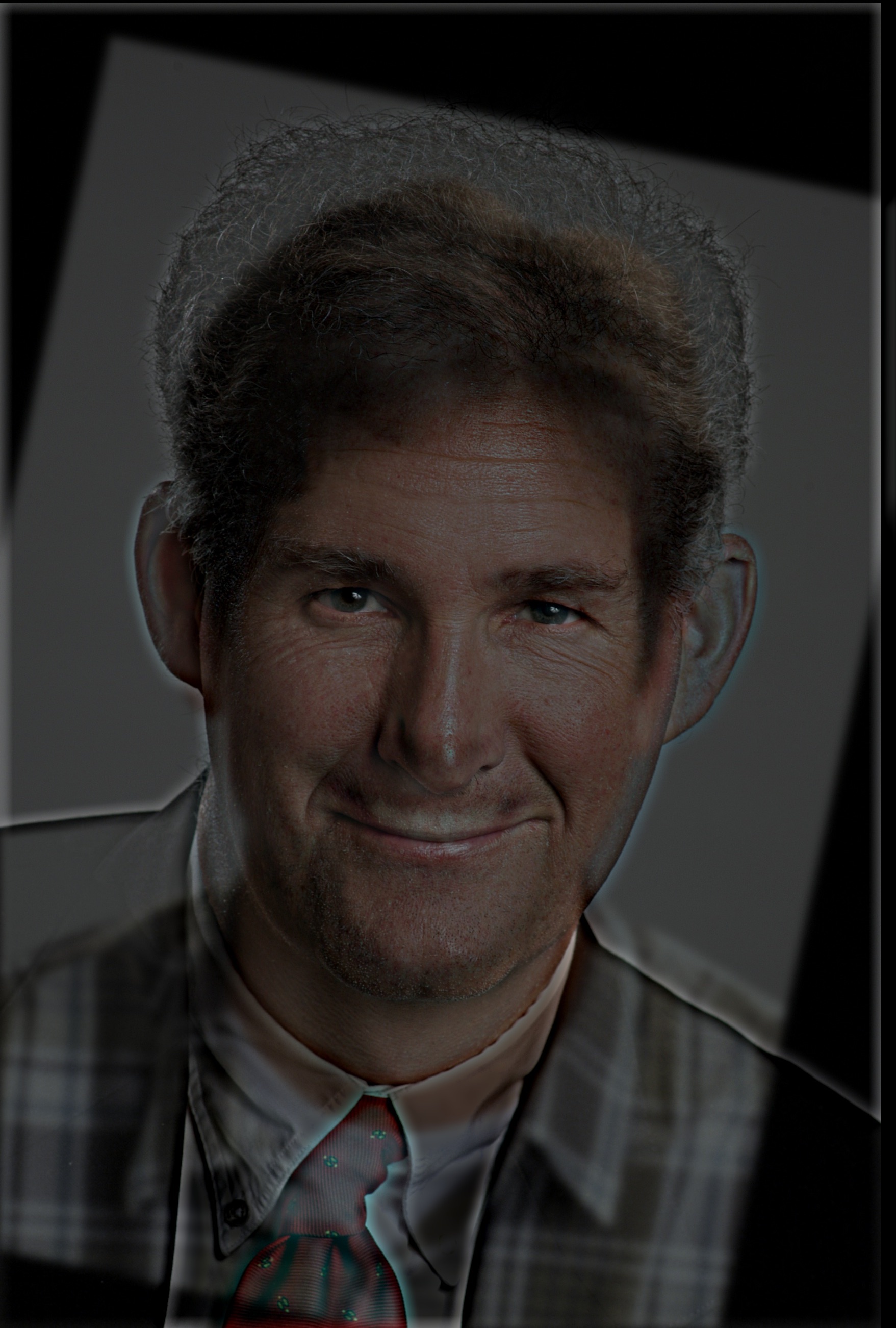

Image of Professor Denero, Professor Hilfinger, and the resulting hybrid image

Image of a muffin, a chihuahua, and the resulting hybrid image

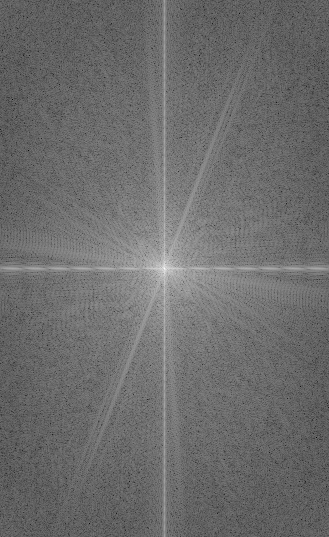

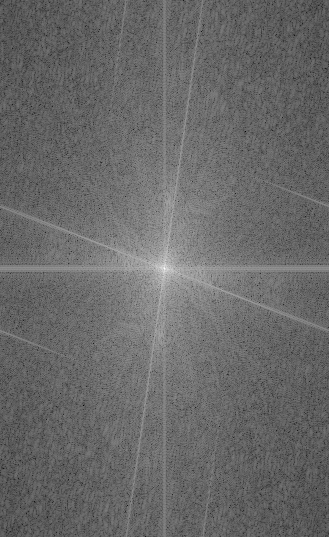

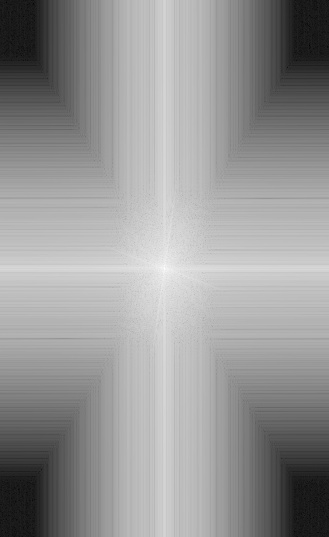

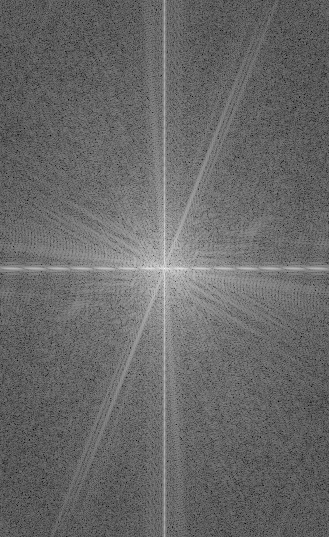

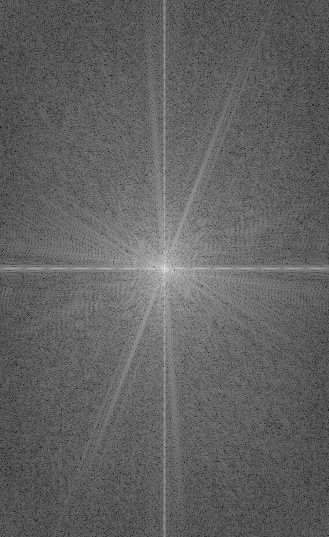

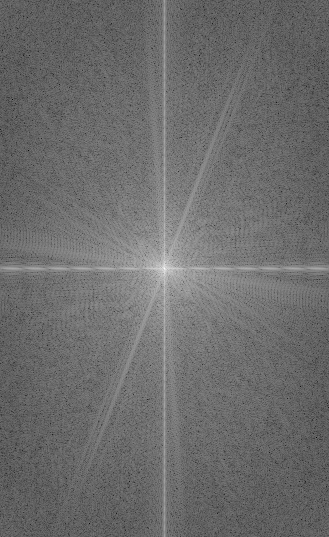

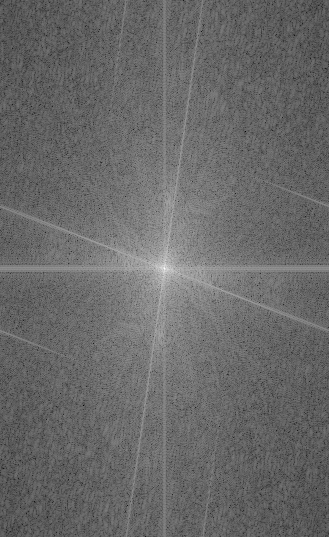

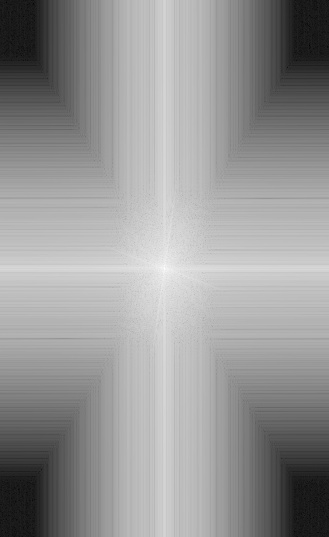
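The hybrid construction described in Part 1.2 (the high-pass portion of one image plus the low-pass portion of another, with the high-pass term doubled before averaging) could be sketched roughly as follows. The function names, the `high_gain` parameter, the edge padding, and the clipping to [0, 1] are assumptions made for this example; both inputs are assumed to be aligned grayscale float arrays of the same shape.

```python
import numpy as np

def gaussian_blur(img, size, sigma):
    # Separable Gaussian blur with edge padding (an assumption of this sketch).
    x = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    k /= k.sum()
    before, after = size // 2, size - 1 - size // 2
    out = np.asarray(img, dtype=float)
    for axis in (0, 1):
        pad = [(0, 0)] * out.ndim
        pad[axis] = (before, after)
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(
            lambda v: np.convolve(v, k, mode="valid"), axis, padded
        )
    return out

def hybrid_image(im_high, im_low, size=50, sigma_high=6, sigma_low=3,
                 high_gain=2.0):
    im_high = np.asarray(im_high, dtype=float)
    im_low = np.asarray(im_low, dtype=float)
    # High-pass: f - f * g. Low-pass: f * g.
    high = im_high - gaussian_blur(im_high, size, sigma_high)
    low = gaussian_blur(im_low, size, sigma_low)
    # Scale the high-pass image, then average the two images.
    return np.clip((high_gain * high + low) / 2.0, 0.0, 1.0)
```

The defaults follow the parameters quoted in Part 1.2. Setting `high_gain=1.0` would give the plain average of the two filtered images.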

Below are the Fourier transforms of the Denero-Hilfinger hybrid image:

From left to right: Fourier transforms of the original Denero image, the original Hilfinger image, the low-pass Denero image, the high-pass Hilfinger image, and the hybrid image

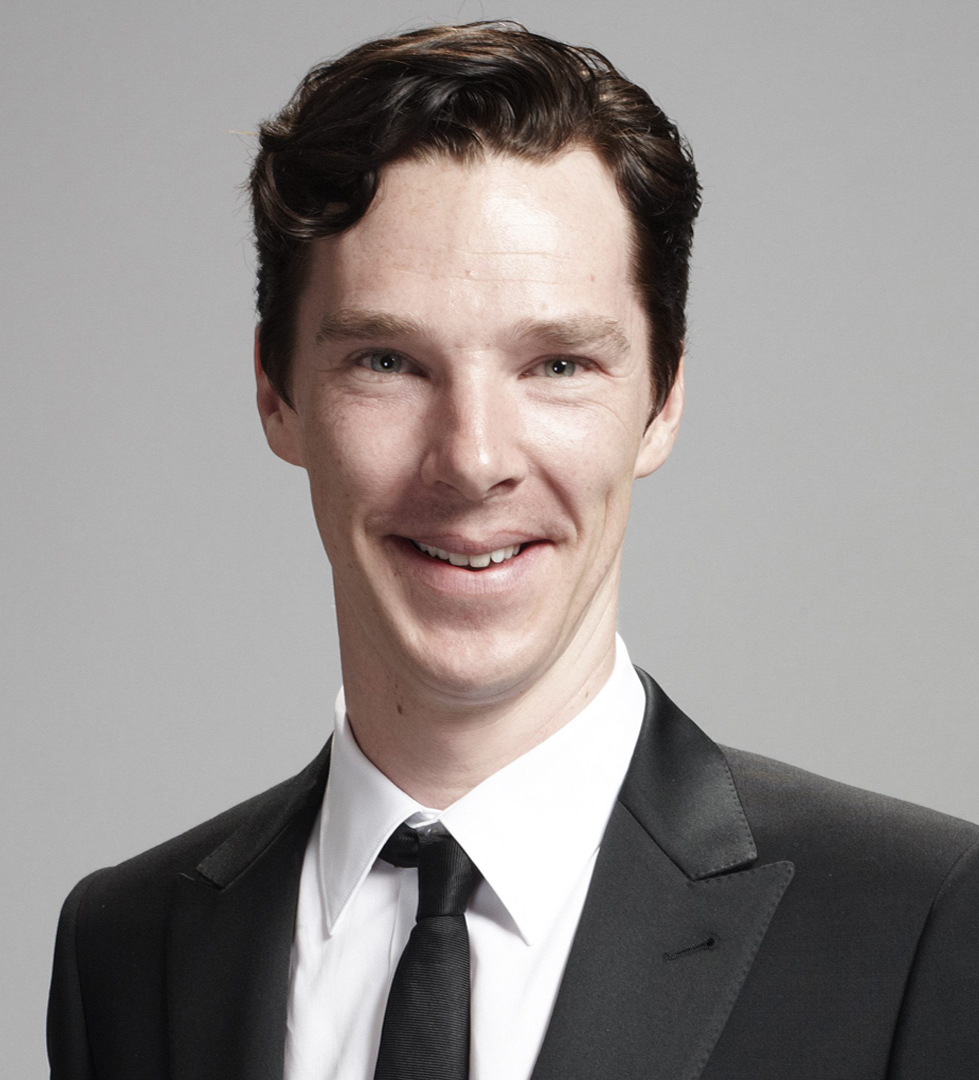

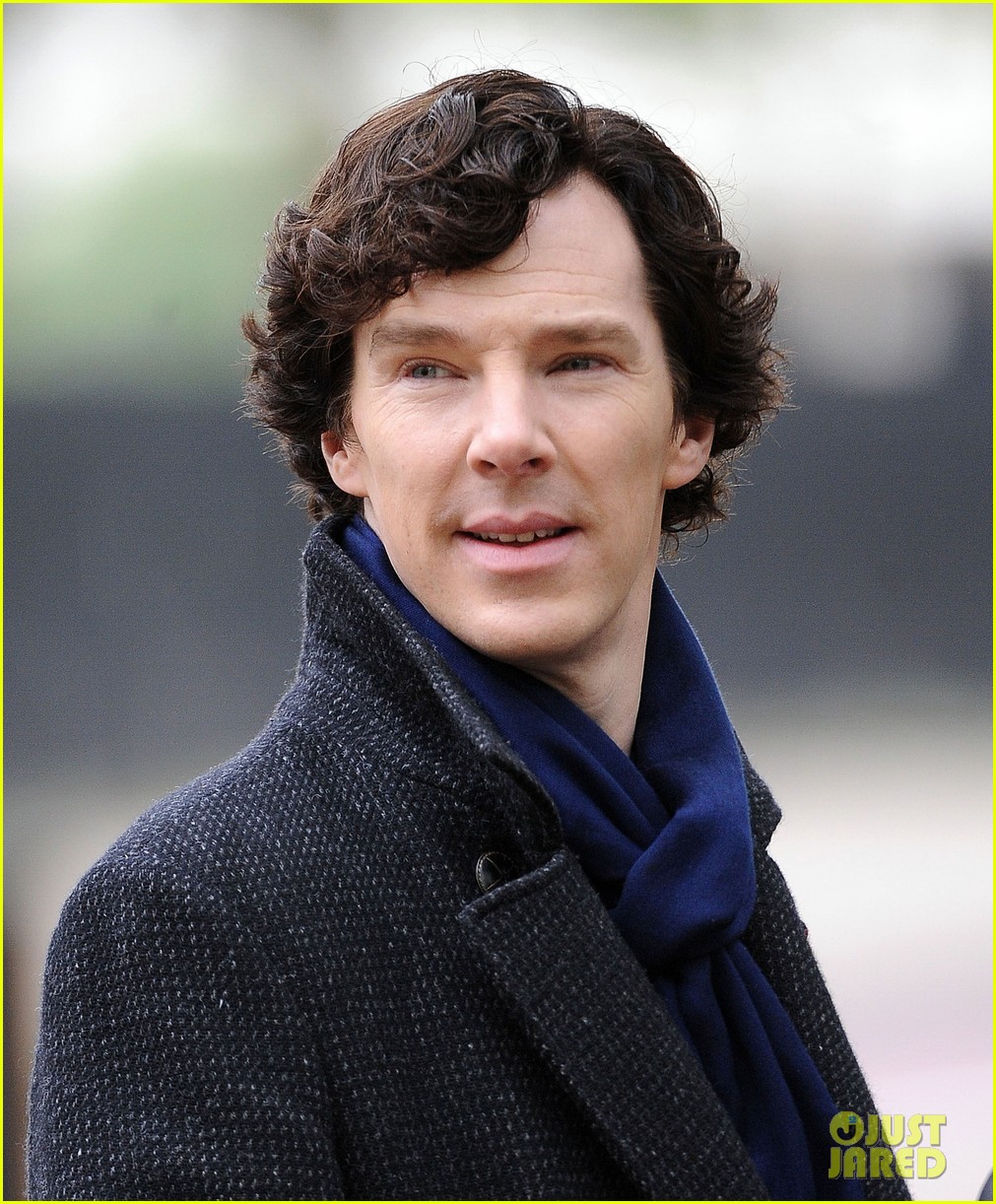

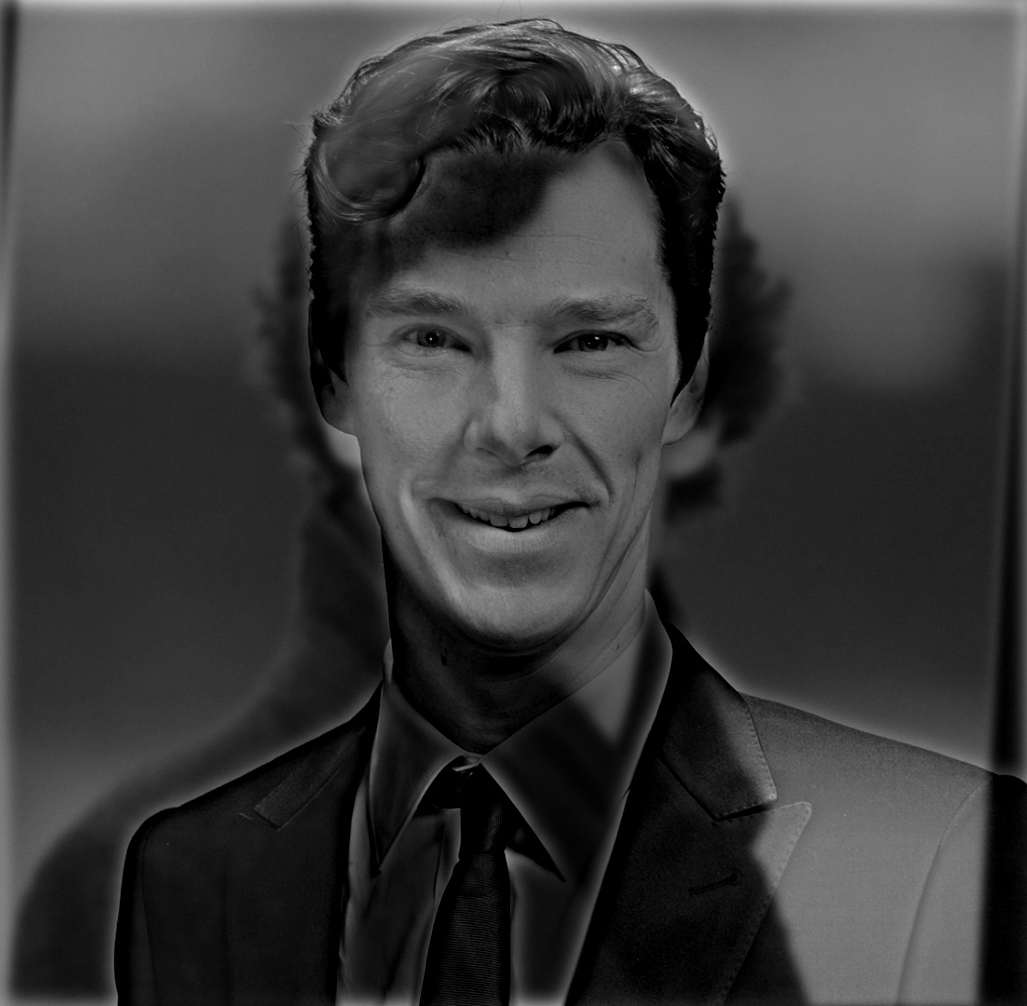

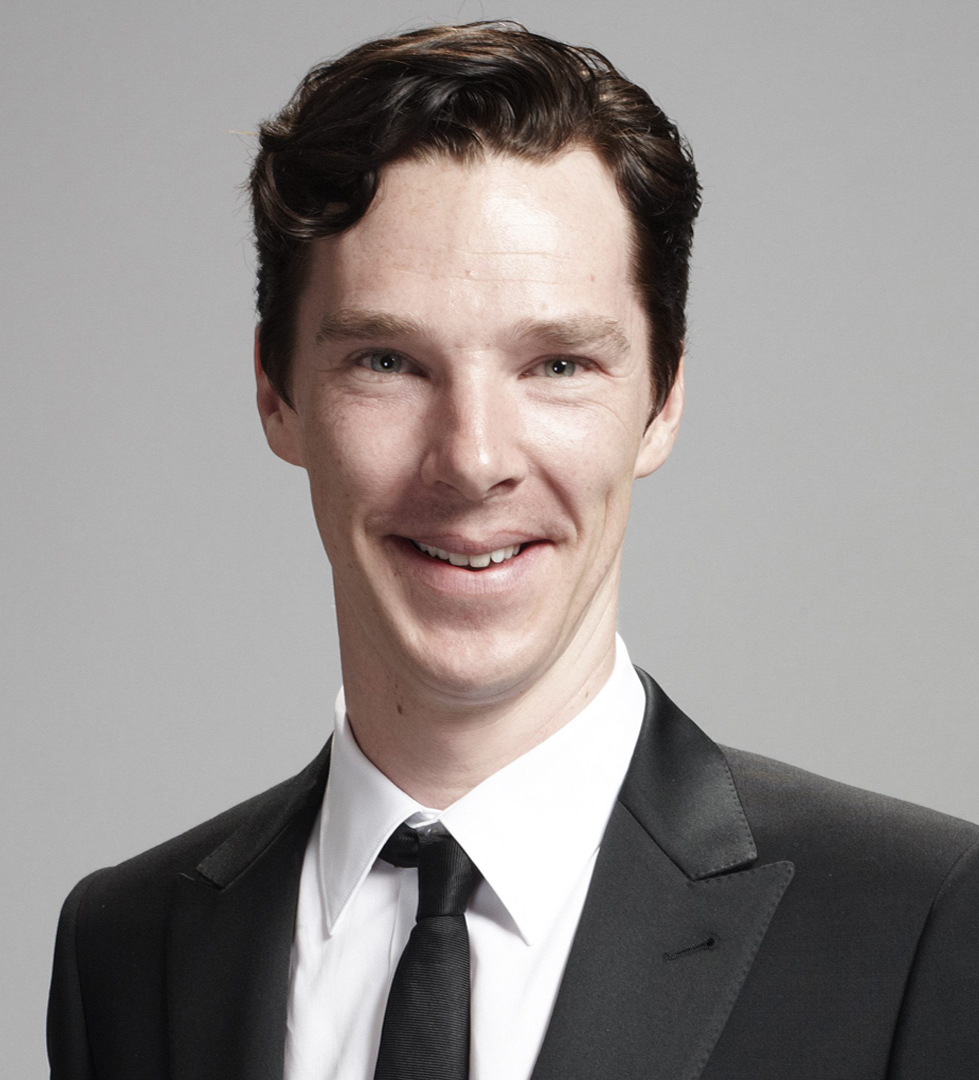

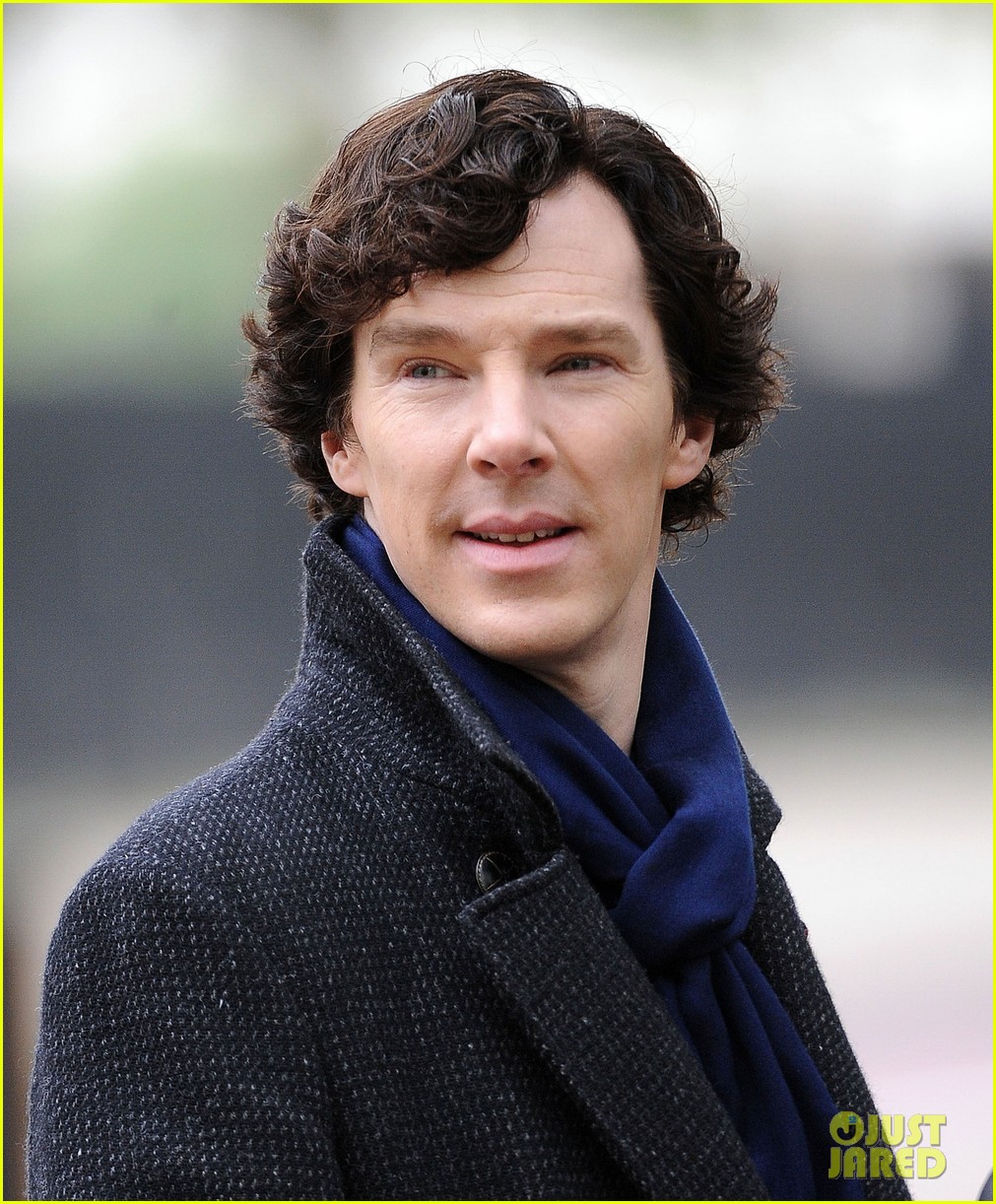

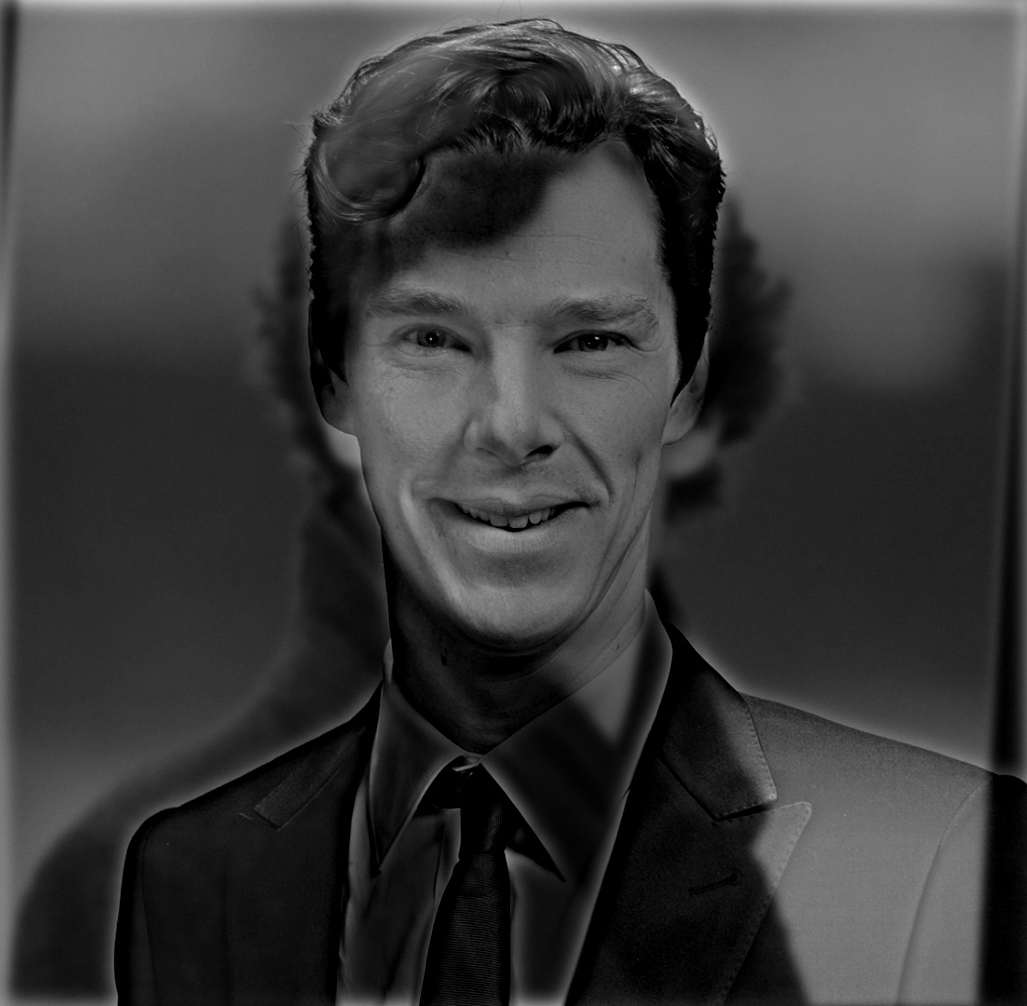
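The write-up does not say how the Fourier transforms were rendered. One common way to visualize a spectrum, shown here only as an assumed sketch, is the log magnitude of the centered 2D FFT of a grayscale image. The function name and the small `eps` offset (which avoids taking the log of zero) are choices made for this example.

```python
import numpy as np

def log_magnitude_spectrum(gray, eps=1e-8):
    # Centered 2D FFT: fftshift moves the zero-frequency term to the middle.
    spectrum = np.fft.fftshift(np.fft.fft2(np.asarray(gray, dtype=float)))
    # Log scaling compresses the large dynamic range of the magnitudes.
    return np.log(np.abs(spectrum) + eps)
```

In such a plot, a low-pass image concentrates its energy near the center, while a high-pass image has a suppressed center and relatively stronger outer frequencies.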

One failure case was our attempt to combine two pictures of Benedict Cumberbatch; it failed because the two facial shapes were too different:

Benedict Cumberbatch

Bells & Whistles: We also used color to try to enhance the effect. Since the high-pass image was very dark, adding color to it did not make much of a difference. However, color did enhance the effect for the low-pass image, since we could see the features of that image more prominently:

Color versions of hybrid images

Part 1.3: Gaussian and Laplacian Stacks

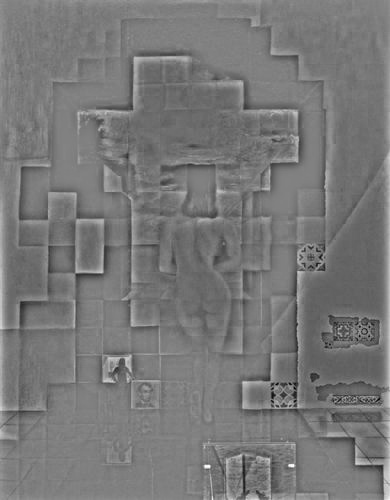

The next part of the project was to create Gaussian and Laplacian stacks to analyze images. We used the recommended number of levels (N = 5) and the same Gaussian kernel parameters as in the previous part. For the image of Lincoln, we obtained the following stacks:

Laplacian stack for image of Lincoln

Gaussian stack for image of Lincoln

Notice that the last layers for the Laplacian and Gaussian stack are the same; this is so we are able to reconstruct the image. Below are the stacks applied to the hybrid image:

Laplacian stack for hybrid image

Gaussian stack for hybrid image

From these, we can see that Hilfinger is more prominent in the Laplacian stack, while Denero is more prominent in the Gaussian stack.
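To make the relationship between the two stacks concrete, here is a minimal sketch of one way to build them. It assumes that level 0 of the Gaussian stack is the original image, that each later level blurs the previous one without downsampling, and that the last Laplacian layer is copied from the last Gaussian layer (as noted above). The function names and the default sigma of 3 are assumptions; the write-up does not say which of the earlier sigmas was reused.

```python
import numpy as np

def gaussian_blur(img, size, sigma):
    # Separable Gaussian blur with edge padding (an assumption of this sketch).
    x = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    k /= k.sum()
    before, after = size // 2, size - 1 - size // 2
    out = np.asarray(img, dtype=float)
    for axis in (0, 1):
        pad = [(0, 0)] * out.ndim
        pad[axis] = (before, after)
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(
            lambda v: np.convolve(v, k, mode="valid"), axis, padded
        )
    return out

def gaussian_stack(img, levels=5, size=50, sigma=3):
    # Same resolution at every level; each level blurs the previous one.
    stack = [np.asarray(img, dtype=float)]
    for _ in range(levels - 1):
        stack.append(gaussian_blur(stack[-1], size, sigma))
    return stack

def laplacian_stack(g_stack):
    # Each layer is the difference of adjacent Gaussian levels;
    # the final layer is the coarsest Gaussian level itself.
    layers = [g_stack[i] - g_stack[i + 1] for i in range(len(g_stack) - 1)]
    layers.append(g_stack[-1])
    return layers
```

Under these assumptions the Laplacian layers telescope: summing them returns level 0 of the Gaussian stack, which is why keeping the last Gaussian layer makes reconstruction possible.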

Part 1.4: Multiresolution Blending

This part of the project involved blending two images by computing a smooth seam when transitioning from one image to the other. More specifically, we computed a Gaussian stack GR for a given mask and Laplacian stacks LA and LB for the two images, and combined them at each level to form a blended stack LS(i, j) = GR(i, j)LA(i, j) + (1 - GR(i, j))LB(i, j). Afterwards, we reconstructed the blended image by adding all the layers of LS together.

For this part of the project, we used sigma = 35 for the Gaussian stack and sigma = 5 for the Laplacian stacks, as well as a kernel size of 70 for both stacks. We also used 5 levels for each stack.
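The blending rule LS = GR * LA + (1 - GR) * LB, applied level by level and then summed, might look roughly like this in NumPy. The function names, the edge padding, and the convention that level 0 of each Gaussian stack is the unblurred input are assumptions made for this sketch. Images and mask are assumed to be grayscale float arrays of the same shape, with mask values in [0, 1].

```python
import numpy as np

def gaussian_blur(img, size, sigma):
    # Separable Gaussian blur with edge padding (an assumption of this sketch).
    x = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    k /= k.sum()
    before, after = size // 2, size - 1 - size // 2
    out = np.asarray(img, dtype=float)
    for axis in (0, 1):
        pad = [(0, 0)] * out.ndim
        pad[axis] = (before, after)
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(
            lambda v: np.convolve(v, k, mode="valid"), axis, padded
        )
    return out

def gaussian_stack(img, levels, size, sigma):
    stack = [np.asarray(img, dtype=float)]
    for _ in range(levels - 1):
        stack.append(gaussian_blur(stack[-1], size, sigma))
    return stack

def laplacian_stack(img, levels, size, sigma):
    g = gaussian_stack(img, levels, size, sigma)
    return [g[i] - g[i + 1] for i in range(levels - 1)] + [g[-1]]

def multires_blend(im_a, im_b, mask, levels=5, size=70,
                   sigma_mask=35, sigma_lap=5):
    gr = gaussian_stack(mask, levels, size, sigma_mask)   # GR
    la = laplacian_stack(im_a, levels, size, sigma_lap)   # LA
    lb = laplacian_stack(im_b, levels, size, sigma_lap)   # LB
    # LS = GR * LA + (1 - GR) * LB at each level, then sum the layers.
    ls = [m * a + (1.0 - m) * b for m, a, b in zip(gr, la, lb)]
    return sum(ls)
```

The defaults use the parameters quoted above (sigma = 35 for the mask stack, sigma = 5 for the Laplacian stacks, kernel size 70, 5 levels). A mask of all ones returns the first image and a mask of all zeros returns the second, which is a quick sanity check for the weighting.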

Below are our results (hover to compare with the naive version):

Left: Blended image of cat; Middle: Before/After makeup; Right: Oraple

Left: Spring and winter pictures of a forest blended together; Right: Spring and autumn pictures of a forest blended together

We also used an irregular mask and the following images:

From left to right: Irregular mask, image of eye, image of hand

to create the following blended image:

Blended image of eye and hand

One failure we had was when we tried to blend a picture of a cat with a picture of a dog; since the two animals' facial features were not aligned, the result was not as visually pleasing:

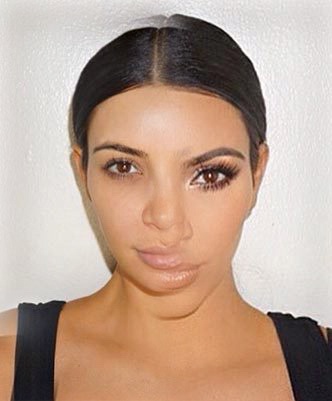

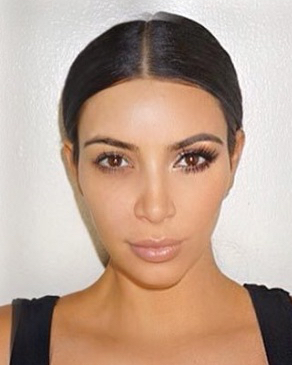

Similarly, we initially failed to align two pictures of Kim Kardashian:

To fix this issue, we properly aligned the images to get

which is blended much more smoothly.

Part 2.1: Toy Problem

In the second half of the project, our goal was to implement gradient-domain blending, in which we blended an object from a source image s into a target image t. To do so, we solved for new pixel values v that minimize the difference between the x and y gradients of s and v, and constrained the problem so that v matches t at the corresponding pixels along the boundary of the object.

We first applied this to a toy problem, in which our source image s was the same as our toy image t, meaning that the reconstructed image should be identical to the original. Instead of constraining all pixels on the boundary, we only constrained the top-left pixel (0, 0) to match the original.

Left: Original toy image; Right: Reconstructed image
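The toy problem can be written as a small least-squares system: one equation per x-gradient, one per y-gradient, and one equation pinning the top-left pixel. The sketch below uses explicit loops and a dense matrix with `np.linalg.lstsq` for readability, which is only practical for tiny images. The function name and the row-major pixel indexing are assumptions made for this example.

```python
import numpy as np

def reconstruct_from_gradients(s):
    s = np.asarray(s, dtype=float)
    h, w = s.shape
    idx = np.arange(h * w).reshape(h, w)  # variable index for each pixel
    n_eq = h * (w - 1) + (h - 1) * w + 1
    A = np.zeros((n_eq, h * w))
    b = np.zeros(n_eq)
    e = 0
    # x-gradients: v(y, x+1) - v(y, x) = s(y, x+1) - s(y, x)
    for y in range(h):
        for x in range(w - 1):
            A[e, idx[y, x + 1]] = 1.0
            A[e, idx[y, x]] = -1.0
            b[e] = s[y, x + 1] - s[y, x]
            e += 1
    # y-gradients: v(y+1, x) - v(y, x) = s(y+1, x) - s(y, x)
    for y in range(h - 1):
        for x in range(w):
            A[e, idx[y + 1, x]] = 1.0
            A[e, idx[y, x]] = -1.0
            b[e] = s[y + 1, x] - s[y, x]
            e += 1
    # Single intensity constraint: v(0, 0) = s(0, 0)
    A[e, idx[0, 0]] = 1.0
    b[e] = s[0, 0]
    v, *_ = np.linalg.lstsq(A, b, rcond=None)
    return v.reshape(h, w)
```

Because the gradients fix every pixel relative to its neighbors and the last equation fixes the overall intensity, the solution reproduces the input image. For images of realistic size, the same system would need a sparse matrix and a sparse solver.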

Part 2.2: Poisson Blending

Finally, we applied the above method to larger images with irregular masks. Below is our favorite blending result (hover to compare with pixels directly pasted):

which was the result of the following images:

From left to right: Source image, target image, and mask
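Extending the toy formulation to a masked region, each masked pixel gets one equation per 4-neighbor: the gradient of v should match the gradient of s, and a neighbor outside the mask contributes its known target value t. The following is a minimal single-channel sketch under several assumptions: the source and target are already aligned and have the same shape, the masked region touches at least one unmasked pixel, and a dense solver is acceptable because the example is tiny. The function name is hypothetical, and color images would presumably be handled one channel at a time.

```python
import numpy as np

def poisson_blend(s, t, mask):
    s = np.asarray(s, dtype=float)
    t = np.asarray(t, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    h, w = t.shape
    ys, xs = np.nonzero(mask)
    if len(ys) == 0:
        return t.copy()
    # Map each masked pixel to an unknown; -1 marks pixels outside the mask.
    idx = -np.ones((h, w), dtype=int)
    idx[ys, xs] = np.arange(len(ys))
    rows, rhs = [], []
    for y, x in zip(ys, xs):
        for dy, dx in ((0, 1), (0, -1), (1, 0), (-1, 0)):
            ny, nx = y + dy, x + dx
            if not (0 <= ny < h and 0 <= nx < w):
                continue  # skip neighbors outside the image
            row = np.zeros(len(ys))
            row[idx[y, x]] = 1.0
            grad = s[y, x] - s[ny, nx]
            if mask[ny, nx]:
                # Both unknown: v_i - v_j = s_i - s_j
                row[idx[ny, nx]] = -1.0
                rhs.append(grad)
            else:
                # Neighbor known from target: v_i = t_j + (s_i - s_j)
                rhs.append(grad + t[ny, nx])
            rows.append(row)
    v, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    out = t.copy()
    out[ys, xs] = v
    return out
```

As a sanity check, if the source differs from the target only by a constant offset, the gradients are identical and the blend returns the target unchanged. Pixels outside the mask are always copied from the target.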

Other Results:

One example that didn't work as well was this image:

which was the result of blending the following two images:

This image failed since the texture of the background of the cat on the left was too different from the background of the cat on the right, resulting in a noticeable border in the final blended image. It is better to use pictures with backgrounds of similar texture and color, as in the examples above.

We can also compare our results from 1.4 to our results here:

Left: Blended image with Poisson blending; Right: Blended image with Laplacian stacks

from which we can see that the Laplacian stacks provide a more seamless blend, while Poisson blending preserves the original target image's color. Thus, if our images have a similar texture and color, and we wish to preserve the original image's colors, Poisson blending is more suitable. Otherwise, Laplacian stacks may provide more seamless blending, since they spread the error throughout the whole image rather than concentrating it in one region.

The most important thing I learned from this project was how to vectorize my code and store it in a space-efficient manner (i.e., in a sparse matrix), which is something that can be applied not only to computational photography, but to other areas of computer science.