Project 3 Submission

Name: Rohan Murthy (cs194-26-adk)

Project overview:

This project explores different ways to take two images and blend them together. I use methods such as Laplacian pyramid blending and Poisson blending to create images that would be impossible to see in nature (like an oraple!).

Warmup:

For the warmup, I sharpened a blurry image using an unsharp mask. I first created a Gaussian kernel with size 100 and sigma 5 and filtered the image with it. Subtracting this blurred copy from the original leaves the image's high-frequency detail, and adding that detail back to the original with a weight alpha sharpens the image:

result = original + alpha * (original - gaussianBlurredImage), with alpha = 0.5.
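
The sharpening step can be sketched in NumPy as follows. This is a minimal sketch, assuming a single-channel float image in [0, 1]; the helper `gaussian_blur` is hypothetical (not from the writeup) and uses the kernel-size rule 2*3*sigma + 1 from the hybrid section instead of the size-100 kernel. The sharpening adds back the detail layer `img - blurred`, weighted by alpha.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D array, replicating edge pixels."""
    radius = int(3 * sigma)  # kernel size 2*3*sigma + 1
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()  # normalize so flat regions keep their value
    padded = np.pad(img.astype(float), radius, mode="edge")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    rows_done = np.apply_along_axis(conv, 1, padded)  # blur along each row
    return np.apply_along_axis(conv, 0, rows_done)    # then along each column


def sharpen(img, sigma=5.0, alpha=0.5):
    """Unsharp mask: add back alpha times the high-frequency detail."""
    detail = img - gaussian_blur(img, sigma)
    return img + alpha * detail


step = np.zeros((20, 20))
step[:, 10:] = 1.0                       # a vertical edge
out = sharpen(step)
print(out.min() < 0.0, out.max() > 1.0)  # True True: overshoot on both sides
```

The overshoot on either side of the edge is what makes it look crisper; a flat region has no detail, so it comes out unchanged.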

Original:

Sharpened:

Hybrid Images:

For this section, I first aligned the person and cat images based on their eyes. Then, to hybridize them, I Gaussian (low-pass) filtered the person image with sigma 4 and kernel size 25 (2*3*4 + 1). I high-pass filtered the cat image by subtracting a Gaussian-filtered copy of it (sigma 20, kernel size 121 = 2*3*20 + 1) from the original. Finally, I converted both results to grayscale and added them to get the hybrid image. For each of these images I also computed the log magnitude of its Fourier transform, using the equation given in the project spec.
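
The hybrid construction and the spectrum plots can be sketched as below. This is a minimal sketch, assuming aligned grayscale float images of equal size; `gaussian_blur`, `hybrid`, and `log_magnitude` are hypothetical helpers, and `log_magnitude` uses the common form log|fftshift(fft2(image))| with a small epsilon added (an assumption) so that zero coefficients don't produce log(0).

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D array, replicating edge pixels."""
    radius = int(3 * sigma)  # kernel size 2*3*sigma + 1
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img.astype(float), radius, mode="edge")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    return np.apply_along_axis(conv, 0, np.apply_along_axis(conv, 1, padded))


def hybrid(low_img, high_img, sigma_low=4.0, sigma_high=20.0):
    """Low frequencies of low_img plus high frequencies of high_img."""
    low = gaussian_blur(low_img, sigma_low)                 # low-pass (person)
    high = high_img - gaussian_blur(high_img, sigma_high)  # high-pass (cat)
    return low + high


def log_magnitude(gray):
    """Centered log-magnitude spectrum of a grayscale image."""
    return np.log(np.abs(np.fft.fftshift(np.fft.fft2(gray))) + 1e-8)


rng = np.random.default_rng(0)
person, cat = rng.random((64, 64)), rng.random((64, 64))  # stand-in images
result = hybrid(person, cat)
spectrum = log_magnitude(result)
```

After `fftshift`, the zero-frequency term sits at the center of the spectrum, so low-pass filtering shows up as energy concentrated near the center and high-pass filtering as a suppressed center.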

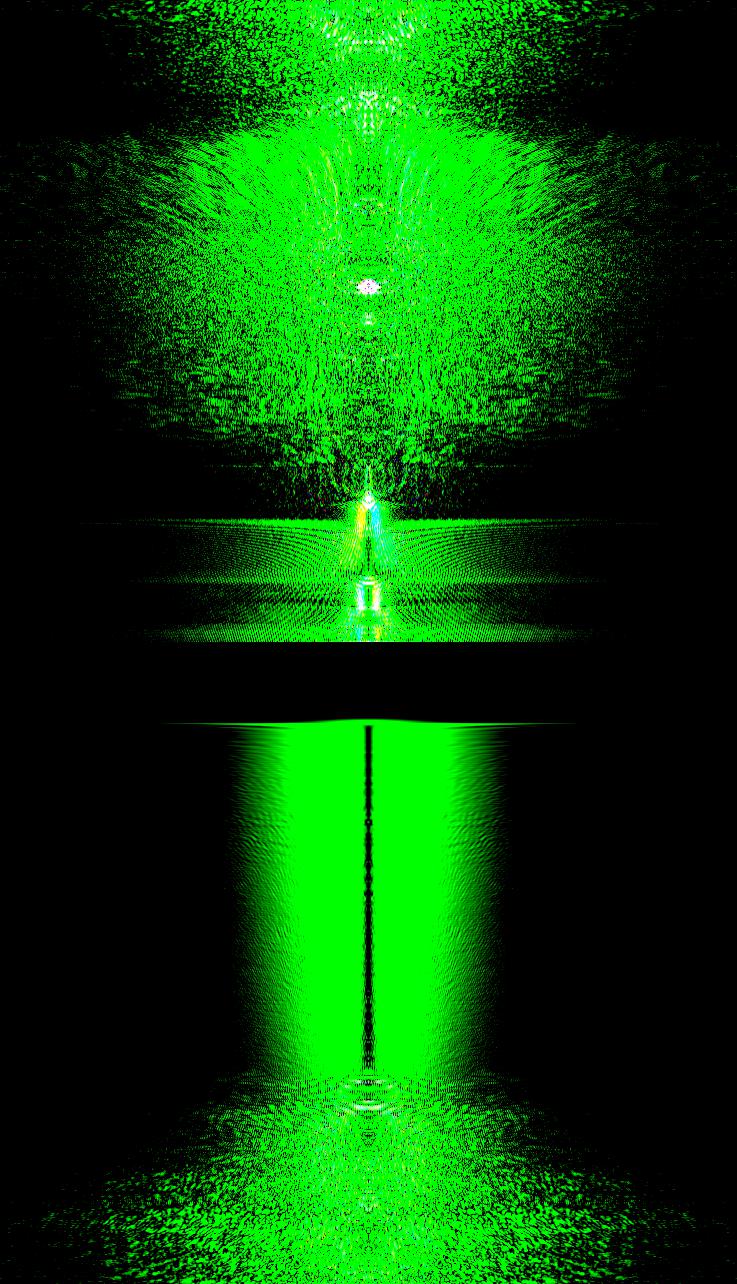

Original Cat and its Fourier transform

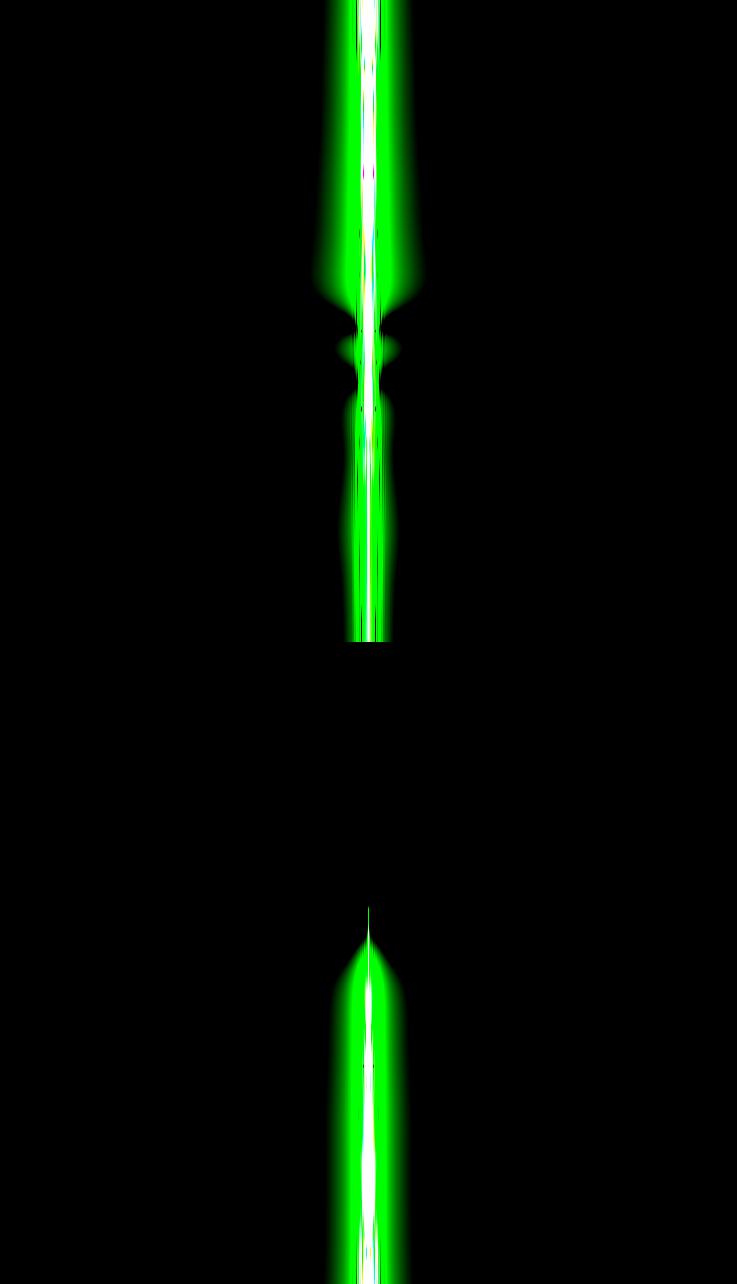

High-pass filtered Cat and its Fourier transform:

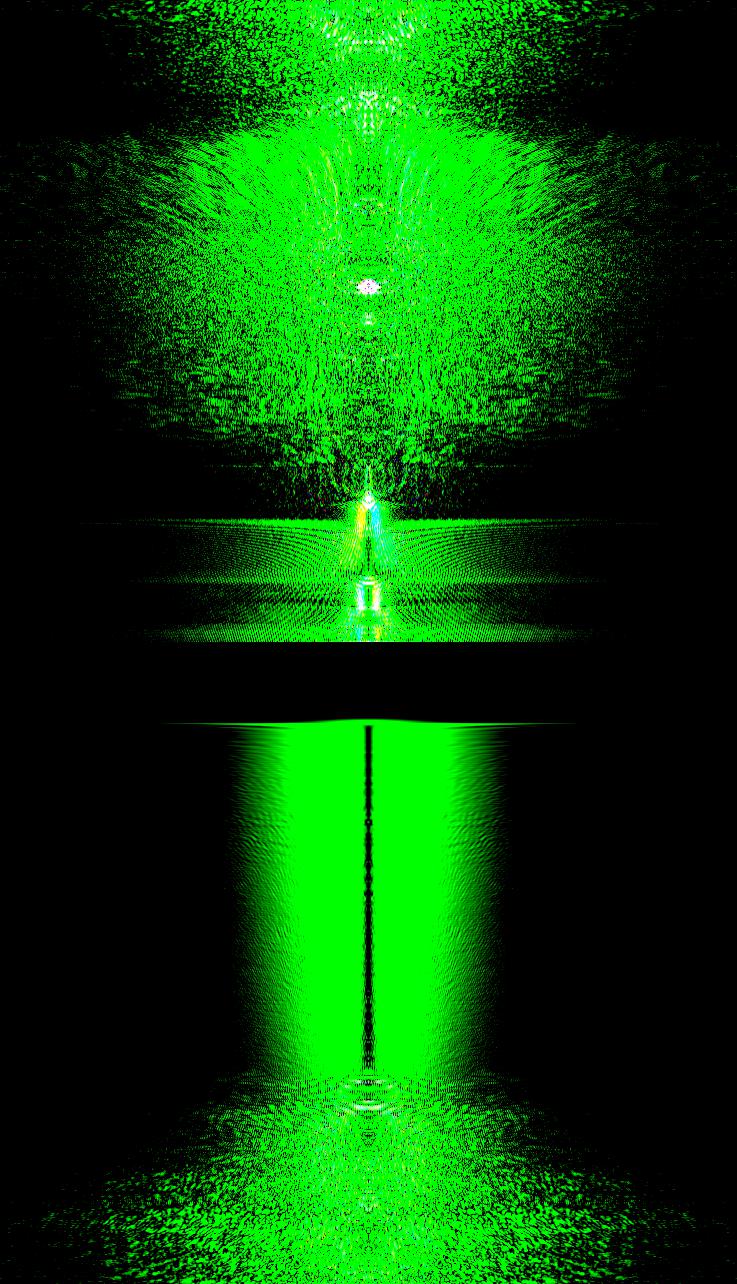

Original Human and its Fourier transform

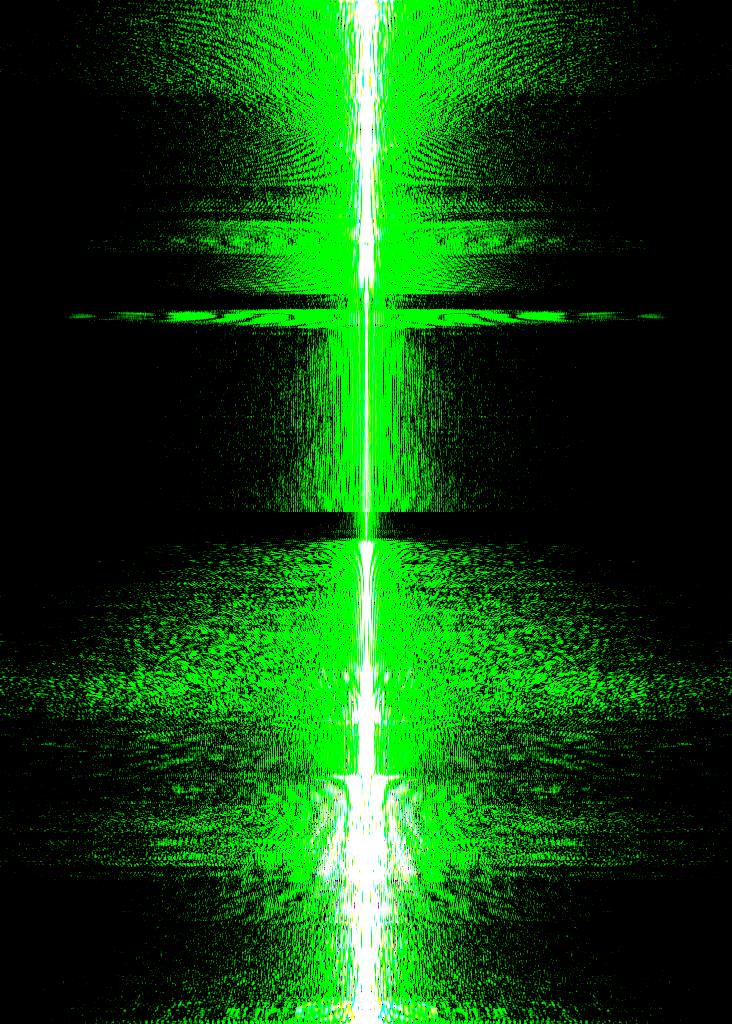

Low-pass (Gaussian) filtered Human and its Fourier transform:

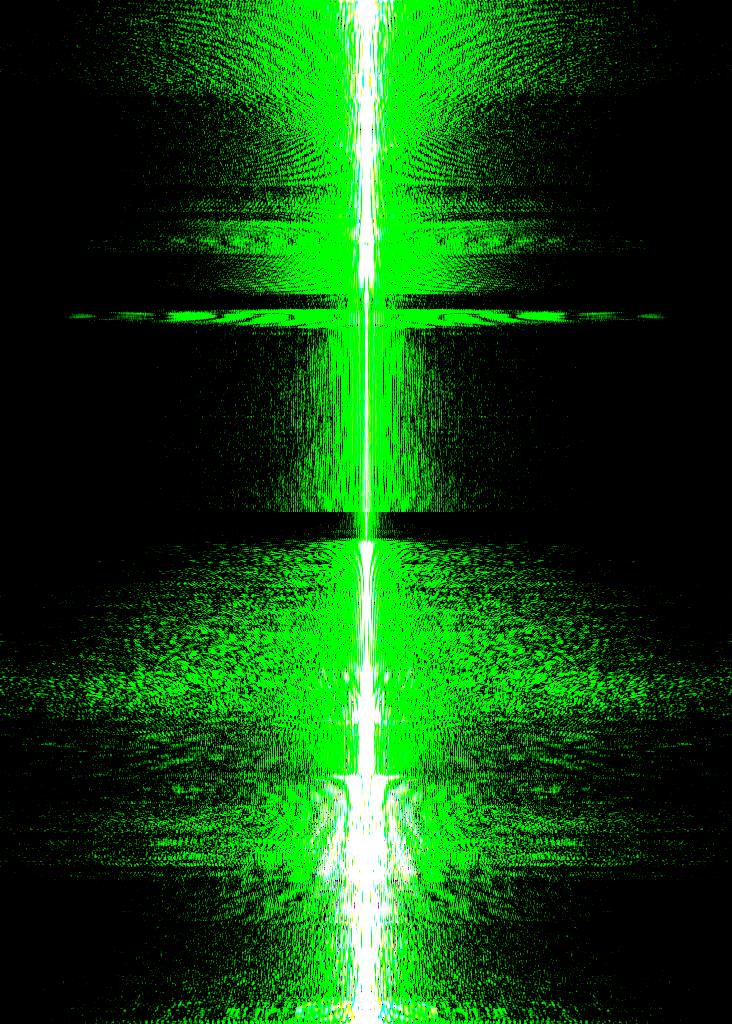

Hybridized and its Fourier transform:

I made three other hybrid images. Two came out well and one didn't.

Oski and jaguar with sigmas 4 and 1 respectively:

Daniel Radcliffe and Meme with sigmas 2 and 1 respectively:

This one didn't come out so well: a random person and a beagle:

Multiresolution Blending:

To do blending, I used Gaussian and Laplacian stacks. A Gaussian stack starts with an initial image, and each successive level applies an exponentially larger Gaussian blur to it. In a Laplacian stack, each level is the difference between the Gaussian-filtered image at the prior level and the Gaussian-filtered image at the same level. Note that the first image in the Laplacian stack is therefore the difference between the original image and the Gaussian-filtered image at the first level.

This is the Gaussian and Laplacian stack for Lincoln. The sigmas of the Gaussian kernels at the successive levels are 1, 2, 4, 8, and 16.
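
The stacks can be sketched as follows. This is a minimal sketch with hypothetical helpers `gaussian_blur` and `build_stacks`; it assumes a grayscale float image and that every Gaussian level blurs the original image directly with sigma 1, 2, 4, 8, 16 (the writeup doesn't say whether the blur is applied to the original or to the previous level). As in a stack (unlike a pyramid), nothing is downsampled.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D array, replicating edge pixels."""
    radius = int(3 * sigma)  # kernel size 2*3*sigma + 1
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img.astype(float), radius, mode="edge")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    return np.apply_along_axis(conv, 0, np.apply_along_axis(conv, 1, padded))


def build_stacks(img, sigmas=(1, 2, 4, 8, 16)):
    """Return (gaussian, laplacian) stacks; every level keeps img's shape."""
    gaussian = [img.astype(float)] + [gaussian_blur(img, s) for s in sigmas]
    # Laplacian level i = Gaussian at the prior level minus Gaussian at this level
    laplacian = [gaussian[i] - gaussian[i + 1] for i in range(len(sigmas))]
    return gaussian, laplacian


rng = np.random.default_rng(1)
img = rng.random((32, 32))
g, l = build_stacks(img)
reconstructed = sum(l) + g[-1]  # the differences telescope back to img
```

Because consecutive levels telescope, the five Laplacian levels plus the last Gaussian level add back up to the original image; this is why summing a blended stack yields a complete image.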

Now for the blending part. We have an apple and an orange, and we compute their Gaussian and Laplacian stacks, which must all have the same number of levels. I build a new stack by iterating over all of the stack levels, setting each level to gaussian(mask)*laplacian(apple) + (1-gaussian(mask))*laplacian(orange). After all 5 levels, I add a 6th level: the combined image passed through a Gaussian filter, gaussian(mask * originalAppleImage + (1-mask) * originalOrangeImage). Once these are calculated, I add all of them together to get the blended image.
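
The blending rule above can be sketched as below. This is a minimal sketch for grayscale float images and a mask in [0, 1]; `blend` and its helpers are hypothetical. Two details are assumptions because the writeup doesn't specify them: Laplacian level i is weighted by the mask blurred with that level's sigma, and the 6th level uses the coarsest sigma (16).

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D array, replicating edge pixels."""
    radius = int(3 * sigma)  # kernel size 2*3*sigma + 1
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img.astype(float), radius, mode="edge")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    return np.apply_along_axis(conv, 0, np.apply_along_axis(conv, 1, padded))


def build_stacks(img, sigmas):
    """Gaussian stack (original + one level per sigma) and Laplacian stack."""
    gaussian = [img.astype(float)] + [gaussian_blur(img, s) for s in sigmas]
    laplacian = [gaussian[i] - gaussian[i + 1] for i in range(len(sigmas))]
    return gaussian, laplacian


def blend(apple, orange, mask, sigmas=(1, 2, 4, 8, 16)):
    """Multiresolution blend: mask-weighted Laplacian levels plus a blurred base."""
    _, lap_a = build_stacks(apple, sigmas)
    _, lap_o = build_stacks(orange, sigmas)
    gauss_m, _ = build_stacks(mask, sigmas)
    levels = []
    for i in range(len(sigmas)):
        m = gauss_m[i + 1]  # mask blurred with this level's sigma (assumption)
        levels.append(m * lap_a[i] + (1 - m) * lap_o[i])
    # 6th level: the hard composite passed through the coarsest Gaussian
    levels.append(gaussian_blur(mask * apple + (1 - mask) * orange, sigmas[-1]))
    return sum(levels)


rng = np.random.default_rng(2)
apple, orange = rng.random((32, 32)), rng.random((32, 32))  # stand-in images
mask = np.zeros((32, 32))
mask[:, :16] = 1.0  # left half from the apple, right half from the orange
oraple = blend(apple, orange, mask)
```

As a sanity check, an all-ones mask returns the apple unchanged and an all-zeros mask returns the orange, since the Laplacian levels and the blurred base telescope back to the original image.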

Gaussian and Laplacian stack for apple:

Gaussian and Laplacian stack for orange:

This is the stack that I created and then added to get the resulting blended image:

Resulting blended image once the above images are added:

Result of blending a basketball and a tennis ball:

Result of blending a duck and a pool:

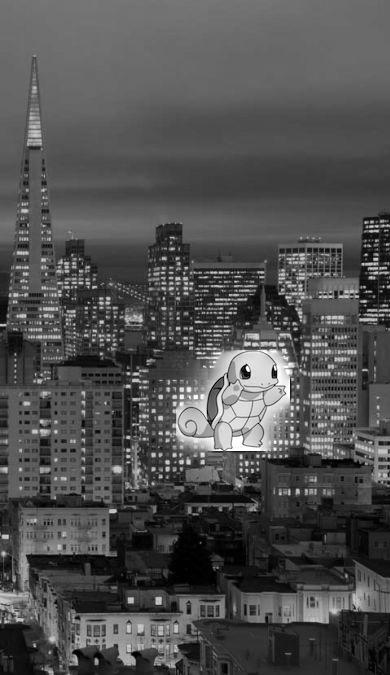

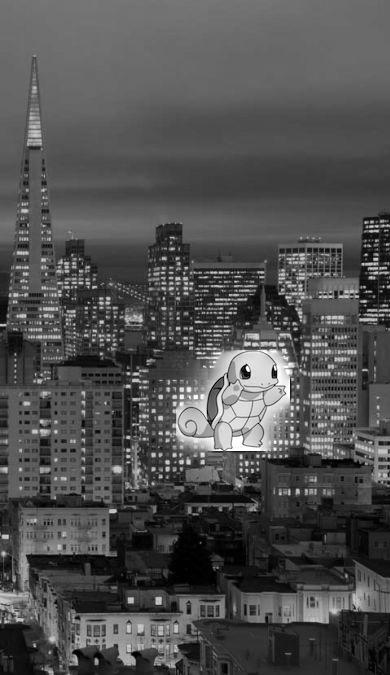

This one didn't turn out so well. This is the result of blending SF and Squirtle:

Part 2

Part 2 of the project uses Poisson blending: given a source image, a target image, and a mask, it blends the masked part of the source image seamlessly into the target image.

I implement Poisson blending by solving a least-squares problem: finding the vector x that best satisfies Ax = b. A is a sparse matrix of pixel coefficients, x is the final image we want, and b contains the gradients we want the final image to have.

First, I make sure that all 3 images (source, target, and mask) have the same height and width; in particular, the source image is padded so that its dimensions match those of the target image. I solve each of the 3 color channels separately.

I initialize A as a zero matrix with dimensions (2 * number of pixels in the mask region, total number of pixels) and b as a zero matrix with dimensions (2 * number of pixels in the mask region, 1).

Following the equation given in the project spec, I loop over all pixels in the masked region. For each pixel's top and left neighbors ((x-1,y) and (x,y-1)), the desired gradient (the source-image difference between the pixel and that neighbor) is added to b, and the corresponding row of A gets the coefficients that compute this gradient from x.

If the neighbor lies outside the masked region, its target-image value is added to that entry of b, and the row of A contains only the coefficient for the masked pixel itself (an identity row for that pixel).
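
Written out, these rows are the terms of the standard Poisson blending objective. This is a sketch, assuming S is the masked region, s and t are the source and target intensities, v holds the unknown values in S, and N_i contains only the top and left neighbors of pixel i, as described above; whether the project spec states it in exactly this form is an assumption:

```latex
\mathbf{v} = \arg\min_{\mathbf{v}}
  \sum_{i \in S} \sum_{j \in N_i \cap S} \big( (v_i - v_j) - (s_i - s_j) \big)^2
  + \sum_{i \in S} \sum_{j \in N_i \setminus S} \big( (v_i - t_j) - (s_i - s_j) \big)^2
```

Each term of the first sum becomes a row of A with +1 at v_i and -1 at v_j and the entry s_i - s_j in b; each term of the second sum becomes a row with a single +1 at v_i and the entry s_i - s_j + t_j in b, since the known target value t_j moves to the right-hand side.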

Once x is found by running lsqr on Ax = b for the current color channel, I build the result one pixel at a time: a pixel outside the masked region takes the target image's value, and a pixel inside the masked region takes the corresponding value from the solved vector x (v).
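
The per-channel solve can be sketched as follows, under stated assumptions: `poisson_blend_channel` is a hypothetical helper, the images are float arrays already padded to the same shape, `mask` is boolean, and only masked pixels are unknowns (rather than every pixel, as in the A described above). It uses a dense `np.linalg.lstsq` so it runs with NumPy alone; that only scales to small images, and for real photos the same rows would go into a `scipy.sparse` matrix solved with `scipy.sparse.linalg.lsqr`, as the writeup does.

```python
import numpy as np


def poisson_blend_channel(source, target, mask):
    """Blend one channel of `source` into `target` inside boolean `mask`."""
    h, w = target.shape
    ys, xs = np.nonzero(mask)
    index = -np.ones((h, w), dtype=int)
    index[ys, xs] = np.arange(len(ys))  # variable number of each masked pixel
    rows, b = [], []
    for y, x in zip(ys, xs):
        for ny, nx in ((y - 1, x), (y, x - 1)):  # top and left neighbors
            if ny < 0 or nx < 0:
                continue  # neighbor falls off the image
            row = np.zeros(len(ys))
            row[index[y, x]] = 1.0
            grad = source[y, x] - source[ny, nx]  # source gradient to preserve
            if mask[ny, nx]:
                row[index[ny, nx]] = -1.0  # both pixels are unknowns
                b.append(grad)
            else:
                b.append(grad + target[ny, nx])  # known target value moves to b
            rows.append(row)
    v, *_ = np.linalg.lstsq(np.array(rows), np.array(b), rcond=None)
    result = target.astype(float).copy()  # outside the mask: target pixels
    result[ys, xs] = v                    # inside the mask: solved values
    return result


rng = np.random.default_rng(3)
target = rng.random((8, 8))
mask = np.zeros((8, 8), dtype=bool)
mask[2:6, 2:6] = True
blended = poisson_blend_channel(target + 5.0, target, mask)
```

A color image is handled by solving each channel separately, e.g. `np.dstack([poisson_blend_channel(src[..., c], tgt[..., c], mask) for c in range(3)])`. Because only source gradients enter b, a source that differs from the target by a constant offset (as in the example) reproduces the target exactly inside the mask.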

The toy problem simply computes an image's x and y gradients and then uses least squares to recover the original image from them.
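
A minimal sketch of the toy reconstruction, assuming a small grayscale float image and a hypothetical helper `reconstruct_from_gradients`. Gradients determine an image only up to an additive constant, so the sketch adds one extra equation pinning the top-left pixel to its original value; that constraint is an assumption, as the writeup doesn't mention it. A dense solver is used for brevity.

```python
import numpy as np


def reconstruct_from_gradients(s):
    """Recover s from its x/y gradients plus its top-left pixel (least squares)."""
    h, w = s.shape
    idx = np.arange(h * w).reshape(h, w)  # variable index of each pixel
    rows, b = [], []

    def add_equation(plus, minus, value):
        row = np.zeros(h * w)
        row[plus] = 1.0
        if minus is not None:
            row[minus] = -1.0
        rows.append(row)
        b.append(value)

    for y in range(h):
        for x in range(w - 1):  # x-gradients
            add_equation(idx[y, x + 1], idx[y, x], s[y, x + 1] - s[y, x])
    for y in range(h - 1):
        for x in range(w):  # y-gradients
            add_equation(idx[y + 1, x], idx[y, x], s[y + 1, x] - s[y, x])
    add_equation(idx[0, 0], None, s[0, 0])  # pin one intensity
    v, *_ = np.linalg.lstsq(np.array(rows), np.array(b), rcond=None)
    return v.reshape(h, w)


rng = np.random.default_rng(4)
toy = rng.random((6, 5))
recovered = reconstruct_from_gradients(toy)
```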

On the left is the original toy problem image, and on the right is the recovered image:

Using the given images: the snowy target image, the padded penguin image, the mask, and the result:

My favorite blending result: the forest target image, the padded fish image, the mask, and the result:

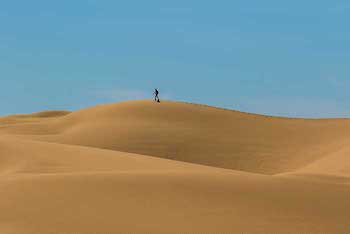

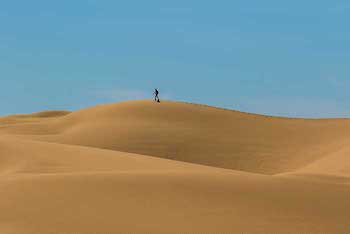

Another one: the desert target image, the padded drone image, the mask, and the result:

Bad result: the water target image, the padded dog image, the mask, and the result:

This was a bad result because the backgrounds of the two images were quite different: the dog's background was a darker blue, which clashed with the light-blue target. The target image also had too many ripples, so there was no consistent, solid background to blend into.

This is a pair of images that I also blended above with Laplacian pyramid blending:

These are the original images of SF and Squirtle:

This is the mask, the result above from Laplacian blending, and the result from Poisson blending:

The Laplacian pyramid blending approach definitely worked better here, though its result still wasn't great. Poisson blending did worse because of the source image's white background, which distorted the gradients near the mask boundary. In addition, the SF picture was very busy, with many colors inside the masked region and along its border, which further hurt the result. Laplacian pyramid blending works best when the backgrounds of the source and target images are very different, and when the target image contains many different colors. Poisson blending works best when the backgrounds of the source and target images are very similar (which is why the penguin and mountain example worked so well), because the target pixels along the border of the masked region then interfere less with the gradients.
