CS 194-26 Project 3: Fun with Frequencies and Gradients

Name: Andrew Oeung

Instructional Account: cs194-26-adz

Objective:

This project uses frequency filtering, Gaussian/Laplacian stacks, multiresolution blending, and Poisson blending to blend and edit images. Hybrid images are static images whose interpretation changes depending on how you view them. The first part of the assignment focuses on hybrid images that look different up close than from far away, and then moves on to blending two images together as seamlessly as possible. The choice of images is extremely important, because it largely determines how successful the result is.

Part 1.1: Warmup

I sharpened the cover art of a video game called Ys 8: Lacrimosa of Dana. I used the getGaussianKernel and filter2D functions from the cv2 library to blur the image, took the difference between the original and the blurred image as the detail, and added that detail on top of the original image. The game's title stands out most prominently in the detail image.
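The unsharp-mask idea above can be sketched as follows, assuming a grayscale float image with values in [0, 1]. To stay self-contained, this sketch builds the Gaussian kernel with NumPy instead of calling cv2.getGaussianKernel and cv2.filter2D. The helper names (`gaussian_kernel_1d`, `gaussian_blur`, `sharpen`) and the parameters `sigma` and `alpha` are hypothetical and do not come from the project code.

```python
import numpy as np

# Hypothetical helpers for illustration; not the project's actual code.

def gaussian_kernel_1d(sigma):
    """Normalized 1D Gaussian kernel, truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2D array; borders are padded by edge replication."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    # Filter every row, then every column; "valid" mode trims the padding back off.
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")

def sharpen(img, sigma=2.0, alpha=1.0):
    """Unsharp masking: add alpha * (img - blur(img)) back onto img."""
    blurred = gaussian_blur(img, sigma)
    detail = img - blurred                      # high-frequency detail
    sharpened = np.clip(img + alpha * detail, 0.0, 1.0)
    return sharpened, detail

# Usage: a vertical step edge gets darker on its dark side and brighter on its bright side.
step = np.full((32, 32), 0.25)
step[:, 16:] = 0.75
sharp, detail = sharpen(step, sigma=2.0, alpha=1.0)
```

Raising `alpha` exaggerates the added detail. The clip keeps the result a valid image, at the cost of flattening any overshoot beyond [0, 1].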

Unsharpened image

Sharpened image

Detail

Part 1.2: Hybrid Images

I created hybrid images by applying a low-pass filter to one image and a high-pass filter to another, then adding the two results together. The image pairs I chose were Goku and Vegeta, two dogs, and a pair of brothers named Adam "Armada" Lindgren and Andreas "Android" Lindgren, who are professional Super Smash Brothers Melee players (Armada and Android are their aliases). For the best results, I tried to choose images with similar stances and facial characteristics. My failure was the two-dog hybrid image, because the white dog does not contrast well with the black dog. My favorite is the Goku and Vegeta hybrid image: the two have similar facial and body structure, so they blend well, although their hair is hard to tell apart because it is exactly the same color.
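The low-pass plus high-pass construction can be sketched as follows, assuming two aligned grayscale float images of the same size with values in [0, 1]. The blur is a NumPy stand-in for the cv2 Gaussian filtering used in Part 1.1. The function names and the cutoff values `sigma_low` and `sigma_high` are hypothetical, and in practice the cutoffs are tuned for each image pair.

```python
import numpy as np

# Hypothetical helpers for illustration; not the project's actual code.

def gaussian_blur(img, sigma):
    """Separable Gaussian blur (kernel truncated at 3 sigma, edge padding)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    k /= k.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")

def hybrid_image(im_low, im_high, sigma_low=6.0, sigma_high=3.0):
    """Keep the coarse structure of im_low and the fine detail of im_high."""
    low = gaussian_blur(im_low, sigma_low)                # low-pass
    high = im_high - gaussian_blur(im_high, sigma_high)   # high-pass
    return np.clip(low + high, 0.0, 1.0)
```

With the pairs above, the low-frequency image (for example Vegeta) would be passed as `im_low` and the high-frequency image (Goku) as `im_high`. Up close the high frequencies dominate; from a distance only the blurred image remains visible.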

High Freq. Image: Andreas Lindgren

Low Freq. Image: Adam Lindgren

Hybrid Results

High Freq. Image: Goku

Low Freq. Image: Vegeta

Hybrid Results (Favorite)

High Freq. Image: Black Dog

Low Freq. Image: White Dog

Hybrid Results

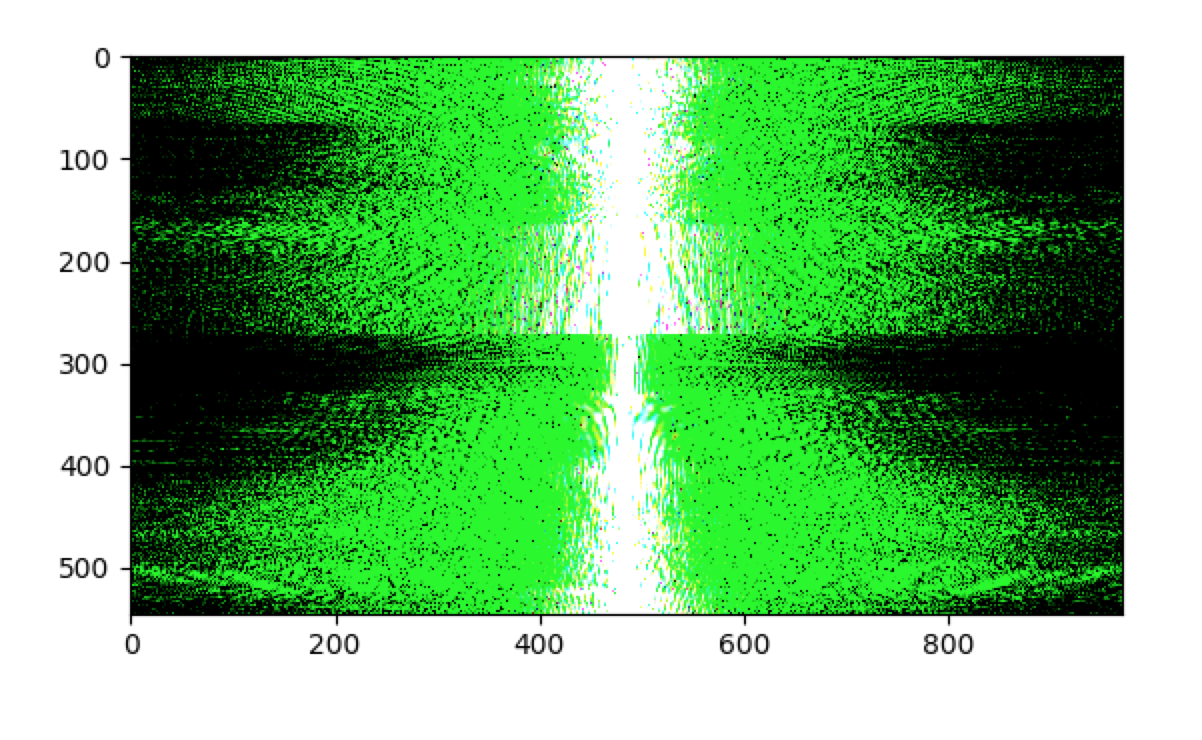

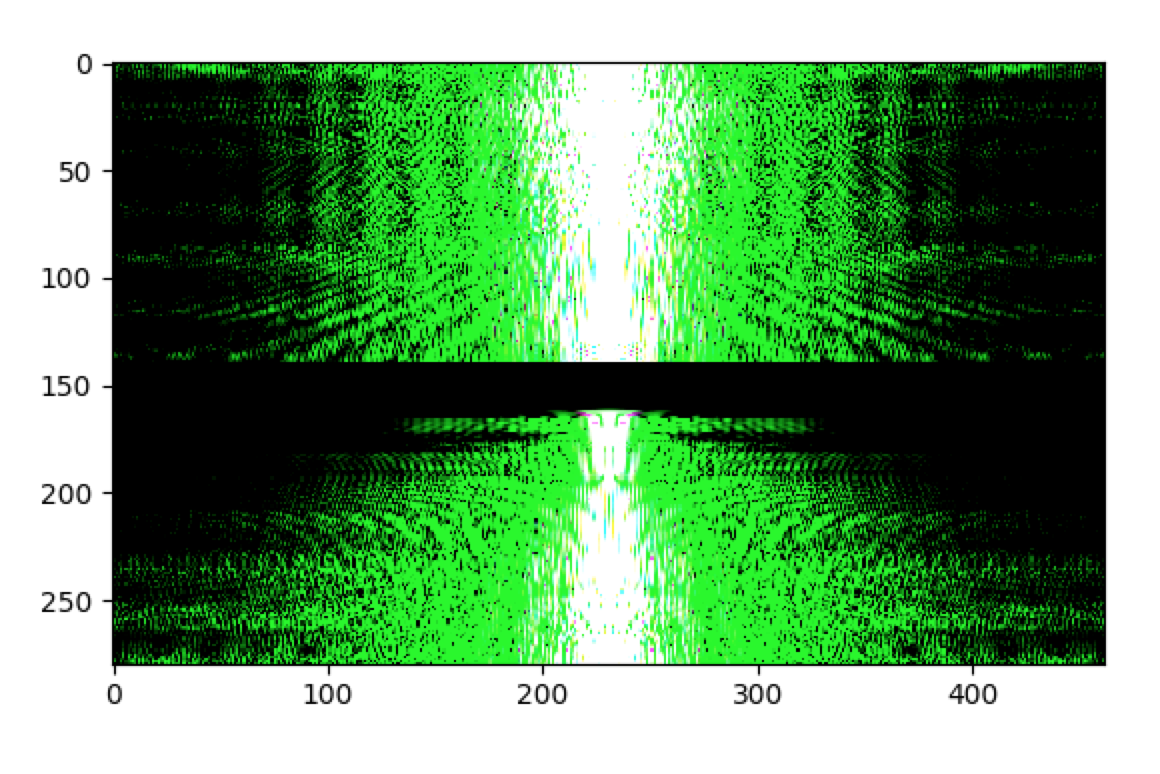

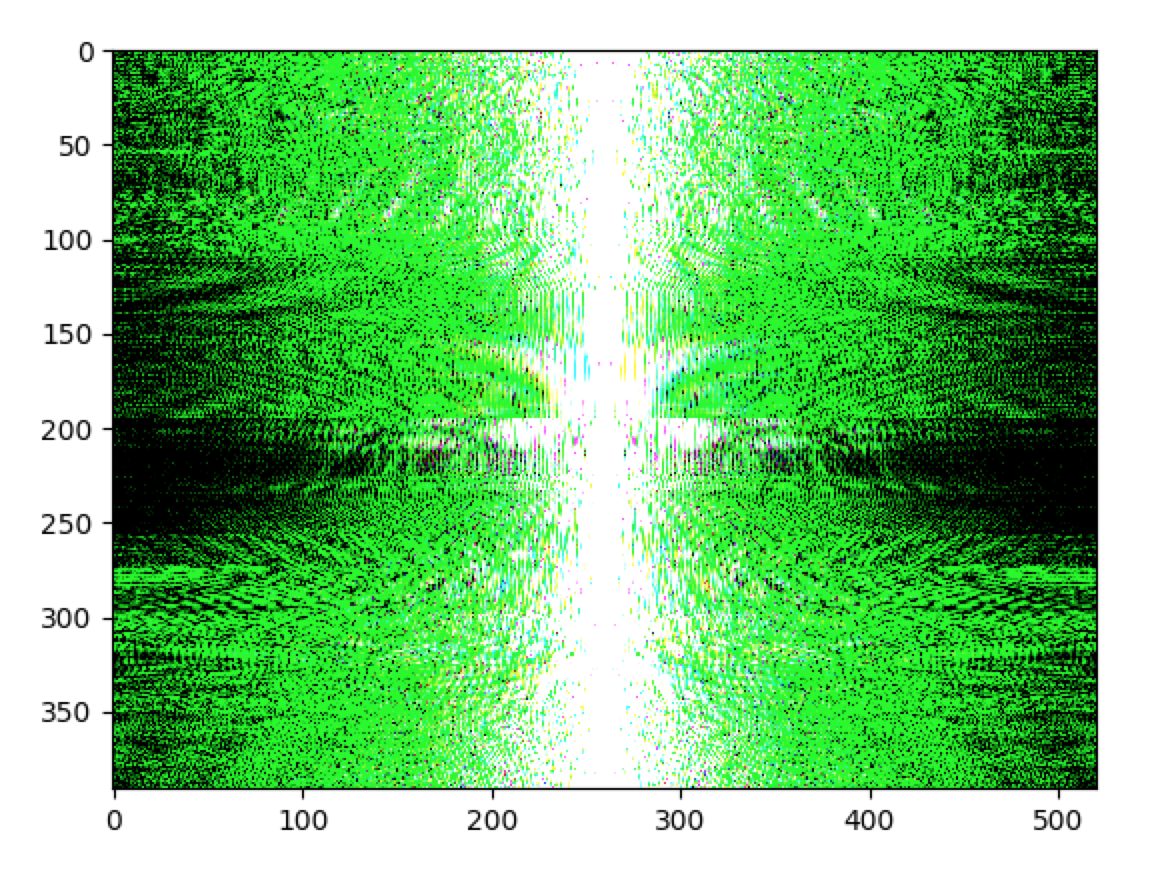

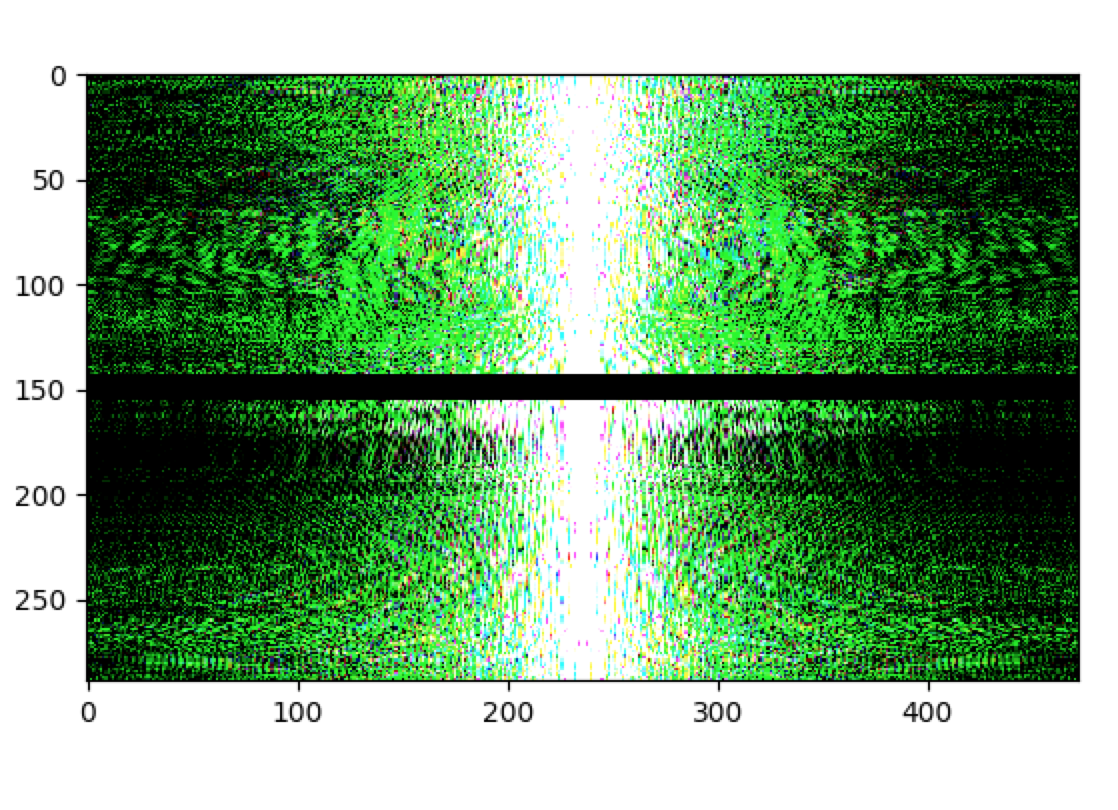

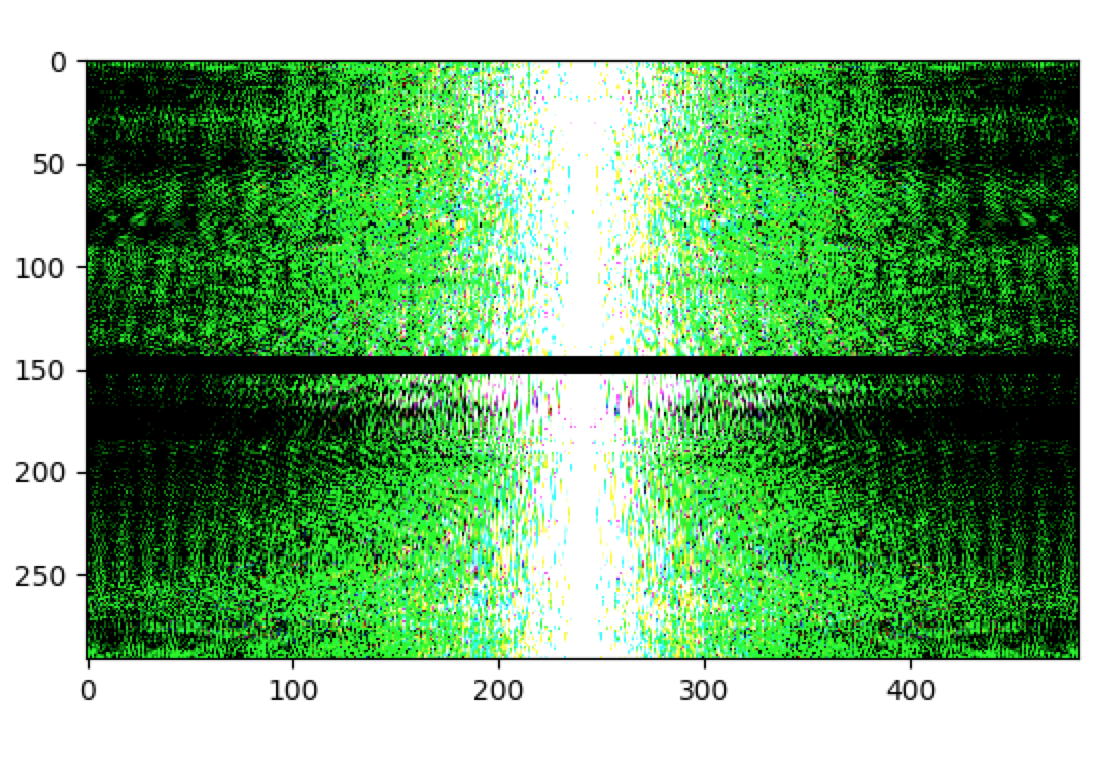

Goku Frequencies

Goku Frequencies (Filtered)

Vegeta Frequencies

Vegeta Frequencies (Filtered)

Hybrid Frequencies
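Frequency plots like these are typically produced by taking the log magnitude of the centered 2D Fourier transform of a grayscale image. The following is a minimal NumPy sketch, assuming a 2D float array. The function name `log_spectrum` and the small `eps` offset, which avoids taking log(0), are hypothetical choices and are not taken from the project code.

```python
import numpy as np

# Hypothetical helper for illustration; not the project's actual code.

def log_spectrum(gray, eps=1e-8):
    """Log magnitude of the 2D FFT, with the zero frequency shifted to the center."""
    spectrum = np.fft.fftshift(np.fft.fft2(gray))
    return np.log(np.abs(spectrum) + eps)
```

After low-pass filtering, the plot should show less energy away from the bright center. After high-pass filtering, the center (the lowest frequencies) should be suppressed instead.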

Part 1.3: Gaussian and Laplacian Stacks

Here, I successively apply a Gaussian filter to an image and store each result in a stack. Each Laplacian level is the difference between the previous Gaussian level and the current one: with I as the original image, the first level is I - G(I), the second level is G(I) - G(G(I)), and so on. The successive Gaussian filters use sigma = 2, then sigma = 4, then sigma = 8, then sigma = 16, then sigma = 32. The Laplacian Stack images are normalized.
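The stacks described above can be sketched as follows, assuming a grayscale float image. Each Gaussian level blurs the previous level with the next sigma in (2, 4, 8, 16, 32), and each Laplacian level is the previous Gaussian level minus the current one. No downsampling happens, so every level keeps the input size. The function names and the min-max normalization used for display are hypothetical.

```python
import numpy as np

# Hypothetical helpers for illustration; not the project's actual code.

def gaussian_blur(img, sigma):
    """Separable Gaussian blur (kernel truncated at 3 sigma, edge padding)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    k /= k.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")

def build_stacks(img, sigmas=(2, 4, 8, 16, 32)):
    """Return (gaussian, laplacian) stacks with one level per sigma."""
    gaussian, laplacian = [], []
    prev = img
    for sigma in sigmas:
        cur = gaussian_blur(prev, sigma)   # blur the previous level, not the original
        gaussian.append(cur)
        laplacian.append(prev - cur)       # frequency band removed at this level
        prev = cur
    return gaussian, laplacian

def normalize_for_display(level):
    """Min-max scale a Laplacian level to [0, 1]; a constant level maps to 0."""
    lo, hi = level.min(), level.max()
    return np.zeros_like(level) if hi == lo else (level - lo) / (hi - lo)
```

Because the differences telescope, summing all Laplacian levels gives I minus the last Gaussian level. Adding that last Gaussian level back recovers the original image exactly.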

Lincoln

Gaussian (Level 1)

Gaussian (Level 2)

Gaussian (Level 3)

Gaussian (Level 4)

Gaussian (Level 5)

Laplacian (Level 1)

Laplacian (Level 2)

Laplacian (Level 3)

Laplacian (Level 4)

Laplacian (Level 5)

Mona Lisa

Gaussian (Level 1)

Gaussian (Level 2)

Gaussian (Level 3)

Gaussian (Level 4)

Gaussian (Level 5)

Laplacian (Level 1)

Laplacian (Level 2)

Laplacian (Level 3)

Laplacian (Level 4)

Laplacian (Level 5)

Favorite: Gaussian and Laplacian Stacks

I also created Gaussian and Laplacian Stacks for my favorite hybrid image from Part 1.2, Goku and Vegeta.

Goku & Vegeta

Gaussian (Level 1)

Gaussian (Level 2)

Gaussian (Level 3)

Gaussian (Level 4)

Gaussian (Level 5)

Laplacian (Level 1)

Laplacian (Level 2)

Laplacian (Level 3)

Laplacian (Level 4)

Laplacian (Level 5)

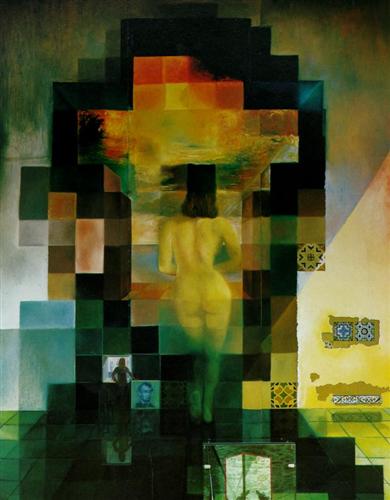

Part 1.4: Multiresolution Blending

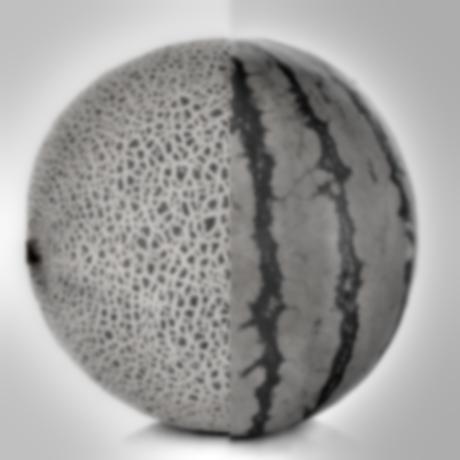

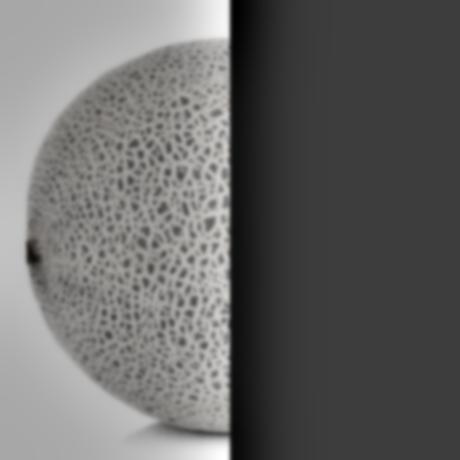

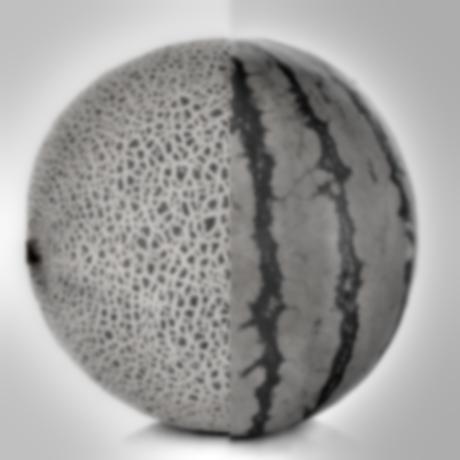

In this part, I blended two images together using my Gaussian and Laplacian Stacks. I chose these image pairs: an apple and an orange, a cantaloupe and a watermelon, and a honeydew and a nectarine. I blended the honeydew and nectarine with an irregular mask, using the provided MATLAB code, and that result is clearly a failure. You can see the transition between the honeydew and the nectarine, and there is a discontinuity between them on the left side of the combined fruit. My favorite result is the cantaloupe and watermelon, which looks rather natural because the two fruits have similar shapes and coloring.
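Multiresolution blending with stacks can be sketched as follows, assuming two aligned grayscale float images in [0, 1] and a float mask that is 1 where the first image should show. Each Laplacian band is blended with a progressively blurrier copy of the mask, and the coarsest Gaussian levels are blended the same way and added back. The function names, the sigma schedule (borrowed from Part 1.3), and the pairing of mask level i with Laplacian level i are hypothetical design choices.

```python
import numpy as np

# Hypothetical helpers for illustration; not the project's actual code.

def gaussian_blur(img, sigma):
    """Separable Gaussian blur (kernel truncated at 3 sigma, edge padding)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    k /= k.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")

def stacks(img, sigmas):
    """Gaussian and Laplacian stacks as described in Part 1.3 (no downsampling)."""
    gaussian, laplacian, prev = [], [], img
    for sigma in sigmas:
        cur = gaussian_blur(prev, sigma)
        gaussian.append(cur)
        laplacian.append(prev - cur)
        prev = cur
    return gaussian, laplacian

def multires_blend(im_a, im_b, mask, sigmas=(2, 4, 8, 16, 32)):
    """Show im_a where mask is 1 and im_b where mask is 0, blending each band separately."""
    ga, la = stacks(im_a, sigmas)
    gb, lb = stacks(im_b, sigmas)
    gm, _ = stacks(mask.astype(float), sigmas)
    # Coarsest residual: weighted by the blurriest mask level.
    out = gm[-1] * ga[-1] + (1 - gm[-1]) * gb[-1]
    # Level i of the mask stack weights level i of each Laplacian stack,
    # so coarse bands get wide, smooth seams and fine bands get narrow ones.
    for m, a, b in zip(gm, la, lb):
        out += m * a + (1 - m) * b
    return np.clip(out, 0.0, 1.0)
```

An all-ones mask returns the first image unchanged, and an all-zeros mask returns the second. For color images, the same function can be applied to each channel.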

Orange

Apple

Blended Results

Cantaloupe

Watermelon

Blended Results (Favorite)

Honeydew

Nectarine

Blended Results

Favorite: Laplacian Stacks

Here, I apply my Laplacian Stack to the masked images from my favorite result. The first two images below show the sum of all Laplacian levels in the Laplacian Stack for each masked image. The individual Laplacian Stack levels follow them.

Masked Cantaloupe (All Laplacian Stack Levels Summed Up)

Masked Watermelon (All Laplacian Stack Levels Summed Up)

Laplacian (Level 1 - Cantaloupe)

Laplacian (Level 2 - Cantaloupe)

Laplacian (Level 3 - Cantaloupe)

Laplacian (Level 4 - Cantaloupe)

Laplacian (Level 5 - Cantaloupe)

Laplacian (Level 1 - Watermelon)

Laplacian (Level 2 - Watermelon)

Laplacian (Level 3 - Watermelon)

Laplacian (Level 4 - Watermelon)

Laplacian (Level 5 - Watermelon)

Part 2: Gradient Domain Fusion

The purpose of this part is to show, through Poisson blending, that humans are more sensitive to the gradients of an image (relative changes between neighboring pixels) than to its absolute pixel intensities. In other words, the pixel gradient matters more than the absolute pixel value. We can blend two images by placing a portion of a source image into a target image and solving for new pixel values whose gradients match the gradients of the source. These gradient constraints, together with the target pixels bordering the pasted region, form a system of linear equations that we solve with least squares so that the new object blends properly into the target image.
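For reference, the least squares problem behind these constraints is commonly written as the objective below. This is the standard Poisson blending formulation, given here as a sketch rather than the project's exact setup. S is the set of pixels in the source cutout, N_i is the set of four neighbors of pixel i, s is the source image, t is the target image, and v holds the new pixel values being solved for.

$$
\mathbf{v} = \arg\min_{\mathbf{v}} \sum_{i \in S,\; j \in N_i \cap S} \big((v_i - v_j) - (s_i - s_j)\big)^2 \;+\; \sum_{i \in S,\; j \in N_i \setminus S} \big((v_i - t_j) - (s_i - s_j)\big)^2
$$

The first sum asks the gradients inside the cutout to match the source's gradients. The second sum handles neighbors that fall outside the cutout, where the unknown v_j is replaced by the known target pixel t_j. Because every term is linear in v, stacking one equation per (i, j) pair gives an overdetermined system A v ≈ b that a least squares solver can handle.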

Part 2.1: Toy Problem

I reconstructed the toy image below from its gradients using a least squares solver. The result is self-explanatory: the reconstruction looks like the original.
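Gradient-domain reconstruction on a small grayscale image can be sketched as follows. This sketch assumes the usual toy-problem setup: match every x and y gradient of the source, and pin one pixel (here the top-left) to its source value so the solution is unique, since gradients alone determine an image only up to a constant. It uses a dense `np.linalg.lstsq` for clarity. A full-size image would need a sparse matrix and a sparse solver such as `scipy.sparse.linalg.lsqr`. The function name is hypothetical.

```python
import numpy as np

# Hypothetical helper for illustration; not the project's actual code.

def reconstruct_from_gradients(s):
    """Recover an image from its x/y gradients plus its top-left pixel via least squares."""
    h, w = s.shape
    idx = np.arange(h * w).reshape(h, w)      # variable index of each pixel (row y, column x)
    rows, b = [], []
    for y in range(h):
        for x in range(w):
            if x + 1 < w:   # x-gradient: v(x+1, y) - v(x, y) = s(x+1, y) - s(x, y)
                r = np.zeros(h * w)
                r[idx[y, x + 1]], r[idx[y, x]] = 1, -1
                rows.append(r)
                b.append(s[y, x + 1] - s[y, x])
            if y + 1 < h:   # y-gradient: v(x, y+1) - v(x, y) = s(x, y+1) - s(x, y)
                r = np.zeros(h * w)
                r[idx[y + 1, x]], r[idx[y, x]] = 1, -1
                rows.append(r)
                b.append(s[y + 1, x] - s[y, x])
    # Pin the top-left pixel so the constant offset is determined.
    r = np.zeros(h * w)
    r[idx[0, 0]] = 1
    rows.append(r)
    b.append(s[0, 0])
    v, *_ = np.linalg.lstsq(np.array(rows), np.array(b), rcond=None)
    return v.reshape(h, w)
```

Because the gradient equations connect every pixel and one pixel is pinned, the system has a unique solution, and it reproduces the input up to floating-point error.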

Toy Image

Reconstructed Toy Image

Part 2.2: Poisson Blending

My favorite result was blending a plane into the sky over Beijing. Here are the source and target images, along with a side-by-side comparison of a crudely pasted plane and the gradient-fused plane. The code computes the pixel gradients of the source cutout (here, the plane) and uses least squares to solve for the pixel values that best preserve those gradients once the cutout is placed in the target image, so that it blends as seamlessly as possible. Writing v for the new pixel values inside the cutout and s for the source image, we want the gradients of v to match those of s, so we solve the following equations:

$$
\begin{aligned}
v(x, y) - v(x-1, y) &= s(x, y) - s(x-1, y) \\
v(x, y) - v(x+1, y) &= s(x, y) - s(x+1, y) \\
v(x, y) - v(x, y+1) &= s(x, y) - s(x, y+1) \\
v(x, y) - v(x, y-1) &= s(x, y) - s(x, y-1)
\end{aligned}
$$
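These constraints can be sketched for one grayscale channel as follows. The sketch assumes that the source has already been aligned with the target and that `mask` is a boolean array marking the cutout. When a neighbor lies outside the cutout, its unknown v is replaced by the known target pixel t, and this is what anchors the blend to the surrounding colors. The function name is hypothetical. The dense solver only suits small regions; a real photo would need a sparse matrix (for example `scipy.sparse.lil_matrix` with `scipy.sparse.linalg.lsqr`). For color images, run it once per channel.

```python
import numpy as np

# Hypothetical helper for illustration; not the project's actual code.

def poisson_blend(source, target, mask):
    """Solve for pixels inside `mask` whose gradients match `source`, bordered by `target`."""
    ys, xs = np.nonzero(mask)
    n = len(ys)
    var = -np.ones(mask.shape, dtype=int)
    var[ys, xs] = np.arange(n)                       # variable index of each masked pixel
    rows, b = [], []
    for y, x in zip(ys, xs):
        for dy, dx in ((0, -1), (0, 1), (-1, 0), (1, 0)):   # 4-neighbors
            ny, nx = y + dy, x + dx
            if not (0 <= ny < mask.shape[0] and 0 <= nx < mask.shape[1]):
                continue                             # skip neighbors off the image
            r = np.zeros(n)
            r[var[y, x]] = 1
            grad = source[y, x] - source[ny, nx]
            if mask[ny, nx]:                         # v_i - v_j = s_i - s_j
                r[var[ny, nx]] = -1
                b.append(grad)
            else:                                    # v_i - t_j = s_i - s_j
                b.append(target[ny, nx] + grad)
            rows.append(r)
    v, *_ = np.linalg.lstsq(np.array(rows), np.array(b), rcond=None)
    out = target.astype(float)                       # astype returns a copy
    out[ys, xs] = v
    return out
```

If the source is the target plus a constant offset, its gradients are identical to the target's, and the blend returns the target unchanged. This is a quick sanity check that only gradients, not absolute intensities, carry over from the source.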

Favorite: Beijing + Plane

Plane

Beijing

Pasted version of Beijing + Plane

Poisson version of Beijing + Plane

Here are some other examples of Poisson blending in action. My clear failure was the hand + eggrolls blend. It does not work well because the hand comes from an anime (Steins;Gate), while the eggrolls are from real life. The hand image is also far too bright, which ruins the coloring of the eggrolls.

Eggrolls

Hand

Hand + Eggrolls

Baby Penguin

Snowy Expanse

Penguin + Snow

Now, I will compare my multiresolution blending results with my Poisson blending results, using the cantaloupe and watermelon image pair.

Cantaloupe and Watermelon (Multiresolution Blending)

Cantaloupe and Watermelon (Poisson Blending)

Multiresolution blending clearly works better here. With Poisson blending, the watermelon's high green pixel values bleed into the cantaloupe, which has little green. Matching the gradients therefore pushes the cantaloupe's green channel much higher once it is placed in the target image. The cantaloupe's original color intensities were a better match than the new intensities the gradient solver produced. In general, if the source object's background is similar to the target image, Poisson blending works better than multiresolution blending, because matching gradients creates a much more seamless transition. However, if the source object's background is too different from the target image, Poisson blending looks unnatural, because it forces the source object's color intensities to fit the target image even when the two have nothing in common. In that case, multiresolution blending is the better fit.
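The color shift described above can be reproduced in one dimension. This toy sketch uses hypothetical data, not the project's images, and treats a single color channel along one scanline. The source segment is uniformly bright (0.9), while the target's boundary pixels are 0.2 on the left and 0.6 on the right. Because the source segment is flat, its gradients are zero, so least squares discards its absolute brightness, and the solved pixels ramp between values set by the target boundary. None of the source's 0.9 survives. The function name is hypothetical.

```python
import numpy as np

# Hypothetical 1D analogue of the Poisson constraints; not the project's actual code.

def poisson_blend_1d(source, target, inside):
    """Match source differences inside the segment; neighbors outside it use target values.
    Assumes `inside` excludes the first and last index, so every neighbor exists."""
    idx = {p: k for k, p in enumerate(inside)}
    rows, b = [], []
    for p in inside:
        for q in (p - 1, p + 1):
            r = np.zeros(len(inside))
            r[idx[p]] = 1
            grad = source[p] - source[q]
            if q in idx:                 # v_p - v_q = s_p - s_q
                r[idx[q]] = -1
                b.append(grad)
            else:                        # v_p - t_q = s_p - s_q
                b.append(target[q] + grad)
            rows.append(r)
    v, *_ = np.linalg.lstsq(np.array(rows), np.array(b), rcond=None)
    return v

source = np.full(7, 0.9)                                  # bright, flat source
target = np.array([0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.6])    # only the two ends act as boundary
blended = poisson_blend_1d(source, target, inside=[1, 2, 3, 4, 5])
print(np.round(blended, 3))   # a ramp from 0.3 to 0.5; the source's 0.9 is gone
```

In the 2D cantaloupe and watermelon case, the same effect acts on each color channel. The boundary values come from the watermelon, so the solved cantaloupe pixels inherit its strong green.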

Lessons from this Project:

I learned that creating a website is a tedious and time-consuming endeavor. Finding suitable images for each part was also difficult: images often would not line up properly, or they had the wrong shapes for blending. For example, just finding fruit with the right shape and background for multiresolution blending took a very long time.