Overview

For this project, we worked in the frequency and gradient domains to "photoshop" images, mainly by blending one part of a source image into another so that the result looks natural.

Part 1: Frequency Domain

1.1 Warm-up

For the first part, we worked in the frequency domain. To sharpen an image, we take its high-frequency component (the original image minus a low-pass filtered, i.e. blurred, version of it), multiply it by a constant, and add it back to the original image. Here is an example of it working!
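
A minimal NumPy sketch of this sharpening step, assuming a grayscale float image with intensities in [0, 1] and a Gaussian as the low-pass filter. The helper `gaussian_blur` and the defaults `sigma=2.0` and `alpha=1.0` are hypothetical choices for illustration, not necessarily the filter or constants used for the results here. Since img + alpha * (img - blur(img)) = (1 + alpha) * img - alpha * blur(img), the whole operation can also be folded into a single convolution, the "unsharp mask" filter.

```python
import numpy as np


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D float image (edges reflect-padded)."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()  # normalize so flat regions keep their value
    padded = np.pad(img, radius, mode="reflect")
    # 1-D convolution along rows, then columns; "valid" trims the padding off
    rows = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 0, rows)


def sharpen(img: np.ndarray, sigma: float = 2.0, alpha: float = 1.0) -> np.ndarray:
    """Add alpha times the high-frequency part of img back onto img."""
    low = gaussian_blur(img, sigma)  # low-pass filtered image
    high = img - low                 # high-frequency detail = original - low
    return np.clip(img + alpha * high, 0.0, 1.0)  # assumes intensities in [0, 1]
```

For an RGB image, one option is to sharpen each channel separately, e.g. `np.stack([sharpen(im[..., c]) for c in range(3)], axis=-1)`.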

1.2 Hybrid Images

To create the hybrid images, we use the fact that up close we perceive the high-frequency components of an image better, while from farther away the low-frequency components dominate. So, if we have two images A and B and want a hybrid image that shows A more clearly up close and B more clearly from afar, we take the high-pass filtered version of A and the low-pass filtered version of B and add them together!
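
A minimal sketch of the hybrid construction, assuming A and B are already aligned, have the same shape, and are grayscale floats in [0, 1]. The Gaussian blur stands in for the low-pass filter, and the cutoffs `sigma_near` and `sigma_far` are hypothetical values; in practice they would be tuned per image pair.

```python
import numpy as np


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D float image (edges reflect-padded)."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 0, rows)


def hybrid_image(img_near: np.ndarray, img_far: np.ndarray,
                 sigma_near: float = 5.0, sigma_far: float = 8.0) -> np.ndarray:
    """High frequencies of img_near (seen up close) + low frequencies of img_far."""
    high = img_near - gaussian_blur(img_near, sigma_near)  # high-pass of A
    low = gaussian_blur(img_far, sigma_far)                # low-pass of B
    return high + low  # may leave [0, 1]; clip or rescale before display
```

Making `sigma_near` smaller keeps only the finest detail of A, while making `sigma_far` larger removes more detail from B; a failure case can often be traced to the two images not being well aligned.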

Here are some picturesque images! Apologies for the spacing of the Derek and Nutmeg images... I can't get them to sit closer together for some odd reason.

Favorite picture: Derek and Nutmeg

Owl and Cat (FAILURE CASE)

Shrek and Pepe (GOOD CASE)

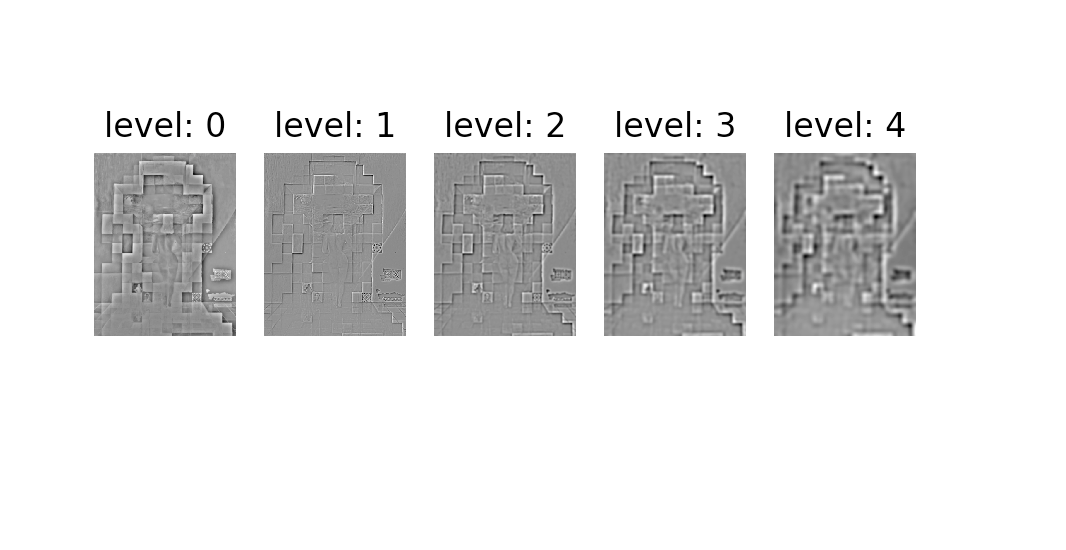

1.3 Laplacian and Gaussian Stacks

Here is an example with Lincoln.

1.4 Multiresolution Blending

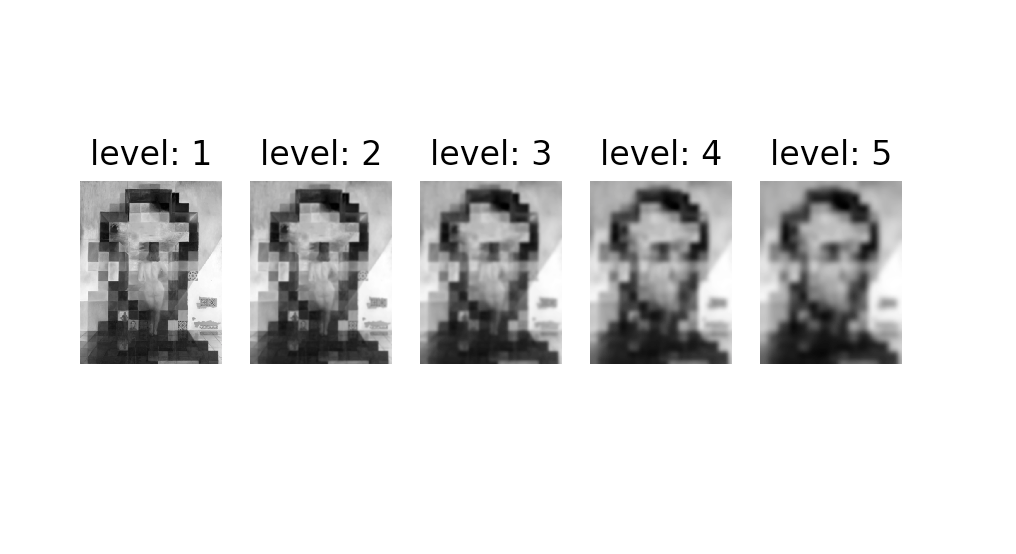

This was interesting. If we want to "blend" two images together, a naive approach is to simply cut the part we want from image A and paste it onto image B. However, this leaves obvious seams and discrepancies that anyone can spot when looking at the result. Instead, multiresolution blending uses Laplacian and Gaussian stacks to achieve a smooth blend.

What we need is a mask that selects which part of image A to keep. We compute the Gaussian stack of the mask, the Laplacian stack of A, and the Laplacian stack of B. The Laplacian stack of an image $I$ is calculated by first computing its Gaussian stack $G[0], G[1], \dots, G[n-1]$ (successively more blurred copies of $I$, all at full resolution). Then $L[0] = I - G[0]$, $L[i] = G[i-1] - G[i]$ for $i = 1, \dots, n-1$, and the last layer is the last Gaussian level, $L[n] = G[n-1]$, so that the sum telescopes back to the original image:

$$L[0] + L[1] + \dots + L[n] = (I - G[0]) + (G[0] - G[1]) + \dots + (G[n-2] - G[n-1]) + G[n-1] = I$$
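
A minimal sketch of the two stacks, assuming a grayscale float image and the same Gaussian blur (a fixed, hypothetical `sigma=2.0`) applied again at every level; other schedules, such as increasing sigma per level, are also possible. The function names are illustrative, not taken from the original code.

```python
import numpy as np


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D float image (edges reflect-padded)."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 0, rows)


def gaussian_stack(img: np.ndarray, n: int, sigma: float = 2.0) -> list:
    """G[0..n-1]: each level is the previous one blurred again (no downsampling)."""
    stack, current = [], img
    for _ in range(n):
        current = gaussian_blur(current, sigma)
        stack.append(current)
    return stack


def laplacian_stack(img: np.ndarray, n: int, sigma: float = 2.0) -> list:
    """L[0..n]: L[0] = I - G[0], L[i] = G[i-1] - G[i], L[n] = G[n-1]."""
    g = gaussian_stack(img, n, sigma)
    lap = [img - g[0]]
    lap += [g[i - 1] - g[i] for i in range(1, n)]
    lap.append(g[n - 1])  # coarsest layer keeps the remaining low frequencies
    return lap
```

Summing the `n + 1` returned layers gives back the input up to floating-point rounding, which is exactly the telescoping sum in the paragraph above.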

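After getting the Laplacian stacks of the two images ($L_1$ and $L_2$) and the Gaussian stack of the mask ($G_1$, with one level per Laplacian level), the blended image's Laplacian stack $L_3$ is computed level by level as $L_3[i] = L_1[i] \cdot G_1[i] + L_2[i] \cdot (1 - G_1[i])$. Here $G_1[i]$ acts as a per-pixel weight between the two Laplacian stacks; because the mask gets blurrier at coarser levels, low frequencies are mixed over a wide transition region while fine details switch over a narrow one, which hides the seam. Finally, we get the blended image by summing up the levels of $L_3$!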
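
A minimal sketch of the blending step, assuming grayscale float images of equal shape and a mask that is 1 where image A should show. To get one weight map per Laplacian level, the sketch builds `n + 1` levels of the mask's Gaussian stack; that level alignment, the fixed `sigma`, and the name `multires_blend` are assumptions for illustration.

```python
import numpy as np


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D float image (edges reflect-padded)."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 0, rows)


def gaussian_stack(img: np.ndarray, n: int, sigma: float) -> list:
    """n successively blurred copies of img, all at full resolution."""
    stack, current = [], img
    for _ in range(n):
        current = gaussian_blur(current, sigma)
        stack.append(current)
    return stack


def laplacian_stack(img: np.ndarray, n: int, sigma: float) -> list:
    """n + 1 layers that sum back to img."""
    g = gaussian_stack(img, n, sigma)
    return [img - g[0]] + [g[i - 1] - g[i] for i in range(1, n)] + [g[n - 1]]


def multires_blend(img_a: np.ndarray, img_b: np.ndarray, mask: np.ndarray,
                   n: int = 5, sigma: float = 2.0) -> np.ndarray:
    """Blend img_a (where mask is 1) with img_b (where mask is 0)."""
    l1 = laplacian_stack(img_a, n, sigma)
    l2 = laplacian_stack(img_b, n, sigma)
    g1 = gaussian_stack(mask.astype(float), n + 1, sigma)  # one weight per level
    l3 = [l1[i] * g1[i] + l2[i] * (1.0 - g1[i]) for i in range(n + 1)]
    return sum(l3)  # collapse the blended stack into the final image
```

For the apple/orange example, one would call something like `multires_blend(apple, orange, mask)` with a mask that is 1 on the apple's half; `apple`, `orange`, and `mask` are hypothetical variable names.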

For the images below, the first row is the orange's Laplacian stack weighted by 1 minus the mask's Gaussian stack. The second row is the apple's Laplacian stack weighted by the mask's Gaussian stack.

Here's a cool one with a bottle on the sea.

Here's a nice one with a butterfly and sunglasses.
