Lightfield Camera

CS 194-26: Computational Photography, Fall 2018

Tianrui Chen, cs194-26-aaz

Overview

In this project, we simulated depth and aperture adjustments using lightfield image sets from the Stanford Light Field Archive.

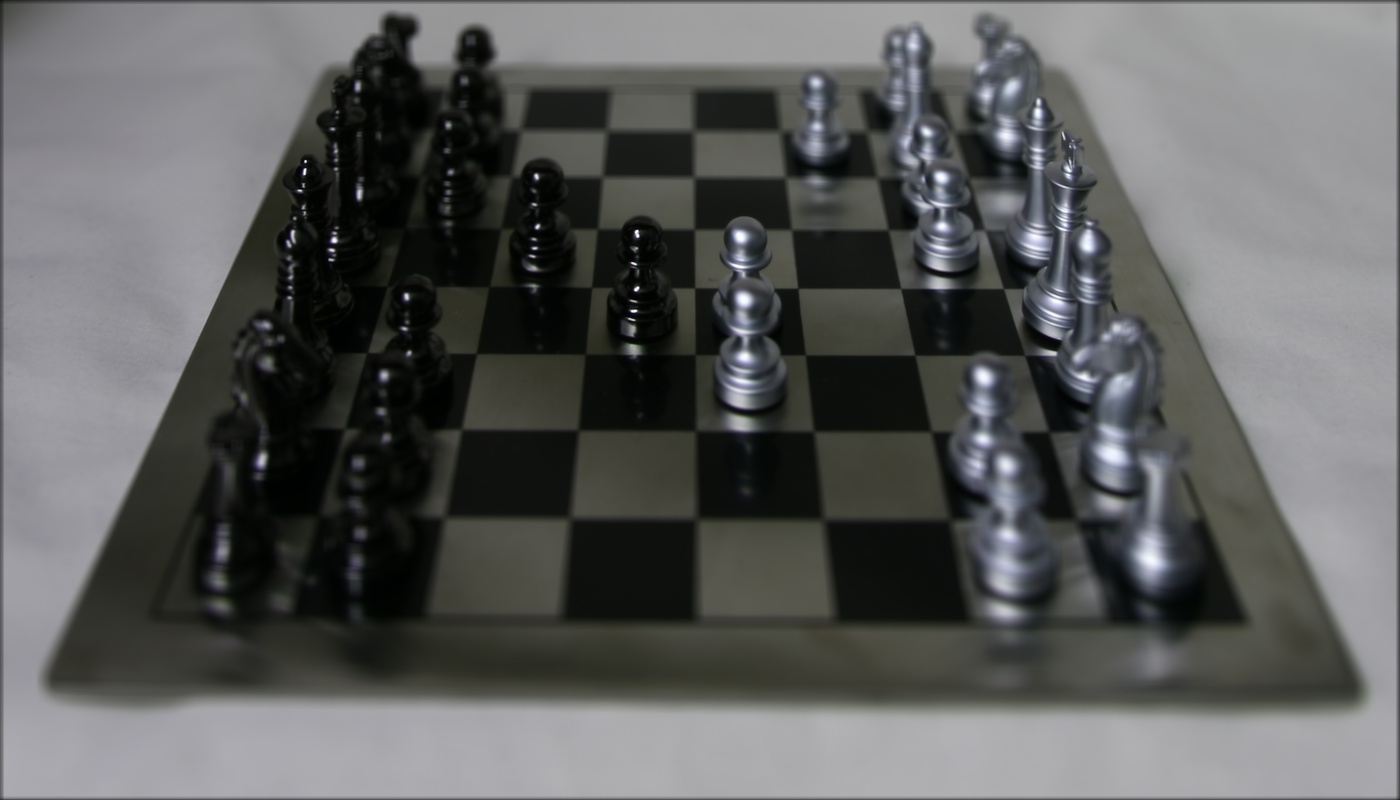

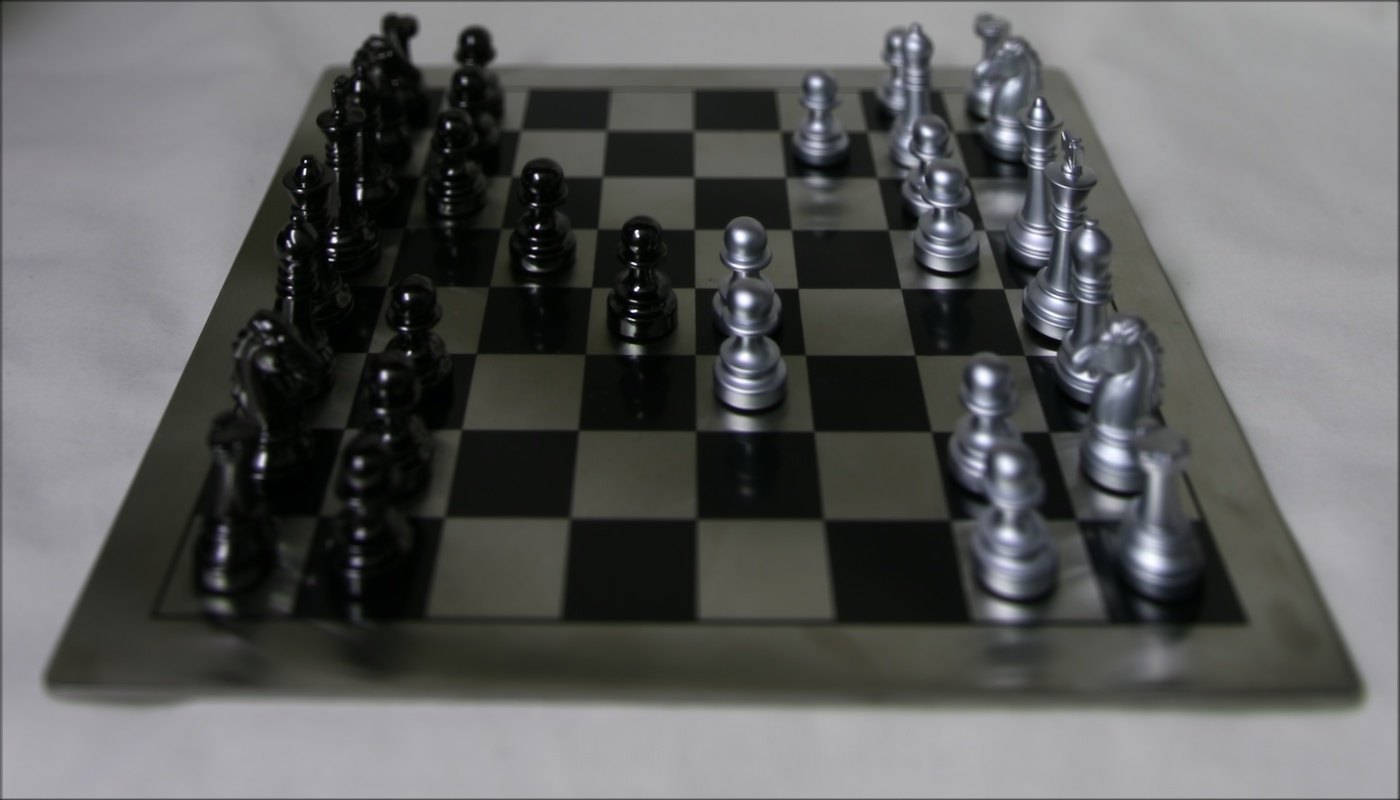

Depth Refocusing

Each image set consists of 289 images of the same scene taken by a 17 by 17 array of cameras. Because each camera sits at a slightly different position, objects appear at different offsets from image to image. Specifically, distant objects barely move between images because the light rays reaching the cameras from them are nearly parallel, while nearby objects shift significantly.

When the images are averaged without any shifting, distant objects come out sharper than nearby ones because they are already well aligned across the images. To change which objects come into focus, we shift each image relative to a center image before averaging, which changes which objects are aligned.

For the implementation, we treated the image at (8, 8) in the zero-indexed camera array as the center image against which offsets are calculated. For every other image, we took the difference between its uv coordinate and the center image's uv coordinate and scaled it by a value $\alpha$, giving an offset proportional to how far that camera is from the center. We then used scipy.ndimage.shift to shift the image by that offset, and averaged all the shifted images to get the refocused image.
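The shift-and-average procedure described above can be sketched roughly as follows. This is a minimal illustration and not the project's actual code: the function name `refocus`, its parameters, and the mapping of u to image columns and v to image rows (including the sign) are assumptions, and the right convention depends on how the dataset encodes camera positions.

```python
import numpy as np
from scipy.ndimage import shift


def refocus(images, uv, alpha, center_index):
    """Shift every view relative to the center view, then average.

    images: array of shape (N, H, W) or (N, H, W, C)
    uv: array of shape (N, 2) holding each camera's (u, v) position
    alpha: scale factor that selects which depth ends up aligned
    center_index: index of the reference (center) view
    """
    images = np.asarray(images, dtype=np.float64)
    uv = np.asarray(uv, dtype=np.float64)
    center = uv[center_index]
    total = np.zeros_like(images[0])
    for img, pos in zip(images, uv):
        # Offset grows with the camera's distance from the center view.
        du, dv = alpha * (pos - center)
        # Assumption: v moves along rows and u along columns.
        # Any trailing color axis is left unshifted.
        offsets = (dv, du) + (0,) * (img.ndim - 2)
        total += shift(img, offsets, order=1, mode="nearest")
    return total / len(images)
```

With `alpha = 0` no view is shifted, so the result is the plain average of all views. Sweeping `alpha` over a range such as -0.5 to 0.2 moves the plane of focus through the scene.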

Focus shift

Panels, left to right: $\alpha = -0.5$, $\alpha = -0.4$, $\alpha = -0.3$, $\alpha = -0.2$, $\alpha = -0.1$, $\alpha = 0.0$, $\alpha = 0.1$, $\alpha = 0.2$.

Depth refocus gifs

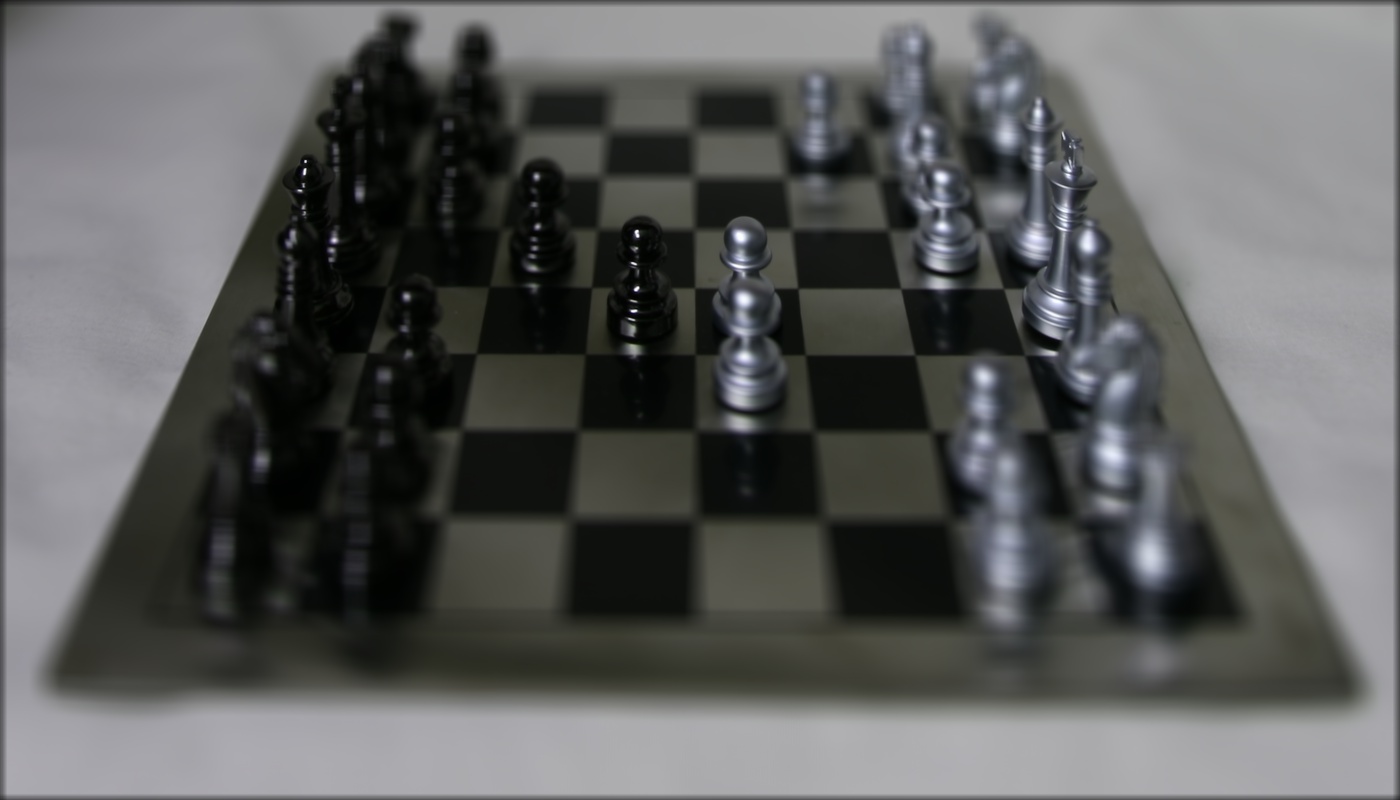

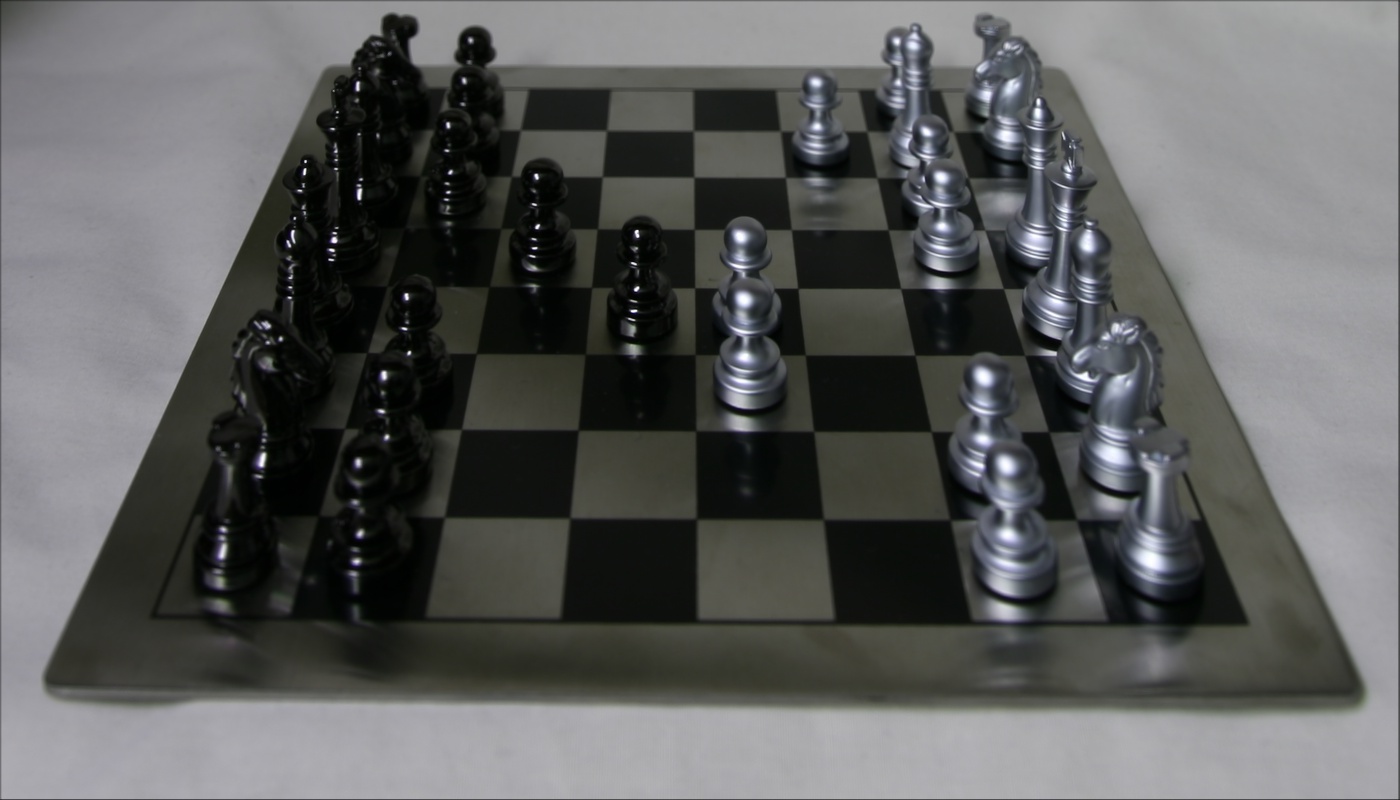

Aperture Refocusing

To simulate images taken at different aperture sizes, we shrink the grid of images used to compute the average. For example, instead of the full 17 by 17 grid, we can use the center 9 by 9 grid. A smaller aperture gives a deeper depth of field, so the blur away from the focal plane is less prominent. Intuitively, a smaller grid simulates a deeper depth of field for two reasons: fewer misaligned images contribute to the average, and the largest misalignments disappear because the cameras farthest from the center are excluded.
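Selecting the central sub-grid can be sketched as below. The helper names `aperture_mask` and `simulate_aperture`, the `radius` parameter, and the use of integer grid indices are illustrative assumptions. The sketch also assumes `images` already holds the views after any refocusing shift has been applied.

```python
import numpy as np


def aperture_mask(grid_uv, center, radius):
    """Mark views whose grid index is within `radius` of the center on both axes."""
    grid_uv = np.asarray(grid_uv)
    center = np.asarray(center)
    return np.all(np.abs(grid_uv - center) <= radius, axis=1)


def simulate_aperture(images, grid_uv, center=(8, 8), radius=4):
    """Average only the central (2*radius + 1) by (2*radius + 1) block of views.

    images: array of shape (N, H, W) or (N, H, W, C), already shifted
    grid_uv: array of shape (N, 2) with each view's integer grid index
    """
    images = np.asarray(images, dtype=np.float64)
    mask = aperture_mask(grid_uv, center, radius)
    return images[mask].mean(axis=0)
```

With a center of (8, 8), `radius=8` uses the full 17 by 17 grid, `radius=4` uses the center 9 by 9 grid, and `radius=0` returns the center view alone, which corresponds to the smallest simulated aperture.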

Aperture shift; $\alpha=-0.2$

Panels, left to right: 17 by 17, 15 by 15, 13 by 13, 11 by 11, 9 by 9, 7 by 7, 5 by 5, 3 by 3.

Aperture refocus gifs

To conclude, we implemented a simple method for depth refocusing and aperture adjustment using light field datasets. Along the way, we gained a little more intuition about how cameras and focus work.