Introduction

In this project, we will explore post-capture depth refocusing and aperture adjustment through lightfield photography. Lightfield photography lets us change the focal depth and the depth of field (via a simulated aperture) after taking the shot. This is possible because lightfield data is 4D: it describes the rays of light that make up the image, rather than only 2D pixel brightness values. We will be using data from the Stanford Light Field Archive, where each example consists of 289 2D images arranged in a 17x17 grid, all taken from camera positions on a plane orthogonal to the optical axis.
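One convenient way to hold a lightfield in memory is a single array indexed first by grid position and then by pixel, so every image in the 17x17 grid is addressable by its (u, v) coordinate. The sketch below assumes the images have already been loaded into a dictionary keyed by (row, col) grid coordinates. The helper `stack_lightfield` and that input format are hypothetical, not part of the archive or the original project code.

```python
import numpy as np

def stack_lightfield(images, grid_size=17):
    """Stack a dict {(u, v): image} into one array of shape
    (grid_size, grid_size, H, W, ...).

    Hypothetical helper: assumes every grid position is present and
    all images share the same shape and dtype.
    """
    if len(images) != grid_size * grid_size:
        raise ValueError("expected one image per grid position")
    first = images[(0, 0)]
    lf = np.empty((grid_size, grid_size) + first.shape, dtype=first.dtype)
    for (u, v), img in images.items():
        lf[u, v] = img
    return lf

# Tiny synthetic images stand in for real archive data.
rng = np.random.default_rng(0)
fake = {(u, v): rng.random((4, 6, 3)) for u in range(17) for v in range(17)}
lightfield = stack_lightfield(fake)
```

The resulting array has shape (17, 17, H, W, 3) for color images, and `lightfield[u, v]` is the image taken at grid position (u, v).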

Depth Refocusing

With light field data, we are able to refocus after taking the photo by shifting and averaging the photos in our grid. If we simply average all of the photos without shifting, the elements in the background, further away from the camera, end up sharper and more in focus than the foreground. This is because distant elements shift less in the image when we move the camera around (the parallax is inversely proportional to depth). This average-all-without-shifting example (alpha = 0) is shown below:
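The alpha = 0 case is just a mean over the two grid axes. The check below uses a hypothetical toy scene, not archive data: a background point with zero parallax (it sits at the same pixel in every view) and a foreground point that moves one pixel per grid step. After averaging, the background point keeps its full brightness while the foreground point is smeared across many pixels.

```python
import numpy as np

def average_unshifted(lightfield):
    """alpha = 0: average every image in the grid without shifting.

    lightfield has shape (U, V, H, W, ...); the result has shape (H, W, ...).
    """
    return lightfield.mean(axis=(0, 1))

# Toy 5x5 grid of 32x32 grayscale views (hypothetical synthetic data).
U = V = 5
c = U // 2  # central grid index
lf = np.zeros((U, V, 32, 32))
for u in range(U):
    for v in range(V):
        lf[u, v, 5, 5] = 1.0                        # far point: no parallax
        lf[u, v, 20 + (u - c), 20 + (v - c)] = 1.0  # near point: 1 px per grid step

avg = average_unshifted(lf)
# avg[5, 5] stays at full brightness; the near point contributes only
# 1/25 of its brightness to each of 25 different pixels.
```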

We can extend this idea to focus at different depths by shifting each image in our 17x17 grid towards the central coordinate before averaging them together. The procedure is as follows:

- Determine the central image: here, we use (8, 8) as the central coordinate, since that is the middle of a 0-indexed 17x17 grid.

- Select an alpha value, which determines the depth that ends up in focus.

- For each image in the grid at a (u, v) coordinate, compute its offset from the central coordinate.

- Shift each image towards the central coordinate, by the offset scaled by alpha.

- Average all of the shifted images.
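The steps above can be sketched as follows, using scipy.ndimage.shift with order=0 as in the project. This is an illustrative version rather than the project's actual code: the function name `refocus` is hypothetical, and so is the axis and sign convention, which assumes the first grid index moves content along image rows and the second along image columns. If the real data is arranged differently, the axes or the sign of alpha may need to be swapped.

```python
import numpy as np
from scipy.ndimage import shift

def refocus(lightfield, alpha):
    """Shift-and-average refocusing (sketch).

    lightfield: array of shape (U, V, H, W, ...), e.g. (17, 17, H, W, 3).
    alpha: scale applied to each image's grid offset from the center.
    Assumption: grid index u maps to image rows, v to image columns.
    """
    U, V = lightfield.shape[:2]
    cu, cv = U // 2, V // 2  # (8, 8) for a 17x17 grid
    acc = np.zeros(lightfield.shape[2:], dtype=np.float64)
    for u in range(U):
        for v in range(V):
            img = lightfield[u, v].astype(np.float64)
            # Move the image toward the center by alpha times its grid
            # offset; trailing axes (e.g. color channels) are not shifted.
            offset = (alpha * (cu - u), alpha * (cv - v)) + (0,) * (img.ndim - 2)
            acc += shift(img, offset, order=0)
    return acc / (U * V)

# Toy check (hypothetical data): a point that moves +2 px per grid step
# lines up across all views, and so comes into focus, at alpha = 2.
U = V = 3
lf = np.zeros((U, V, 21, 21))
for u in range(U):
    for v in range(V):
        lf[u, v, 10 + 2 * (u - 1), 10 + 2 * (v - 1)] = 1.0
sharp = refocus(lf, alpha=2)
blurry = refocus(lf, alpha=0)
```

In the toy check, `sharp` concentrates all of the point's brightness at pixel (10, 10), while `blurry` spreads it over nine pixels. Sweeping alpha moves the plane of focus through the scene in the same way.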

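Each image was shifted with scipy.ndimage.shift using order = 0 (nearest-neighbor interpolation). This is faster than the default cubic spline interpolation (order = 3), at the cost of rounding fractional shifts to whole pixels.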
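To see what order = 0 trades away, the small 1D example below (toy data, not from the project) shifts a single impulse by 0.4 pixels. Nearest-neighbor interpolation rounds the sub-pixel shift away entirely, while linear interpolation (order = 1) splits the impulse between two neighboring pixels. For refocusing, this rounding introduces at most half a pixel of misregistration per image, which is often an acceptable price for the speedup.

```python
import numpy as np
from scipy.ndimage import shift

signal = np.zeros(10)
signal[5] = 1.0  # single bright pixel

nearest = shift(signal, 0.4, order=0)  # 0.4 px shift rounds to no shift
linear = shift(signal, 0.4, order=1)   # impulse split between pixels 5 and 6

# Compare only interior values, away from the array boundaries.
print(nearest[4:7])
print(linear[4:7])
```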

Results

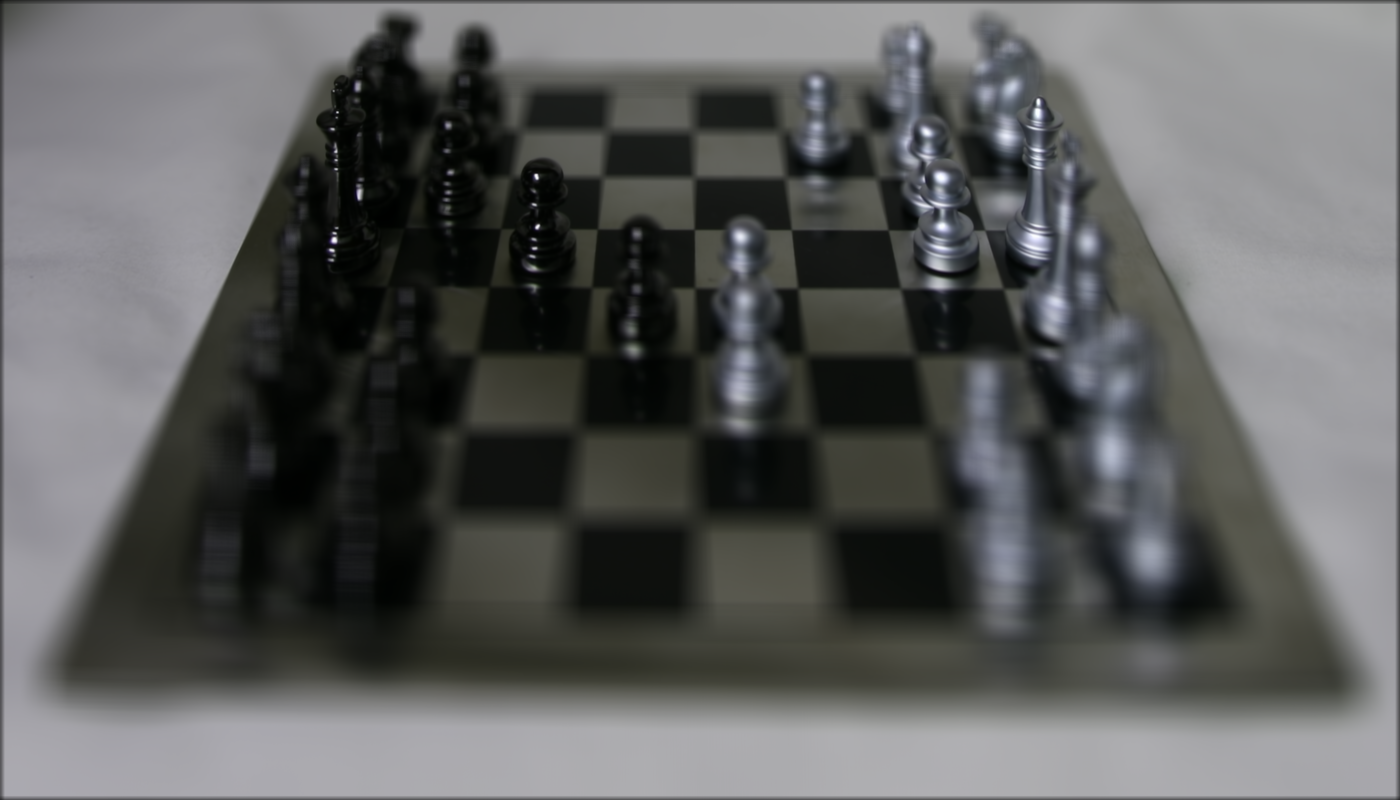

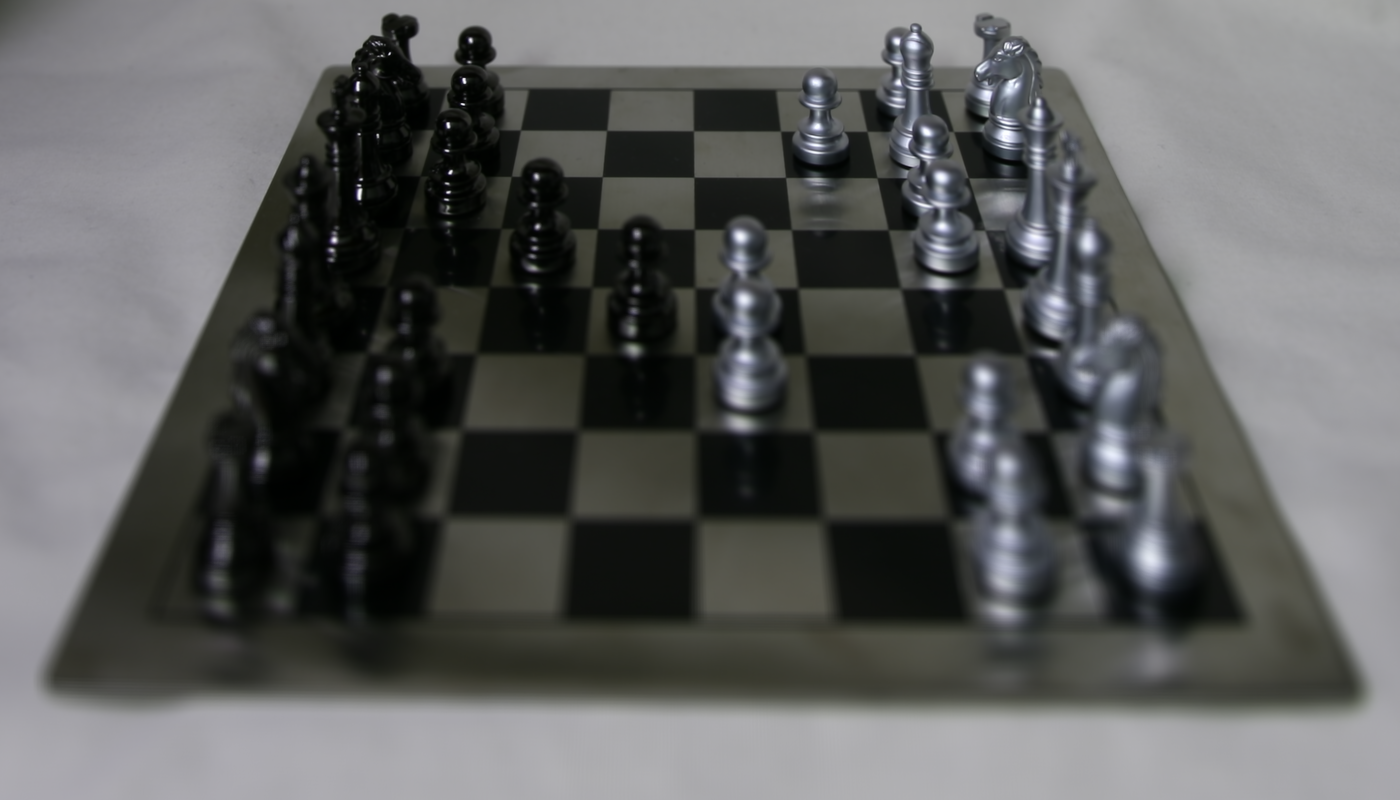

Below are some results of the chessboard with different alpha values.

alpha = 0.1

alpha = 0.3

alpha = 0.5

Below is an animation as alpha changes from 0.0 to 0.6:

Aperture Adjustment

In addition to post-capture refocusing, we can simulate different aperture sizes with lightfield data by averaging images around a central image. Averaging more images around the central image simulates a larger aperture and therefore a shallower depth of field, much like a larger aperture on a traditional camera, which gathers light over a wider area of the lens and so narrows the range of depths that appear sharp.

- Determine the central image: here, we use (8, 8) as the central coordinate.

- Select a radius value: the bigger the radius, the bigger the aperture.

- Average all images within the specified radius around the central image (determined by Euclidean distance between the image coordinate and the central coordinate in the grid).
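A minimal sketch of this procedure follows. The names `aperture_mask` and `adjust_aperture` are hypothetical, and treating "within the radius" as distance ≤ R (inclusive) is an assumption; the project may have used a strict inequality. Squared distances are compared so the test stays exact for integer radii.

```python
import numpy as np

def aperture_mask(grid_size, radius):
    """Boolean (grid_size, grid_size) mask of grid positions whose
    Euclidean distance to the central position is <= radius (assumed inclusive)."""
    c = grid_size // 2  # 8 for a 17x17 grid
    uu, vv = np.meshgrid(np.arange(grid_size), np.arange(grid_size), indexing="ij")
    return (uu - c) ** 2 + (vv - c) ** 2 <= radius ** 2

def adjust_aperture(lightfield, radius):
    """Average all images within `radius` of the central image.

    lightfield: array of shape (U, U, H, W, ...) with a square grid.
    """
    mask = aperture_mask(lightfield.shape[0], radius)
    # Boolean indexing over the two grid axes yields shape (n, H, W, ...).
    return lightfield[mask].mean(axis=0)

for R in (1, 4, 8):
    print(R, int(aperture_mask(17, R).sum()))
```

With a 17x17 grid and the inclusive assumption, R = 1, 4 and 8 average 5, 49 and 197 of the 289 images. Even R = 8 leaves out the corners of the grid; covering every image would take R ≥ 8√2 ≈ 11.3.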

Results

Below are some results of the chessboard with different simulated apertures, set by different values of the radius R. As you can see, the depth of field decreases as R increases.

R = 1

R = 4

R = 8

Below is an animation as radius R changes from 1 to 8:

Takeaways

Through lecture, reading Professor Ng's paper, and working on this project, I finally learned how lightfield photography works. Simple computations like shift-and-averaging are powerful with lightfield data. Adjusting focus and aperture size after capturing the photo is a very cool concept, and one that Apple and Google have picked up on with Portrait Mode in the iPhone and the Pixel. Perhaps lightfields can make their way into VR, for even more realistic depictions of the 3D world around us.