Depth Refocusing and Aperture Adjustment with Light Field Data

CS 194-26 Computational Photography Fall 2018

Guowei Yang cs194-26-acg

Introduction

Part 1: Depth Refocusing

Step 1: Obtain Relative Shifting Amount from Center

Part 2: Aperture Adjustment

Summary

As the paper by Ng et al. demonstrated, capturing multiple images over a plane orthogonal to the optical axis makes it possible to achieve complex effects with very simple operations such as shifting and averaging. The goal of this project is to reproduce depth refocusing and aperture adjustment using real light field data.

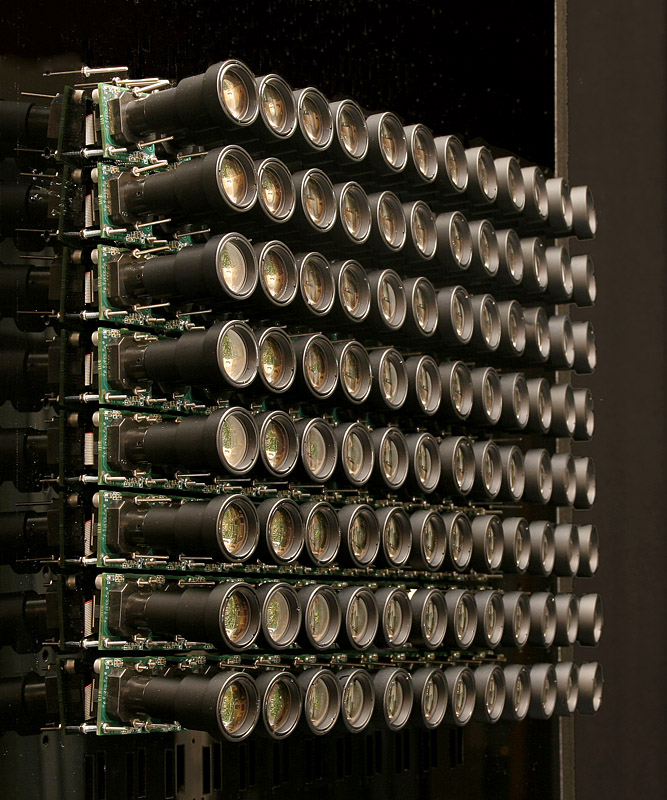

To reproduce the experiment, I obtained image datasets from the Stanford Light Field Archive, all of which were taken by a gigantic 17x17 camera array. By simply shifting and averaging these 289 images, we can obtain focus-shifting and aperture-changing effects.

Step 2: Shift Each Photo

Step 3: Take Average

Luckily, all of the pictures in the Archive are consistently named, using the following format:

out_yCoordinate_xCoordinate_uCoordinate_vCoordinate.png

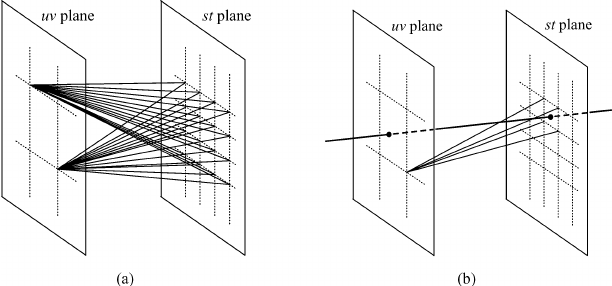

Here the x, y coordinates give the camera's location in the camera array, and the u, v coordinates correspond to the light field (u, v) plane.
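The naming format above can be parsed with a small helper. This is only a sketch: the function name `parse_light_field_name` is hypothetical, and the regular expression assumes integer grid indices, decimal u and v values, and an optional underscore before the extension.

```python
import re

# Assumed pattern for out_<y>_<x>_<u>_<v>.png; the optional trailing
# underscore before ".png" is an assumption, not part of the stated format.
_NAME_RE = re.compile(
    r"out_(\d+)_(\d+)_(-?\d+(?:\.\d+)?)_(-?\d+(?:\.\d+)?)_?\.png$"
)


def parse_light_field_name(filename):
    """Return (y, x, u, v) parsed from a light field image filename."""
    match = _NAME_RE.search(filename)
    if match is None:
        raise ValueError(f"unexpected filename: {filename!r}")
    y, x = int(match.group(1)), int(match.group(2))
    u, v = float(match.group(3)), float(match.group(4))
    return y, x, u, v
```

The grid indices (y, x) are what the later steps need to measure each camera's offset from the center.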

After obtaining the coordinates, we shift each image by an amount proportional to its camera's offset from the center camera (in our case, grid position (8, 8)), using the same constant factor for every image. Because each camera sees the scene from a slightly different position, points at one particular depth line up after shifting and appear sharp, while points at other depths stay misaligned. That depth is essentially the focus point, and we can move it by changing the constant factor.
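A minimal sketch of this shifting step, assuming NumPy arrays and whole-pixel shifts. The names `shift_for_camera`, `shift_image`, and `alpha` are hypothetical, and the sign of the shift depends on how the grid axes are oriented in a given dataset.

```python
import numpy as np

CENTER = (8, 8)  # center camera of the 17x17 grid, as stated above


def shift_for_camera(y, x, alpha, center=CENTER):
    """Pixel shift (dy, dx) proportional to the camera's offset from the center."""
    return alpha * (y - center[0]), alpha * (x - center[1])


def shift_image(image, dy, dx):
    """Shift an image by whole pixels.

    np.roll wraps pixels around the borders, so edges may need cropping.
    """
    return np.roll(image, (int(round(dy)), int(round(dx))), axis=(0, 1))
```

Rounding to whole pixels keeps the sketch short; an interpolating shift would allow finer control over the focus point.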

After shifting the images, we simply average them. And voilà!
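Putting the shift and the average together might look like the sketch below. It assumes the images are already loaded into a dictionary keyed by grid position; `refocus` and `alpha` are hypothetical names, and whole-pixel `np.roll` shifts stand in for whatever shifting method was actually used.

```python
import numpy as np


def refocus(images, alpha, center=(8, 8)):
    """Average the images after shifting each by alpha times its grid offset.

    images: dict mapping grid position (y, x) to an array of identical shape.
    alpha:  scale factor that selects which depth ends up in focus.
    """
    if not images:
        raise ValueError("no images to average")
    total = None
    for (y, x), image in images.items():
        dy = int(round(alpha * (y - center[0])))
        dx = int(round(alpha * (x - center[1])))
        shifted = np.roll(image.astype(np.float64), (dy, dx), axis=(0, 1))
        total = shifted if total is None else total + shifted
    return total / len(images)
```

Sweeping `alpha` over a range of values and saving each result produces a sequence in which the focus point moves through the scene.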

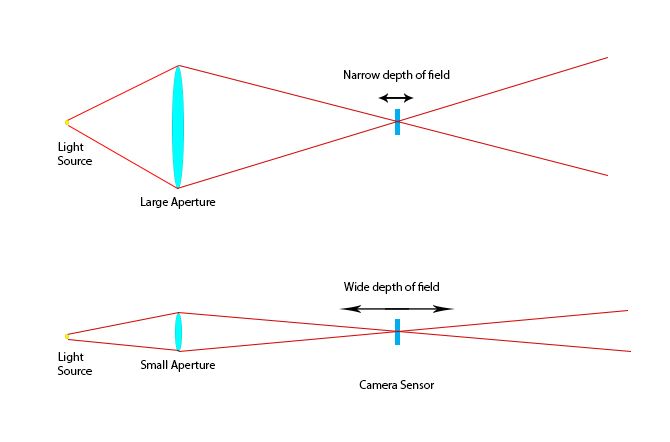

We can also adjust the aperture using the same image dataset. We choose which rays (that is, which camera images) to include in the final averaged image, and control how many are included with a radius variable. The larger the radius, the more images are averaged and the narrower the depth of field.
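One way to pick the images for a given radius is sketched below. The circular (Euclidean) neighborhood around the center camera is an assumption, since a square window would also fit the description, and `cameras_in_aperture` is a hypothetical name.

```python
import math


def cameras_in_aperture(radius, grid_size=17, center=(8, 8)):
    """List grid positions (y, x) within `radius` of the center camera."""
    return [
        (y, x)
        for y in range(grid_size)
        for x in range(grid_size)
        if math.hypot(y - center[0], x - center[1]) <= radius
    ]
```

Averaging only the images at the returned positions simulates the chosen aperture: a radius of 0 keeps just the center image, while a radius of 12 or more covers the whole 17x17 grid.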

I tried to recreate the miniature of a building, so I launched my drone and tried to take the "grid shot" myself. However, because the drone was unstable, the final averaged pictures are extremely blurry. The foreground also moved too much between shots, so the shifted focus point effect is not really visible.

Bells and Whistles

Normally when I take pictures, the aperture and focus are determined before the picture is even taken, and during post-processing there is not much one can do to change the focus point or depth of field. However, using light field theory, we can manipulate both aperture and focus, provided we have captured a large set of light ray information. We can create pictures with different depths of field and focus points by combining different subsets of that light ray information.