Project Overview

Capturing images from a grid of camera positions on a plane orthogonal to the optical axis, where each image is taken from a slightly different x and y position (with z held fixed), lets us reconstruct images focused at different depths and simulate different aperture sizes. More concretely, if someone takes many pictures of the same scene while shifting the camera's x and y position by a uniform step between shots, they obtain a grid of images that represents the light field data of the scene. With clever shifting and averaging, we can reconstruct images that appear to have been taken with a DSLR focused at different depths or set to different apertures.
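One convenient way to hold such a grid in memory is a single array whose first two axes index the camera position and whose remaining axes index pixels. The sketch below assumes the images are already loaded as equally sized NumPy arrays in a nested list `grid[row][col]`; the helper names `stack_light_field` and `center_view` are hypothetical, and loading the image files is not shown.

```python
import numpy as np


def stack_light_field(grid):
    """Stack a nested list grid[row][col] of equally sized images into one array.

    The result has shape (rows, cols, H, W) or (rows, cols, H, W, channels):
    the first two axes index the camera position on the capture plane, and the
    remaining axes index pixels within each view.
    """
    cols = len(grid[0])
    if any(len(row) != cols for row in grid):
        raise ValueError("every row of the grid must have the same number of images")
    # Stack each row of views, then stack the rows.
    return np.stack([np.stack(row, axis=0) for row in grid], axis=0)


def center_view(light_field):
    """Return the view at the middle of the camera grid.

    For a side with an even number of views, this picks the later of the two
    middle indices.
    """
    rows, cols = light_field.shape[:2]
    return light_field[rows // 2, cols // 2]
```

With this layout, the plain average of every view is simply `light_field.mean(axis=(0, 1))`.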

Depth Refocusing

An interesting property of these images is that, as the camera's viewpoint moves, objects closer to the camera shift more than objects farther away in the scene. Thus, across the images in the grid, nearby objects vary more in position than distant ones. We can use this property to generate images focused at different depths.

Notice how the edge of the chest closest to the camera moves more than the lid of the chest between these two images, which were taken from slightly different x and y positions.
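To make this observation a bit more precise, here is a short derivation under idealized assumptions that the original does not state: a pinhole camera with focal length $f$ measured in pixels, translated by $\Delta X$ parallel to the image plane without rotating. A scene point at lateral position $X$ and depth $Z$ projects to image coordinate $x$ before the move and $x'$ after it:

```latex
\begin{aligned}
x &= \frac{f X}{Z}, \\
x' &= \frac{f (X - \Delta X)}{Z}, \\
x' - x &= -\frac{f \, \Delta X}{Z}.
\end{aligned}
```

The shift is inversely proportional to the depth $Z$, so nearby points (small $Z$) move more between neighboring views than distant ones. The same relation holds in $y$ with $\Delta Y$.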

When we simply average all the images in the Jellybean light field from the Stanford Light Field Archive, we get the following image:

Okay, judging by how the images were captured, the farther jellybeans actually vary more in position than the nearby ones. Thanks for ruining my point above, Stanford, by capturing your light field data inconsistently! Anyway, the farther jellybeans are blurrier because their positions differ more across the images, while the closer jellybeans stay in roughly the same place in every image and therefore remain sharp.

What happens if we shift the images before averaging? We can line the images up so that one region of the scene is sharp and the rest is blurred. For example, aligning the farther jellybeans makes them sharp and makes the closer jellybeans blurrier. To do this, we shift each image by its original offset from the center image scaled by a constant C, then average the shifted images together. The result is a new image focused at a different depth. Varying C gives the following jellybean images:

Notice how, as we increase C, the plane of focus moves from farther away to closer.
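A minimal sketch of the shift-and-average step, assuming the light field is stacked into a NumPy array of shape (rows, cols, H, W) or (rows, cols, H, W, channels). The name `refocus` is hypothetical, and the sketch uses each view's grid-index offset from the center as a stand-in for its camera offset; the recorded camera positions could be used instead. Shifts are rounded to whole pixels and applied with `np.roll`, which wraps pixels around the image border. A more careful version would interpolate sub-pixel shifts and crop the borders. Which sign of C moves the focus nearer depends on these conventions.

```python
import numpy as np


def refocus(light_field, c):
    """Shift-and-average refocusing over a (rows, cols, H, W[, ch]) light field.

    Each view is shifted by c times its (row, col) offset from the grid center,
    then all shifted views are averaged. Shifts are rounded to whole pixels and
    applied with np.roll, so content wraps around the image borders.
    """
    lf = np.asarray(light_field, dtype=np.float64)
    rows, cols = lf.shape[:2]
    center_r = (rows - 1) / 2.0
    center_c = (cols - 1) / 2.0
    acc = np.zeros(lf.shape[2:], dtype=np.float64)
    for r in range(rows):
        for col in range(cols):
            # Offset of this view from the center, scaled by c (in pixels).
            dy = int(round(c * (r - center_r)))
            dx = int(round(c * (col - center_c)))
            acc += np.roll(lf[r, col], shift=(dy, dx), axis=(0, 1))
    return acc / (rows * cols)
```

Calling `refocus(lf, c)` for a range of values of c produces a focal stack like the jellybean sequence, and c = 0 reduces to the plain average of all views.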

Putting these into a gif, we get:

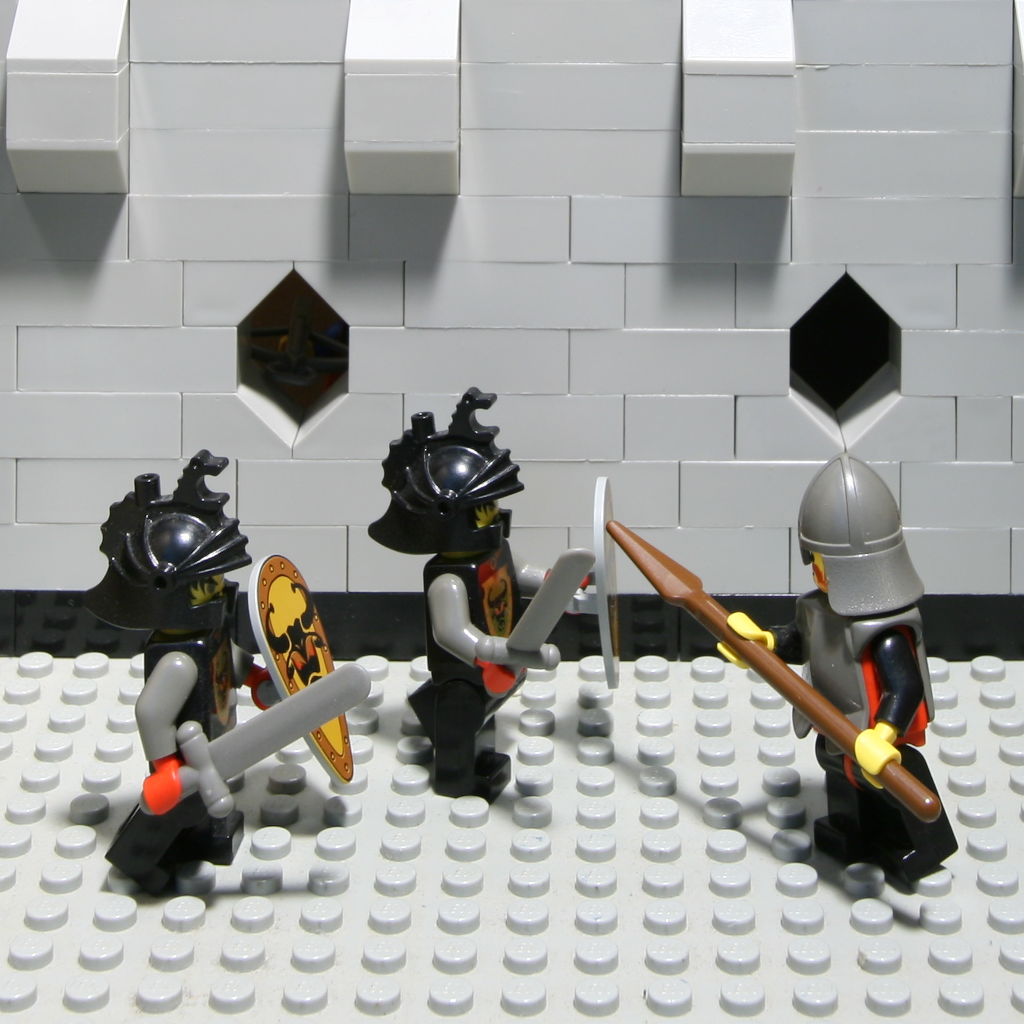

We do the same with the following Lego image:

Aperture Adjustment

Changing the aperture size changes the depth of field of an image. A larger aperture gives a shallower depth of field, so less of the scene is in focus, while a smaller aperture gives a deeper depth of field. We can simulate this by choosing how many images from the light field grid to average together. I chose one image as my "center" image and then, for increasing n, included in the average every image within n shifts of the center along the x and y axes. I get the following results for the chest image:

Creating a gif!

Doing the same for the previous Lego picture, we get:

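The aperture simulation in this section can be sketched in a few lines. This minimal version assumes the same (rows, cols, H, W[, channels]) NumPy layout, reads "n shifts away in the x or y axis" as a square window of views around the chosen center, and uses the hypothetical name `simulate_aperture`. The window is clipped at the grid edges, so a large enough n averages every view.

```python
import numpy as np


def simulate_aperture(light_field, n, center=None):
    """Average the views within n grid steps of a center view.

    Assumes a (rows, cols, H, W[, ch]) array and a square window: every view
    with |row - r0| <= n and |col - c0| <= n. The window is clipped at the grid
    edges. n = 0 returns the center view alone (the smallest simulated aperture).
    """
    lf = np.asarray(light_field, dtype=np.float64)
    rows, cols = lf.shape[:2]
    if center is None:
        center = (rows // 2, cols // 2)
    r0, c0 = center
    # Clip the square window so it stays inside the camera grid.
    r_lo, r_hi = max(r0 - n, 0), min(r0 + n, rows - 1)
    c_lo, c_hi = max(c0 - n, 0), min(c0 + n, cols - 1)
    window = lf[r_lo:r_hi + 1, c_lo:c_hi + 1]
    return window.mean(axis=(0, 1))
```

Averaging more views mimics a larger aperture: points off the focal plane are spread across more shifted copies and blur more, while n = 0 gives a single view, the deep-depth-of-field extreme.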