Part A: Image Warping and Mosaicing

The human field of view extends beyond what a traditional photo captures, and mosaics are one way to approach that wider view. Photos are taken starting from the left or right side, rotating the camera a little for each entry in the mosaic. For the process to work, the camera must remain in the same physical position in world space and only rotate. This is because aligning and stitching the images requires projectively warping them so they all lie on the same plane. The alignment does distort parts of the scene (just like a wide-angle lens), but the result is a clean overlap between the shared features in the images.

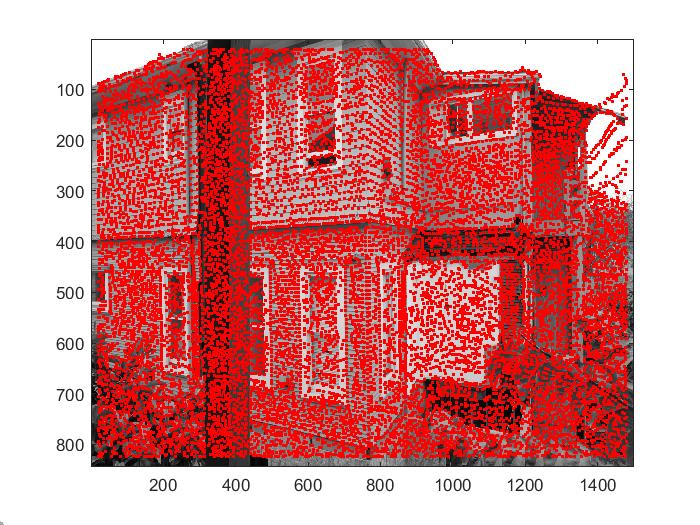

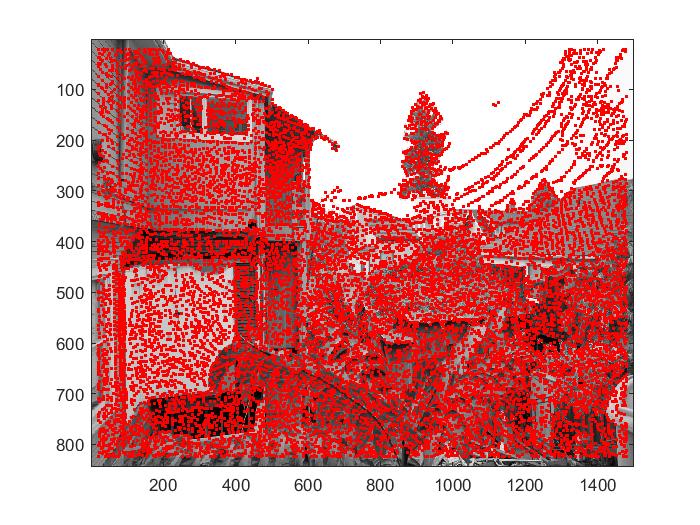

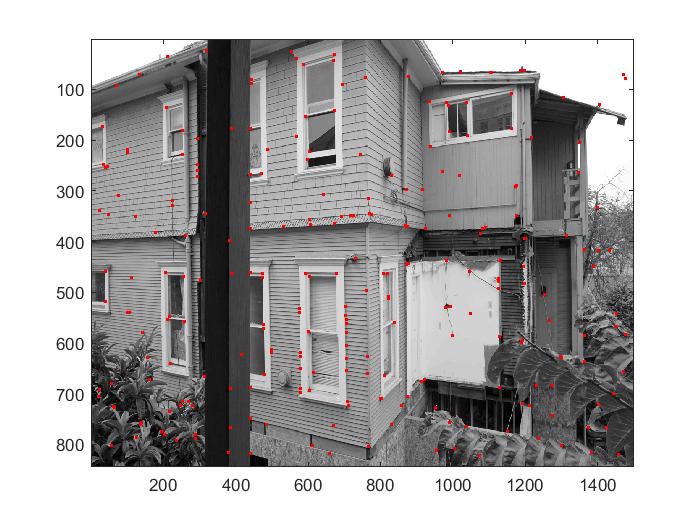

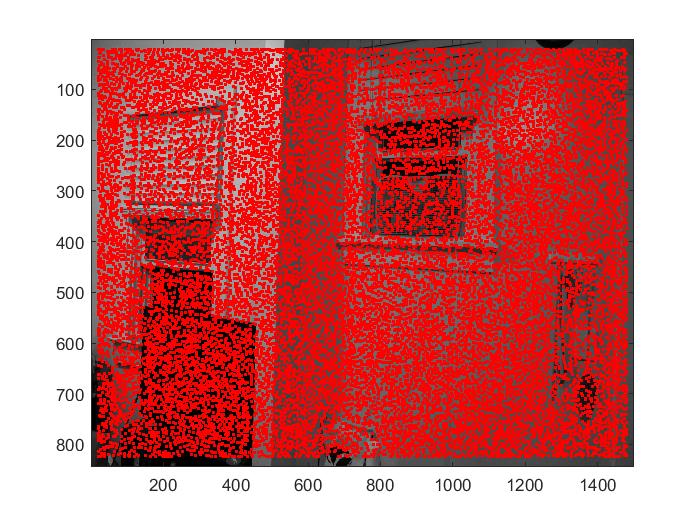

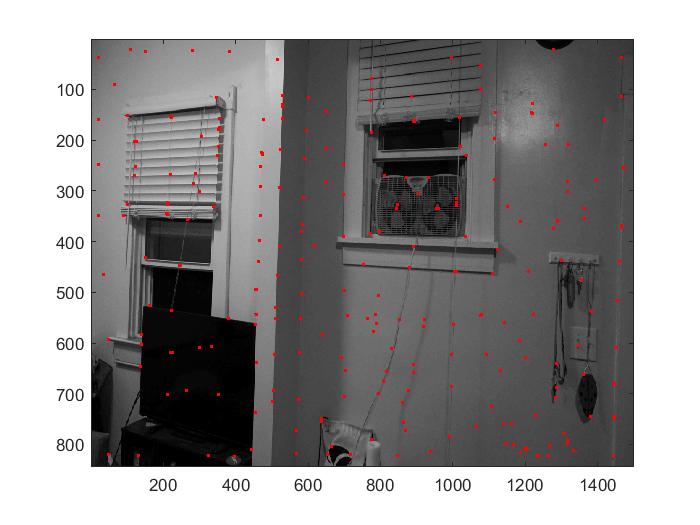

Shooting the Pictures & Correspondence Points

The pictures were taken on a tripod. I took a series of 7 photos for each scene, starting from the left and rotating to the right by about 30 degrees for each shot. I chose two photos from each scene and identified the features they share. These features are marked with correspondence points: pairs of points that identify the same object in both images.


Recover Homographies

Once we have the correspondence points between the two images, we can calculate a transformation matrix that maps the correspondence points of one image onto the plane of the other. A homography is a 3 x 3 matrix with 8 degrees of freedom (it is defined only up to scale), and each correspondence pair contributes two equations, so we need at least 4 pairs to determine it. With more than 4 pairs the system is overdetermined and is solved with least squares.

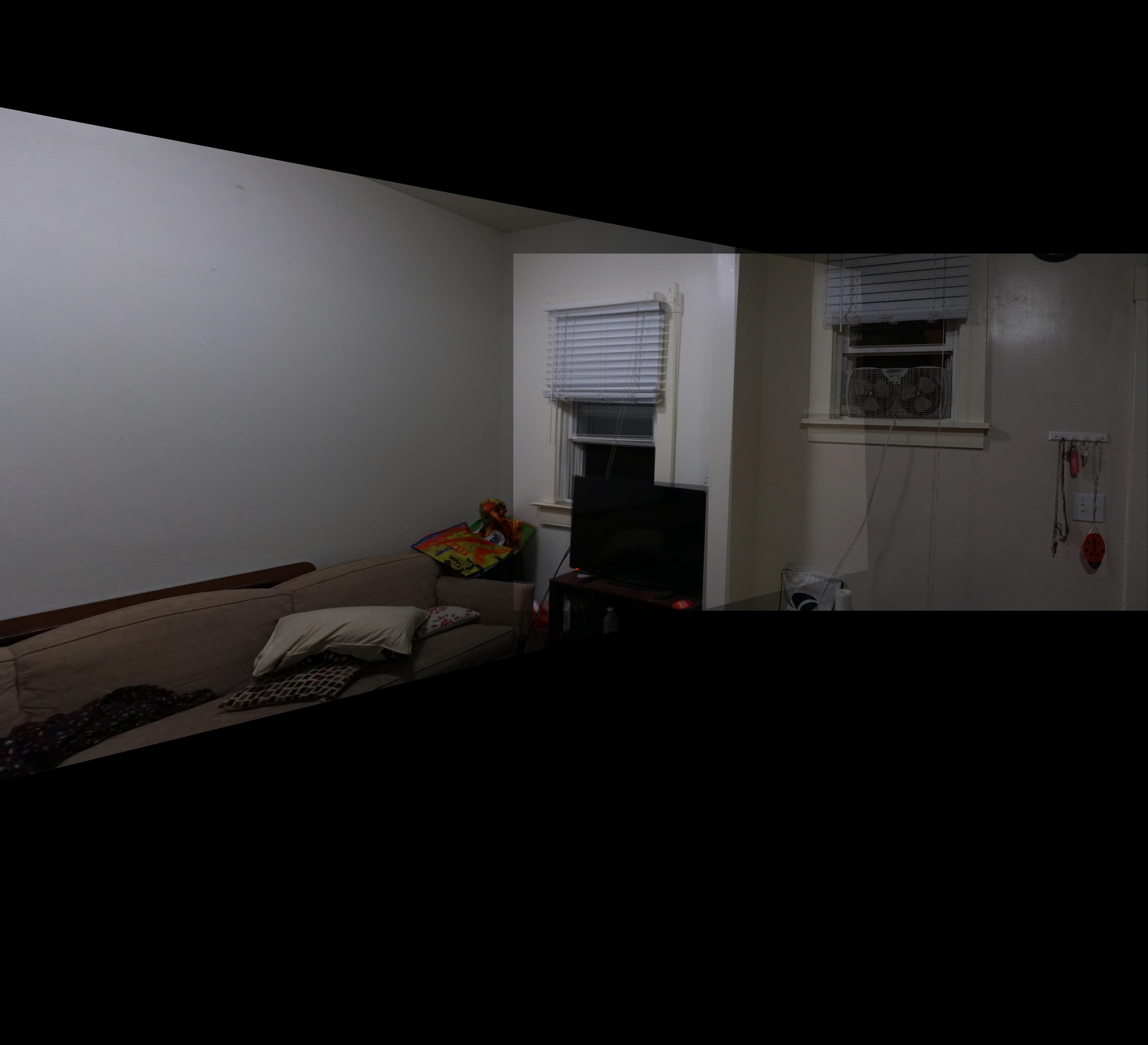
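The least-squares setup described above can be sketched roughly as follows. This is a minimal illustration, not the code used for this project: the function name `compute_homography` and the choice to fix the bottom-right entry of the matrix to 1 are assumptions made for the example.

```python
import numpy as np

def compute_homography(src_pts, dst_pts):
    """Estimate a 3x3 H so that dst ~ H @ src in homogeneous coordinates.

    src_pts, dst_pts: (n, 2) arrays of matching (x, y) points, n >= 4.
    Assumption for this sketch: H[2, 2] is fixed to 1, leaving 8 unknowns.
    """
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    if src.shape != dst.shape or src.shape[0] < 4:
        raise ValueError("need at least 4 matching (x, y) pairs")

    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence pair contributes two linear equations.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.extend([u, v])

    # Least squares handles both the exact (n = 4) and overdetermined case.
    h, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)
```

Clicking more than 4 pairs does not change the code; the extra equations simply average out small errors in the hand-picked points.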

Warp the Images

Once we have the homography, we can apply it to the points of the image we want to warp to see what it would look like on the plane of its partner image. The image looks distorted away from the correspondence points because it is shifted to line up with the corresponding areas of the other image as closely as possible. The pixel values of the warped image are filled in with inverse warping: for each pixel location in the warped output, we apply the inverse of the homography to find the matching location in the unwarped source image. That location generally falls between pixels, so we interpolate from the surrounding source pixels to get the value for the warped image.
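As a rough sketch of inverse warping, the snippet below handles a single-channel image with bilinear interpolation. The function name `inverse_warp`, the fixed output size, and the choice of bilinear interpolation are assumptions for illustration; the project may have used a different interpolation scheme.

```python
import numpy as np

def inverse_warp(src, H, out_shape):
    """Warp a grayscale image (at least 2x2) with homography H.

    H maps source (x, y) to output (x, y). Output pixels that fall
    outside the source image are left at 0.
    """
    src = np.asarray(src, dtype=float)
    src_h, src_w = src.shape
    out_h, out_w = out_shape

    # Homogeneous coordinates of every output pixel.
    xs, ys = np.meshgrid(np.arange(out_w), np.arange(out_h))
    out_pts = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])

    # Pull each output pixel back into the source image.
    sx, sy, sw = np.linalg.inv(H) @ out_pts
    sx, sy = sx / sw, sy / sw

    # Keep only samples that land inside the source image.
    valid = (sx >= 0) & (sx <= src_w - 1) & (sy >= 0) & (sy <= src_h - 1)
    sx, sy = sx[valid], sy[valid]

    # Bilinear interpolation between the four surrounding source pixels.
    x0 = np.clip(np.floor(sx).astype(int), 0, src_w - 2)
    y0 = np.clip(np.floor(sy).astype(int), 0, src_h - 2)
    fx, fy = sx - x0, sy - y0
    top = src[y0, x0] * (1 - fx) + src[y0, x0 + 1] * fx
    bottom = src[y0 + 1, x0] * (1 - fx) + src[y0 + 1, x0 + 1] * fx

    out = np.zeros(out_h * out_w)
    out[valid] = top * (1 - fy) + bottom * fy
    return out.reshape(out_h, out_w)
```

Looping over output pixels rather than source pixels is what guarantees that every pixel of the warped image gets a value, with no holes.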


Image Rectification

Rectification takes advantage of the warping properties of homographies to morph an image into a specific shape or orientation. Instead of taking the second set of correspondence points from another image, we define them ourselves, for example as the corners of a square. The end result is the image warped so that the selected points take on that square shape.
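To make the idea concrete, here is a small sketch that maps four hand-picked corners onto a square. The corner coordinates, the 200-pixel side length, and the helper names `homography_from_4` and `apply_h` are all hypothetical values chosen for the example, not taken from the project.

```python
import numpy as np

def homography_from_4(src_pts, dst_pts):
    """Exact homography from exactly 4 point pairs (H[2, 2] fixed to 1)."""
    A, b = [], []
    for (x, y), (u, v) in zip(src_pts, dst_pts):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b += [u, v]
    h = np.linalg.solve(np.array(A, dtype=float), np.array(b, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)

def apply_h(H, pts):
    """Apply H to (n, 2) points and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    p = H @ np.column_stack([pts, np.ones(len(pts))]).T
    return (p[:2] / p[2]).T

# Hypothetical corners of a tile seen at an angle, clicked in the order
# top-left, top-right, bottom-right, bottom-left.
tile_corners = [(120, 80), (310, 110), (290, 300), (100, 240)]

# The target shape is defined by hand: a square with a chosen side length.
side = 200
square = [(0, 0), (side, 0), (side, side), (0, side)]

H = homography_from_4(tile_corners, square)
rectified_corners = apply_h(H, tile_corners)
```

Warping the whole image with this `H` straightens the tile, and everything else in the image follows the same transformation.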


Mosaicing

Using the techniques outlined above, we can create mosaics. We warp the left image onto the image plane of the right and stitch the two using Laplacian blending. The noticeable brightness of the overlapping portions of the mosaics is probably due to imprecise averaging between the two images.
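One way an overlap can end up too bright is if the two images are combined without dividing by the total weight at each pixel. The sketch below is not the Laplacian blending used for the mosaics; it is a simpler normalized weighted average, shown only to illustrate that normalization step. The function name `blend_average` and the toy 1 x 4 images are assumptions for the example.

```python
import numpy as np

def blend_average(img_a, mask_a, img_b, mask_b):
    """Normalized weighted average of two aligned images on a shared canvas.

    Masks hold per-pixel weights (0 where an image has no data).
    """
    img_a = np.asarray(img_a, dtype=float)
    img_b = np.asarray(img_b, dtype=float)
    w_a = np.asarray(mask_a, dtype=float)
    w_b = np.asarray(mask_b, dtype=float)

    total = w_a + w_b
    weighted = img_a * w_a + img_b * w_b

    # Divide by the total weight only where at least one image has data.
    out = np.zeros_like(weighted)
    np.divide(weighted, total, out=out, where=total > 0)
    return out

# Toy example: two uniformly gray strips that overlap in the middle.
img_a = np.full((1, 4), 0.5)
img_b = np.full((1, 4), 0.5)
mask_a = np.array([[1, 1, 1, 0]])
mask_b = np.array([[0, 1, 1, 1]])

naive_sum = img_a * mask_a + img_b * mask_b   # overlap comes out at 1.0
blended = blend_average(img_a, mask_a, img_b, mask_b)  # 0.5 everywhere
```

Replacing the hard 0/1 masks with weights that fall off toward each image's edge gives a softer seam using the same function.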


Part A Summary

The most interesting thing I learned from this part of the project was how much the information contained within an image can be shifted to give an entirely new perspective. In particular, rectifying part of an image to be "straight" not only straightens out the specified area, but also the entire image. On top of that, the result does not even look that warped/stretched!