Khoa Hoang

Overview.

This project continues Project 6A, in which images were stitched together by defining feature points manually before computing the homography matrix and warping.

In this second half, features are detected automatically with the Harris algorithm and then selected with Adaptive Non-Maximal Suppression and RANSAC.

The steps include:

1. Choose points by Harris Interest Points Detector

2. Adaptive Non-Maximal Suppression

3. Feature Descriptor Extraction

4. Feature Matching

5. RANSAC and then warping

The descriptions in this report refer to the paper "Multi-Image Matching using Multi-Scale Oriented Patches".

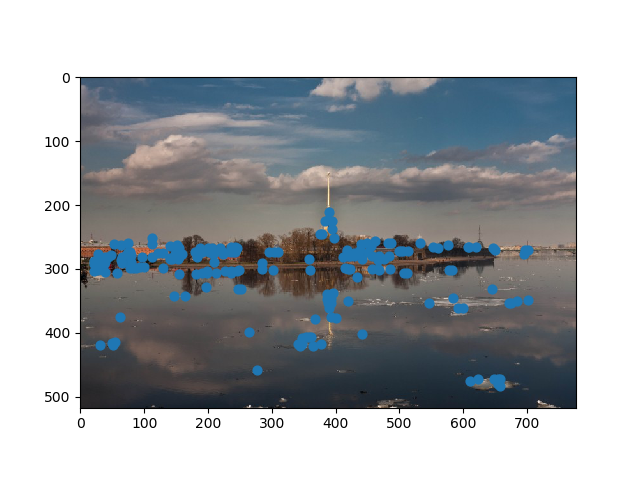

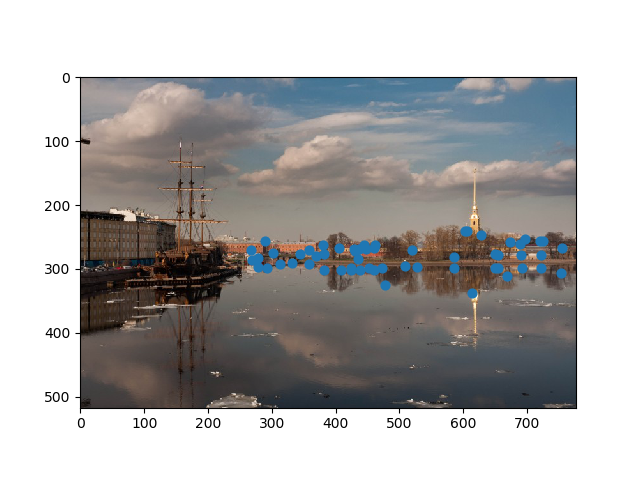

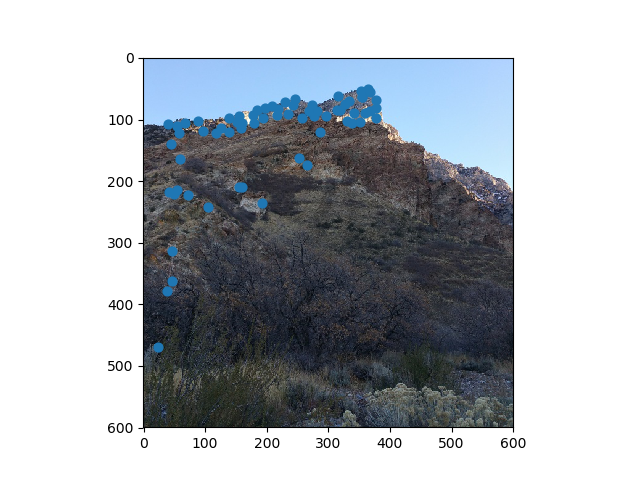

Harris Corner Detection

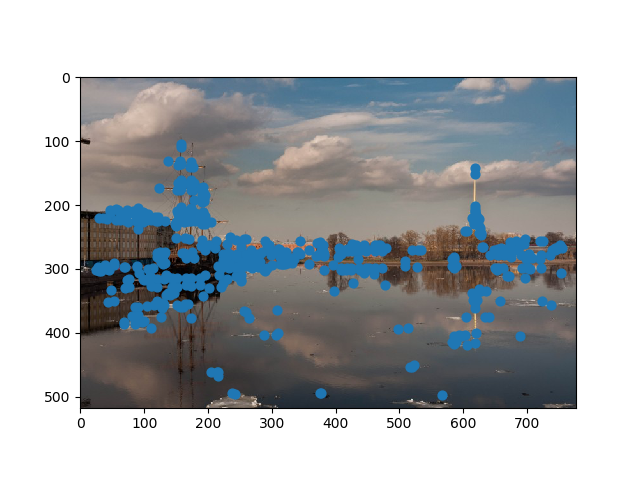

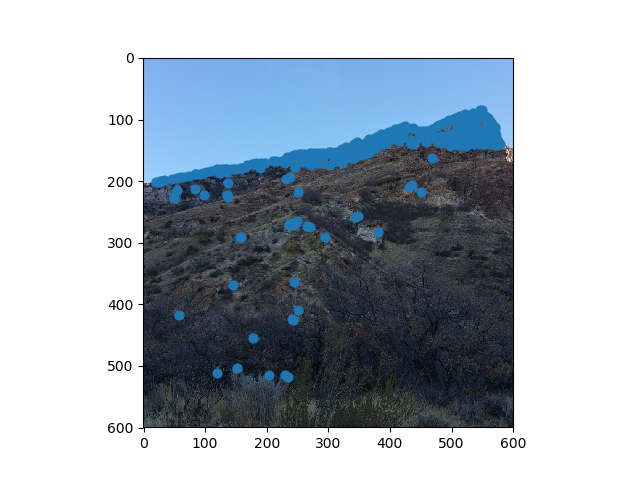

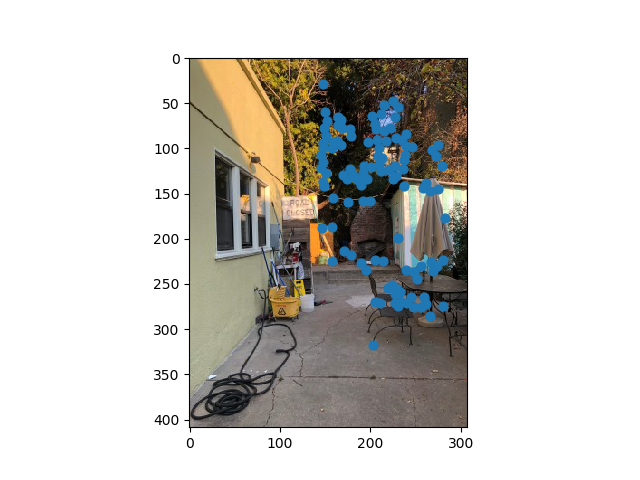

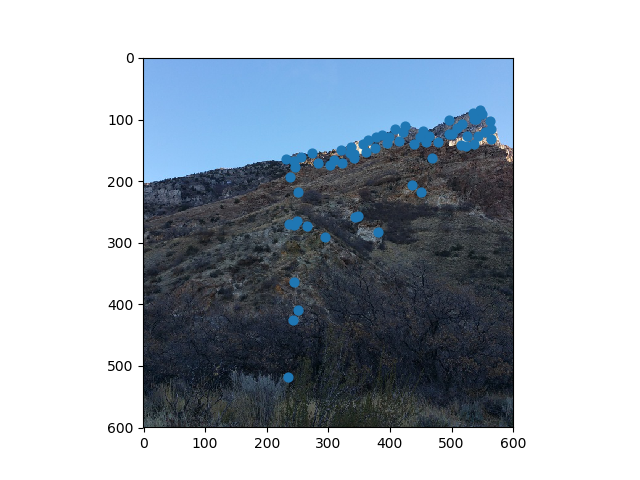

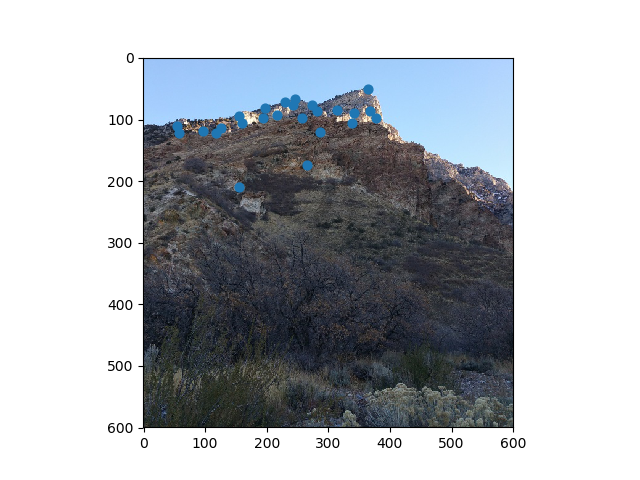

This first step is done by calling harris.get_harris_corners(image). The algorithm picks the most prominent corner points in a given image.

More specifically, the interest points we use are multi-scale Harris corners. For each input image I(x, y) we form a Gaussian image pyramid Pl(x, y) using a subsampling rate s = 2 and pyramid smoothing width σp = 1.0. Interest points are extracted from each level of the pyramid.
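As a rough illustration of the computation behind this step, the sketch below builds a Gaussian pyramid and computes a Harris-style corner response at one level using only NumPy. It is not the code behind harris.get_harris_corners; the function names, the scales `sigma_d` and `sigma_i`, and the determinant-over-trace corner measure are assumptions chosen for illustration.

```python
import numpy as np

def gaussian_kernel(sigma):
    # 1-D Gaussian truncated at about 3 sigma and normalised to sum to 1.
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()

def smooth(img, sigma):
    # Separable Gaussian blur: filter the rows, then the columns (zero padding).
    k = gaussian_kernel(sigma)
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, rows)

def gaussian_pyramid(img, levels=3, sigma_p=1.0):
    # Each level is the previous one blurred with sigma_p and subsampled by 2.
    pyramid = [img.astype(float)]
    for _ in range(levels - 1):
        pyramid.append(smooth(pyramid[-1], sigma_p)[::2, ::2])
    return pyramid

def harris_response(img, sigma_d=1.0, sigma_i=1.5, eps=1e-8):
    # Gradients of the image smoothed at the (assumed) derivative scale sigma_d.
    gy, gx = np.gradient(smooth(img.astype(float), sigma_d))
    # Entries of the 2x2 structure matrix, averaged at the integration scale sigma_i.
    a = smooth(gx * gx, sigma_i)
    b = smooth(gx * gy, sigma_i)
    c = smooth(gy * gy, sigma_i)
    # Corner strength as determinant over trace: large only when both
    # eigenvalues of the structure matrix are large.
    return (a * c - b * b) / (a + c + eps)
```

On a synthetic image containing a bright square, the response from this sketch is high at the square's corners and close to zero along its straight edges, which is the behaviour a corner detector needs.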

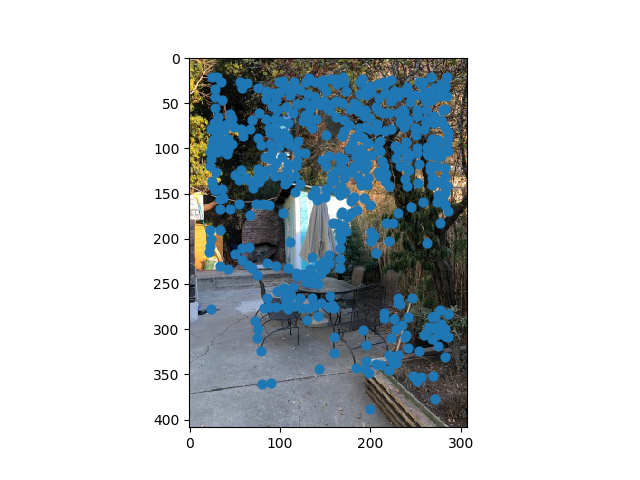

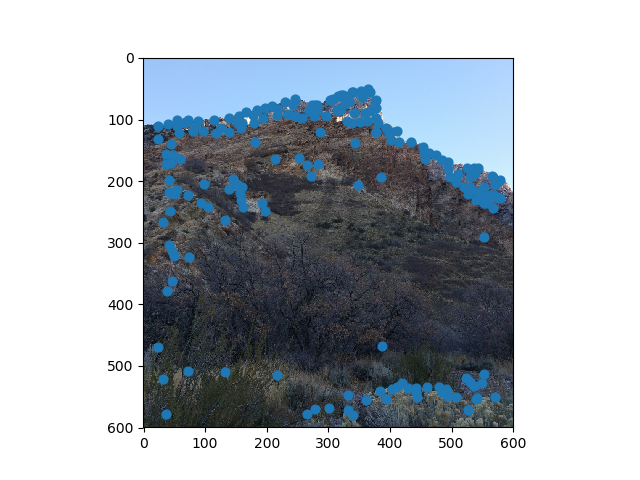

Adaptive Non-Maximal Suppression

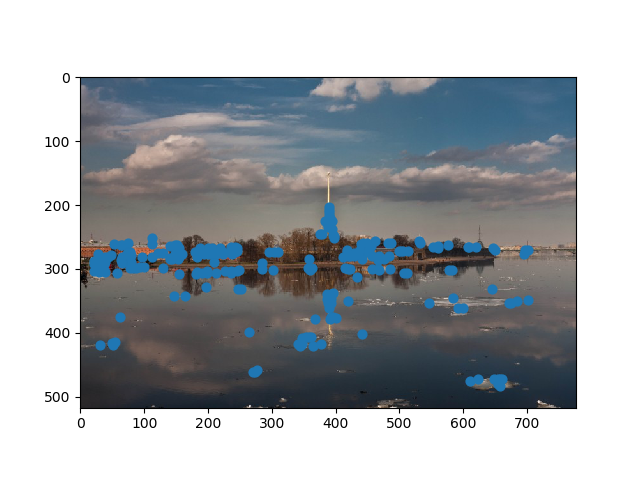

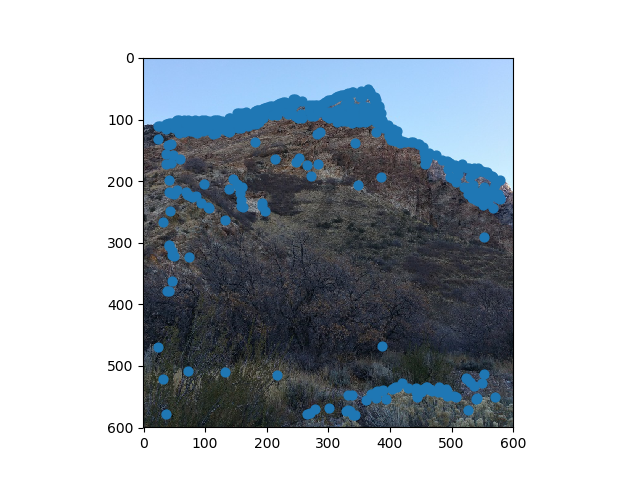

Since the computational cost of matching is superlinear in the number of interest points, it is desirable to restrict the maximum number of interest points extracted from each image. At the same time, it is important that interest points are spatially well distributed over the image, since for image stitching applications, the area of overlap between a pair of images may be small. To satisfy these requirements, an adaptive non-maximal suppression (ANMS) strategy is used to select a fixed number of interest points from each image.

With ANMS, every point is assigned a suppression radius: the distance to the nearest point with a significantly stronger corner response. The 500 points with the largest radii are then selected from the original Harris detections.
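The selection described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the code used for the results shown here; the function name `anms` and the robustness factor `c_robust = 0.9` are assumptions, and the pairwise distance matrix uses O(n²) memory, so it only suits a few thousand points.

```python
import numpy as np

def anms(coords, strengths, num_keep=500, c_robust=0.9):
    """Return indices of the num_keep points with the largest suppression radii."""
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Pairwise squared distances between all points, shape (n, n).
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = (diff ** 2).sum(axis=2)
    # stronger[i, j] is True when point j is sufficiently stronger than point i.
    stronger = strengths[:, None] < c_robust * strengths[None, :]
    # Squared suppression radius: distance to the nearest sufficiently stronger
    # point, or infinity when no such point exists (the global maximum).
    radii2 = np.where(stronger, dist2, np.inf).min(axis=1)
    # Keep the points with the largest radii first.
    order = np.argsort(-radii2, kind="stable")
    return order[:num_keep]
```

A weak but isolated corner gets a large radius and survives, while a stronger corner sitting next to an even stronger one is suppressed. That is what spreads the kept points across the image.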

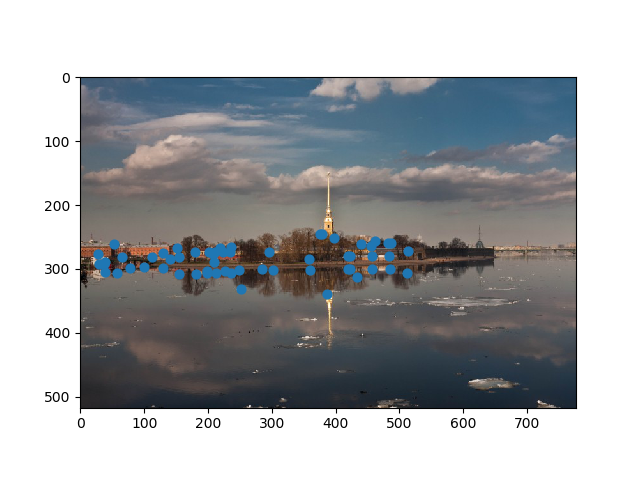

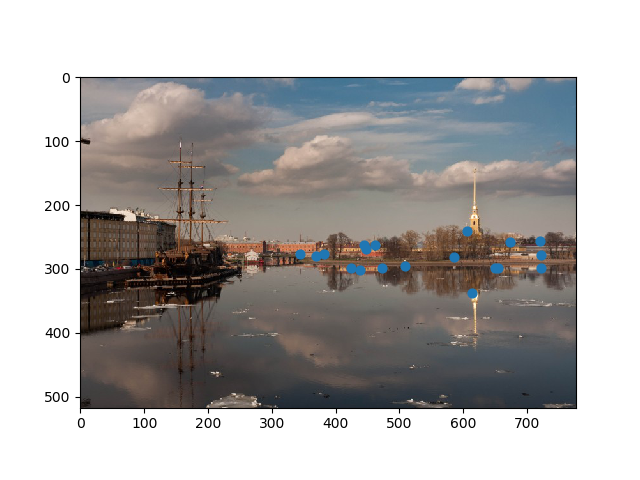

Feature Descriptor Extraction and Feature Matching

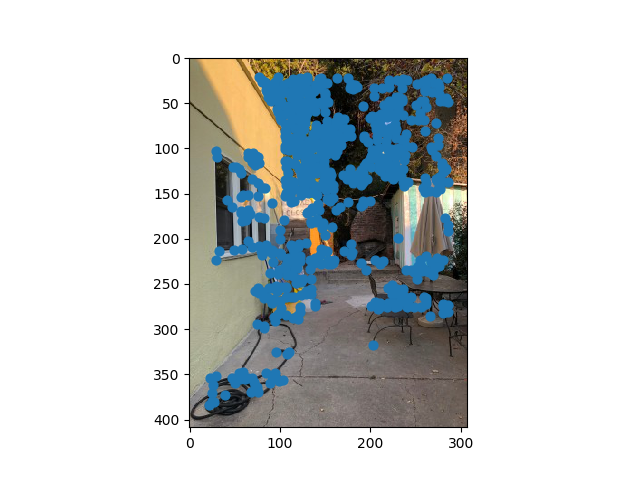

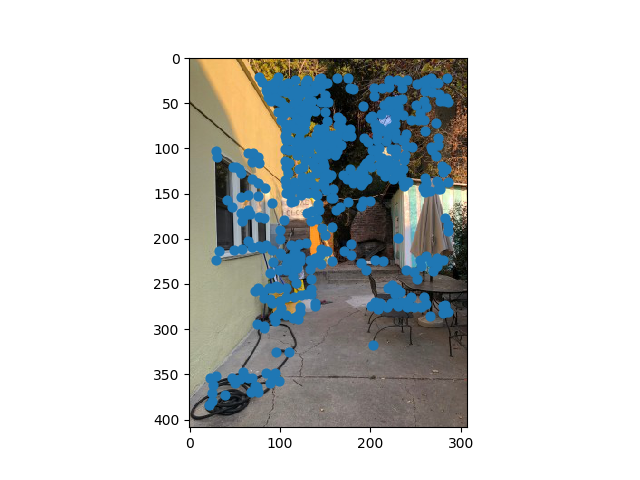

Once we have determined where to place our interest points, we need to extract a description of the local image structure that will support reliable and efficient matching of features across images.

Given an oriented interest point, we sample an 8 × 8 patch of pixels around the sub-pixel location of the interest point, using a spacing of s = 5 pixels between samples. To avoid aliasing, the sampling is performed at a higher pyramid level, such that the sampling rate is approximately once per pixel.

After sampling, the descriptor vector is normalised so that the mean is 0 and the standard deviation is 1. This makes the features invariant to affine changes in intensity (bias and gain). Finally, we perform the Haar wavelet transform on the 8 × 8 descriptor patch di to form a 64 dimensional descriptor vector containing the wavelet coefficients ci. Due to the orthogonality property of Haar wavelets, Euclidean distances between features are preserved under this transformation.
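To make the sampling and normalisation concrete, here is a simplified sketch. It assumes axis-aligned patches (no orientation), integer pixel locations, and no Haar transform, and it averages each 5 × 5 block of a 40 × 40 window as a crude stand-in for sampling from a blurred, coarser pyramid level. The function name `extract_descriptor` is hypothetical.

```python
import numpy as np

def extract_descriptor(img, row, col, patch=8, spacing=5):
    """Return a normalised patch*patch descriptor vector, or None near the border."""
    size = patch * spacing            # 40 x 40 pixel window for the defaults
    half = size // 2
    top, left = row - half, col - half
    if top < 0 or left < 0 or top + size > img.shape[0] or left + size > img.shape[1]:
        return None                   # window does not fit inside the image
    window = img[top:top + size, left:left + size].astype(float)
    # Average every spacing x spacing block to get one low-frequency sample
    # per 5 pixels (assumed substitute for sampling a coarser pyramid level).
    samples = window.reshape(patch, spacing, patch, spacing).mean(axis=(1, 3))
    vec = samples.ravel()
    std = vec.std()
    if std < 1e-8:
        return None                   # flat patch carries no usable structure
    # Bias/gain normalisation: zero mean, unit standard deviation.
    return (vec - vec.mean()) / std
```

Because of the final normalisation, an image `img` and a brightened, higher-contrast copy such as `3 * img + 7` give the same descriptor at the same location.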

For feature matching, given Multi-scale Oriented Patches extracted from all n images, the goal of the matching stage is to find geometrically consistent feature matches between all images. This proceeds as follows. First, we find a set of candidate feature matches using an approximate nearest neighbour algorithm. Then we refine matches using an outlier rejection procedure based on the noise statistics of correct/incorrect matches.

As the results show, significantly fewer points remain, but they are more accurate.
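The candidate matching and outlier rejection steps can be sketched as a brute-force nearest-neighbour search followed by a ratio test between the best and second-best match. This is an assumed simplification: it uses exact rather than approximate nearest neighbours, and the function name `match_features` and the threshold `ratio = 0.6` are illustrative, not values taken from this project.

```python
import numpy as np

def match_features(desc1, desc2, ratio=0.6):
    """Return an (m, 2) array of index pairs (i in desc1, j in desc2)."""
    desc1 = np.asarray(desc1, dtype=float)
    desc2 = np.asarray(desc2, dtype=float)
    if len(desc2) < 2:
        raise ValueError("need at least two descriptors in desc2 for the ratio test")
    # Euclidean distance between every pair of descriptors, shape (n1, n2).
    diff = desc1[:, None, :] - desc2[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    # Indices of the nearest and second-nearest neighbour of each desc1 row.
    nearest = np.argsort(dist, axis=1)[:, :2]
    rows = np.arange(len(desc1))
    best, second = nearest[:, 0], nearest[:, 1]
    # Keep a match only when the best distance is clearly smaller than the
    # second best; ambiguous matches are likely to be wrong.
    keep = dist[rows, best] < ratio * dist[rows, second]
    return np.column_stack([rows[keep], best[keep]])
```

A descriptor that lies roughly halfway between two candidates fails the ratio test and is dropped, which is why fewer but more reliable matches remain.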

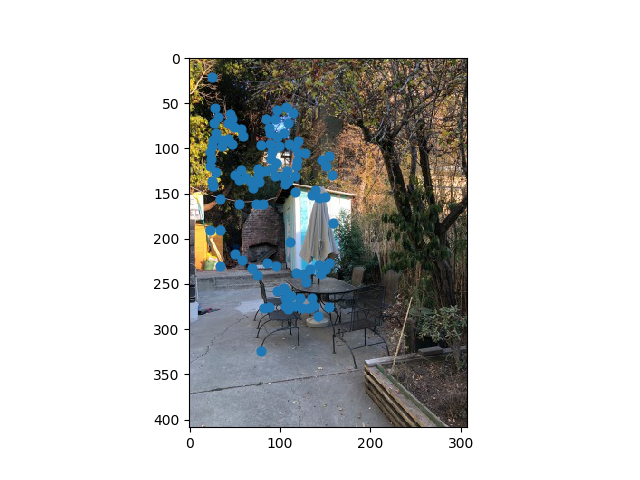

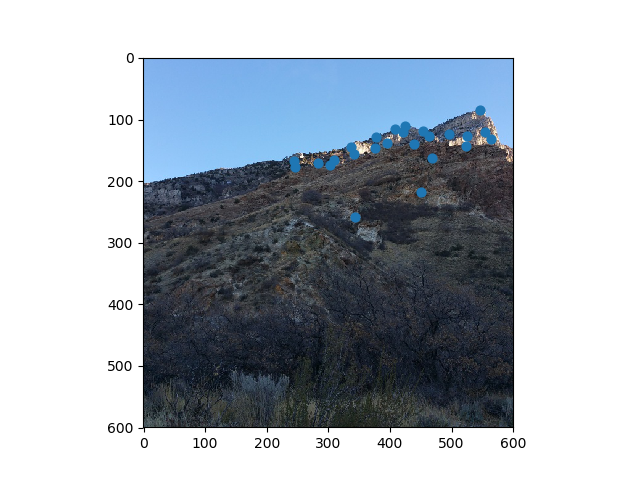

RANSAC

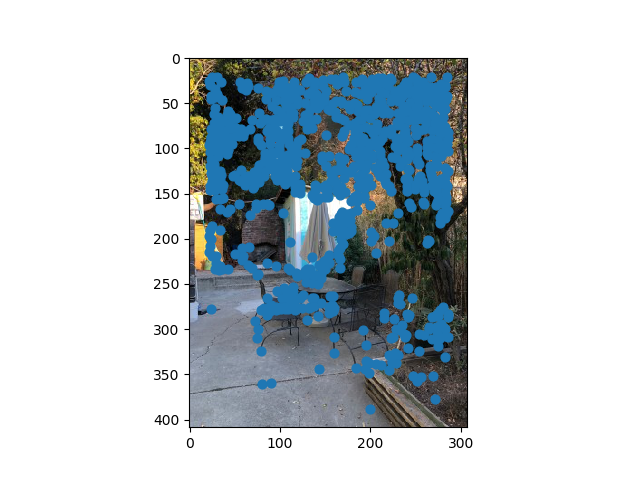

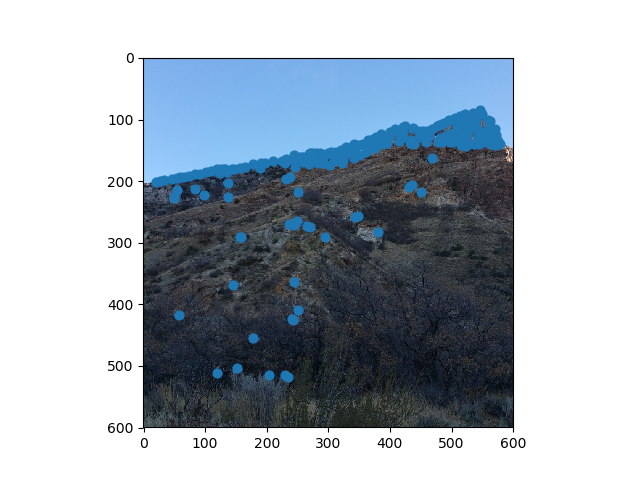

Random sample consensus (RANSAC) is an iterative method to estimate parameters of a mathematical model from a set of observed data that contains outliers, when outliers are to be accorded no influence on the values of the estimates. Therefore, it can also be interpreted as an outlier detection method. It is a non-deterministic algorithm in the sense that it produces a reasonable result only with a certain probability, with this probability increasing as more iterations are allowed.

In this last step, the matches are refined further so that every kept point in one image has a unique, geometrically consistent match in the other. The largest set of inliers found in this process is kept and used to compute the homography matrix.
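A minimal sketch of this step, assuming matched point arrays `src` and `dst` of shape (n, 2), is shown below. The helper names, the iteration count, and the 2-pixel inlier tolerance are assumptions for illustration, and the homography is fitted with a plain, unnormalised direct linear transform.

```python
import numpy as np

def fit_homography(src, dst):
    # Direct linear transform: two equations per correspondence, solved via SVD.
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)       # null vector, defined up to scale

def project(h, pts):
    # Apply a homography to (n, 2) points using homogeneous coordinates.
    homog = np.column_stack([pts, np.ones(len(pts))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, iters=1000, tol=2.0, seed=0):
    rng = np.random.default_rng(seed)
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        # Fit an exact homography to 4 random correspondences.
        sample = rng.choice(len(src), size=4, replace=False)
        h = fit_homography(src[sample], dst[sample])
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(h, src) - dst, axis=1)
        inliers = err < tol           # NaN errors compare False, so they are outliers
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 4:
        raise ValueError("RANSAC found fewer than 4 inliers")
    # Refit on the largest inlier set and fix the scale so that h[2, 2] = 1.
    h = fit_homography(src[best], dst[best])
    return h / h[2, 2], best
```

The returned boolean mask marks which matches were kept, and the refitted matrix is the one that would be passed on to the warping stage.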

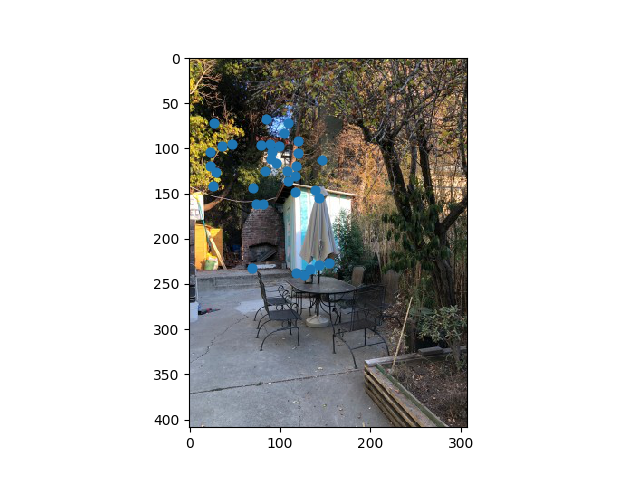

Result without blending

The left column shows the manually stitched images, while the right column shows the ones produced automatically. The ghosting effect is eliminated because we now have more points, and they are more accurate than the hand-chosen ones used before.

What I have learnt

The first time I saw the panorama feature on a cellphone, I was amazed at how efficiently it stitches images in real time and curious about how it works. I'm glad I had this project, as it answered most of my questions about panoramic photography. With clever math we can develop efficient algorithms that choose points more accurately than any human being, and the results are astounding. I am also happy that I discovered many imaging libraries while completing this project.