Overview

A single image is inherently limited in how much of a scene it can capture; however, when we mosaic, or stitch, many images together, we can create a larger field of view. The challenge is: how do we stitch the images together so that they align well?

It is not enough to simply overlap and blend two images, even though they share features. We will use projective transformations to warp one image into the same plane as the other, provided the two images share the same center of projection (i.e., the camera did not translate between shots). Finally, how do we blend the images into one cohesive image? We will attempt to create our own panoramas in this project.

Starting Images

Homography, Warping, and Image Rectification

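We compute the homography between two images using the equation p' = Hp, where p and p' are corresponding points in homogeneous coordinates, (x, y, 1) and (wx', wy', w), and H is a 3x3 matrix with 8 degrees of freedom (we fix the lower-right entry, the scaling factor, to 1). Because the equation only holds up to scale, the warped point is recovered by dividing by w.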
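Writing the entries of H as h_1 through h_8 and substituting w = h_7 x + h_8 y + 1 gives two equations per correspondence that are linear in the unknowns. This is the standard setup for solving p' = Hp, sketched here for reference:

$$
\begin{bmatrix} w x' \\ w y' \\ w \end{bmatrix}
=
\begin{bmatrix} h_1 & h_2 & h_3 \\ h_4 & h_5 & h_6 \\ h_7 & h_8 & 1 \end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
\quad\Longrightarrow\quad
\begin{aligned}
h_1 x + h_2 y + h_3 - h_7 x x' - h_8 y x' &= x' \\
h_4 x + h_5 y + h_6 - h_7 x y' - h_8 y y' &= y'
\end{aligned}
$$

Stacking these two rows for each of n correspondences gives a 2n x 8 linear system in (h_1, ..., h_8).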

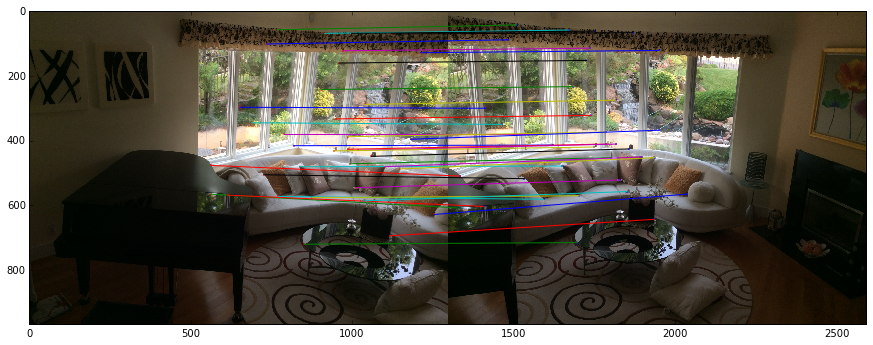

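We first click on corresponding points in the two photos to get these lists of correspondences. We need at least 4 (x, y) correspondences to solve for the 8 degrees of freedom, since each pair contributes two equations; with more than 4, the system is overdetermined and can be solved with least squares. From these correspondences, we compute the transformation matrix H between the two images.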
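A minimal NumPy sketch of this step, assuming the clicked points are stored as (n, 2) arrays of (x, y) pixel coordinates. The function names `compute_homography` and `apply_homography` are hypothetical, and this is one common way to set up the solve, not necessarily the exact code used for the results here:

```python
import numpy as np

def compute_homography(pts1, pts2):
    """Estimate H such that pts2 ~ H @ pts1 (homogeneous), with H[2, 2] fixed to 1.

    pts1, pts2: (n, 2) arrays of (x, y) correspondences, n >= 4.
    With n > 4 the overdetermined system is solved in the least-squares sense.
    """
    pts1 = np.asarray(pts1, dtype=float)
    pts2 = np.asarray(pts2, dtype=float)
    if pts1.shape != pts2.shape or pts1.shape[0] < 4:
        raise ValueError("need at least 4 matching (x, y) pairs")
    rows, rhs = [], []
    for (x, y), (xp, yp) in zip(pts1, pts2):
        # Two linear equations per pair, from x' = (h1 x + h2 y + h3) / (h7 x + h8 y + 1)
        # and y' = (h4 x + h5 y + h6) / (h7 x + h8 y + 1) with the denominator cleared.
        rows.append([x, y, 1, 0, 0, 0, -x * xp, -y * xp])
        rows.append([0, 0, 0, x, y, 1, -x * yp, -y * yp])
        rhs.extend([xp, yp])
    h, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)

def apply_homography(H, pts):
    """Map (n, 2) points through H and divide out the homogeneous coordinate w."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

# Usage: a unit square mapped to a square twice as large gives H = diag(2, 2, 1).
H = compute_homography([[0, 0], [1, 0], [1, 1], [0, 1]],
                       [[0, 0], [2, 0], [2, 2], [0, 2]])
```

Passing more than four clicked pairs lets the least-squares solve average out some of the clicking error instead of fitting any single point exactly.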

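Once we compute the transformation, we can warp all of image1 to align with image2 (or vice versa). Since H defines the transformation, we apply it to transform our image into the desired shape, preferably with inverse warping: for each pixel of the output, we apply the inverse of H to find where it came from in the source image and sample there. This avoids the holes that can appear when each source pixel is pushed forward through H.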
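A minimal sketch of inverse warping with nearest-neighbor sampling, assuming H maps source (x, y) = (column, row) coordinates into the output frame and that the output canvas size is already known. The name `inverse_warp` is hypothetical; a smoother result would use bilinear interpolation (for example via `scipy.ndimage.map_coordinates`) instead of rounding:

```python
import numpy as np

def inverse_warp(img, H, out_shape):
    """Warp img by homography H into an output canvas of shape out_shape (rows, cols).

    For every output pixel (x, y), map it back through H^-1 to the source image
    and copy the nearest source pixel. Output pixels that map outside img stay 0.
    Works for grayscale (h, w) and color (h, w, c) arrays.
    """
    out_h, out_w = out_shape[:2]
    ys, xs = np.mgrid[0:out_h, 0:out_w]
    coords = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])  # 3 x N homogeneous
    src = np.linalg.inv(H) @ coords
    src_x = np.rint(src[0] / src[2]).astype(int)  # divide out w, round to nearest pixel
    src_y = np.rint(src[1] / src[2]).astype(int)
    valid = (src_x >= 0) & (src_x < img.shape[1]) & (src_y >= 0) & (src_y < img.shape[0])
    out = np.zeros((out_h, out_w) + img.shape[2:], dtype=img.dtype)
    out_flat = out.reshape(out_h * out_w, *img.shape[2:])  # a view into out
    out_flat[valid] = img[src_y[valid], src_x[valid]]
    return out

# Usage: shifting a small image one pixel to the right.
img = np.arange(12).reshape(3, 4)
shift_right = np.array([[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
shifted = inverse_warp(img, shift_right, img.shape)
```

Because every output pixel is visited exactly once, the result has no unfilled gaps inside the warped region, which is the main reason to prefer inverse over forward warping.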

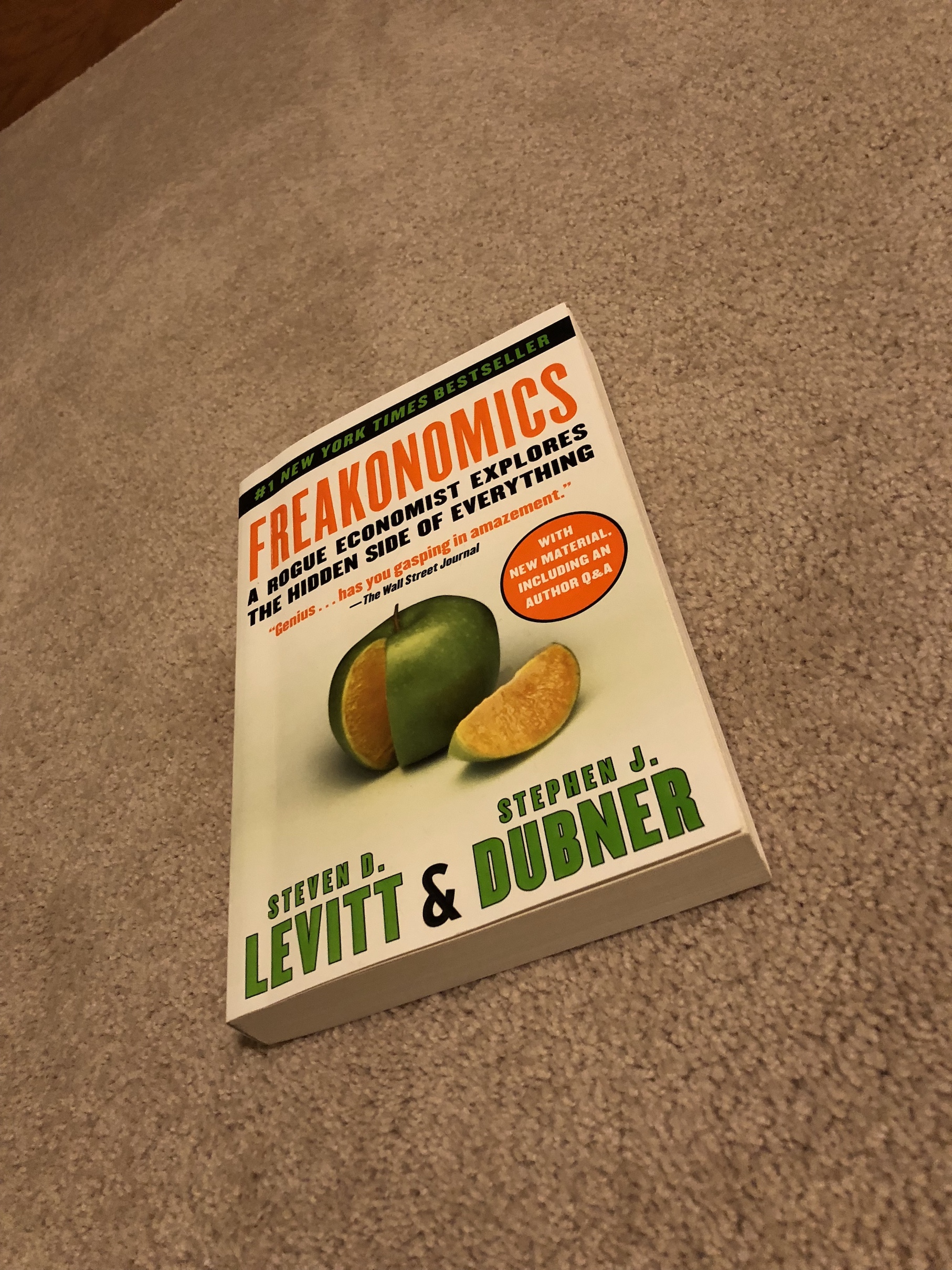

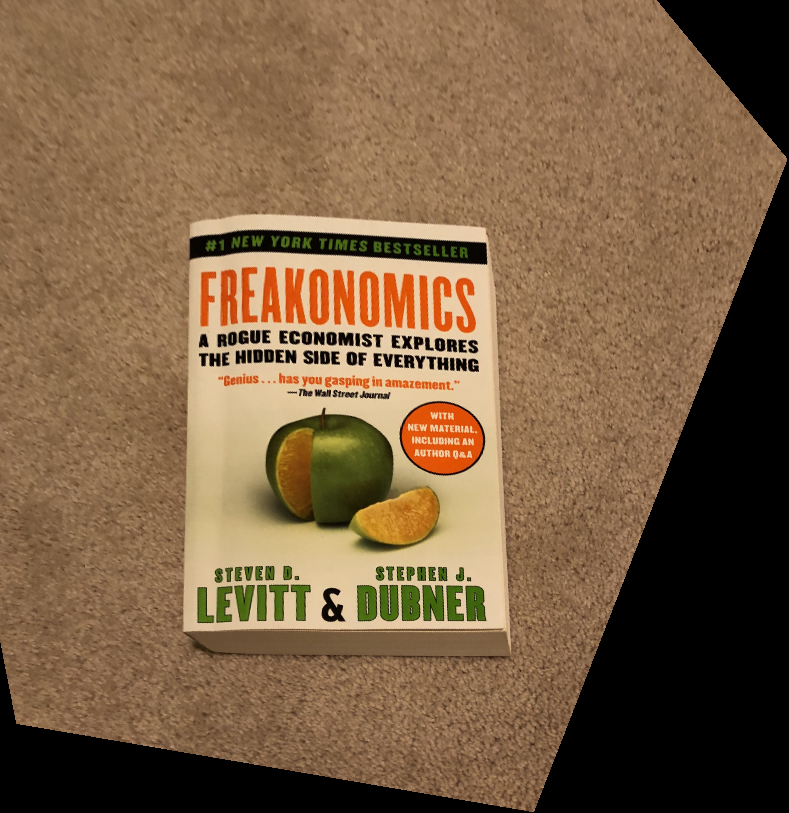

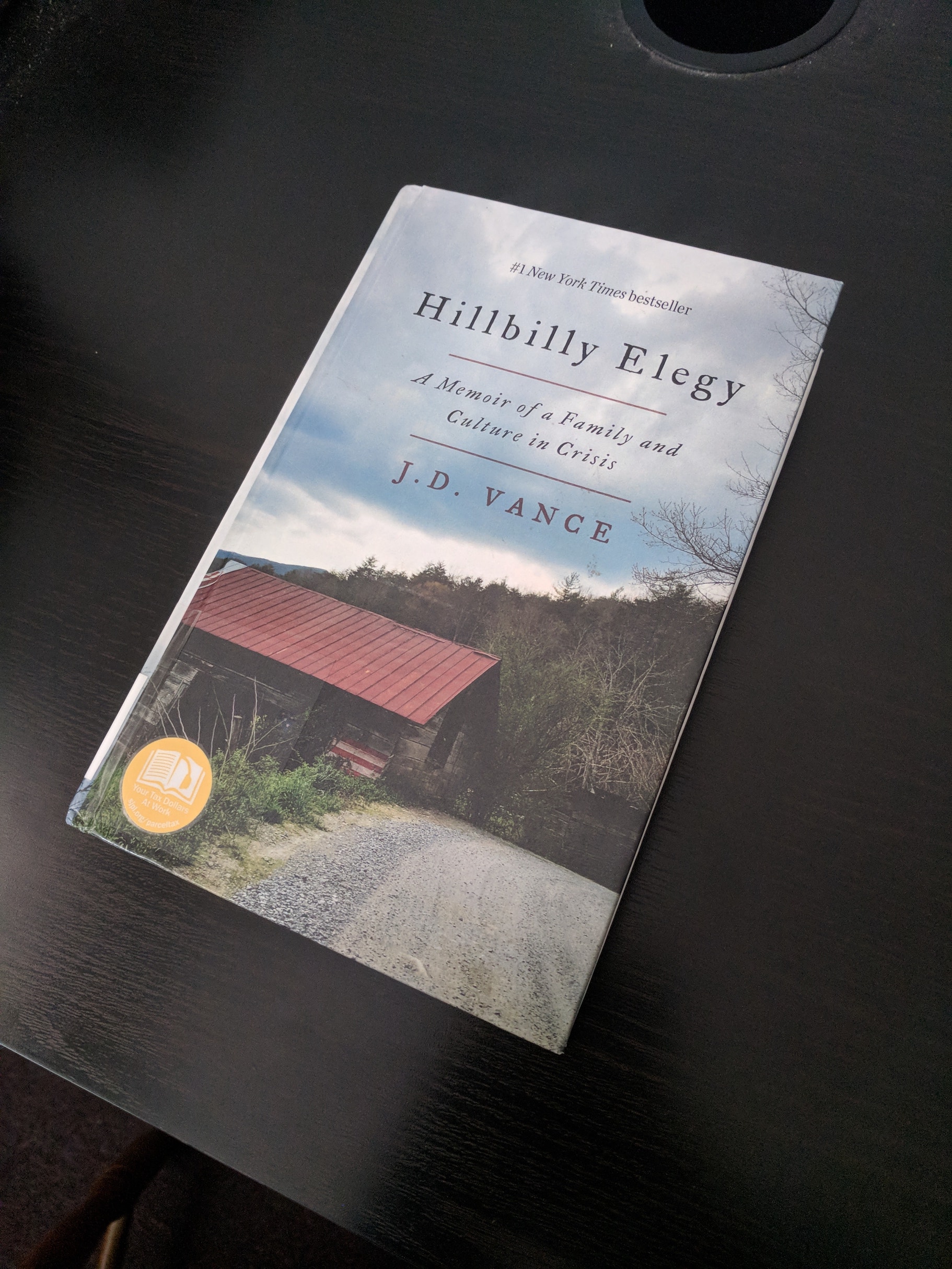

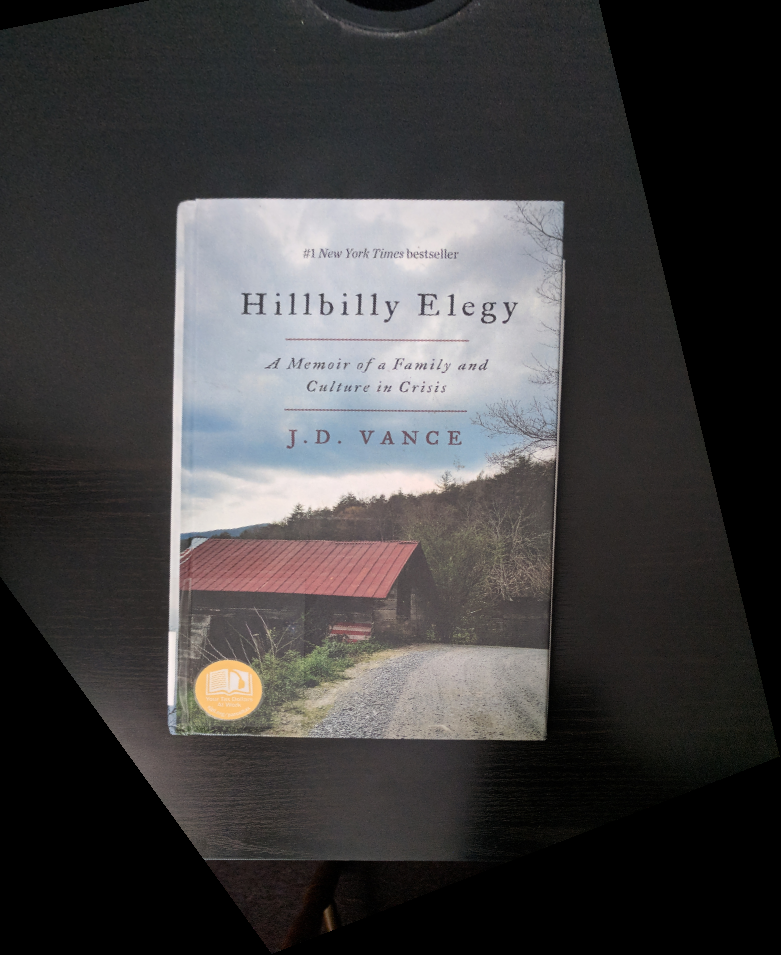

Below are two examples of image rectification. I photographed two books at an angle, so that they did not appear rectangular. Next, I selected the four corners of each book and manually set their corresponding points to be the corners of an upright rectangle. Using the homography computed from these correspondences, I warped the original image into that rectangle. The results show each book as if viewed from directly above (like the PDF scanner apps on our phones!).
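For rectification, the target points are simply the corners of a rectangle, and with exactly four correspondences the 8x8 system is square, so it can be solved directly rather than with least squares. A minimal sketch; the function name `rectify_homography`, the corner ordering, the output size, and the sample coordinates are all hypothetical and are not the values used for the books above:

```python
import numpy as np

def rectify_homography(corners, width, height):
    """Homography sending 4 clicked corners to an upright width x height rectangle.

    corners: (4, 2) array of (x, y) ordered top-left, top-right, bottom-right,
    bottom-left (an assumed convention for this sketch).
    """
    target = np.array([[0, 0], [width - 1, 0], [width - 1, height - 1], [0, height - 1]],
                      dtype=float)
    A, b = [], []
    for (x, y), (xp, yp) in zip(np.asarray(corners, dtype=float), target):
        A.append([x, y, 1, 0, 0, 0, -x * xp, -y * xp])
        A.append([0, 0, 0, x, y, 1, -x * yp, -y * yp])
        b.extend([xp, yp])
    h = np.linalg.solve(np.array(A), np.array(b))  # exactly determined: 8 equations, 8 unknowns
    return np.append(h, 1.0).reshape(3, 3)

# Hypothetical clicked corners of a tilted book (TL, TR, BR, BL).
corners = np.array([[120, 80], [410, 110], [430, 520], [90, 470]], dtype=float)
H = rectify_homography(corners, width=300, height=400)
```

The resulting H can then be applied with inverse warping into a 400-row by 300-column output to produce the top-down view.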

Creating Mosaics

For each pair of images above, I first padded the images with np.pad so they matched the size of the final output image. I then clicked on correspondences, computed the projective transformation from them, and warped one image to align with the other.
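One way to decide how large the padded output needs to be is to push the source image's four corners through H and take their bounding box together with the reference image. A minimal sketch, assuming H maps source (x, y) pixels into the reference image's frame; the function name `warped_canvas` and its return values are hypothetical, and it computes a translation rather than calling np.pad:

```python
import numpy as np

def warped_canvas(H, src_shape, ref_shape):
    """Size a canvas that holds both the warped source image and the reference image.

    Returns (T @ H, canvas_shape, offset): T shifts everything into non-negative
    pixel coordinates, and offset = (dx, dy) is where the reference image's
    top-left corner lands on the canvas.
    """
    h, w = src_shape[:2]
    corners = np.array([[0, 0, 1], [w - 1, 0, 1], [w - 1, h - 1, 1], [0, h - 1, 1]],
                       dtype=float).T
    warped = H @ corners
    warped = warped[:2] / warped[2]  # divide out w for each corner
    rh, rw = ref_shape[:2]
    xs = np.concatenate([warped[0], [0, rw - 1]])  # include the reference image extent
    ys = np.concatenate([warped[1], [0, rh - 1]])
    x_min, y_min = np.floor(xs.min()), np.floor(ys.min())
    x_max, y_max = np.ceil(xs.max()), np.ceil(ys.max())
    T = np.array([[1.0, 0.0, -x_min], [0.0, 1.0, -y_min], [0.0, 0.0, 1.0]])
    canvas_shape = (int(y_max - y_min) + 1, int(x_max - x_min) + 1)
    offset = (int(-x_min), int(-y_min))
    return T @ H, canvas_shape, offset

# Usage: a source that H moves 50 px to the left of a same-sized reference image.
H_left = np.array([[1.0, 0.0, -50.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
TH, canvas_shape, offset = warped_canvas(H_left, (100, 200), (100, 200))
```

Pasting the reference image at `offset` on this canvas plays the same role as padding it with np.pad by (dy, dx) on its top and left edges.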

Then, to blend the two images without strong visible edges, I used Laplacian blending (2-band/3-band) from proj3. A linear mask specifies how much of each image contributes in each region; I used this mask together with Gaussian and Laplacian stacks to blend the two images smoothly.
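A minimal NumPy-only sketch of this kind of blend for single-channel images (color images would be blended one channel at a time). The helper names, the ramp position, `sigma`, and the use of the same blur at every level are assumptions of the sketch, not the proj3 code; `levels=2` and `levels=3` correspond to 2-band and 3-band blending:

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D array, with edges handled by replicate padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def linear_mask(shape, x_start, x_end):
    """Weight for image a: 1 left of x_start, 0 right of x_end, linear ramp in between."""
    cols = np.arange(shape[1], dtype=float)
    ramp = np.clip((x_end - cols) / (x_end - x_start), 0.0, 1.0)
    return np.tile(ramp, (shape[0], 1))

def laplacian_stack_blend(a, b, mask, levels=3, sigma=2.0):
    """Blend 2-D images a and b band by band; mask is the weight given to a."""
    ga, gb, gm = a.astype(float), b.astype(float), mask.astype(float)
    out = np.zeros_like(ga)
    for level in range(levels):
        if level == levels - 1:
            band_a, band_b = ga, gb  # remaining low-pass residual
        else:
            blur_a, blur_b = gaussian_blur(ga, sigma), gaussian_blur(gb, sigma)
            band_a, band_b = ga - blur_a, gb - blur_b  # Laplacian (band-pass) layer
        out += gm * band_a + (1.0 - gm) * band_b
        if level < levels - 1:
            # Move one level down the Gaussian stacks; coarser bands get a smoother seam.
            ga, gb, gm = blur_a, blur_b, gaussian_blur(gm, sigma)
    return out

# Usage with random stand-in images and a ramp across columns 10..20.
rng = np.random.default_rng(0)
a, b = rng.random((20, 30)), rng.random((20, 30))
mask = linear_mask(a.shape, 10, 20)
blended = laplacian_stack_blend(a, b, mask, levels=3)
```

Since the bands telescope back to the original image, blending an image with itself returns it unchanged; only the mixing across the seam differs between frequency bands.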

Here are the resulting cropped mosaics:

Some seam artifacts remain, which I believe come from two main sources: a shift in the center of projection (COP) between shots and the quality of the correspondences.

Manually clicking on correspondences with the ginput tool is imprecise, and when the images contain a lot of fine detail to match up, even small errors can be quite visible.
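When more than four pairs are clicked, a least-squares H will not pass exactly through every point, and the leftover per-point distance gives a rough way to spot a sloppy click. A minimal sketch, assuming H maps image-1 (x, y) points to image-2 points and that both point lists are (n, 2) arrays; the function name `reprojection_errors` is hypothetical:

```python
import numpy as np

def reprojection_errors(H, pts1, pts2):
    """Per-correspondence distance in pixels between H-mapped pts1 and clicked pts2."""
    pts1 = np.asarray(pts1, dtype=float)
    homog = np.hstack([pts1, np.ones((len(pts1), 1))]) @ H.T
    mapped = homog[:, :2] / homog[:, 2:3]  # divide out w
    return np.linalg.norm(mapped - np.asarray(pts2, dtype=float), axis=1)

# Usage: the second "click" is off by (3, 4) pixels from where H predicts it.
errors = reprojection_errors(np.eye(3), [[0, 0], [10, 10]], [[0, 0], [13, 14]])
```

Pairs with noticeably larger errors than the rest are candidates to re-click before recomputing H.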

Summary

I think this part is pretty cool and makes me appreciate the panorama tool on my phone! I have definitely shifted the COP in many panoramas taken on my phone, and they still turn out looking great. Image rectification and homographies are cool ideas, especially in practice, and being able to "shift" the camera after taking a photo is nifty. It's almost magical, especially given how simple the underlying idea of warping is!