CS194-26 FA18 // David Xiong (cs194-26-abr)

In this project, we use image warping via homographies to generate a panorama from a sequence of images.

This writeup covers both halves of the project: the first half deals with homographies, image warping and rectification, and image mosaicing; the second covers Harris corner detection, Adaptive Non-Maximal Suppression (ANMS), feature descriptor extraction and matching, and RANSAC for estimating a homography.

To warp images, we must first recover the parameters of the transformation between each pair of images. By restricting camera movement to rotation about the center of projection, we can capture different perspectives of a single subject that are related by a homography. We can then define correspondence points between the images and warp between them accordingly.

The equation \(p' = Hp\) describes the mapping between corresponding points in two images that share the same center of projection. Here \(p\) and \(p'\) are in homogeneous coordinates, so the equality holds up to scale. The homography matrix \(H\) warps points \(p\) to the transformed points \(p'\).

Let's define \(H\) as a 3x3 matrix with 8 unknown values. Because \(H\) is only defined up to scale, we can fix the bottom-right entry to 1. Each correspondence contributes two linear equations, so four correspondences give exactly the eight equations we need. With more than four, the system is overdetermined, and we solve for \(h\) with least squares. The system is set up as shown below.
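To see where each pair of rows comes from, label the entries of \(H\) row by row as \(h_1, \dots, h_8\), with the bottom-right entry fixed to 1. This labeling is ours, chosen to match the vector \(h\) in the system below. Dividing by the third homogeneous coordinate gives the mapped point:

\( H = \begin{bmatrix} h_1 & h_2 & h_3 \\ h_4 & h_5 & h_6 \\ h_7 & h_8 & 1 \end{bmatrix}, \qquad x' = \frac{h_1 x + h_2 y + h_3}{h_7 x + h_8 y + 1}, \qquad y' = \frac{h_4 x + h_5 y + h_6}{h_7 x + h_8 y + 1} \)

Multiplying both sides by the denominator and rearranging makes each equation linear in the unknowns:

\( h_1 x + h_2 y + h_3 - x'x\,h_7 - x'y\,h_8 = x', \qquad h_4 x + h_5 y + h_6 - y'x\,h_7 - y'y\,h_8 = y' \)

Writing these two equations for each correspondence \((x_i, y_i) \to (x_i', y_i')\) produces the pairs of rows in the matrix below.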

\( \begin{bmatrix} x_1 & y_1 & 1 & 0 & 0 & 0 & -x_1'x_1 & -x_1'y_1 \\ 0 & 0 & 0 & x_1 & y_1 & 1 & -y_1'x_1 & -y_1'y_1 \\ x_2 & y_2 & 1 & 0 & 0 & 0 & -x_2'x_2 & -x_2'y_2 \\ 0 & 0 & 0 & x_2 & y_2 & 1 & -y_2'x_2 & -y_2'y_2 \\ x_3 & y_3 & 1 & 0 & 0 & 0 & -x_3'x_3 & -x_3'y_3 \\ 0 & 0 & 0 & x_3 & y_3 & 1 & -y_3'x_3 & -y_3'y_3 \\ x_4 & y_4 & 1 & 0 & 0 & 0 & -x_4'x_4 & -x_4'y_4 \\ 0 & 0 & 0 & x_4 & y_4 & 1 & -y_4'x_4 & -y_4'y_4 \end{bmatrix} \begin{bmatrix} h_1 \\ h_2 \\ h_3 \\ h_4 \\ h_5 \\ h_6 \\ h_7 \\ h_8 \end{bmatrix} = \begin{bmatrix} x'_1 \\ y'_1 \\ x'_2 \\ y'_2 \\ x'_3 \\ y'_3 \\ x'_4 \\ y'_4 \\ \end{bmatrix} \)
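The following is a minimal NumPy sketch of this least-squares solve. The function names `compute_homography` and `apply_homography` are hypothetical and do not come from the project code. Points are assumed to be `(x, y)` rows of an `(N, 2)` array.

```python
import numpy as np

def compute_homography(src, dst):
    """Estimate H such that dst ~ H @ src from N >= 4 point pairs.

    src, dst: (N, 2) arrays of (x, y) coordinates (hypothetical layout).
    Builds the 2N x 8 system and solves it with least squares.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = src.shape[0]
    A = np.zeros((2 * n, 8))
    b = np.zeros(2 * n)
    for i, ((x, y), (xp, yp)) in enumerate(zip(src, dst)):
        # Two rows per correspondence, exactly as in the matrix above.
        A[2 * i] = [x, y, 1, 0, 0, 0, -xp * x, -xp * y]
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -yp * x, -yp * y]
        b[2 * i] = xp
        b[2 * i + 1] = yp
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    # Append the fixed bottom-right entry and reshape into 3x3.
    return np.append(h, 1.0).reshape(3, 3)

def apply_homography(H, pts):
    """Map (N, 2) points through H, dividing out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((pts.shape[0], 1))])  # (N, 3)
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]
```

Because `np.linalg.lstsq` handles both cases, the same function works for exactly four correspondences and for an overdetermined set of hand-clicked points.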

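For the image rectifications, we pick the four corners of a planar object that appears slanted in the photo, such as a painting or a poster. We then compute the homography that sends those corners to the corners of an axis-aligned rectangle. Warping the image with this homography maps the object onto a new plane, so we can view it straight on.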
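Below is a sketch of the warp itself. It assumes \(H\) maps source-image coordinates to output coordinates. For rectification, \(H\) would come from the least-squares system above, with the four clicked corners as \(p\) and the rectangle's corners as \(p'\).

The sketch uses inverse mapping so that every output pixel receives a value. For brevity it uses nearest-neighbor sampling; bilinear interpolation would give smoother results. `inverse_warp` is a hypothetical name.

```python
import numpy as np

def inverse_warp(img, H, out_shape):
    """Warp img with homography H (source -> output coordinates).

    Every output pixel (x, y) is sent through H^-1 and filled from the
    nearest source pixel, so the output has no holes. Pixels whose
    preimage falls outside the source stay 0 and are False in the mask.
    img: (rows, cols) or (rows, cols, channels) array.
    out_shape: (out_rows, out_cols).
    """
    out_rows, out_cols = out_shape
    H_inv = np.linalg.inv(H)
    ys, xs = np.mgrid[0:out_rows, 0:out_cols]
    coords = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])  # (3, P)
    src = H_inv @ coords
    # Divide out the homogeneous coordinate, then round to the nearest pixel.
    src_x = np.round(src[0] / src[2]).astype(int)
    src_y = np.round(src[1] / src[2]).astype(int)
    valid = ((src_x >= 0) & (src_x < img.shape[1])
             & (src_y >= 0) & (src_y < img.shape[0]))
    out = np.zeros((out_rows * out_cols,) + img.shape[2:], dtype=img.dtype)
    out[valid] = img[src_y[valid], src_x[valid]]
    mask = valid.reshape(out_rows, out_cols)
    return out.reshape((out_rows, out_cols) + img.shape[2:]), mask
```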

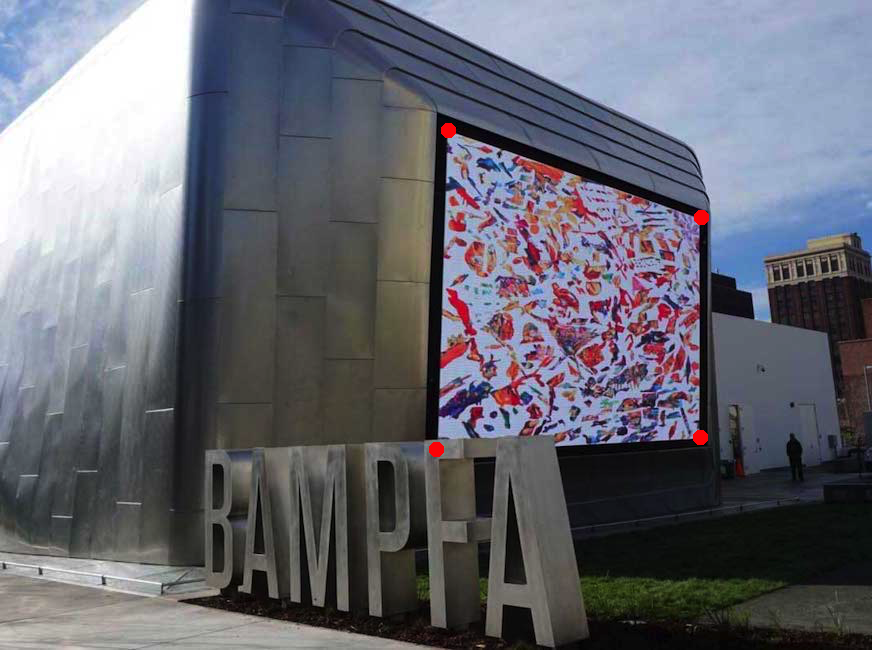

The Enchanted Domain, IX, 1953 (Magritte) exhibit at SF MOMA

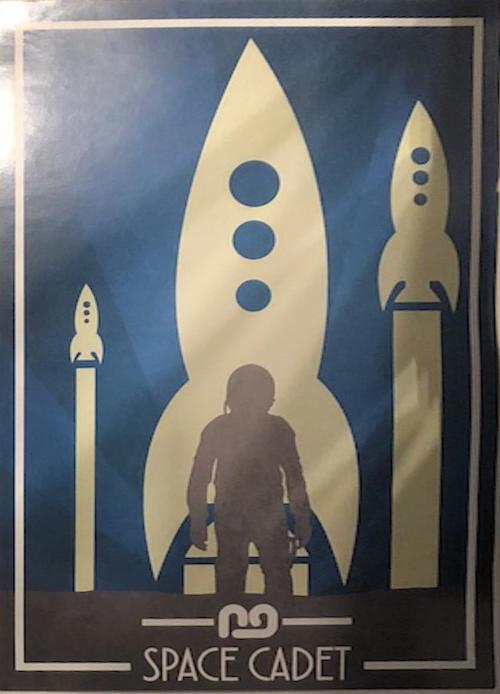

Space Cadet (Nigel Good) poster

Once rectification works, image mosaicing is a small step further. Rather than warping an image to the corners of a rectangle, we choose the middle image of the sequence as the reference. We then warp each other image onto it, using the correspondences that image shares with the reference. Finally, we blend the overlapping region; in this case, I simply averaged the pixel values where the warped images intersect.
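Here is a sketch of the simple averaging described above. It assumes each image has already been inverse-warped onto a common canvas and comes with a boolean mask of the pixels it covers. `average_blend` is a hypothetical name, and images are assumed to have a channel axis.

```python
import numpy as np

def average_blend(images, masks):
    """Blend images that were already warped onto a shared canvas.

    images: list of (rows, cols, channels) arrays, all the same shape.
    masks: list of (rows, cols) boolean arrays marking valid pixels.
    Overlapping pixels are averaged; pixels covered by no image stay 0.
    """
    total = np.zeros_like(images[0], dtype=float)
    count = np.zeros(masks[0].shape, dtype=float)
    for img, mask in zip(images, masks):
        total[mask] += img[mask]
        count += mask  # how many images cover each pixel
    # Avoid dividing by zero where no image contributes.
    return total / np.maximum(count, 1)[..., None]
```

A plain average can leave visible seams when the exposures of neighboring photos differ. Feathered (distance-weighted) or multiresolution blending usually hides them better.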

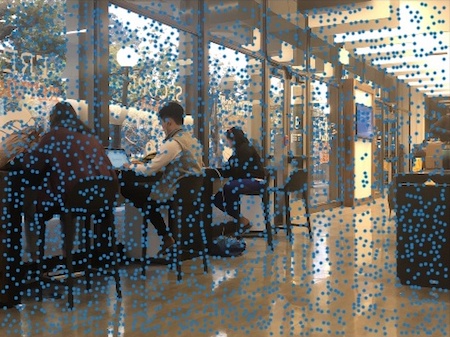

Now the real fun begins! To detect features automatically, we use the Harris corner detector. Here are the results of running it on the two Amazon pictures; each image produced roughly 2500 points of interest.
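The following is a minimal NumPy-only sketch of Harris corner detection, not the course's starter code. Several choices are assumptions: smoothing with `sigma=1`, `k=0.05`, keeping 3×3 local maxima above 1% of the strongest response, and a 20-pixel border. The 20-pixel border guarantees that a 40×40 patch can later be cut around every corner.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge padding (kernel truncated at 3 sigma)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    k /= k.sum()
    padded = np.pad(img, radius, mode="edge")
    # Convolve every row, then every column; "valid" drops the padding.
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def harris_response(img, sigma=1.0, k=0.05):
    """Harris measure det(M) - k * trace(M)^2 of the smoothed structure tensor M."""
    Iy, Ix = np.gradient(img.astype(float))  # derivatives along rows, then columns
    Sxx = gaussian_blur(Ix * Ix, sigma)
    Syy = gaussian_blur(Iy * Iy, sigma)
    Sxy = gaussian_blur(Ix * Iy, sigma)
    return Sxx * Syy - Sxy ** 2 - k * (Sxx + Syy) ** 2

def harris_corners(img, sigma=1.0, k=0.05, threshold_rel=0.01, border=20):
    """Return (N, 2) corner coordinates as (x, y) and their Harris strengths.

    border must be positive; corners closer than border to any edge are dropped.
    """
    R = harris_response(img, sigma, k)
    padded = np.pad(R, 1, mode="constant", constant_values=-np.inf)
    rows, cols = R.shape
    # Stack the 8 shifted copies of R so each pixel sees its neighbors.
    neighbors = np.stack([padded[1 + dy:1 + dy + rows, 1 + dx:1 + dx + cols]
                          for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                          if (dy, dx) != (0, 0)])
    peaks = (R >= neighbors.max(axis=0)) & (R > threshold_rel * R.max())
    peaks[:border, :] = peaks[-border:, :] = False
    peaks[:, :border] = peaks[:, -border:] = False
    ys, xs = np.nonzero(peaks)
    return np.stack([xs, ys], axis=1), R[ys, xs]
```

On a synthetic white square, the detections land next to the square's four corners. Straight edges give a negative response and are rejected.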

We still need to select better points of interest from the ones Harris gives us. Roughly 2500 points per image is far more than we can match reliably, and a homography ultimately needs only four correct correspondences. Harris also tends to detect many irrelevant points that don't correspond to recognizable features in the image. To thin them out, we use Adaptive Non-Maximal Suppression, or ANMS. For each point, we find the largest radius within which it is the strongest corner. This uses a weighted comparison, so a neighbor suppresses a point only when its corner strength is clearly larger. We then keep the 500 points with the largest radii, which retains strong corners while spreading them evenly across the image.
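Below is a sketch of ANMS under stated assumptions. The weighting constant `c_robust = 0.9` is the value used in the MOPS paper; this writeup does not say which weighting it used. `anms` is a hypothetical name.

The pairwise-distance matrix costs O(N²) memory. That is fine for a few thousand corners, but a k-d tree or chunking would be needed for much larger sets.

```python
import numpy as np

def anms(coords, strengths, num_points=500, c_robust=0.9):
    """Adaptive Non-Maximal Suppression.

    coords: (N, 2) corner positions; strengths: (N,) positive Harris responses.
    A point's suppression radius is the distance to the nearest point that
    is clearly stronger (strengths[i] < c_robust * strengths[j]).
    Returns the indices of the num_points largest radii, plus all radii.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    dx = coords[:, None, 0] - coords[None, :, 0]
    dy = coords[:, None, 1] - coords[None, :, 1]
    dist2 = dx ** 2 + dy ** 2  # pairwise squared distances, (N, N)
    # stronger[i, j] is True when point j suppresses point i.
    stronger = strengths[:, None] < c_robust * strengths[None, :]
    dist2 = np.where(stronger, dist2, np.inf)
    radii = np.sqrt(dist2.min(axis=1))  # inf for points nobody suppresses
    order = np.argsort(-radii, kind="stable")  # largest radius first
    return order[:num_points], radii
```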

Now that we have a collection of points that correspond to interesting features, we must find which points in one image correspond to which points in the other. We can use descriptors based on Multi-scale Oriented Patches (MOPS) to accomplish this!

First, we need to describe each point in a way that is robust to lighting and small positional differences. For each point, we take a 40×40 patch around it and downsample it to an 8×8 patch, as in the MOPS paper. Working at this coarser scale makes the descriptor less sensitive to small misalignments. We then normalize the patch by subtracting its mean and dividing by its standard deviation, which cancels differences in brightness and contrast. Finally, we flatten it into a 64-element feature vector, which becomes the descriptor for our point.
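The following is a sketch of this descriptor extraction. It averages non-overlapping 5×5 blocks to go from 40×40 to 8×8, which is our stand-in for the blur-and-subsample step of MOPS. Points are assumed to be integer `(x, y)` positions at least 20 pixels from every image edge. `extract_descriptors` and the `1e-8` guard against flat patches are assumptions.

```python
import numpy as np

def extract_descriptors(img, coords, patch=40, out=8):
    """Build a bias/gain-normalized (out*out)-D descriptor per (x, y) point.

    img: 2-D grayscale array; each point needs patch // 2 pixels of margin.
    The patch x patch window is downsampled to out x out by block
    averaging, normalized to zero mean and unit variance, then flattened.
    """
    half = patch // 2
    step = patch // out  # 5 for a 40x40 window and 8x8 output
    descs = []
    for x, y in np.asarray(coords, dtype=int):
        window = img[y - half:y + half, x - half:x + half].astype(float)
        # (40, 40) -> (8, 5, 8, 5), then average each 5x5 block.
        small = window.reshape(out, step, out, step).mean(axis=(1, 3))
        small = (small - small.mean()) / (small.std() + 1e-8)
        descs.append(small.ravel())
    return np.array(descs)
```

Because of the normalization, brightening the image or scaling its contrast leaves the descriptors essentially unchanged.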

Now it is just a matter of matching these features using the ratio test described by David Lowe. For each feature vector in the first image, we compute its squared distance to every feature vector in the second image. We then take the ratio between the distances to its first and second nearest neighbors. If this ratio is sufficiently close to 0, the nearest neighbor is a much better match than any alternative, so the point likely also appears in the second image and the two can be paired.
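Here is a sketch of the ratio test on squared distances. The threshold is an assumption, since the writeup does not give one. Lowe's paper rejects matches whose ratio of plain distances exceeds 0.8, which corresponds to 0.64 on squared distances; the default of 0.5 below is stricter. `match_features` is a hypothetical name, and `desc2` must contain at least two descriptors.

```python
import numpy as np

def match_features(desc1, desc2, ratio_thresh=0.5):
    """Match descriptors with a nearest-neighbor ratio test.

    For each row of desc1, find its nearest and second-nearest rows in
    desc2 by squared Euclidean distance, and accept the match when
    d_first / d_second < ratio_thresh. Returns (M, 2) index pairs (i, j).
    """
    # Squared distances between every pair of descriptors, shape (N1, N2).
    d = (np.sum(desc1 ** 2, axis=1)[:, None]
         + np.sum(desc2 ** 2, axis=1)[None, :]
         - 2 * desc1 @ desc2.T)
    nn = np.argsort(d, axis=1)[:, :2]  # two nearest neighbors per row
    rows = np.arange(d.shape[0])
    first, second = d[rows, nn[:, 0]], d[rows, nn[:, 1]]
    ratio = first / np.maximum(second, 1e-12)  # guard against division by 0
    keep = ratio < ratio_thresh
    return np.stack([rows[keep], nn[keep, 0]], axis=1)
```

A descriptor that sits equally close to two candidates (ratio near 1) is rejected even if both candidates are close. This ambiguity filtering is what removes many false matches.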

Here are the results of running this process on the descriptors fetched above. Notice that the selected points are now concentrated in the overlapping area, apart from a few outliers.

Now that we have a list of corresponding points between the two images, we can use random sample consensus (RANSAC) to compute the homography supported by the largest number of inliers. In each iteration, we randomly choose four pairs of points and compute the homography they define. We then project every point from the second image into the first and measure its distance to the paired point in the first image. If that distance is sufficiently small, the pair counts as an inlier. We repeat this process many times and keep the largest set of inliers found.
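Below is a sketch of 4-point RANSAC. To run on its own, it repeats the least-squares homography fit from the first half. Several parameters are assumptions: 1000 iterations, a 2-pixel inlier threshold, and a final least-squares refit on all inliers of the best model. The refit is common practice, but the writeup does not say whether it was used. All function names are hypothetical.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares H (bottom-right fixed to 1) mapping src -> dst, N >= 4."""
    A, b = [], []
    for (x, y), (xp, yp) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -xp * x, -xp * y])
        A.append([0, 0, 0, x, y, 1, -yp * x, -yp * y])
        b.extend([xp, yp])
    h, *_ = np.linalg.lstsq(np.array(A, float), np.array(b, float), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)

def project(H, pts):
    """Apply H to (N, 2) points and divide out the homogeneous coordinate."""
    p = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return p[:, :2] / p[:, 2:3]

def ransac_homography(pts1, pts2, num_iters=1000, eps=2.0, seed=None):
    """Find H with pts1 ~ H(pts2) that is supported by the most inliers.

    pts1, pts2: (N, 2) matched points; row i of each forms one candidate pair.
    eps: inlier distance threshold in pixels.
    Returns H refit on the best inlier set, plus the boolean inlier mask.
    """
    pts1 = np.asarray(pts1, float)
    pts2 = np.asarray(pts2, float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(pts1), dtype=bool)
    for _ in range(num_iters):
        sample = rng.choice(len(pts1), size=4, replace=False)
        H = fit_homography(pts2[sample], pts1[sample])
        # Project the second image's points into the first and measure error.
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(H, pts2) - pts1, axis=1)
        inliers = err < eps  # NaN errors from degenerate samples count as False
        if inliers.sum() > best.sum():
            best = inliers
    # Refit on every inlier of the best model.
    return fit_homography(pts2[best], pts1[best]), best
```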

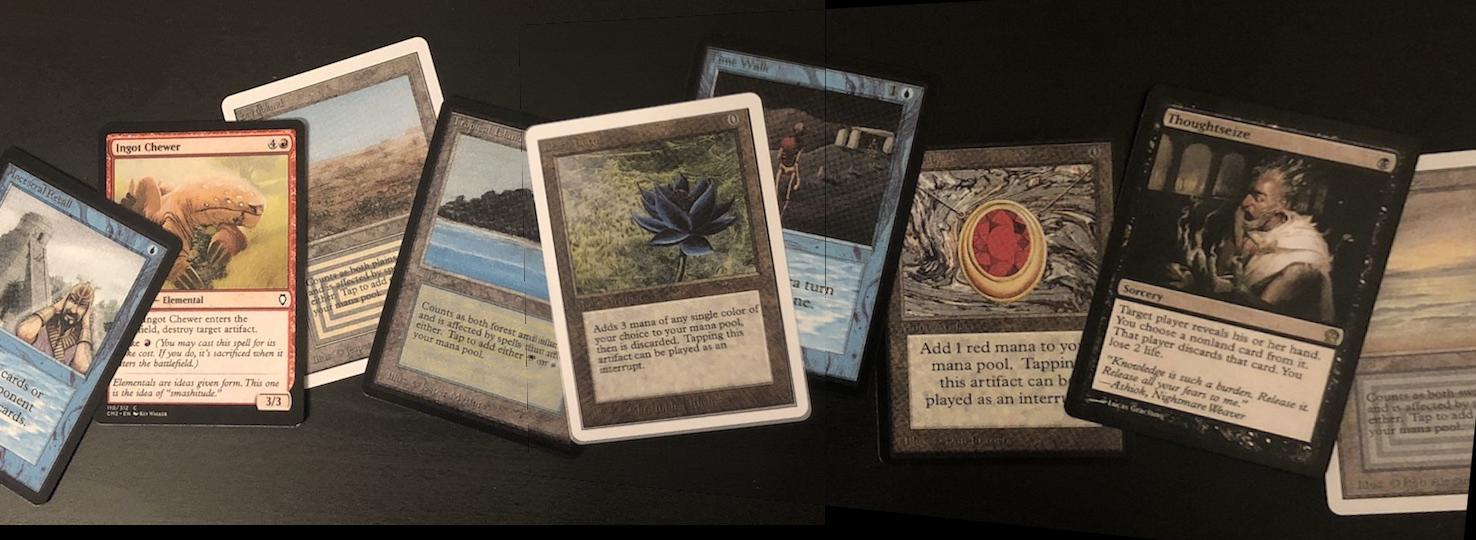

After this, all that remains is warping and blending the images, as in the manual mosaics above. Here are my final results after running this whole process on the three image sets I used in 1.3, along with the points that RANSAC ultimately selected.

This project was a lot of work, but quite fun! It was cool to see how simple the concept of a homography matrix is and how it can be used to map points on an image for warping. I also enjoyed learning how to use Harris, ANMS, and feature matching to generate a matching set of feature points between two images. Although Harris seems to do most of the work, ANMS and feature matching do a great job of paring down the points. Between the two methods of selecting points, the automatic panoramas tended to be much sharper than the manual ones. This is likely because the automatic process selected more precise points, whereas manual entry is subject to human error.

Writeup by David Xiong, for CS194-26 FA18 Image Manipulation and Computational Photography