Image Warping & Mosaicing Pt. 2 - Auto Image Panorama

CS 194-26 Computational Photography Fall 2018

Guowei Yang cs194-26-acg

Introduction

Part 1: Using Harris Interest Point Detector

In the second part of the project, having explored how to stitch images together manually, we now stitch them automatically. The main idea is to detect features in each image that correspond to one another.

Part 4: Feature Matching

Summary:

From this project, I learned how to use the Harris Corner Detector to find corresponding points in images instead of defining them manually. However, different images need different sets of parameters when stitching, and tuning those parameters is rather painful.

Part 2: Adaptive Non-Maximal Suppression (ANMS)

Part 3: Feature Descriptor Extraction

Part 5: RANSAC Image Warping & Stitching

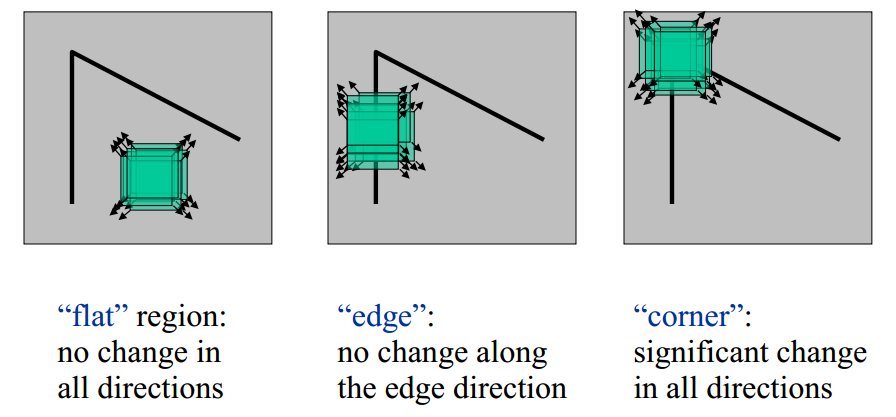

First of all, a good feature point usually lies on a corner of an object in the image. Therefore, we want an algorithm that can detect corners in the image.

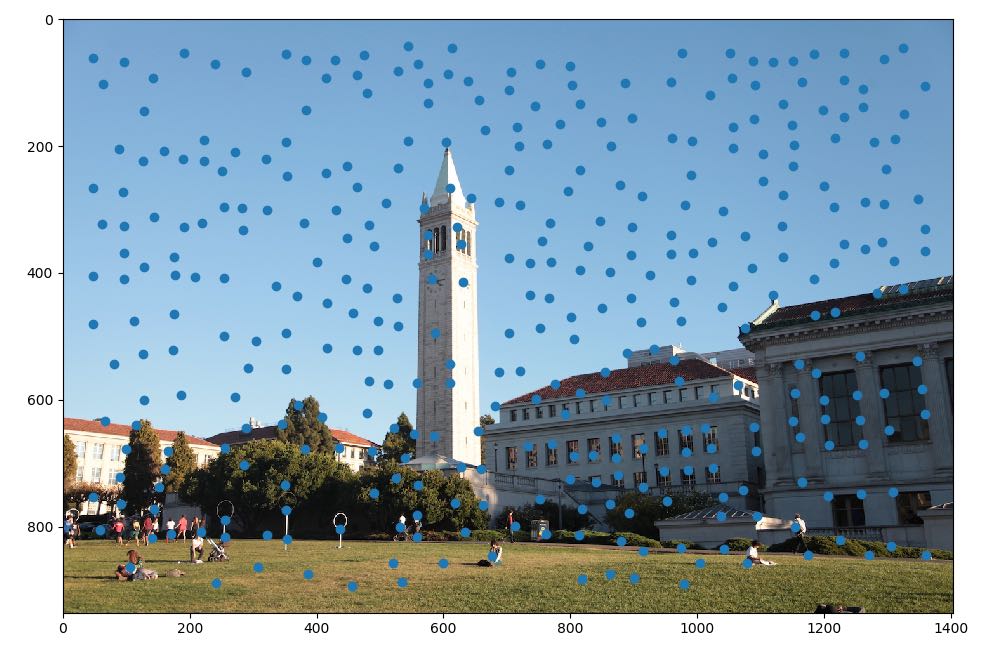

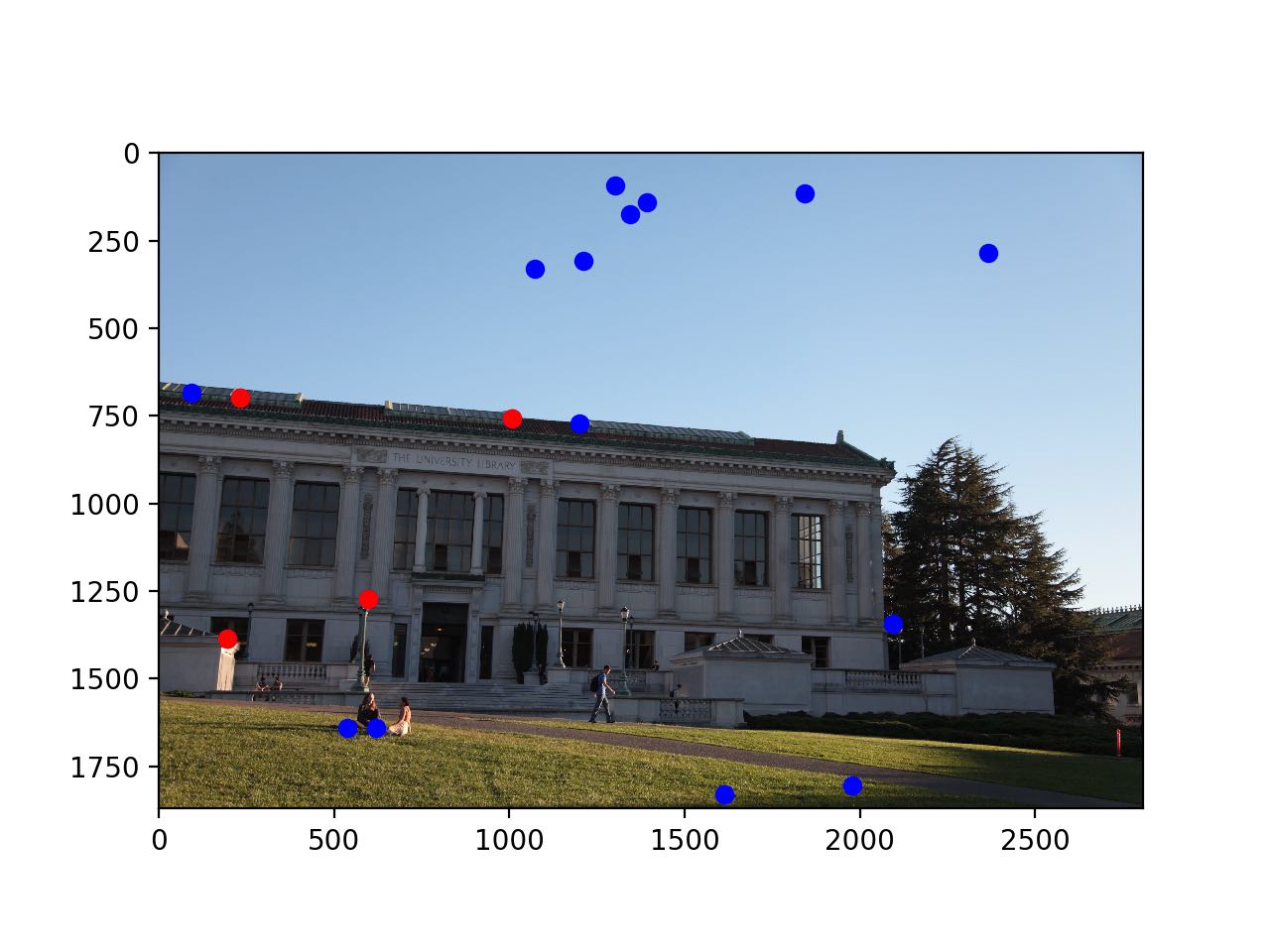

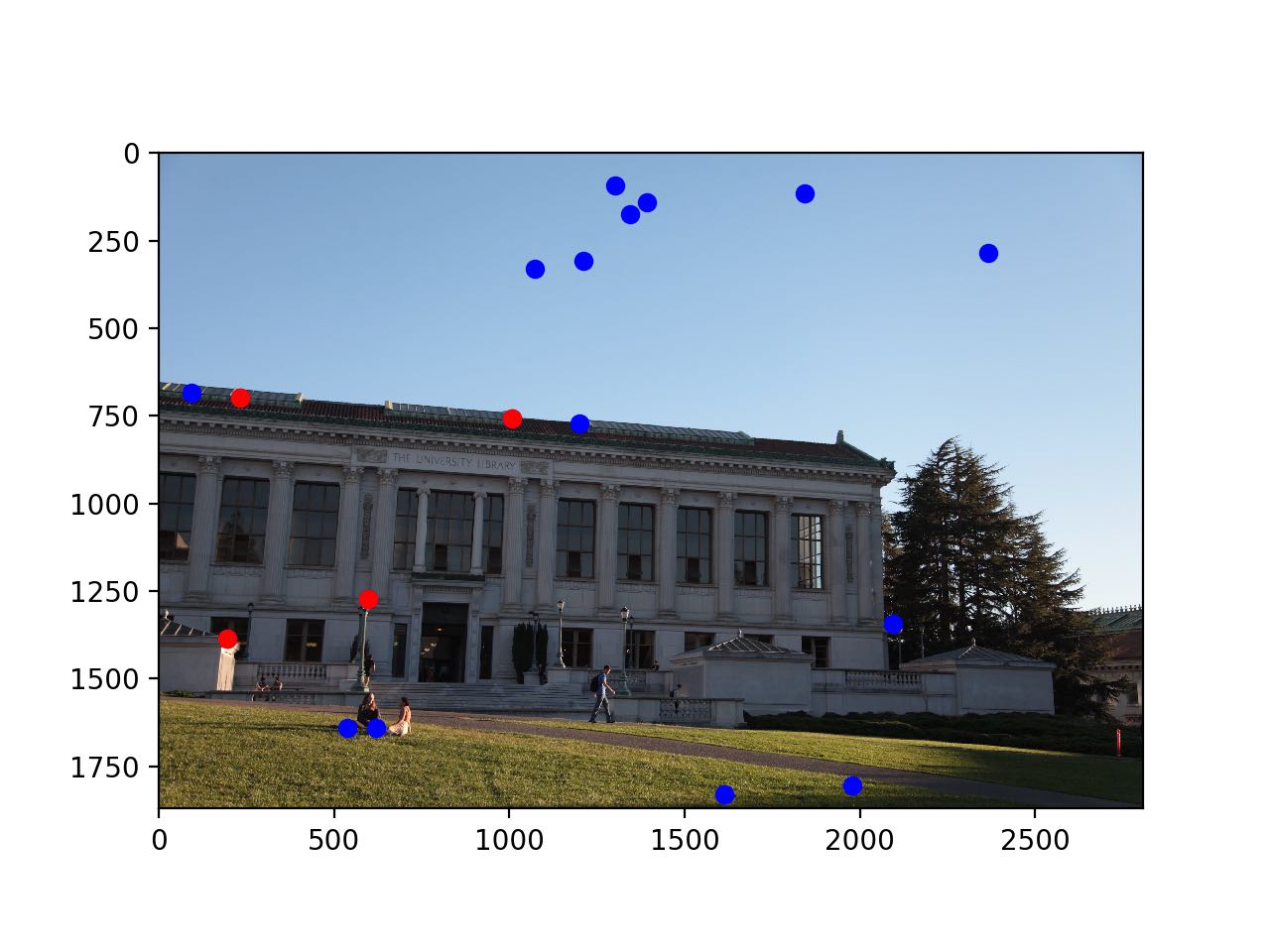

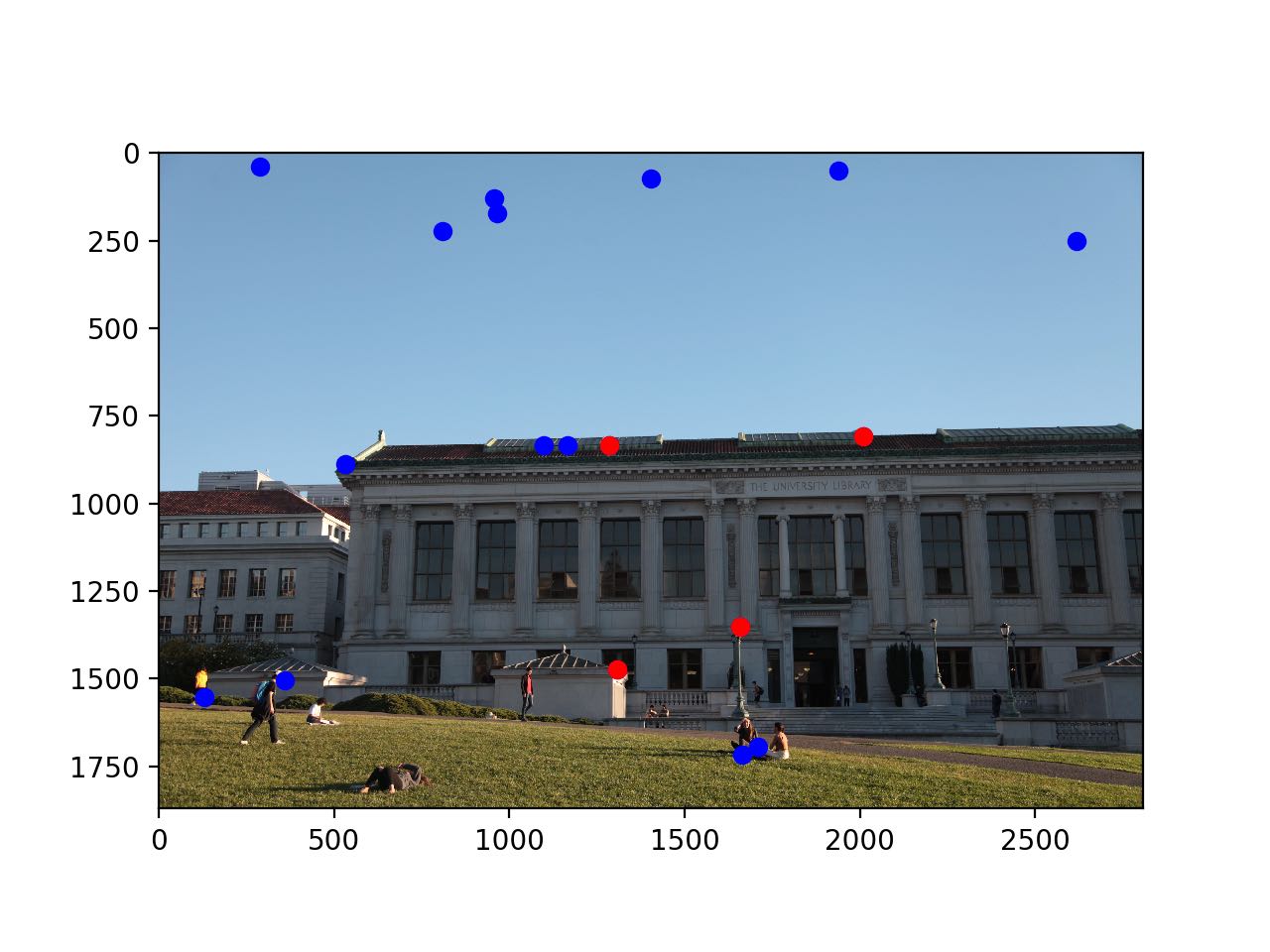

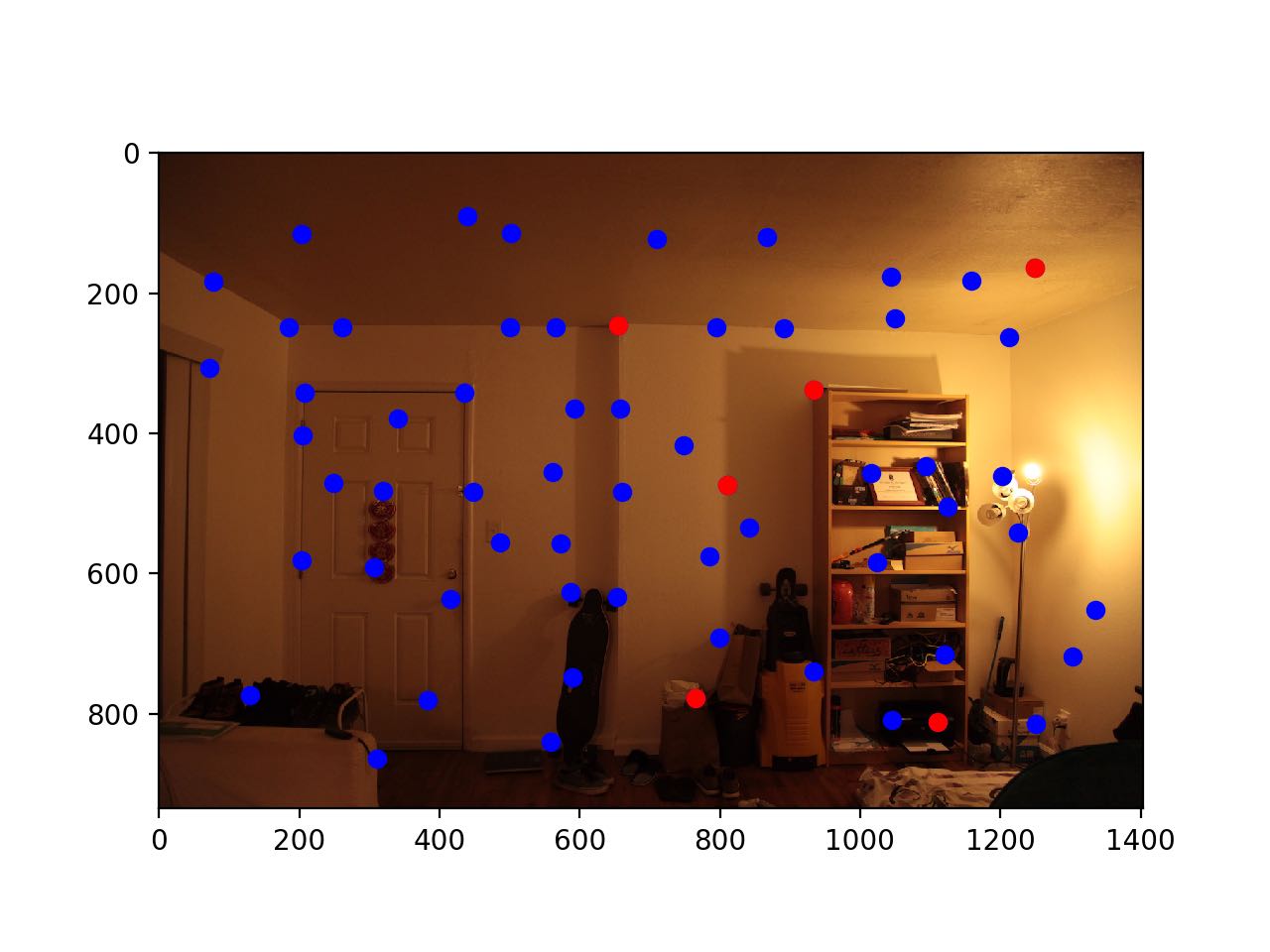

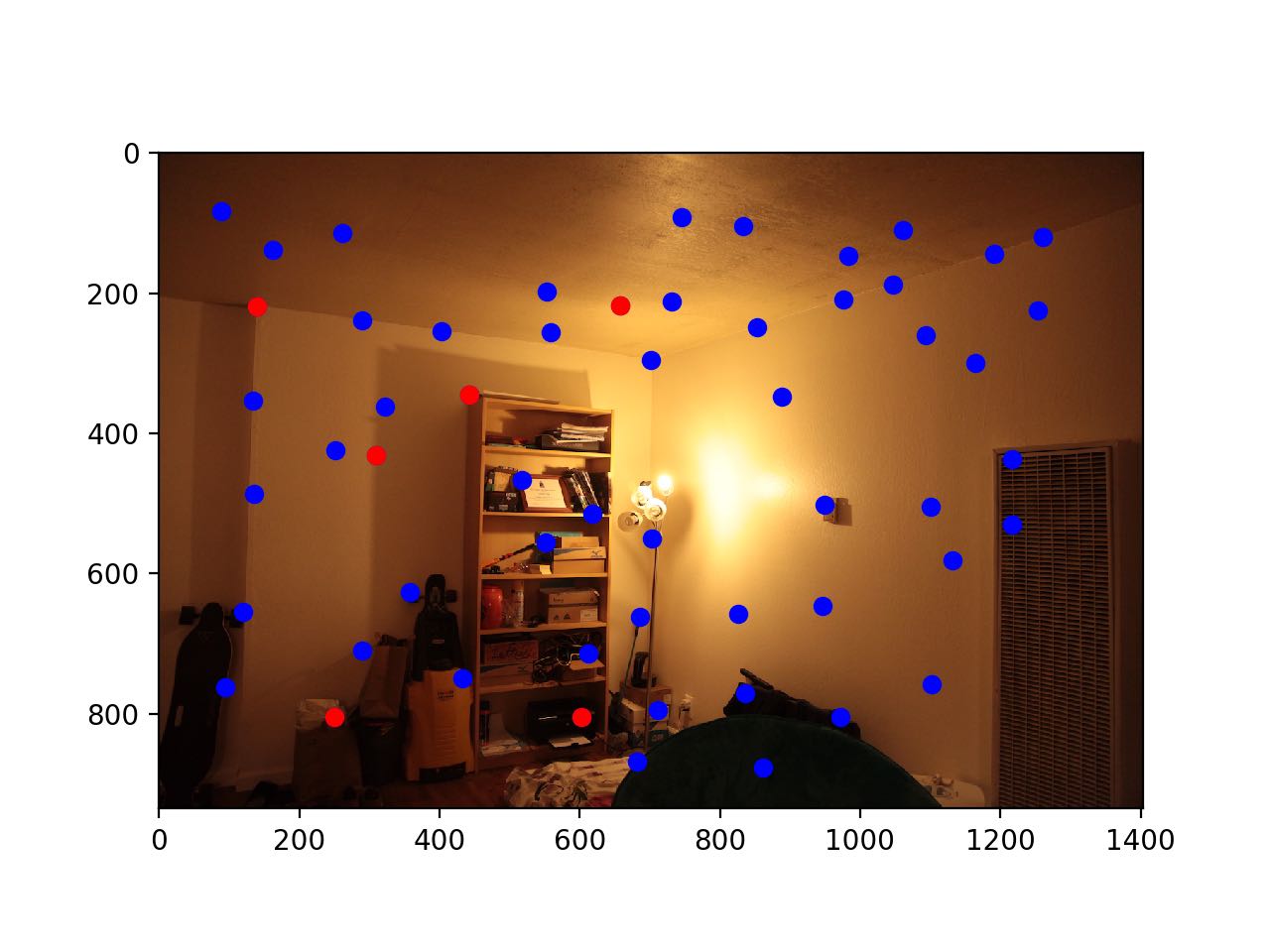

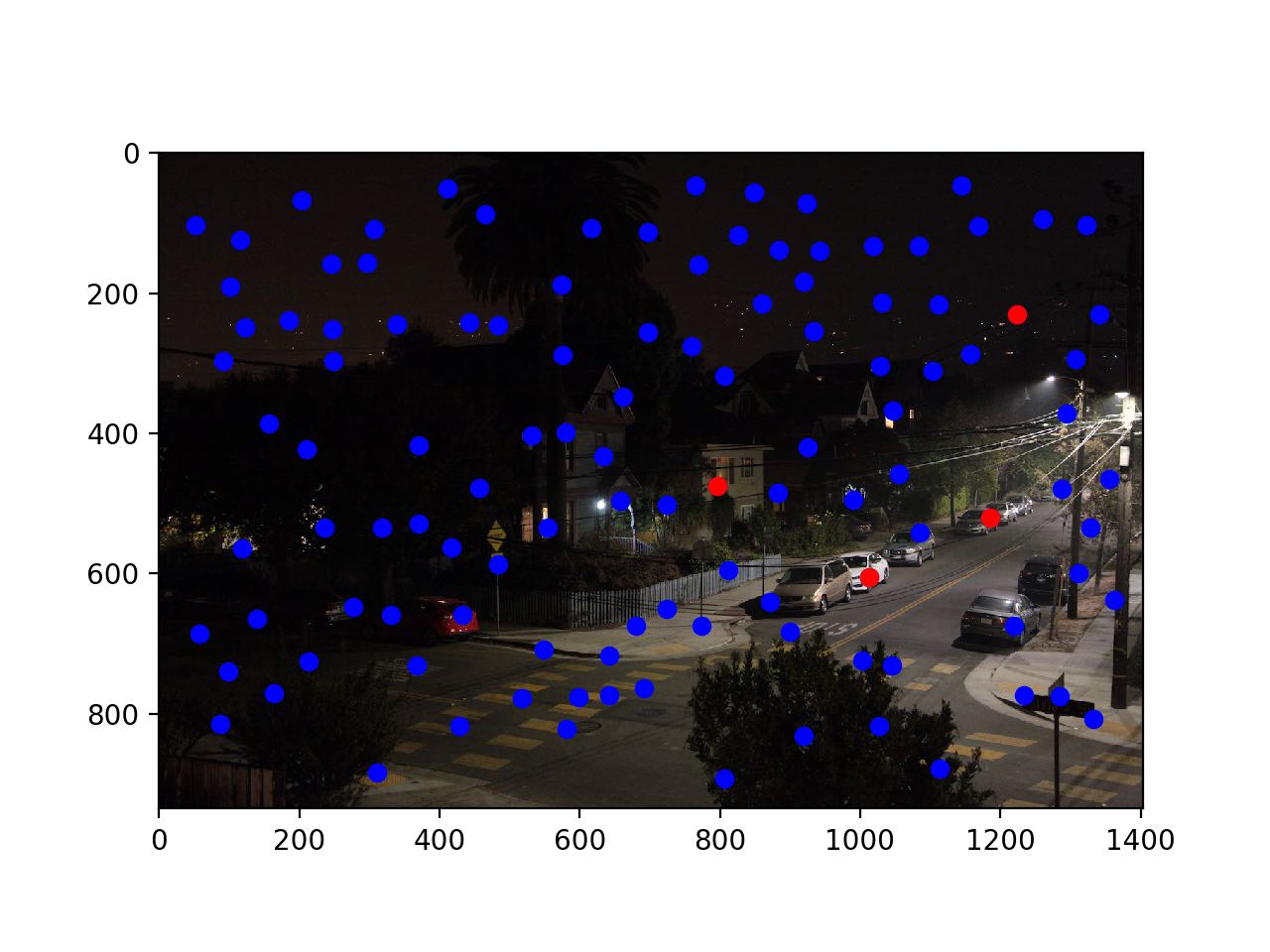

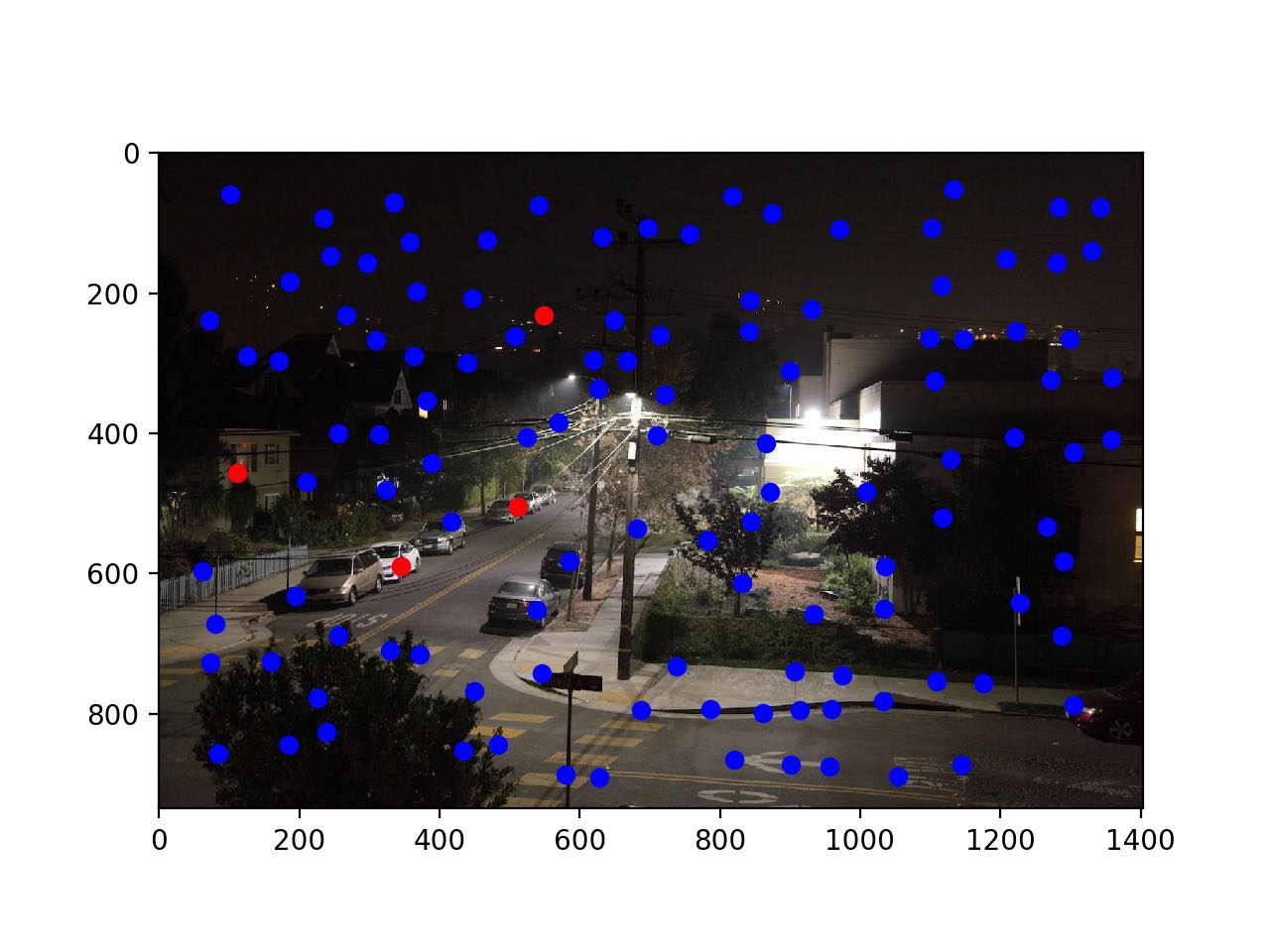

Conceptually, such an algorithm slides a small search window across the image and reports a corner wherever shifting the window in any direction produces a significant change in intensity. Applying a Harris Interest Point Detector, I obtained the following corner points:

We can tune the detector's parameters to change how many interest points are detected.
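To make the corner criterion concrete, here is a minimal sketch of a Harris response computed with NumPy. It is an illustration, not the code used for the results above: the function names, the box-shaped window (a Gaussian window is more common), the constant `k`, and the relative threshold `threshold_rel` are all assumptions chosen for the example.

```python
import numpy as np

def box_filter(img, radius):
    """Sum each pixel's (2*radius+1)^2 neighborhood, using edge padding."""
    size = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros_like(img, dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out

def harris_response(img, radius=1, k=0.05):
    """Harris response R = det(M) - k * trace(M)^2 at every pixel."""
    img = img.astype(float)
    iy, ix = np.gradient(img)            # derivatives along rows, columns
    sxx = box_filter(ix * ix, radius)    # entries of the structure tensor M
    syy = box_filter(iy * iy, radius)
    sxy = box_filter(ix * iy, radius)
    det = sxx * syy - sxy ** 2
    trace = sxx + syy
    return det - k * trace ** 2

def harris_corners(img, threshold_rel=0.1, radius=1, k=0.05):
    """Return (row, col) points whose response exceeds a fraction of the max."""
    r = harris_response(img, radius, k)
    ys, xs = np.nonzero(r > threshold_rel * r.max())
    return np.stack([ys, xs], axis=1), r
```

In this sketch, flat regions give a response of zero, straight edges give a negative response, and corners give a positive one. Lowering `threshold_rel` is one way to obtain more interest points.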

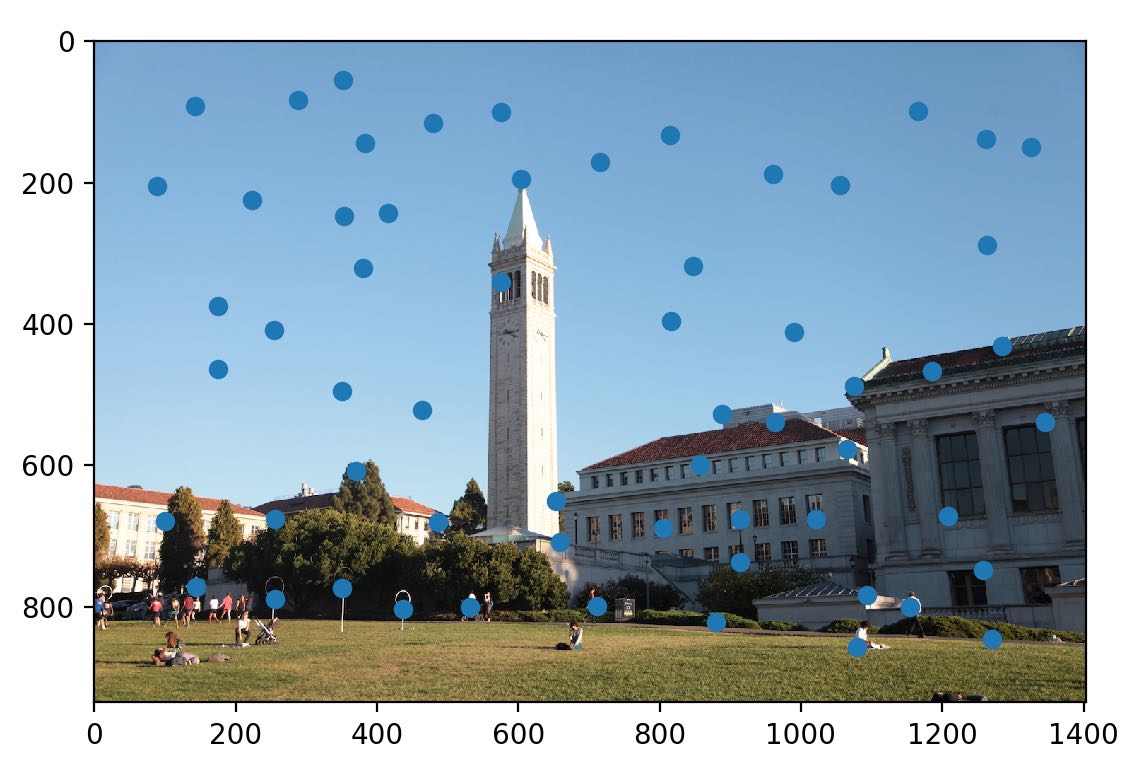

After obtaining this large number of points, we want to filter them so that only the strongest, spatially well-distributed corners are kept. We use a technique called Adaptive Non-Maximal Suppression.

After ANMS, most of the points have been filtered out, leaving only a small set of candidate corners.
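The suppression step could be sketched as follows. This is a hedged illustration of the usual ANMS formulation, not the exact code behind the figures: each point gets a suppression radius equal to its distance to the nearest sufficiently stronger point, and the points with the largest radii are kept. The function name `anms`, the parameter `num_keep`, and the robustness constant `c_robust=0.9` are assumptions.

```python
import numpy as np

def anms(coords, strengths, num_keep, c_robust=0.9):
    """Return indices of the num_keep points with the largest suppression radius."""
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Pairwise squared distances between all points.
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = (diff ** 2).sum(axis=2)
    # Point j suppresses point i when i is clearly weaker than j.
    suppressed_by = strengths[:, None] < c_robust * strengths[None, :]
    dist2 = np.where(suppressed_by, dist2, np.inf)
    # Squared suppression radius: distance to the nearest suppressing point.
    radii = dist2.min(axis=1)
    order = np.argsort(-radii, kind="stable")
    return order[:num_keep]
```

Compared with simply keeping the strongest responses, this favors points that are spread across the image, which tends to give better coverage of the overlap region.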

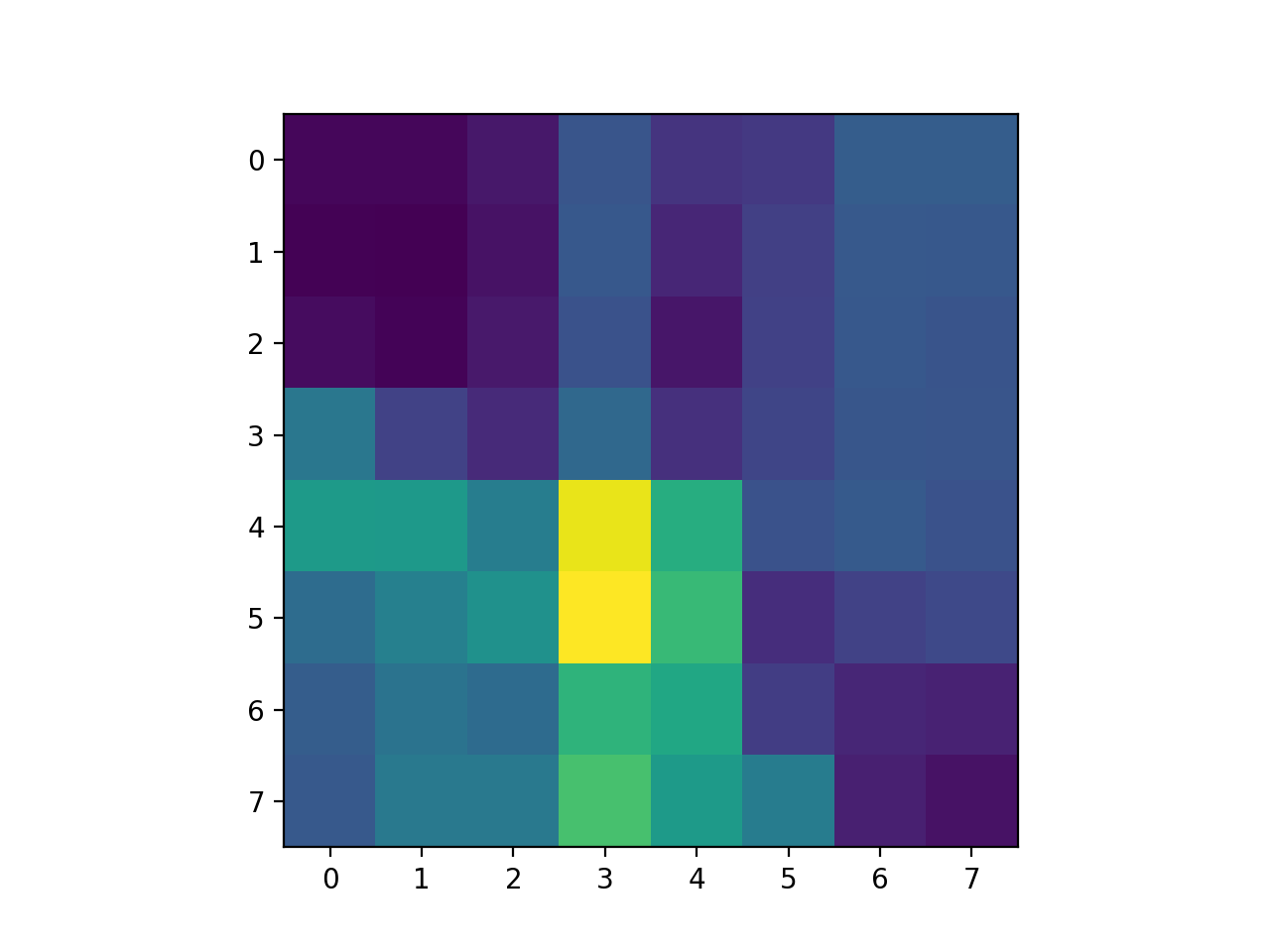

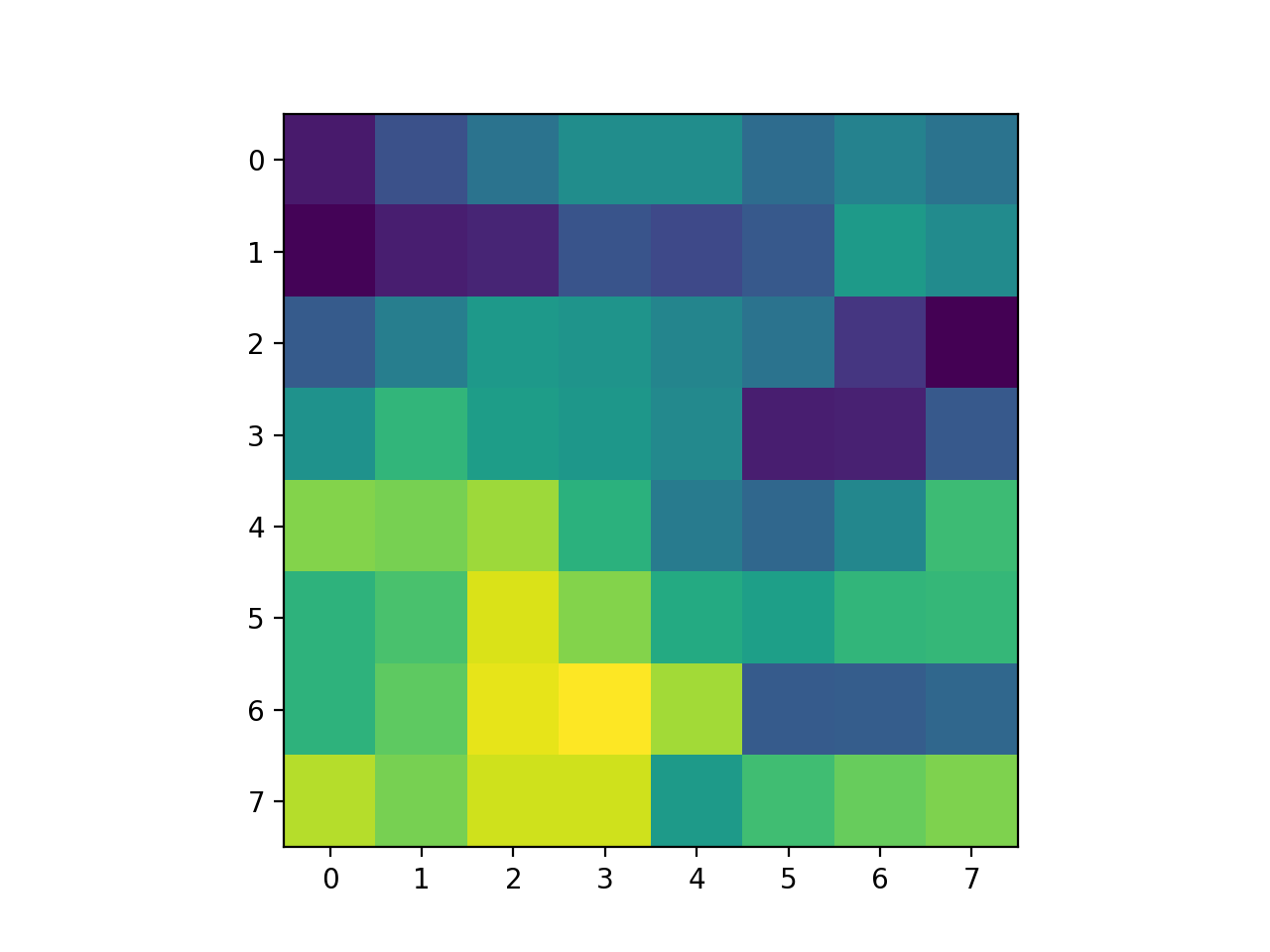

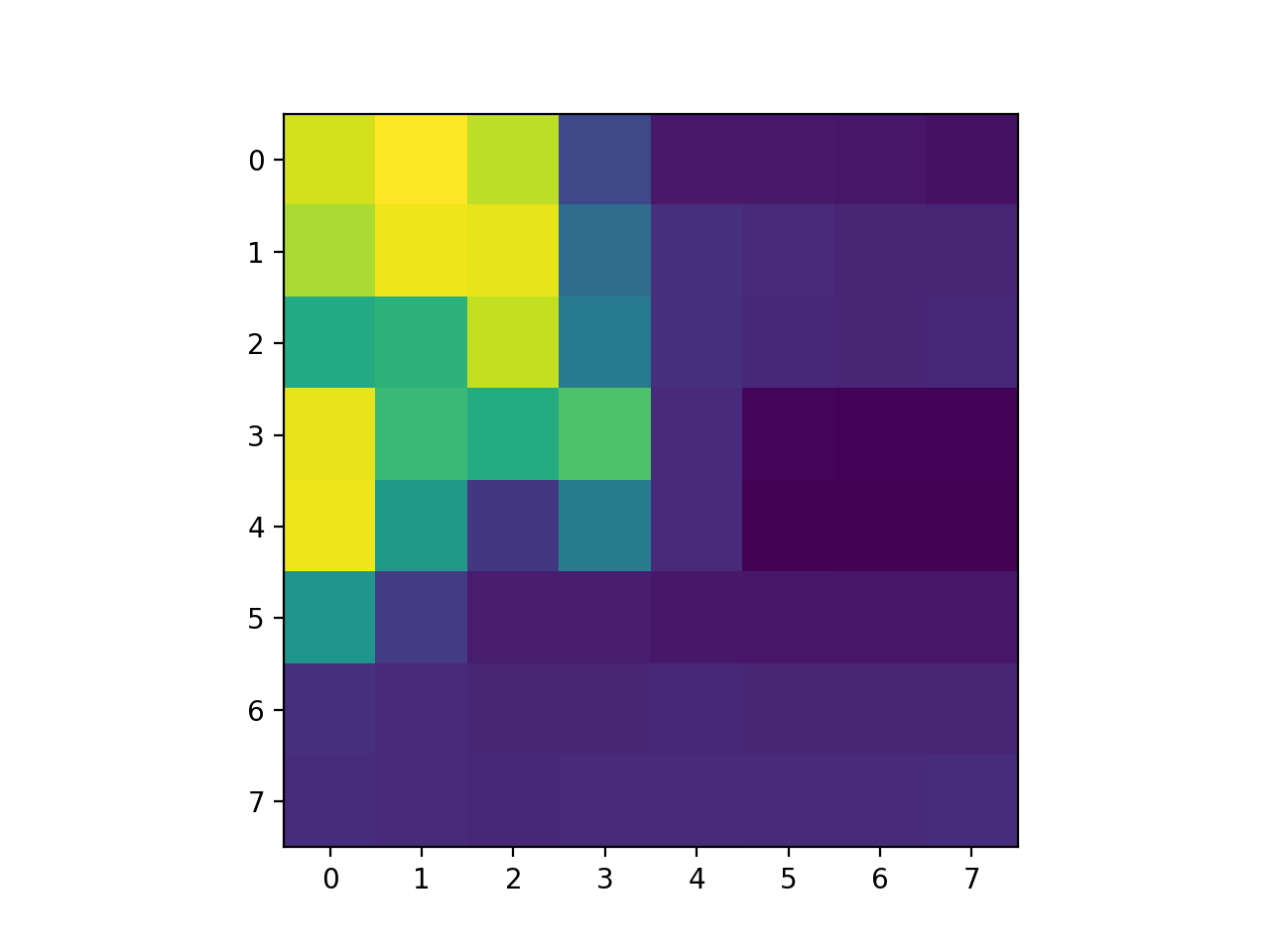

Once we have the corner positions, we sample a 40x40 patch centered on each corner and downsample it to 8x8. This gives an 8x8 feature descriptor that describes the neighborhood around the corner. We use these descriptors to match pairs of points between two images.
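A minimal sketch of this descriptor extraction, assuming a grayscale image stored as a NumPy array: the 40x40 window is reduced to 8x8 by averaging 5x5 blocks. Block averaging is an assumed downsampling method, the function name is hypothetical, and the optional `normalize` flag (zero mean, unit variance) is an addition that the text above does not describe.

```python
import numpy as np

def extract_descriptor(img, y, x, patch=40, out=8, normalize=False):
    """Return an out x out descriptor for the corner at (y, x), or None near borders."""
    half = patch // 2
    # Skip corners whose window would fall outside the image.
    if y - half < 0 or x - half < 0 or y + half > img.shape[0] or x + half > img.shape[1]:
        return None
    window = img[y - half:y + half, x - half:x + half].astype(float)
    step = patch // out  # 5 pixels per output cell for 40 -> 8
    # Average each step x step block to downsample the window.
    desc = window.reshape(out, step, out, step).mean(axis=(1, 3))
    if normalize:
        desc = (desc - desc.mean()) / (desc.std() + 1e-8)
    return desc
```

Sampling from a larger window and then downsampling makes the descriptor less sensitive to small errors in the corner position than an 8x8 patch taken directly.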

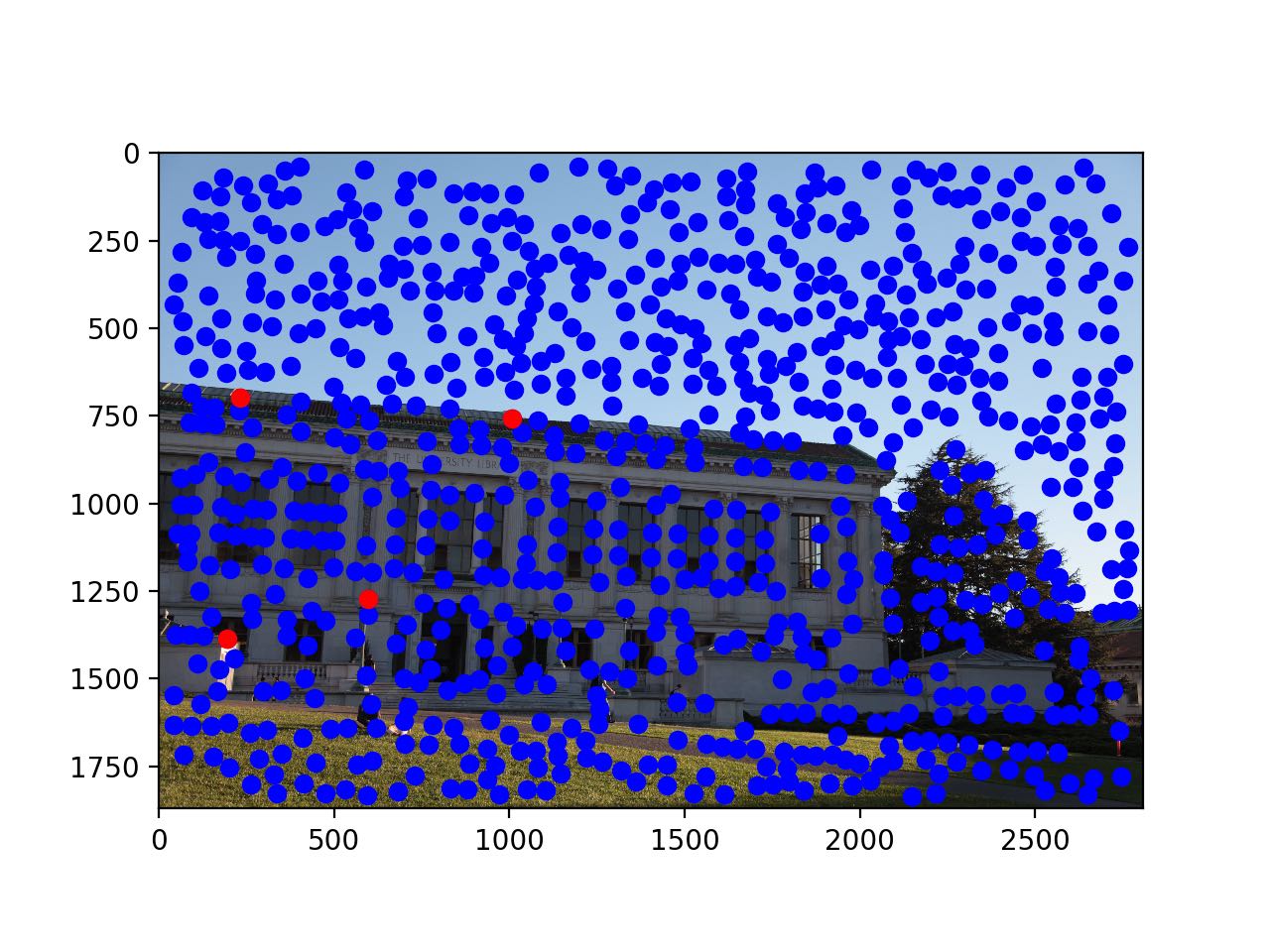

Once we have the feature descriptors, we run a nearest-neighbor search to find, for each point in the source image, the best matching point in the target image. We use the sum of squared differences (SSD) to compare a pair of feature descriptors from the two images, and we accept the pair as a match if the SSD between the two descriptors is less than a threshold.
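The matching rule described above can be sketched as a brute-force nearest-neighbor search. The function name `match_features` and the parameter `max_ssd` are hypothetical, and a suitable threshold depends on the images and on how the descriptors are scaled.

```python
import numpy as np

def match_features(desc_a, desc_b, max_ssd):
    """Match each descriptor in desc_a to its nearest neighbor in desc_b by SSD."""
    a = np.asarray(desc_a, dtype=float).reshape(len(desc_a), -1)
    b = np.asarray(desc_b, dtype=float).reshape(len(desc_b), -1)
    # ssd[i, j] is the sum of squared differences between a[i] and b[j].
    ssd = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=2)
    nearest = ssd.argmin(axis=1)
    matches = []
    for i, j in enumerate(nearest):
        # Accept the pair only if the best SSD is below the threshold.
        if ssd[i, j] < max_ssd:
            matches.append((i, int(j)))
    return matches
```

Each returned pair `(i, j)` means descriptor `i` of the source image was matched to descriptor `j` of the target image. Source points whose best candidate is still too far away are left unmatched.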

Once we have all the matching features, we have the corresponding point pairs. The rest is the same as project 6A: we calculate the homography matrix, warp the image, and finally stitch the two images.
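Since some automatic matches are wrong, the homography is usually estimated with RANSAC, as the title of Part 5 suggests. The sketch below shows one way to do this, under stated assumptions: points are `(x, y)` rows, the homography is fit with an unnormalized direct linear transform, and the iteration count `iters`, the pixel tolerance `tol`, and all function names are illustrative choices rather than the values used for the results here.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography H such that dst ~ H @ src for (x, y) point rows."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]

def apply_homography(h, pts):
    """Map (x, y) points through H and divide by the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, iters=500, tol=2.0, seed=0):
    """Return (H, inlier_mask) from the largest consensus set found."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    with np.errstate(divide="ignore", invalid="ignore"):
        for _ in range(iters):
            # Fit an exact homography to 4 random correspondences.
            sample = rng.choice(len(src), size=4, replace=False)
            h = fit_homography(src[sample], dst[sample])
            # Count the matches that this homography explains within tol pixels.
            err = np.linalg.norm(apply_homography(h, src) - dst, axis=1)
            inliers = err < tol
            if inliers.sum() > best.sum():
                best = inliers
    if best.sum() < 4:
        raise ValueError("not enough inliers to fit a homography")
    # Refit on all inliers of the best sample.
    return fit_homography(src[best], dst[best]), best
```

The returned matrix can then be used for warping and stitching exactly as with manually chosen correspondences.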

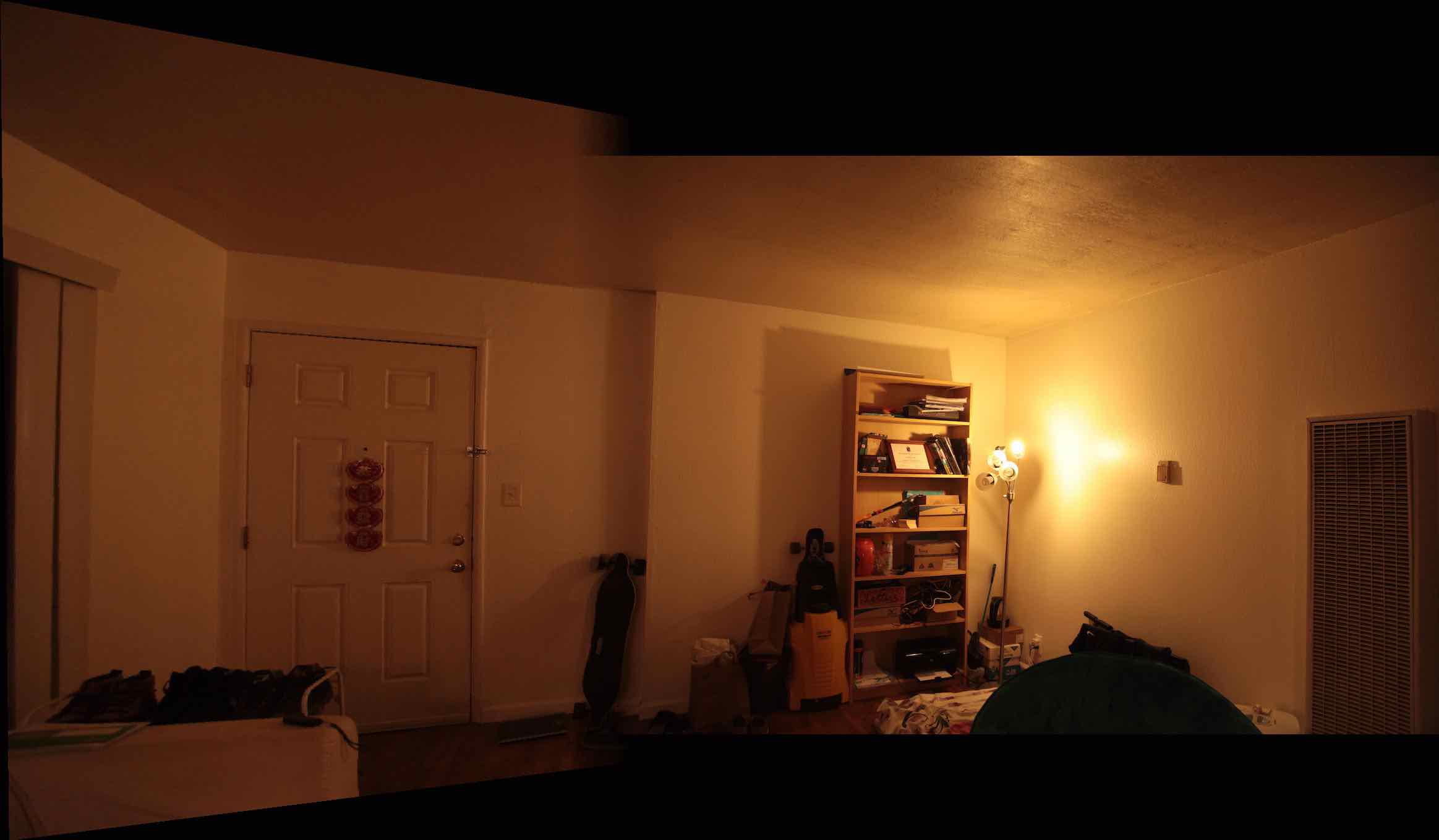

Auto Stitching

Manual Stitching

Auto Stitching

Manual Stitching

Auto Stitching

Manual Stitching